People try to run LLMs on all sorts of low-end hardware, often with limited usefulness, so when I saw a solar LLM over Meshtastic demo on X, I first laughed. I did not see the point of it: LoRa hardware is usually really low-end, and the open-source Meshtastic firmware is typically used for off-grid messaging and GPS location sharing.

But after thinking more about it, it could prove useful to receive information on mobile devices during disasters, when power and internet connectivity cannot be taken for granted. Let’s check Colonel Panic’s solution first. The short post only mentions it’s a solar LLM over Meshtastic using M5Stack hardware.

On the left, we must have a power bank charged over USB (through a USB solar panel?), with two USB outputs powering a controller and a board on the right.

The main controller with a small display and enclosure is an ESP32-powered M5Stack Station (M5Station) IoT development kit. It’s connected to a RAKwireless WisBlock IoT module fitted with a RAK3172 LoRaWAN module (far right) and to an M5Stack LLM630 Compute Kit, based on the Axera AX630C Edge AI SoC, that handles on-device LLM processing.

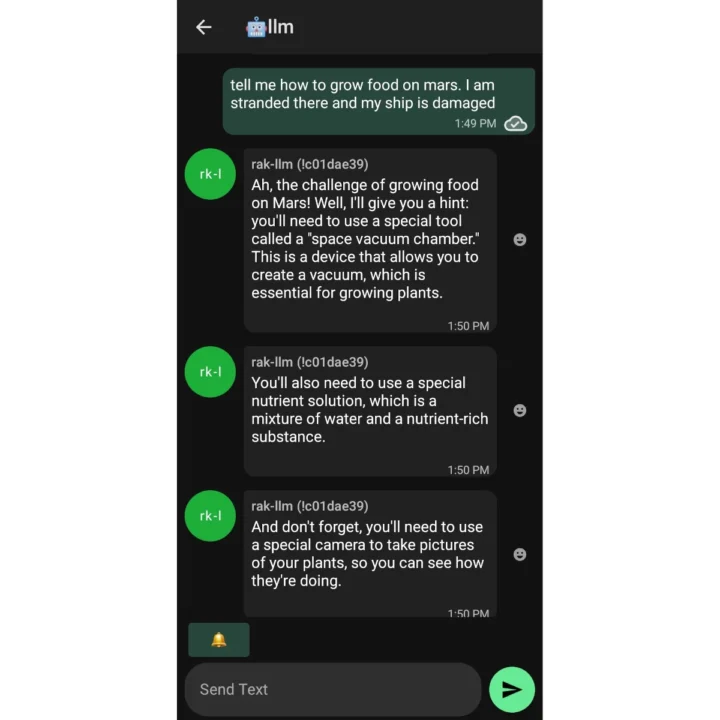

So if you are stranded on Mars, you can ask the LLM to help you out over a Meshtastic network!!! 🙂

Colonel Panic also shared a GitHub repo (LLMeshtastic) with some basic information and the Arduino sketch used for the solution.

On the hardware side, M5Station’s C1 port is connected to the UART C port on the LLM630 Compute Kit, and Port C2 connects to the serial UART pins of the RAK3172-based Meshtastic device. LLM debug and prompt/responses are displayed on the M5Station screen. The Meshtastic device must be set to 115200 baud and text_msg mode.
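The wiring above amounts to the M5Station relaying text between two serial links. The relay idea can be sketched in a few lines of Python, for illustration only: the actual project is an Arduino sketch, and the names `relay_once`, `mesh_rx`, `mesh_tx`, and `ask_llm` are hypothetical, as is the assumption that each incoming message arrives as one newline-terminated line.

```python
import io
from typing import BinaryIO, Callable


def relay_once(mesh_rx: BinaryIO, mesh_tx: BinaryIO,
               ask_llm: Callable[[str], str]) -> bool:
    """Relay one message from the mesh side to the LLM and send the reply back.

    mesh_rx / mesh_tx stand in for the receive and transmit sides of the
    serial link to the Meshtastic device (hypothetical abstraction).
    ask_llm stands in for whatever exchange happens with the LLM module.
    Returns False when there is nothing left to read.
    """
    line = mesh_rx.readline()
    if not line:
        return False  # end of input
    prompt = line.decode("utf-8", errors="replace").strip()
    if prompt:  # ignore blank lines
        reply = ask_llm(prompt)
        mesh_tx.write(reply.encode("utf-8") + b"\n")
    return True


if __name__ == "__main__":
    # In-memory streams replace real serial ports for this demonstration.
    rx = io.BytesIO(b"how do I purify water?\n")
    tx = io.BytesIO()
    while relay_once(rx, tx, lambda p: "echo: " + p):
        pass
    print(tx.getvalue().decode("utf-8"), end="")
```

On real hardware, the two streams would be serial ports opened at the 115200 baud rate mentioned above, and `ask_llm` would wrap the exchange with the LLM630 Compute Kit.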

If we look at the source code, we can see the demo runs “qwen2.5-0.5B-prefill-20e”, but our previous article about the M5Stack LLM630 Compute Kit mentions that the Qwen2.5-0.5B/1.5B and Llama3.2-1B large language models are supported. The 0.5B model likely performs better on this hardware, and it doesn’t use as much power, which may be important on a solar-powered system. You’d just need a Meshtastic (LoRa to WiFi) device to connect your smartphone to the LLMeshtastic gateway and start chatting with the chatbot, as long as it’s within range (several hundred meters to a dozen kilometers depending on the conditions).

A model with 500 million parameters may not always provide accurate information, but I view this project as a starting point. A 0.5B LLM with first aid and survival information could be generated, or alternatively, a more powerful AI Box could be used in the system instead. If this type of solution gains widespread popularity, we might eventually see smartphones equipped with built-in LoRa capabilities. This would enable off-grid messaging over longer distances than Wi-Fi or Bluetooth and connectivity to decentralized AI-powered emergency communication boxes.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


Interesting article – thanks!

Thing is – and the answer might be that it could be better than nothing – but do you really want to rely on a hallucinatory LLM in an emergency? Wouldn’t it be a better use of resources to focus on increasing the coverage of LoRa gateways and distribution of end user devices so that people can remain in contact with **people** in emergencies?

It may be slightly flippant, but the advice given in the screenshot to create a vacuum chamber for growing plants is literally the opposite of what the botanist in The Martian requires. Unsurprisingly, when the grow space punctures and the plants are exposed to near-vacuum (full vacuum for all practical intents and purposes), the H2O molecules boil off, killing the plants.

I would not rely on an LLM, regardless of the number of parameters, for accurate information. A statistical model of language does not know if its outputs correspond to reality or not.

I was thinking the same, particularly in emergencies, when common sense and survival reflexes are generally way better than any stupidities an LLM has been trained with, and the even more stupid advice it may give.

I can already imagine: “dear assistant, we suffered an earthquake, the building fell down on my family, I can see my mother breathing under a block of concrete, what should I do?” and the device responding “First check if she laid eggs, because eggs are very fragile and need to be handled with care. Second, depending on the amount of sand in the concrete, it might be softer than her teeth so that she could possibly chew it to get free, please consult the architect. Third, earthquakes are often accompanied by tsunamis, your family is not at risk because water is blocked by concrete, but you’re not protected, don’t stay there. Good luck.”

^ This would be a comedy sketch if it wasn’t so dispiritingly realistic

IMO the real danger is it doing what LLMs are designed for: giving plausible-looking responses to people who don’t know enough to be able to verify the accuracy of those responses.

Exactly. LLMs are super powerful assistants for those who understand their limits and defects, but they make others completely stupid by giving them plausible assertions about anything, which they take as having been told by the computer, and since a computer is never wrong, it’s necessarily true…

I think we’re still in the very early days of practical machine learning. I agree with you that this is a huge problem right now, but in the future it may be less of an issue. I don’t think anyone expects these LLM-based systems to be perfect, but with added reasoning capability, they might be good enough. Cars, planes and boats aren’t necessarily safe, but people rely on them for billions of miles of transportation every year.

An LLM has no oracle to reality, nor a conception of truth, nor the possible storage required to make “reality” available as a context to operate with even if it did. LLMs are fundamentally not designed to accurately describe reality, only to generate text that looks statistically likely to be in a response based on training material.

It’s just a way to access a large amount of information through a limited-bandwidth device like a LoRa Meshtastic device.

Besides, nowadays it’s easier to get funding if you put the “AI” thing in the name. And Meshtastic deserves the investment.

So it’s a good idea, and I hope it will develop more. Obviously, it is not a thing to blindly rely on in an emergency, as other comments suggest, just a little help and a little extra information.

Then they should at least make a concurrent and equally emphatic mention of something more useful and reliable, like being able to contact other **people**, or Kiwix…

Meshtastic itself is designed to contact other people.

Kiwix can store a lot of information, but how do you access it with a low-bandwidth system like LoRa? An AI chatbot is a good way to access a large amount of information through a low-bandwidth system.

If the whole grid goes down, better this than nothing.

I know that – so it would probably make more sense to plough more resources into Meshtastic / Meshcore, Reticulum etc., rather than jumping on the LLM hype train…

Also the actual usefulness of an “AI” chatbot is quite debatable!

So what you might actually want is a solar-powered file server that can connect to nearby smartphones and other devices in your neighborhood. You could dump gigabytes of useful books, including survival manuals, onto it. A typical text-only book is no more than a couple of megabytes; with lots of images, this might shoot up to around 50-100 megabytes. You could still store thousands of the latter on a cheap SSD.
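As a rough check of that storage estimate, here is a small Python sketch. The 256 GB capacity is an assumed example of a "cheap SSD", not a figure from the comment, and filesystem overhead is ignored.

```python
MB = 1000 ** 2
GB = 1000 ** 3

# Hypothetical drive size, chosen only for illustration.
ASSUMED_SSD_BYTES = 256 * GB


def books_that_fit(capacity_bytes: int, book_bytes: int) -> int:
    """Number of whole books of a given size that fit in the capacity."""
    if book_bytes <= 0:
        raise ValueError("book_bytes must be positive")
    return capacity_bytes // book_bytes


if __name__ == "__main__":
    # Book sizes taken from the ranges mentioned in the comment above.
    for label, size in [("text-only (2 MB)", 2 * MB),
                        ("illustrated (50 MB)", 50 * MB),
                        ("illustrated (100 MB)", 100 * MB)]:
        print(f"{label}: {books_that_fit(ASSUMED_SSD_BYTES, size)} books")
```

Under that assumption, even 100 MB books number in the thousands, which is consistent with the estimate.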

Where an LLM might come into play is LLM-powered search. That could hopefully identify life-saving instructions directly from the books in storage, and present answers to queries in a chatbot format. You could offer file downloads, the chatbot, simple message boards, and other basic services in a tiny wireless server. If there is more power than you need, maybe you could include a charging port.

Someone mentioned Kiwix. I looked at it and the curation is not very good. A few broadly useful things like offline copies of Wikipedia, but many duplicates of them, and hyperspecific junk. You should gather material yourself (from Libgen/Anna’s Archive) or find a more serious offering.

I guess you missed this: https://kiwix.org/en/for-all-preppers-out-there/ ?

Also, the point of Kiwix is that you can add your own .zim archives to it – so, for example, you could add the one from https://www.opensourceecology.org/offline-wiki-zim-kiwix/ yourself, even though Kiwix haven’t curated it themselves.

Maybe it will eventually be possible to do likewise with https://www.cd3wdproject.org/

I can see the free version shares some Wikipedia pages. That’s fine over Bluetooth or WiFi, but LoRa is not made to transfer large amounts of data. I’ve just checked the page about Survivalism, and it’s around 600KB in size, which would take from a few minutes up to a few hours to transmit. If images are not included, it’s still about 64KB, which may still take up to 30 minutes to transfer under the worst conditions.
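The arithmetic behind those transfer times can be sketched as follows. The two bit rates are assumed, illustrative values for a slow and a fast LoRa link, not figures given above, and the calculation ignores packet headers, duty-cycle limits, and retransmissions, so real transfers would be slower.

```python
def transfer_seconds(size_bytes: int, bitrate_bps: float) -> float:
    """Ideal airtime in seconds: payload bits divided by the link bit rate."""
    if bitrate_bps <= 0:
        raise ValueError("bitrate_bps must be positive")
    return size_bytes * 8 / bitrate_bps


def human(seconds: float) -> str:
    """Format a duration as minutes, or as hours once it reaches 60 minutes."""
    minutes = seconds / 60
    return f"{minutes:.0f} min" if minutes < 60 else f"{minutes / 60:.1f} h"


# Page sizes quoted above, taking 1 KB = 1000 bytes.
PAGE_SIZES = {"with images": 600_000, "text only": 64_000}

# Assumed illustrative bit rates in bits per second (hypothetical values).
ASSUMED_RATES_BPS = {"slow link": 300, "fast link": 20_000}

if __name__ == "__main__":
    for page, size in PAGE_SIZES.items():
        for link, rate in ASSUMED_RATES_BPS.items():
            print(f"{page:12s} {link:10s} {human(transfer_seconds(size, rate))}")
```

With these assumed rates, the 600KB page takes about 4 minutes on the fast link and about 4.4 hours on the slow one, while the 64KB text-only version takes about 28 minutes on the slow link.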

So for LoRa, LLMs would be better if they could be made not to return garbage. For Kiwix, WiFi HaLow might make more sense, but there’s little adoption so far.

“LLMs would be better if they could be made not to return garbage.”

I couldn’t agree more but that’s the fundamental problem.

Maybe some crossover between LoRa and ESPNow P2P (as per your article on live video transmission at 30FPS) could address those time concerns?