Firefly EC-R3576PC FD is described as an “Embedded Large-Model Computer” powered by a Rockchip RK3576 octa-core Cortex-A72/A53 processor with a 6 TOPS NPU and supporting large language models (LLMs) such as Gemma-2B, LlaMa2-7B, ChatGLM3-6B, or Qwen1.5-1.8B.

It looks to be based on the ROC-RK3576-PC SBC we covered a few weeks ago, which was also designed for LLMs. But the EC-R3576PC FD is a turnkey solution that should work out of the box and deliver decent performance now that the RKLLM toolkit has been released with NPU acceleration. However, there are some caveats to running LLMs on the RK3576 instead of the RK3588, which we’ll discuss below.

Firefly EC-R3576PC FD specifications:

- SoC – Rockchip RK3576

- CPU

- 4x Cortex-A72 cores at 2.2GHz, 4x Cortex-A53 cores at 1.8GHz

- Arm Cortex-M0 MCU at 400MHz

- GPU – Arm Mali-G52 MC3 GPU clocked at 1GHz with support for OpenGL ES 1.1, 2.0, and 3.2, OpenCL up to 2.0, and Vulkan 1.1; embedded 2D acceleration

- NPU – 6 TOPS (INT8) AI accelerator with support for INT4/INT8/INT16/BF16/TF32 mixed operations.

- VPU

- Video Decoder: H.264, H.265, VP9, AV1, and AVS2 up to 8K at 30fps or 4K at 120fps.

- Video Encoder: H.264 and H.265 up to 4K at 60fps, (M)JPEG encoder/decoder up to 4K at 60fps.

- System Memory – 4GB or 8GB 32-bit LPDDR4/LPDDR4x

- Storage

- 16GB to 256GB eMMC flash

- Optional UFS 2.0 storage

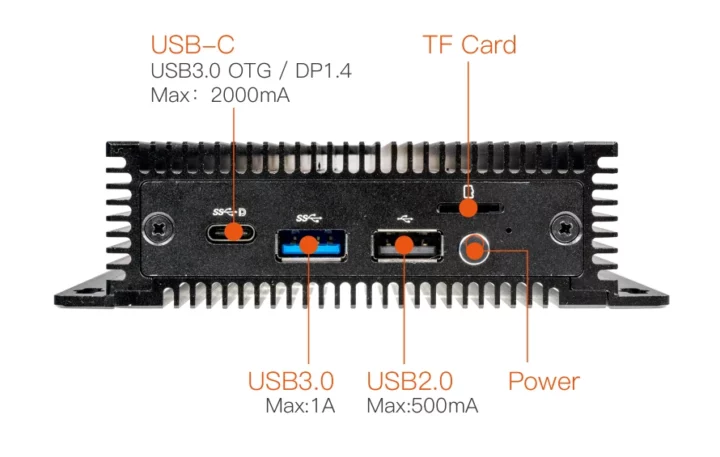

- MicroSD card slot

- M.2 PCIe NVMe/SATA socket for M.2 2242 SSD

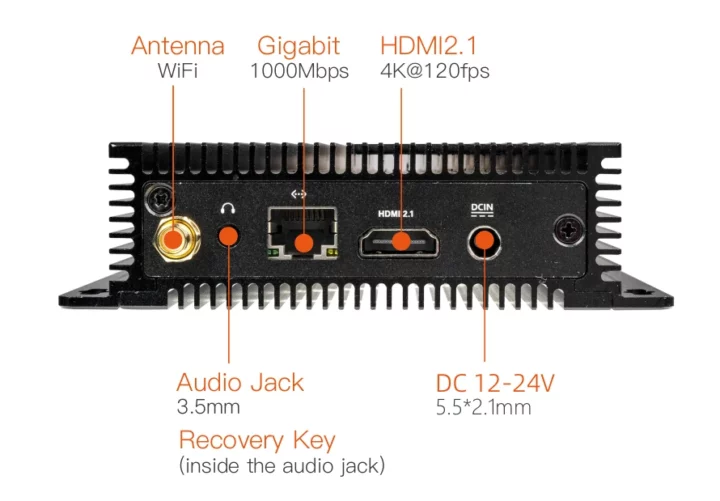

- Video Output

- HDMI 2.0 port up to 4Kp120

- DisplayPort 1.4 via USB-C up to 4Kp120

- Audio

- 3.5mm audio jack (headphone and microphone support)

- Digital audio output via HDMI and USB-C/DisplayPort

- Camera I/F – 1x MIPI CSI D-PHY connector (30-pin, 0.5mm pitch; 1x 4 lanes or 2x 2 lanes)

- Networking

- Low-profile Gigabit Ethernet RJ45 port with Motorcomm YT8531

- Dual-band WiFi 5 and Bluetooth 5.0 via AMPAK AP6256; SMA antenna connector

- USB – 1x USB 3.0 port, 1x USB 2.0 port, 1x USB 3.0 Type-C port with DisplayPort Alt mode

- Expansion

- 40-pin GPIO header

- M.2 PCIe socket

- Misc – External watchdog

- Power Supply – 12V DC via 5.5/2.1mm DC jack; support for 12V to 24V DC wide voltage input

- Power Consumption – Typ.: 1.2W (12V/100mA); max: 6W (12V/500mA); min: 0.096W (12V/8mA)

- Dimensions – 116 x 105.2 x 31.5mm

- Weight – 430 grams

- Temperature Range – -20°C to 60°C

- Humidity – 10% to 90% RH (non-condensing)

The Firefly EC-R3576PC FD embedded large-model computer supports Android 14, Ubuntu, and Linux+Qt built with Buildroot. Since the system is mostly aimed at Edge AI applications, the company highlights support for large-parameter models based on the Transformer architecture, such as Gemma-2B, LlaMa2-7B, ChatGLM3-6B, Qwen1.5-1.8B, and others. But it also supports traditional neural network architectures such as CNN, RNN, and LSTM, and is compatible with deep learning frameworks that include TensorFlow, PyTorch, MXNet, PaddlePaddle, ONNX, and Darknet. The wiki for the embedded computer is currently empty, but the one for the ROC-RK3576-PC SBC comes with Android 14 and Ubuntu 22.04 images and various tools.

When the RKLLM library was tested on a Rockchip RK3588(S)-based Radxa SBC, the following performance was achieved:

- TinyLlama 1.1B – 15.03 tokens/s

- Qwen 1.8B – 14.18 tokens/s

- Phi3 3.8B – 6.46 tokens/s

- ChatGLM3 – 3.67 tokens/s

One would think the RK3588 and RK3576 would deliver similar performance since both have a 6 TOPS NPU. But it was pointed out to me that while the RK3588 supports a 64-bit memory interface, the RK3576 is limited to 32-bit memory, and since LLM inference is highly dependent on memory bandwidth, performance might actually be halved on the RK3576. Smaller LLMs with 1 to 2B parameters should still be usable, but larger models will likely be fairly slow.
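To put rough numbers on that caveat, here is a minimal Python sketch that scales the RK3588(S) results listed above by the ratio of memory bus widths. It assumes token generation is purely memory-bandwidth bound and that both chips run their memory at the same transfer rate, neither of which has been verified on real hardware. The function names `bus_scaling` and `estimate_tokens_per_s` are made up for this illustration.

```python
# Measured RKLLM rates on the RK3588(S) board, copied from the list above (tokens/s).
RK3588_TOKENS_PER_S = {
    "TinyLlama 1.1B": 15.03,
    "Qwen 1.8B": 14.18,
    "Phi3 3.8B": 6.46,
    "ChatGLM3": 3.67,
}


def bus_scaling(target_bus_bits: int, reference_bus_bits: int) -> float:
    """Bandwidth ratio of two memory interfaces.

    Assumption: both run at the same transfer rate per pin, so bandwidth
    scales linearly with bus width.
    """
    return target_bus_bits / reference_bus_bits


def estimate_tokens_per_s(measured: dict, scale: float) -> dict:
    """Scale measured rates, assuming decoding is purely bandwidth bound."""
    return {name: rate * scale for name, rate in measured.items()}


# RK3576 (32-bit memory) relative to RK3588 (64-bit memory)
scale = bus_scaling(32, 64)
rk3576_estimate = estimate_tokens_per_s(RK3588_TOKENS_PER_S, scale)

for name, rate in rk3576_estimate.items():
    print(f"{name}: ~{rate:.1f} tokens/s (rough estimate)")
```

These are back-of-the-envelope estimates rather than benchmarks: differences in memory clock, NPU implementation, or software support could move the actual results in either direction.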

One potential use case could be a robot, smart speaker, or interactive kiosk with voice recognition and text-to-speech leveraging an LLM to interact with the user. The Firefly EC-R3576PC FD will not be quite as powerful as other embedded AI boxes such as the Radxa Fogwise Airbox, but it’s also a fair bit cheaper, going for $185 in the 4GB/32GB configuration and $215 with 8GB RAM and 64GB flash. Further details may be found on the product page. The RK3588 version is available on AliExpress, but at $300 and up, it’s not competitive with the Radxa Fogwise Airbox for LLM processing.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

BTW I’ve run a small LLM comparison between my ROCK 5 ITX and an Ampere Altra with 80 cores at 2.6 GHz, both in CPU-only mode, using the 4 A76 cores on the ROCK 5 and 4 N1 cores on the Altra (A76 and N1 cores are the same). The RK3588 has four 16-bit DDR5 channels (hence 64-bit total) while the Altra has six 64-bit channels.

Asking Phi-3.1-mini-Q8 (3.8B) “Tell me about the differences between DDR4 and DDR5 memory.” delivered a response at 4.35 tokens/s on the ROCK 5 vs 8.15 on the Ampere, indicating that in order to fully use its 4 cores with such a model, the RK3588 would need a second 64-bit channel. With 12 cores, the Ampere reaches 20 tokens/s. With 24 cores, the performance caps at 26 tokens/s, or just 6 times the RK3588’s performance.

On the RK3588, using the Q5_K_M model yields 5.37 tokens/s. And the IQ4_XS reaches 8.64 tokens/s, or almost exactly twice the original performance for half the size. The exact same speed is reached on the ROCK 5B (DDR4-4224). The Ampere with 8 cores shows 10.44 tokens/s on this model, or about the same clock-for-clock (2.6 GHz vs ~2.2-2.3), indicating that the RK3588 is almost CPU-bound with this model on 4 cores.

The numbers correspond to 17.5 GB/s of DRAM read speed, which is also exactly what I’m measuring on both ROCK 5 boards.

Thus I think that the board above, with its 4 smaller cores and 32-bit RAM would reach at best around 4 tok/s with a heavily compressed small model like phi-3.8B-IQ4_XS.
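The arithmetic behind these figures can be reproduced with a short Python sketch. It assumes every weight is read from DRAM once per generated token, a parameter count of about 3.8B, and approximate densities of 8.5 bits per weight for Q8_0 and 4.25 bits per weight for IQ4_XS. Those densities and the helper names are assumptions for illustration, not figures taken from the comment.

```python
def model_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def implied_bandwidth_gbs(tokens_per_s: float, size_gb: float) -> float:
    """DRAM read rate implied by a token rate, assuming each generated
    token requires reading all weights once."""
    return tokens_per_s * size_gb


def max_tokens_per_s(bandwidth_gbs: float, size_gb: float) -> float:
    """Upper bound on the token rate for a given DRAM read bandwidth."""
    return bandwidth_gbs / size_gb


# Assumed densities: Q8_0 ~8.5 bits/weight, IQ4_XS ~4.25 bits/weight
q8_size = model_size_gb(3.8, 8.5)
iq4_size = model_size_gb(3.8, 4.25)

# 4.35 tokens/s was measured with the Q8 model on the ROCK 5
rk3588_bandwidth = implied_bandwidth_gbs(4.35, q8_size)
print(f"Implied DRAM read speed: {rk3588_bandwidth:.1f} GB/s")

# Assumption: a 32-bit interface delivers half of the 17.5 GB/s measured above
half_bandwidth = 17.5 / 2
print(f"IQ4_XS upper bound at {half_bandwidth} GB/s: "
      f"{max_tokens_per_s(half_bandwidth, iq4_size):.1f} tokens/s")
```

Under these assumptions, the implied read speed lands close to the 17.5 GB/s quoted above, and halving the bandwidth gives an upper bound slightly above 4 tokens/s for the IQ4_XS model, before accounting for the slower CPU cores.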

Last time I tested TinyLlama it was all but useless, sure it was fast but the “answers” it provided were generally full of mistakes.

I find the usefulness of these small/tiny models dubious at best and harmful at worst.

Are larger models much more reliable? I honestly don’t know, as I stopped using ChatGPT and the like a while ago after seeing a lot of garbage being generated.

The larger the model, the higher the chance the provided output is accurate, but until we have a way to verify the output without human interaction, LLMs can only be really useful in niche ways.

They excel at input/output tasks, such as summarizing and structuring data. They are useful for generating code, but mostly harmful if you don’t understand the code they generate.

The largest issue I see currently is an Internet littered with AI hallucinations that show up in searches, with people researching a topic not understanding or knowing that they are reading inaccurate AI output. They then use the wrong data, which further propagates the false information. This sort of thing is already happening, and I don’t see how it won’t get exponentially worse.