Edge Impulse, a machine learning platform for edge devices, has released a new suite of tools built on NVIDIA TAO Toolkit and Omniverse that brings new AI models to entry-level hardware based on Arm Cortex-A processors, Arm Cortex-M microcontrollers, or Arm Ethos-U NPUs.

By combining Edge Impulse and NVIDIA TAO Toolkit, engineers can create computer vision models that can be deployed to edge-optimized hardware such as NXP i.MX RT1170, Alif E3, STMicro STM32H747AI, and Renesas CK-RA8D1. The Edge Impulse platform lets users combine their own custom data with GPU-trained NVIDIA TAO models such as YOLO and RetinaNet, then optimize them for deployment on edge devices with or without AI accelerators.

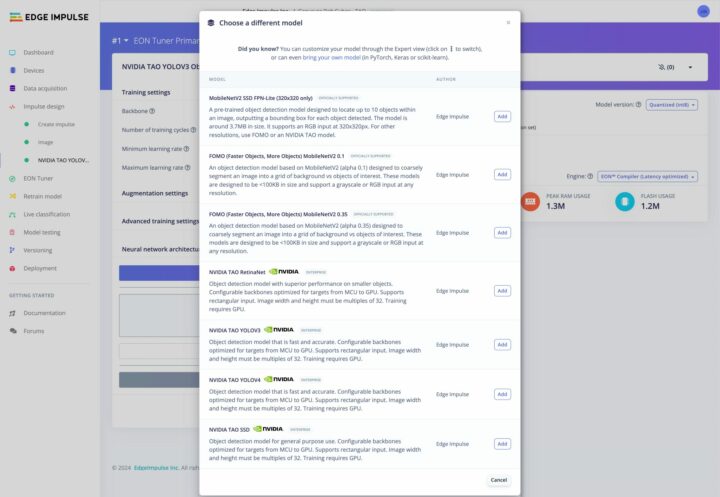

NVIDIA and Edge Impulse claim this new solution enables the deployment of large-scale NVIDIA models to Arm-based devices. Right now, object detection with RetinaNet, YOLOv3, YOLOv4, and SSD is available, along with image classification. You can check out the documentation to try it out on your own platform. The downside is that the solution requires GPU hours for training, which are only available to enterprise customers (paid plans).

The second part of the news is Edge Impulse integration with NVIDIA Omniverse for applications using synthetic data and testing environments for the edge. Synthetic data generation is useful when obtaining real-world data is costly, time-consuming, raises privacy concerns, or simply cannot account for all types of scenarios. Edge Impulse relies on NVIDIA Omniverse Replicator to generate synthetic data that can then be fed into AI models for edge devices. Example applications include inspecting manufacturing production lines to detect defects or equipment malfunctions, and detecting surgical inventory objects to prevent postoperative complications.

The benefits of synthetic data generation include:

- Reduce physical prototyping and testing costs via virtual tools

- Speed up development time and experimentation, leading to faster time-to-market

- Simulate sensor and model behavior, test MCU compatibility, and more

- Use synthetic data to fortify model reliability and create difficult-to-replicate scenarios

As I understand it, this doesn’t require a paid plan, as the documentation states this is done on the user’s own Windows 10/11 or Linux machine with at least a quad-core CPU (eight cores recommended), 16GB RAM (32GB or more recommended), and an NVIDIA GeForce RTX 2080. Getting a Quadro RTX 5000 is better, and a system with multiple Quadro RTX 8000 graphics cards is best…
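To make the CPU and RAM figures above easier to reason about, here is a minimal Python sketch that sorts a machine into "below minimum", "minimum", or "recommended". The function name `classify_host` and the tier labels are my own for illustration and do not come from the Edge Impulse or NVIDIA documentation, and the GPU requirement is deliberately left out since checking it depends on vendor tooling.

```python
import os

# Thresholds taken from the requirements quoted in the article.
MIN_CORES, RECOMMENDED_CORES = 4, 8
MIN_RAM_GB, RECOMMENDED_RAM_GB = 16, 32


def classify_host(cpu_cores: int, ram_gb: float) -> str:
    """Classify a machine by CPU cores and RAM only (hypothetical helper)."""
    if cpu_cores < MIN_CORES or ram_gb < MIN_RAM_GB:
        return "below minimum"
    if cpu_cores >= RECOMMENDED_CORES and ram_gb >= RECOMMENDED_RAM_GB:
        return "recommended"
    return "minimum"


if __name__ == "__main__":
    # os.cpu_count() reports logical CPUs, which may overstate physical cores.
    cores = os.cpu_count() or 0
    # RAM is passed in by hand here; the standard library has no portable way to read it.
    print(classify_host(cores, ram_gb=16))
```

A machine only lands in the "recommended" tier when both the core count and the RAM meet the recommended figures, so an eight-core system with 16GB RAM is still reported as "minimum".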

Further information may be found in the announcement blog post.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
