Some of the newer AMD Ryzen processors come with an AI Engine (also called NPU or IPU) that works in Windows 11, including the Ryzen 9 7940HS, Ryzen 7 7840HS, Ryzen 5 7640HS, Ryzen 7 7840U, and Ryzen 5 7640U. I’ve just completed the review of the GEEKOM A7 mini PC powered by an AMD Ryzen 9 7940HS CPU with Windows 11, but I need to wait before publishing it, so I decided to try the AI engine on the Ryzen 9 7940HS processor in the meantime.

The AI Engine relies on the AMD XDNA architecture, and AMD provides instructions to get started with examples, demos, and developer resources. So I decided to try some examples, but for reasons I’ll explain below, I eventually had to settle for some demos.

Ryzen AI software installation (for the demos)

The examples hosted on GitHub require us to install dependencies for the Ryzen AI software stack.

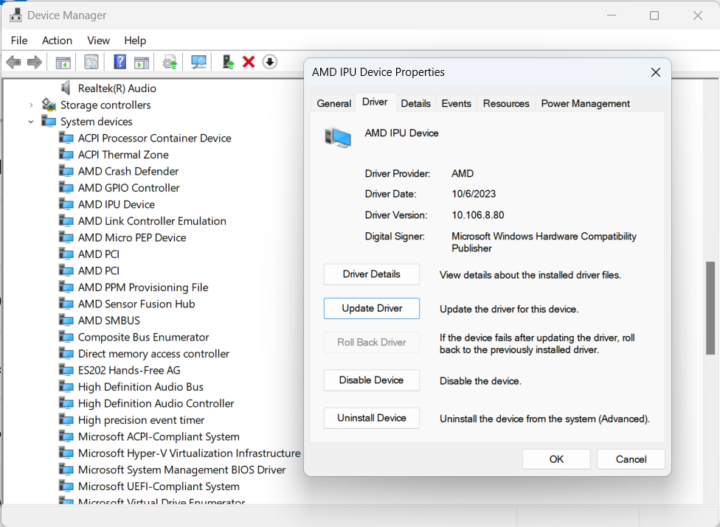

The first step is to install the IPU driver, which can be done by downloading “ipu_stack_rel_silicon_prod.zip” after registering an account on the AMD website.

Note that this requires signing a “Beta Software End User License Agreement”, and I initially assumed that was not needed here because the AMD IPU Device driver is already installed in Windows 11 Pro running on the GEEKOM A7 mini PC…

But I was wrong. I had to install the new one (and uninstall the old one) for this part to work.

We are then asked to install a few programs:

- Visual Studio 2019 (I’d recommend downloading it from a third-party website like TechSpot, because Microsoft requires you to log in to download previous versions of VS).

- CMake version >= 3.26

- Python version >= 3.9

- Anaconda3 (or Miniconda3)

I made sure to select the “Add to PATH” option when installing CMake, Python 3.12, and Anaconda3. Note that Visual Studio 2019 may also be used to install CMake and other tools.
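Since a missing tool or a wrong PATH entry is the most common cause of installer failures, a quick pre-flight check can save a round trip. The following Python sketch is not AMD's install.bat logic: it assumes the tools are exposed on the PATH as `cmake`, `python`, and `conda`, and only checks the minimum versions listed above (CMake 3.26, Python 3.9).

```python
# Hypothetical pre-flight check (not AMD's install.bat logic): confirm the tools
# are on PATH and meet the minimum versions listed above.
import re
import shutil
import subprocess

# Minimum versions from the prerequisite list (CMake >= 3.26, Python >= 3.9).
MINIMUMS = {"cmake": (3, 26), "python": (3, 9)}


def parse_version(text):
    """Return the first dotted version number in text as a tuple of ints, or None."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups() if part is not None)


def meets_minimum(version, minimum):
    """Tuple comparison: (3, 27, 1) >= (3, 26) is True; None never passes."""
    return version is not None and version >= minimum


def check_tool(name):
    """Describe whether a tool is on PATH and, if a minimum is known, new enough."""
    path = shutil.which(name)
    if path is None:
        return f"{name}: NOT FOUND on PATH"
    if name not in MINIMUMS:
        return f"{name}: OK ({path})"
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    version = parse_version(result.stdout + result.stderr)
    status = "OK" if meets_minimum(version, MINIMUMS[name]) else "TOO OLD"
    return f"{name}: {status} {version}"


if __name__ == "__main__":
    for tool in ("cmake", "python", "conda"):
        print(check_tool(tool))
```

Version strings are compared as integer tuples rather than text, so "3.9" is correctly treated as older than "3.26".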

The next step is to download the Ryzen AI Software installation package (ryzen-ai-sw-1.0.1.zip), but this time around, I could not avoid signing the Beta Software End User License Agreement, so I did. The agreement is confidential (although anybody who registers for an AMD account can read it) and also prevents me from reporting any results without a written agreement from AMD. So I’ll only share public information for that part…

We’ll need to run the installation script and accept another EULA for RyzenAI:

```
PS C:\Users\jaufr\Downloads\ryzen-ai-sw-1.0.1\ryzen-ai-sw-1.0.1> .\install.bat -env cnxsoft-ryzenai
Windows 11: OK
Visual Studio 2019: OK
Python: OK
CONDA Available: OK
CMake: OK
IPU driver Available: OK
All deps are available. Proceeding to Conda env creation...
Do you accept EULA for RyzenAI? [y/n]: y
Proceeding further ...
Creating conda env: ryzenai-1.0-20240211-173028
...
Collecting package metadata (repodata.json): done
...
```

If there are any errors (look for the “CRITICAL” string) or the script does not complete, you’ll want to correct that by installing the relevant programs and making sure they are in the PATH.
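When the installer output is long, it can be easier to save it to a file and filter it. A minimal sketch, assuming the install.bat output was redirected to a text file (the default file name below is hypothetical). It also handles the UTF-16 encoding that Windows PowerShell 5.1 uses for `>` redirection, which would otherwise hide the marker from a plain text search.

```python
# Sketch: list the lines of a saved install log that contain "CRITICAL".
# Assumption: the install.bat output was redirected to a file; the default
# file name below is hypothetical.
import sys
from pathlib import Path


def decode_log(data):
    """Decode log bytes; Windows PowerShell 5.1 '>' redirection writes UTF-16 with a BOM."""
    if data.startswith((b"\xff\xfe", b"\xfe\xff")):
        return data.decode("utf-16")
    return data.decode("utf-8", errors="replace")


def critical_lines(log_text, marker="CRITICAL"):
    """Return (line_number, stripped_line) for each line containing the marker."""
    return [
        (number, line.strip())
        for number, line in enumerate(log_text.splitlines(), start=1)
        if marker in line
    ]


if __name__ == "__main__":
    log_path = Path(sys.argv[1] if len(sys.argv) > 1 else "install_log.txt")
    if log_path.is_file():
        for number, line in critical_lines(decode_log(log_path.read_bytes())):
            print(f"{log_path}:{number}: {line}")
    else:
        print(f"{log_path} not found")
```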

The next step is to activate the conda environment…

```
conda activate cnxsoft-ryzenai
```

… and run the test:

```
cd .\quicktest\
curl https://www.cs.toronto.edu/~kriz/cifar-10-sample/bird6.png -o image_0.png
python -m pip install -r requirements.txt
python quicktest.py --ep ipu
```
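The `--ep ipu` option asks the quick test to run through ONNX Runtime's Vitis AI execution provider (the “Vitis AI EP” in the log below). If that provider is not registered, ONNX Runtime only warns and carries on with the remaining providers, so a run can still end with “Test Passed”, as the warning quoted in the comments shows. A minimal sketch of failing loudly instead, assuming `onnxruntime` is importable in the active conda environment; the model and `vaip_config.json` names in the commented-out session call are placeholders, not files from the quicktest folder.

```python
# Sketch: refuse to continue when the Vitis AI execution provider is missing,
# rather than letting ONNX Runtime warn and fall back to the CPU.

PREFERRED = "VitisAIExecutionProvider"


def pick_providers(available, preferred=PREFERRED):
    """Return [preferred, CPU fallback] or raise if preferred is not available."""
    if preferred not in available:
        raise RuntimeError(f"{preferred} not available; found: {', '.join(available)}")
    # Keep the CPU provider last so ONNX Runtime can place operators the NPU provider does not take.
    return [preferred, "CPUExecutionProvider"]


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed in this environment")
    else:
        try:
            print("Using providers:", pick_providers(ort.get_available_providers()))
        except RuntimeError as err:
            print(err)
        # With a real model, the list would be passed on, for example (placeholder paths):
        # ort.InferenceSession("model.onnx", providers=[...],
        #                      provider_options=[{"config_file": "vaip_config.json"}, {}])
```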

Once successful, the output should look like this, according to the documentation:

```
I20231127 16:29:15.010130 13892 vitisai_compile_model.cpp:336] Vitis AI EP Load ONNX Model Success
I20231127 16:29:15.010130 13892 vitisai_compile_model.cpp:337] Graph Input Node Name/Shape (1)
I20231127 16:29:15.010130 13892 vitisai_compile_model.cpp:341] input : [-1x3x32x32]
I20231127 16:29:15.010130 13892 vitisai_compile_model.cpp:347] Graph Output Node Name/Shape (1)
I20231127 16:29:15.010130 13892 vitisai_compile_model.cpp:351] output : [-1x10]
I20231127 16:29:15.010130 13892 vitisai_compile_model.cpp:226] use cache key quickstart_modelcachekey
[Vitis AI EP] No. of Operators : CPU 2 IPU 400 99.50%
[Vitis AI EP] No. of Subgraphs : CPU 1 IPU 1 Actually running on IPU 1
....
```

It’s a bit longer than that, but you get the idea.
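The most telling line is the operator split: 400 of the 402 operators (400 / 402 ≈ 99.50%) were placed on the IPU, with only 2 left on the CPU. A small sketch for extracting that figure from a saved log, assuming the line keeps the format shown above:

```python
import re

# Matches the operator-count line of the Vitis AI EP log shown above.
OPERATORS = re.compile(r"No\. of Operators\s*:\s*CPU\s+(\d+)\s+IPU\s+(\d+)")


def ipu_share(log_text):
    """Return (cpu_ops, ipu_ops, percent_on_ipu), or None if the line is absent."""
    match = OPERATORS.search(log_text)
    if match is None:
        return None
    cpu_ops, ipu_ops = (int(value) for value in match.groups())
    return cpu_ops, ipu_ops, 100.0 * ipu_ops / (cpu_ops + ipu_ops)


line = "[Vitis AI EP] No. of Operators : CPU 2 IPU 400 99.50%"
cpu, ipu, pct = ipu_share(line)
print(f"{ipu} of {cpu + ipu} operators on the IPU ({pct:.2f}%)")
# prints: 400 of 402 operators on the IPU (99.50%)
```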

Yolov8 example with Ryzen AI software

Once the installation is complete, we can try some of the examples, and I decided to check out the Yolov8 example. I spent a few hours on it but eventually failed, and I did not want to spend more time on it since I couldn’t write about it due to the beta software agreement. However, AMD published a video about the similar Yolov8_e2e tutorial from the same GitHub repository about two months ago. Some of the versions have changed, but the procedure is similar and requires quite a few more steps than the quick test.

Ryzen AI demos

I then switched to the demos directory in the same GitHub repository, and I was not asked to sign any legal documents… I started with the Ryzen AI “cloud-to-client” demo that showcases searching and sorting images on AMD Ryzen AI-based PCs using Yolov5 and Retinaface AI models. I used this with the older IPU driver that shipped with Windows 11 Pro in the GEEKOM A7 mini PC.

The first step was to run the setup batch script:

```
PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\cloud-to-client> .\setup.bat
```

It ended with a “CRITICAL” error about a missing file, but I was still able to run one of the programs.

```
2024-02-11 18:16:24,015 - INFO - copying C:\Windows\System32\AMD\xrt_core.dll to C:\Users\jaufr\anaconda3\envs\ms-build-demo\lib\site-packages\onnxruntime\capi
2024-02-11 18:16:24,015 - INFO - copying C:\Windows\System32\AMD\xrt_coreutil.dll to C:\Users\jaufr\anaconda3\envs\ms-build-demo\lib\site-packages\onnxruntime\capi
2024-02-11 18:16:24,015 - INFO - copying C:\Windows\System32\AMD\xrt_phxcore.dll to C:\Users\jaufr\anaconda3\envs\ms-build-demo\lib\site-packages\onnxruntime\capi
2024-02-11 18:16:24,037 - CRITICAL - C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\cloud-to-client\voe-0.1.0-cp39-cp39-win_amd64\onnxruntime.dll does not exist.
PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\cloud-to-client>
```

You’ll find the log on pastebin.
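The setup step shown in this log copies runtime DLLs from C:\Windows\System32\AMD into the environment's onnxruntime\capi folder, and then stops on an onnxruntime.dll that is not where it expects. A hypothetical Python version of that step which checks every source file before copying anything, so all missing files are reported at once (the function names are illustrative, not part of AMD's scripts):

```python
import shutil
from pathlib import Path


def missing_sources(sources):
    """Return the source paths that do not exist as files."""
    return [path for path in map(Path, sources) if not path.is_file()]


def copy_all(sources, destination):
    """Copy every source into destination, or copy nothing and return the missing ones."""
    missing = missing_sources(sources)
    if missing:
        return missing
    destination = Path(destination)
    destination.mkdir(parents=True, exist_ok=True)
    for source in sources:
        shutil.copy2(source, destination)
    return []


if __name__ == "__main__":
    # File list taken from the setup.bat log; run from the cloud-to-client folder.
    amd_dir = Path(r"C:\Windows\System32\AMD")
    sources = [amd_dir / name for name in ("xrt_core.dll", "xrt_coreutil.dll", "xrt_phxcore.dll")]
    sources.append(Path("voe-0.1.0-cp39-cp39-win_amd64") / "onnxruntime.dll")
    for path in missing_sources(sources):
        print("missing:", path)
```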

I then ran the Qt demo program:

```
run_pyapp.bat
```

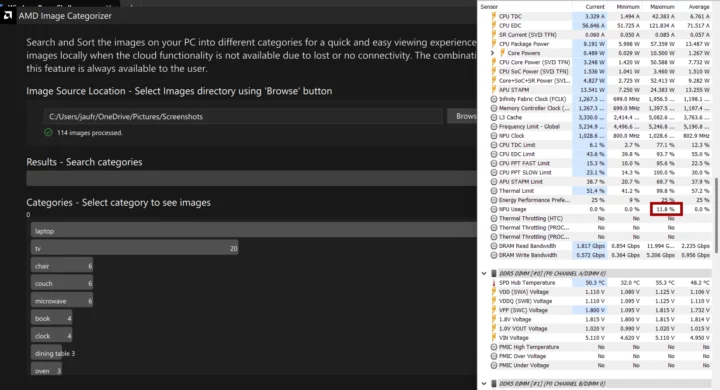

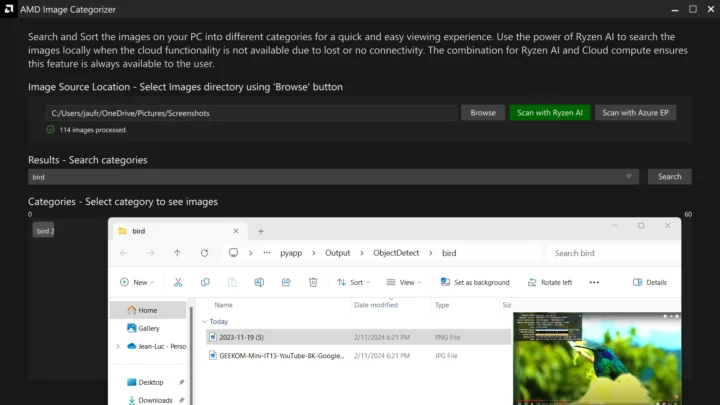

I selected a folder with screenshots (I reckon it may not be the best for this type of test but that is what I had on the test PC), and the AMD Image Categorizer scanned my images, and I could see some NPU usage in HWiNFO64. See full output while scanning for reference.

Each image is now searchable, and although many tags are not super relevant because of the source images used, it could find a bird in a screenshot I had taken from YouTube.

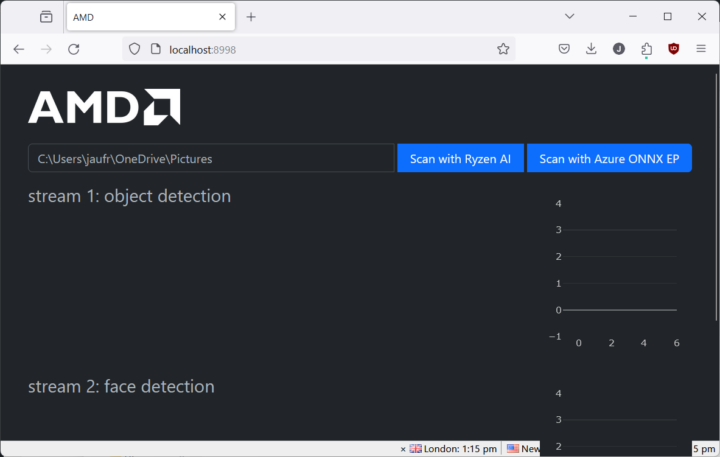

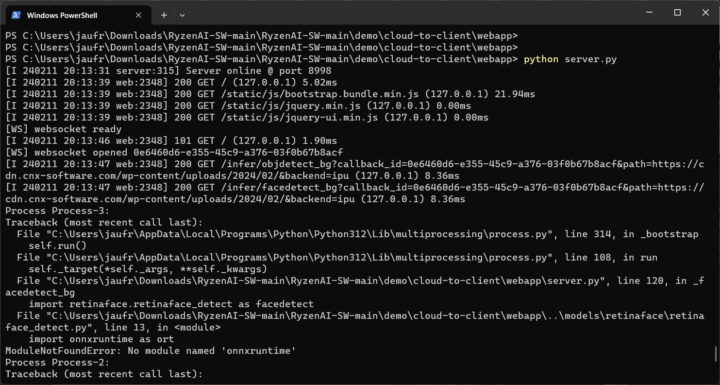

The cloud-to-client demo is also available as a web server, but it did not work for me, even after installing some extra Python modules.

I’d kind of expect demos to work out of the box without having to spend hours getting the right module or DLL version, although my recent experience with the Orbbec Femto Mega depth camera in Windows 11 seems to imply this is business as usual in Windows…

There’s also another demo called “multi-model-exec” with the following models:

- MobileNet_v2

- ResNet50

- Retinaface

- Segmentation

- Yolox

Sadly, all of them failed with the same error. I also had to change some of the commands from the instructions, or I would have had to stop even earlier…

I first had to create a conda environment:

```
PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec> conda env create --name cnxsoft --file=env.yaml
Collecting package metadata (repodata.json): done
Solving environment: done
```

Full output:

```
PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec> conda env create --name cnxsoft --file=env.yaml
Collecting package metadata (repodata.json): done
Solving environment: done

==> WARNING: A newer version of conda exists. <==
  current version: 23.7.4
  latest version: 24.1.0

Please update conda by running

    $ conda update -n base -c defaults conda

Or to minimize the number of packages updated during conda update use

     conda install conda=24.1.0

Downloading and Extracting Packages

Preparing transaction: done
Verifying transaction: done
Executing transaction: done
Installing pip dependencies: \ Ran pip subprocess with arguments:
['C:\\Users\\jaufr\\anaconda3\\envs\\cnxsoft\\python.exe', '-m', 'pip', 'install', '-U', '-r', 'C:\\Users\\jaufr\\Downloads\\RyzenAI-SW-main\\RyzenAI-SW-main\\demo\\multi-model-exec\\condaenv.q1_003sq.requirements.txt', '--exists-action=b']
Pip subprocess output:
Processing c:\users\jaufr\downloads\ryzenai-sw-main\ryzenai-sw-main\demo\multi-model-exec\voe-0.1.0-cp39-cp39-win_amd64.whl (from -r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 2))
Collecting onnxruntime (from -r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Obtaining dependency information for onnxruntime from https://files.pythonhosted.org/packages/6d/22/f84599edb744a06ba86920f51a2f9d5317db2dc496876eb32831f7923196/onnxruntime-1.17.0-cp39-cp39-win_amd64.whl.metadata
  Using cached onnxruntime-1.17.0-cp39-cp39-win_amd64.whl.metadata (4.3 kB)
Collecting coloredlogs (from onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Using cached coloredlogs-15.0.1-py2.py3-none-any.whl (46 kB)
Collecting flatbuffers (from onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Obtaining dependency information for flatbuffers from https://files.pythonhosted.org/packages/6f/12/d5c79ee252793ffe845d58a913197bfa02ae9a0b5c9bc3dc4b58d477b9e7/flatbuffers-23.5.26-py2.py3-none-any.whl.metadata
  Using cached flatbuffers-23.5.26-py2.py3-none-any.whl.metadata (850 bytes)
Collecting numpy>=1.21.6 (from onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Obtaining dependency information for numpy>=1.21.6 from https://files.pythonhosted.org/packages/b5/42/054082bd8220bbf6f297f982f0a8f5479fcbc55c8b511d928df07b965869/numpy-1.26.4-cp39-cp39-win_amd64.whl.metadata
  Using cached numpy-1.26.4-cp39-cp39-win_amd64.whl.metadata (61 kB)
Collecting packaging (from onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Obtaining dependency information for packaging from https://files.pythonhosted.org/packages/ec/1a/610693ac4ee14fcdf2d9bf3c493370e4f2ef7ae2e19217d7a237ff42367d/packaging-23.2-py3-none-any.whl.metadata
  Using cached packaging-23.2-py3-none-any.whl.metadata (3.2 kB)
Collecting protobuf (from onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Obtaining dependency information for protobuf from https://files.pythonhosted.org/packages/f3/7c/9e78d866916fb07e193a53352453fdc44a9a47d5c30866c40231a03eb3a6/protobuf-4.25.2-cp39-cp39-win_amd64.whl.metadata
  Using cached protobuf-4.25.2-cp39-cp39-win_amd64.whl.metadata (541 bytes)
Collecting sympy (from onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Using cached sympy-1.12-py3-none-any.whl (5.7 MB)
Collecting glog==0.3.1 (from voe==0.1.0->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 2))
  Using cached glog-0.3.1-py2.py3-none-any.whl (7.8 kB)
Collecting python-gflags>=3.1 (from glog==0.3.1->voe==0.1.0->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 2))
  Using cached python_gflags-3.1.2-py3-none-any.whl
Collecting six (from glog==0.3.1->voe==0.1.0->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 2))
  Using cached six-1.16.0-py2.py3-none-any.whl (11 kB)
Collecting humanfriendly>=9.1 (from coloredlogs->onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Using cached humanfriendly-10.0-py2.py3-none-any.whl (86 kB)
Collecting mpmath>=0.19 (from sympy->onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Using cached mpmath-1.3.0-py3-none-any.whl (536 kB)
Collecting pyreadline3 (from humanfriendly>=9.1->coloredlogs->onnxruntime->-r C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\condaenv.q1_003sq.requirements.txt (line 1))
  Using cached pyreadline3-3.4.1-py3-none-any.whl (95 kB)
Using cached onnxruntime-1.17.0-cp39-cp39-win_amd64.whl (5.6 MB)
Using cached numpy-1.26.4-cp39-cp39-win_amd64.whl (15.8 MB)
Using cached flatbuffers-23.5.26-py2.py3-none-any.whl (26 kB)
Using cached packaging-23.2-py3-none-any.whl (53 kB)
Using cached protobuf-4.25.2-cp39-cp39-win_amd64.whl (413 kB)
Installing collected packages: python-gflags, pyreadline3, mpmath, flatbuffers, sympy, six, protobuf, packaging, numpy, humanfriendly, glog, coloredlogs, voe, onnxruntime
Successfully installed coloredlogs-15.0.1 flatbuffers-23.5.26 glog-0.3.1 humanfriendly-10.0 mpmath-1.3.0 numpy-1.26.4 onnxruntime-1.17.0 packaging-23.2 protobuf-4.25.2 pyreadline3-3.4.1 python-gflags-3.1.2 six-1.16.0 sympy-1.12 voe-0.1.0

done
#
# To activate this environment, use
#
#     $ conda activate cnxsoft
#
# To deactivate an active environment, use
#
#     $ conda deactivate

PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec>
```
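Every binary package pulled into this environment is tagged cp39 (for example voe-0.1.0-cp39-cp39-win_amd64.whl and onnxruntime-1.17.0-cp39-cp39-win_amd64.whl), meaning it is built for CPython 3.9 on 64-bit Windows, while Python 3.12 is also installed on this machine. A minimal sketch for checking which interpreter a wheel targets, using a simplified file-name parser that ignores the optional build tag and compressed tag sets such as py2.py3:

```python
import sys


def wheel_tags(filename):
    """Split 'name-version-python-abi-platform.whl' into its five parts.

    Simplified: a wheel with an optional build tag (six parts) raises ValueError.
    """
    stem = filename[: -len(".whl")] if filename.endswith(".whl") else filename
    name, version, python_tag, abi_tag, platform_tag = stem.split("-")
    return name, version, python_tag, abi_tag, platform_tag


def matches_interpreter(python_tag, version_info=sys.version_info):
    """True if a CPython tag such as 'cp39' matches the given (major, minor) version."""
    return python_tag == f"cp{version_info[0]}{version_info[1]}"


if __name__ == "__main__":
    tags = wheel_tags("voe-0.1.0-cp39-cp39-win_amd64.whl")
    print(tags)  # ('voe', '0.1.0', 'cp39', 'cp39', 'win_amd64')
    print("Matches this interpreter:", matches_interpreter(tags[2]))
```

Running it with the environment's own python.exe versus the separate Python 3.12 install should give different answers for the same wheel.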

We can now activate the environment:

```
conda activate cnxsoft
```

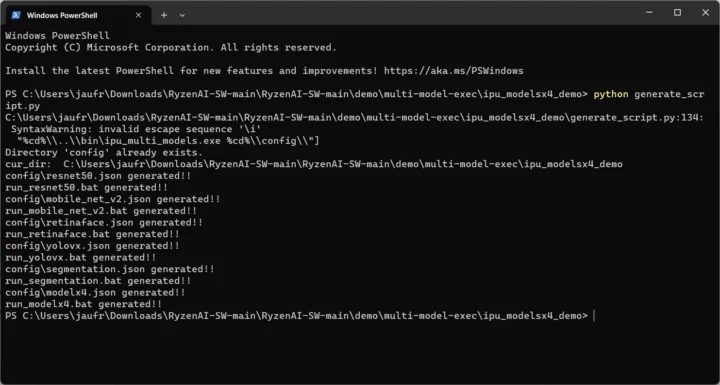

I downloaded the ONNX models and test image/video package (resource_multi_model_demo.zip) and unzipped it under demo/multi-model-exec/ipu_modelsx4_demo/. After that, I could run the command to generate the scripts.
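To confirm the resource package landed in the right place before generating the scripts, a short sketch can unpack it and check for the model and video files that the demo configurations reference in the log further down (mobilenetv2_1.4_int.onnx, resnet50_pt.onnx, detection.avi). It assumes the archive contains a top-level resource folder, which matches the directory listing below but is not confirmed.

```python
import zipfile
from pathlib import Path

# File names seen in the demo logs; assumed to live in a "resource" folder.
EXPECTED = [
    "resource/mobilenetv2_1.4_int.onnx",
    "resource/resnet50_pt.onnx",
    "resource/detection.avi",
]


def unpack_and_check(archive, demo_dir, expected=EXPECTED):
    """Extract archive into demo_dir and return the expected files still missing."""
    demo_dir = Path(demo_dir)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(demo_dir)
    return [name for name in expected if not (demo_dir / name).is_file()]


if __name__ == "__main__":
    archive = Path("resource_multi_model_demo.zip")
    demo_dir = Path("demo/multi-model-exec/ipu_modelsx4_demo")
    if archive.is_file():
        print("missing:", unpack_and_check(archive, demo_dir) or "nothing")
```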

It looks good so far:

```
PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo> ls

    Directory: C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo

Mode                 LastWriteTime         Length Name
----                 -------------         ------ ----
d-----         2/11/2024   4:46 PM                config
d-----         2/11/2024   8:45 PM                resource
-a----         2/11/2024   4:46 PM           7386 generate_script.py
-a----         2/11/2024   8:45 PM            741 run_mobile_net_v2.bat
-a----         2/11/2024   8:45 PM            735 run_modelx4.bat
-a----         2/11/2024   8:45 PM            736 run_resnet50.bat
-a----         2/11/2024   8:45 PM            738 run_retinaface.bat
-a----         2/11/2024   8:45 PM            740 run_segmentation.bat
-a----         2/11/2024   8:45 PM            734 run_yolovx.bat
```

But all of the scripts fail with the same error:

```
PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo> .\run_mobile_net_v2.bat
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set XLNX_VART_FIRMWARE=C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\1x4.xclbin
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set PATH=C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\bin;C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\python;C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..;C:\Windows\System32\AMD;C:\WINDOWS\system32;C:\WINDOWS;C:\WINDOWS\System32\Wbem;C:\WINDOWS\System32\WindowsPowerShell\v1.0\;C:\WINDOWS\System32\OpenSSH\;C:\Users\Administrator\AppData\Local\Microsoft\WindowsApps;C:\Program Files\PuTTY\;C:\Program Files\CMake\bin;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\Scripts\;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\;C:\Users\jaufr\anaconda3;C:\Users\jaufr\anaconda3\Library\mingw-w64\bin;C:\Users\jaufr\anaconda3\Library\usr\bin;C:\Users\jaufr\anaconda3\Library\bin;C:\Users\jaufr\anaconda3\Scripts;C:\Users\jaufr\AppData\Local\Programs\Python\Launcher\;C:\Users\jaufr\AppData\Local\Microsoft\WindowsApps
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set PYTHONPATH=C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\python312.zip;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\DLLs;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\Lib;C:\Users\jaufr\AppData\Local\Programs\Python\Python312;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\Lib\site-packages;set DEBUG_ONNX_TASK=0
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set DEBUG_DEMO=0
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set NUM_OF_DPU_RUNNERS=4
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set XLNX_ENABLE_GRAPH_ENGINE_PAD=1
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set XLNX_ENABLE_GRAPH_ENGINE_DEPAD=1
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\bin\ipu_multi_models.exe C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\config\mobile_net_v2.json
WARNING: Logging before InitGoogleLogging() is written to STDERR
I20240211 20:47:53.260922 21700 ipu_multi_models.cpp:136] config not set using_onnx_ep, using default: false
I20240211 20:47:53.260922 21700 ipu_multi_models.cpp:376] config mobile_net_v2 -> model_filter_id:5 thread_num:4 confidence_threshold:0.3 onnx_model_path:C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\resource\mobilenetv2_1.4_int.onnx video_file_path:C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\resource\detection.avi onnx_x:1 onnx_y:1 onnx_disable_spinning:0 onnx_disable_spinning_between_run:0 intra_op_thread_affinities: using_onnx_ep:0
I20240211 20:47:53.262941 21700 ipu_multi_models.cpp:332] g_show_width: 1024g_show_height: 640matrix_split_num: 1
I20240211 20:47:53.262941 21700 ipu_multi_models.cpp:340] use global gui thread
I20240211 20:47:53.486811 21700 onnx_task.hpp:113] using VitisAI
I20240211 20:47:56.181272 21700 ipu_multi_models.cpp:382] C:\Users\xbuild\Desktop\xj3\VAI_RT_WIN_ONNX_EP_ALL\onnxruntime\onnxruntime\core\providers\vitisai\imp\global_api.cc:56 OrtVitisAIEpAPI::Ensure [ONNXRuntimeError] : 1 : FAIL : LoadLibrary failed with error 126 "" when trying to load "C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\bin\onnxruntime_vitisai_ep.dll"
PS C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo> .\run_resnet50.bat
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set XLNX_VART_FIRMWARE=C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\1x4.xclbin
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set PATH=C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\bin;C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\python;C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..;C:\Windows\System32\AMD;C:\WINDOWS\system32;C:\WINDOWS;C:\WINDOWS\System32\Wbem;C:\WINDOWS\System32\WindowsPowerShell\v1.0\;C:\WINDOWS\System32\OpenSSH\;C:\Users\Administrator\AppData\Local\Microsoft\WindowsApps;C:\Program Files\PuTTY\;C:\Program Files\CMake\bin;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\Scripts\;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\;C:\Users\jaufr\anaconda3;C:\Users\jaufr\anaconda3\Library\mingw-w64\bin;C:\Users\jaufr\anaconda3\Library\usr\bin;C:\Users\jaufr\anaconda3\Library\bin;C:\Users\jaufr\anaconda3\Scripts;C:\Users\jaufr\AppData\Local\Programs\Python\Launcher\;C:\Users\jaufr\AppData\Local\Microsoft\WindowsApps
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set PYTHONPATH=C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\python312.zip;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\DLLs;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\Lib;C:\Users\jaufr\AppData\Local\Programs\Python\Python312;C:\Users\jaufr\AppData\Local\Programs\Python\Python312\Lib\site-packages;set DEBUG_ONNX_TASK=0
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set DEBUG_DEMO=0
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set NUM_OF_DPU_RUNNERS=4
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set XLNX_ENABLE_GRAPH_ENGINE_PAD=1
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>set XLNX_ENABLE_GRAPH_ENGINE_DEPAD=1
C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo>C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\..\bin\ipu_multi_models.exe C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\config\resnet50.json
WARNING: Logging before InitGoogleLogging() is written to STDERR
I20240211 20:52:41.000353 20180 ipu_multi_models.cpp:83] resnet50 config not set confidence_threshold, using default: 0.3
I20240211 20:52:41.010800 20180 ipu_multi_models.cpp:136] config not set using_onnx_ep, using default: false
I20240211 20:52:41.010800 20180 ipu_multi_models.cpp:376] config resnet50 -> model_filter_id:2 thread_num:4 confidence_threshold:0.3 onnx_model_path:C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\resource\resnet50_pt.onnx video_file_path:C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\ipu_modelsx4_demo\resource\detection.avi onnx_x:1 onnx_y:1 onnx_disable_spinning:0 onnx_disable_spinning_between_run:0 intra_op_thread_affinities: using_onnx_ep:0
I20240211 20:52:41.010800 20180 ipu_multi_models.cpp:332] g_show_width: 1024g_show_height: 640matrix_split_num: 1
I20240211 20:52:41.010800 20180 ipu_multi_models.cpp:340] use global gui thread
I20240211 20:52:41.210458 20180 onnx_task.hpp:113] using VitisAI
I20240211 20:52:41.910542 20180 ipu_multi_models.cpp:382] C:\Users\xbuild\Desktop\xj3\VAI_RT_WIN_ONNX_EP_ALL\onnxruntime\onnxruntime\core\providers\vitisai\imp\global_api.cc:56 OrtVitisAIEpAPI::Ensure [ONNXRuntimeError] : 1 : FAIL : LoadLibrary failed with error 126 "" when trying to load "C:\Users\jaufr\Downloads\RyzenAI-SW-main\RyzenAI-SW-main\demo\multi-model-exec\bin\onnxruntime_vitisai_ep.dll"
```
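The echoed set commands show that each generated run_*.bat wrapper only prepares a few environment variables before calling ipu_multi_models.exe with a JSON file from the config folder. Two details stand out in the log: PYTHONPATH points at the separate Python 3.12 installation rather than the conda environment, and `set DEBUG_ONNX_TASK=0` is echoed on the same line as the PYTHONPATH assignment. A hypothetical Python equivalent that makes the environment explicit, so it can be inspected or adjusted before launching (PYTHONPATH is left untouched, and the function names are illustrative, not part of the demo):

```python
import os
import subprocess
from pathlib import Path

# Values copied from the "set" lines echoed by the generated .bat files.
DEMO_VARIABLES = {
    "DEBUG_ONNX_TASK": "0",
    "DEBUG_DEMO": "0",
    "NUM_OF_DPU_RUNNERS": "4",
    "XLNX_ENABLE_GRAPH_ENGINE_PAD": "1",
    "XLNX_ENABLE_GRAPH_ENGINE_DEPAD": "1",
}


def demo_environment(demo_dir, base_env=None):
    """Return a copy of base_env (default: os.environ) with the demo variables set."""
    demo_dir = Path(demo_dir)
    parent = demo_dir.parent  # the "ipu_modelsx4_demo\.." folder in the log
    env = dict(os.environ if base_env is None else base_env)
    env.update(DEMO_VARIABLES)
    env["XLNX_VART_FIRMWARE"] = str(parent / "1x4.xclbin")
    # Prepended folders, in the order they appear in the logged PATH. Whether the
    # .bat adds C:\Windows\System32\AMD itself is an assumption.
    prepend = [parent / "bin", parent / "python", parent, Path(r"C:\Windows\System32\AMD")]
    env["PATH"] = os.pathsep.join([str(p) for p in prepend] + [env.get("PATH", "")])
    return env


def run_demo(demo_dir, config_name):
    """Launch ipu_multi_models.exe with config/<config_name>.json (Windows only)."""
    demo_dir = Path(demo_dir)
    exe = demo_dir.parent / "bin" / "ipu_multi_models.exe"
    config = demo_dir / "config" / f"{config_name}.json"
    completed = subprocess.run([str(exe), str(config)], env=demo_environment(demo_dir))
    return completed.returncode
```

For example, `run_demo(r"C:\path\to\ipu_modelsx4_demo", "mobile_net_v2")` would mirror run_mobile_net_v2.bat. This only makes the environment easier to inspect; on its own it would not be expected to fix the DLL error.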

Windows programs often have cryptic error messages, and I don’t know what to do with this one:

```
FAIL : LoadLibrary failed with error 126 "" when trying to load "...\multi-model-exec\bin\onnxruntime_vitisai_ep.dll"
```

The file is there, but the system complains about something, and a web search did not help. I suspect some mismatch between versions… It’s probably a matter of spending a few hours or days to fix the issue, and I’m not going to do that.
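For what it's worth, error 126 is the Win32 code ERROR_MOD_NOT_FOUND, which Windows also returns when the DLL file exists but one of the DLLs it depends on cannot be located, so “the file is there” and error 126 are not contradictory. A sketch for reproducing the load outside the demo, assuming Python 3.8 or later on Windows. Note that these Python versions no longer search PATH for a DLL's dependencies, so folders the demo relies on through PATH have to be passed explicitly.

```python
import contextlib
import ctypes
import os
import sys

# A few standard Win32 system error codes relevant to LoadLibrary failures.
WIN32_LOAD_ERRORS = {
    2: "ERROR_FILE_NOT_FOUND: the file could not be found",
    126: "ERROR_MOD_NOT_FOUND: the DLL or one of its dependencies could not be found",
    127: "ERROR_PROC_NOT_FOUND: a required function is missing from a dependency",
    193: "ERROR_BAD_EXE_FORMAT: not a valid image for this process (e.g. 32/64-bit mismatch)",
}


def describe_load_error(code):
    """Map a Win32 error code to a short explanation."""
    return WIN32_LOAD_ERRORS.get(code, f"Win32 error {code}")


def try_load(dll_path, extra_dirs=()):
    """Return None if dll_path loads, else an explanation (Windows only).

    Folders normally found through PATH (e.g. C:\\Windows\\System32\\AMD) go in extra_dirs.
    """
    if sys.platform != "win32":
        return "not running on Windows"
    with contextlib.ExitStack() as stack:
        for folder in extra_dirs:
            stack.enter_context(os.add_dll_directory(folder))
        try:
            ctypes.WinDLL(os.path.abspath(dll_path))
        except OSError as err:
            code = getattr(err, "winerror", None)
            if code is None and isinstance(err, FileNotFoundError):
                code = 126  # recent Pythons report ERROR_MOD_NOT_FOUND as FileNotFoundError
            return describe_load_error(code) if code is not None else str(err)
    return None
```

Passing the full path from the error message together with `[r"C:\Windows\System32\AMD"]` would show whether the DLL loads once that folder is searchable.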

So the NPU/IPU in the Ryzen 9 7940HS can be used in Windows 11, but that’s not exactly a straightforward task right now, and I’m not sure any programs for end-users have been released just yet… Let me know in the comments if you know any.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


It’s also in the new 8700G and 8600G desktop APUs.

That’s too bad and it might be considered an AMD failure rather than a Windows failure. They fall flat on their faces repeatedly when it comes to software.

Best case scenario, XDNA1 is there just to give time for developers to start using it. The real action will happen after XDNA2 lands in the Zen 5 Strix family of APUs, with triple to quintuple the performance (45-50 TOPS instead of 10/16). That may actually outperform the iGPU in some cases, so they can hype up more than laptop battery life.

It’s surprising they would have an on-package NPU that can’t keep up with the iGPU, unless it’s drastically more power-efficient than using the CPU, bearing in mind that the CPU will still have to feed the NPU, which is still a large part of the compute power budget. With Zen5 that will get even harder (+VNNI), so if it can’t beat the iGPU and CPU significantly on both power and latency, then it’s going to be a waste of die space. Especially as the iGPU has PCI bus control and can do some AI without needing the CPU cores awake. It’ll be interesting. I don’t know whether the NPU has one or more general compute cores with PCI bus control, or whether there will be well-documented APIs, perhaps even allowing apps to use it for wake words.

It should be more power efficient and die area efficient for the operations it does, but I don’t have the numbers. It does have some of its own L2 cache. Intel also claims better power efficiency for their NPU in Meteor Lake.

AMD claims 39 TOPS for Hawk Point, of which 16 TOPS is from the NPU. It was increased from 10 TOPS in Phoenix with clock speed increases alone.

With Strix Point, the NPU hits 45-50 TOPS, the iGPU grows in size from 12 to 16 CUs, and there are 12 cores (4x Zen 5 + 8x Zen 5C) with new AVX-512 instructions we heard about the other day. They are probably increasing the die area devoted to the NPU. Strix Point is supposed to use the same TSMC N4 node as its predecessors.

AMD is thinking about mobile first. There is no evidence they will bring XDNA to the non-APU desktop lineup yet. Microsoft is said to be pushing AMD, Intel, and Qualcomm to adopt >40 TOPS NPUs.

We now know a potential application for using the NPU during gaming. Microsoft announced “Automatic Super Resolution” which may use the NPU for upscaling:

wccftech.com/microsoft-windows-11-24h2-ai-super-resolution-technology-works-across-all-pcs-npus/

> I’ve just completed the review of the GEEKOM A7 mini PC powered by an AMD Ryzen 9 7940HS CPU with Windows 11 – but need to wait before publishing it

Why “need to wait”? Geekom requirement?

Yes, GEEKOM asked me to wait until February 20. Sometimes it’s because they don’t have stock. The post is scheduled for that day.

I got the same LoadLibrary error, but rerunning the driver installer (amd_install_kipudrv.bat) fixed it. I added “cd /d %~dp0” to the top of the bat file, as there seems to be some file signing with the CAT file. Alternatively, start the BAT file from within CMD as Admin. After a reboot, the run_modelx4 demo works.

When you run a multi-model demo, could you try using CMD rather than PowerShell? I have found some errors when using PowerShell.

Thanks for looking into it. I have some issues with Python in CMD right now. I’ll check again this coming weekend.

Any idea if CUDA would work on there with Zluda? GitHub – vosen/ZLUDA: CUDA on AMD GPUs

Hello

I use ryzen-ai-sw-1.1, and the installation runs without errors. But when I start quicktest, I receive the following warning, and the test does not produce any result:

C:\Programs\anaconda3\Lib\site-packages\onnxruntime\capi\onnxruntime_inference_collection.py:69: UserWarning: Specified provider ‘VitisAIExecutionProvider’ is not in available provider names.Available providers: ‘AzureExecutionProvider, CPUExecutionProvider’

warnings.warn(

Test Passed

Any thoughts ? Any help appreciated.

Quicktest works now in a new conda environment and in directories without German characters. But I failed to run the Yolo8 example from the tutorial, as the build didn’t work.

Compared to CUDA, AMD always causes me more problems and difficulties, which is a bit frustrating.

Thanks @cnxsoft for (re)pointing this to me. Now that I bought a Geekom A7 for myself, this is doubly interesting!

Given how alpha-testing-like your entire experience with this on Win11 went, I wonder whether it would fare any better on Linux, especially considering that AMD has since published (and been updating) an XDNA driver for Linux: https://github.com/amd/xdna-driver

Have you had any experience with this on Linux? Any advice for me, in case I decide to try it? I’m a total newbie re: AMD GPUs; this is actually my first AMD graphics ‘card’ since they bought the ATI business in the 2000s.

I don’t know about the XDNA-driver and I can’t see any reference to 7940HS in it.

I still think it should be easier in Windows. It’s been almost three months since I tested it on my A7 so it might work better now.