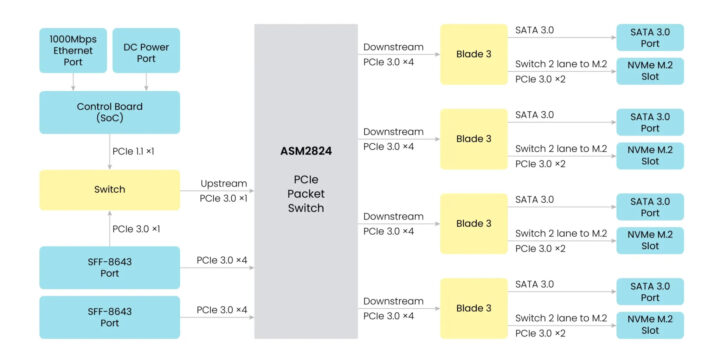

The Mixtile Cluster Box consists of four Mixtile Blade 3 Pico-ITX single board computers, each powered by a Rockchip RK3588 processor and connected over a 4-lane PCIe Gen3 interface through a U.2 to PCIe/SATA breakout board.

We mentioned the Cluster Box last year, but Mixtile had few details about it at the time. The company has now released more technical information, worked on the software, and just launched the box for $339 (without the SBCs).

Mixtile Cluster Box specifications:

- Supported SBCs – Up to 4x Mixtile Blade 3 with Rockchip RK3588, up to 32GB LPDDR4 each, up to 256GB eMMC flash each

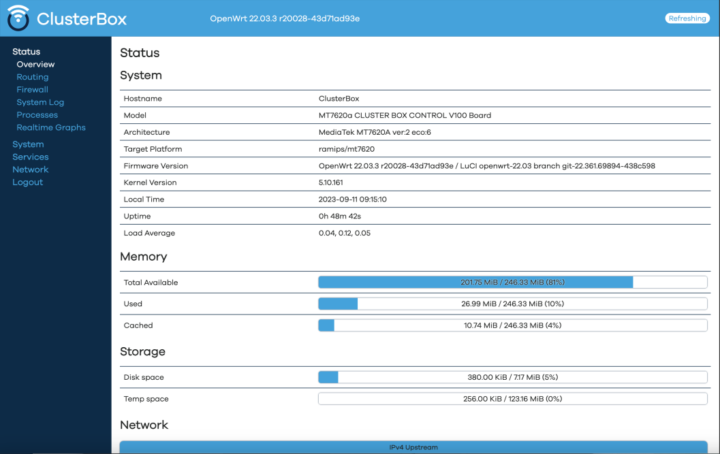

- Control board running OpenWrt 22.03

- SoC – MediaTek MT7620A MIPS processor @ 580 MHz

- System Memory – 256 MB DDR2

- Storage – 16 MB SPI Flash

- PCIe Switch – ASMedia ASM2824 with four PCIe 3.0 4-lane ports

- Storage interfaces via 4x U.2 breakout boards

- 4x NVMe M.2 M-Key slots (PCIe 3.0 x2 each, connected to Blade 3)

- 4x SATA 3.0 ports (connected to Blade 3)

- Networking – Gigabit Ethernet port

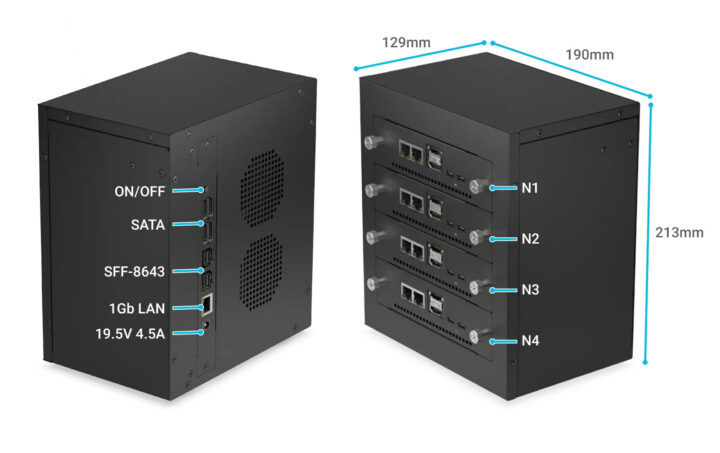

- Expansion – 2x SFF-8643 ports (PCIe 3.0 x4 each, upstream)

- Misc – 2x 60 mm fans, power button with blue LED

- Power Supply – 19 to 19.5V/4.74A via DC jack

- Dimensions – 213 x 190 x 129 mm

- Materials – Metal case, SGCC steel

- Temperature Range – Operating: 0°C to 80°C; Storage: -20°C to 85°C

- Relative humidity – Operating: 10% to 90%; Storage: 5% to 95%
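The link widths in the specifications can be turned into rough bandwidth figures. The short Python sketch that follows is our own illustration, not something from Mixtile's documentation. It uses the standard PCIe line rates and encodings (2.5 GT/s with 8b/10b for PCIe 1.1, 5 GT/s with 8b/10b for PCIe 2.0, 8 GT/s with 128b/130b for PCIe 3.0) and ignores protocol overhead, so real-world throughput will be somewhat lower. The PCIe 1.1 x1 entry is the controller-board link mentioned by a reader in the comments, which we have not verified.

```python
# Rough, per-direction PCIe bandwidth estimate from line rate and encoding.
# Ignores TLP/DLLP protocol overhead, so real-world throughput is lower.

# Generation -> (line rate in GT/s per lane, encoding efficiency)
PCIE_GENERATIONS = {
    "1.1": (2.5, 8 / 10),     # 8b/10b encoding
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_mb_s(gen: str, lanes: int) -> float:
    """Return the post-encoding bandwidth of a PCIe link in MB/s (one direction)."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    # One transfer carries one bit per lane: GT/s -> Gbit/s -> MB/s (divide by 8).
    return gt_per_s * 1000 * efficiency / 8 * lanes


# Links taken from the Cluster Box specifications, plus the PCIe 1.1 x1
# controller link reported in the comments (unverified assumption).
LINKS = [
    ("Blade 3 to ASM2824 switch port (PCIe 3.0 x4)", "3.0", 4),
    ("NVMe M.2 slot (PCIe 3.0 x2)", "3.0", 2),
    ("SFF-8643 upstream port (PCIe 3.0 x4)", "3.0", 4),
    ("Controller link per reader comment (PCIe 1.1 x1)", "1.1", 1),
]

if __name__ == "__main__":
    for name, gen, lanes in LINKS:
        print(f"{name}: ~{link_bandwidth_mb_s(gen, lanes):.0f} MB/s")
```

By this estimate, each Blade 3 gets roughly 3.9 GB/s per direction to the switch and each M.2 slot about 2 GB/s, while a PCIe 1.1 x1 link tops out at 250 MB/s, which is why it matters whether node-to-node traffic can bypass the controller.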

Mixtile Cluster Box is an enclosure with a built-in PCIe switch, designed to house a four-node cluster of Mixtile Blade 3 boards for small business applications and edge computing. From the user's perspective, the machine can be accessed through OpenWrt using SSH or a web interface.

The Rockchip RK3588 boards come preloaded with a customized Linux system with Kubernetes, and it’s possible to control each Mixtile Blade from OpenWrt using a command called nodectl with the following options:

```sh
nodectl list                                          # list all active nodes
nodectl rescan                                        # rescan all active nodes
nodectl poweron (--all|-n N)                          # power on all nodes, or only node N
nodectl poweroff (--all|-n N)                         # power off all nodes, or only node N
nodectl reboot (--all|-n N)                           # reboot all nodes, or only node N
nodectl flash (--all|-n N) -f /path/to/firmware.img   # flash the firmware over PCIe to all nodes, or only node N
nodectl console -n N                                  # enter the console of node N
```
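If you want to drive the nodes from a workstation rather than typing commands on the control board, the documented syntax is simple enough to wrap in a script. The following Python sketch is our own illustration, not a Mixtile tool: it builds `nodectl` argument lists that follow the options listed above, rejects combinations that syntax does not allow, and can run them over SSH. The helper names and the host `root@cluster-box.local` are hypothetical, and node numbers are passed through unchanged because the documented syntax does not say whether they start at 0 or 1.

```python
import shlex
import subprocess
from typing import List, Optional

# Action groups derived from the documented nodectl syntax.
NO_TARGET = {"list", "rescan"}                            # nodectl <action>
ALL_OR_NODE = {"poweron", "poweroff", "reboot", "flash"}  # (--all|-n N)
NODE_ONLY = {"console"}                                   # -n N only


def nodectl_args(action: str, node: Optional[int] = None,
                 all_nodes: bool = False, firmware: Optional[str] = None) -> List[str]:
    """Build a nodectl argument list, rejecting combinations the syntax does not allow."""
    if action in NO_TARGET:
        if node is not None or all_nodes or firmware:
            raise ValueError(f"'{action}' takes no node or firmware argument")
        return ["nodectl", action]
    if action not in ALL_OR_NODE | NODE_ONLY:
        raise ValueError(f"unknown nodectl action: {action!r}")
    if action in NODE_ONLY and all_nodes:
        raise ValueError(f"'{action}' needs a single node (-n N)")
    if all_nodes == (node is not None):
        raise ValueError("specify exactly one of all_nodes=True or node=N")

    args = ["nodectl", action]
    # Node numbers are passed through as-is (0- or 1-based is not documented).
    args += ["--all"] if all_nodes else ["-n", str(node)]
    if action == "flash":
        if not firmware:
            raise ValueError("'flash' requires a firmware image path (-f)")
        args += ["-f", firmware]
    elif firmware:
        raise ValueError(f"'{action}' does not take a firmware image")
    return args


def run_on_controller(host: str, args: List[str]) -> str:
    """Run a non-interactive nodectl command on the control board via SSH.

    Not suitable for 'console', which needs an interactive terminal (ssh -t).
    """
    remote_command = shlex.join(args)  # quote for the remote POSIX shell
    result = subprocess.run(["ssh", host, remote_command],
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Print the command line only; uncomment the last line to use real hardware.
    cmd = nodectl_args("flash", all_nodes=True, firmware="/path/to/firmware.img")
    print(shlex.join(cmd))
    # print(run_on_controller("root@cluster-box.local", nodectl_args("list")))
```

Keeping argument building separate from execution means the validation can be tested without hardware. For `console`, an interactive session such as `ssh -t <host> nodectl console -n N` is the more natural fit.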

More technical details and a getting started guide can be found on the documentation website.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


The configuration looks very interesting, but it is still a pity that the firmware for these compute blades is Android-based and you have to run everything inside containers due to some driver issues.

You’d have thought that with all the dev work on the RK3588, that firmware would be upstream Linux by now…

Would be interesting to know if the nodes* support P2P transfers, or if everything has to go through the controller memory (PCIe 1.1 x1).

*) The hardware definitely does, but what is supported by the driver on the controller board is something completely different.

So what is the use case for this? A Kubernetes cluster? It's not cheap for what it is, IMHO.

[If the ASM2824 supports it (downstream/upstream), it might offer an additional x16 PCIe 3.x connector, with x1 (250-500 MB/s), x2, x4, or x8 data lanes (?)]

Is the latency what makes this interesting? Bandwidth is probably high, but that’s not usually the issue.