Useful Sensors' “AI in a Box” LLM (large language model) solution works offline with complete privacy and leverages the NPU in the Rockchip RK3588S processor to provide ChatGPT-like conversational AI without requiring an internet connection or registration.

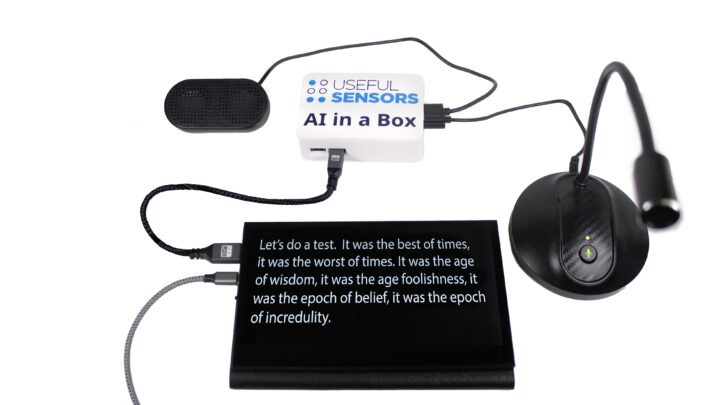

The AI in a Box prototype currently relies on off-the-shelf hardware, namely a Radxa ROCK 5A SBC with 8GB RAM housed in a plastic enclosure, and the software relies on open-source models such as the Whisper speech-to-text model and Llama 2 language models.

Besides conversational AI, where you can interact with the box as if you were talking to a person, the AI in a Box can also be used for other purposes:

- Live Captions – Using its audio input, the box can display subtitles/closed captions for a live event, or help people who have trouble hearing a conversation.

- Live Translation – It can also translate between various languages in near real-time. Simply select the source and target languages, and you’ll see split-screen captions showing the original input and the machine translation output.

- Full privacy – The Radxa ROCK 5A does not come with WiFi or Bluetooth by default, and while the board has an Ethernet port, it does not need to be connected since an Internet connection is not required. The software processes all data locally, ensuring full privacy for sensitive conversations or materials.

- Keyboard mode – When connected to another host such as a Raspberry Pi, the box can act as a USB keyboard, so it can be used to transcribe audio files or videos into text on that host.

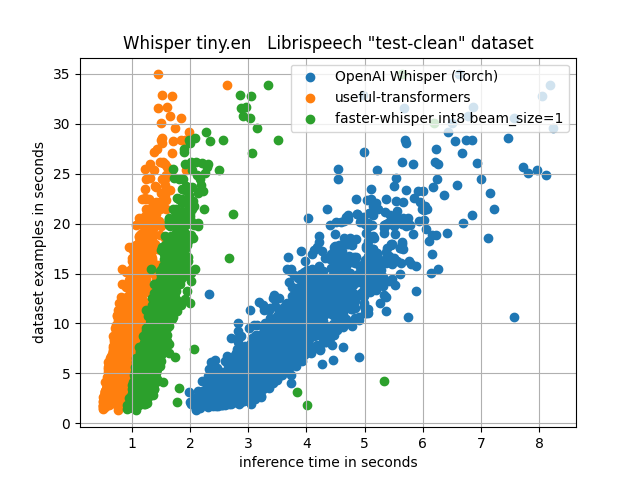

The company developed the Useful Transformers library to run inference at the edge on low-cost, low-energy processors, and its first implementation optimizes OpenAI’s Whisper speech-to-text model for the RK3588(S) processor. The library is available on GitHub.

The chart above shows the performance of the Useful Transformers library with the tiny.en Whisper model, which transcribes speech at 30x real-time speed, twice as fast as the best-known implementation (faster-whisper). The chart also shows that most inferences complete within 500 ms to 2 seconds.
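To make the "30x real-time" figure concrete, the short Python sketch below converts a real-time speedup into the expected processing time for a clip, and a measured inference time back into a speedup. The clip lengths are illustrative assumptions, not values taken from the chart.

```python
def processing_time(audio_seconds: float, speedup: float) -> float:
    """Seconds needed to transcribe `audio_seconds` of speech at `speedup`x real time."""
    return audio_seconds / speedup


def speedup_from_timing(audio_seconds: float, inference_seconds: float) -> float:
    """Real-time speedup implied by a measured inference time."""
    return audio_seconds / inference_seconds


if __name__ == "__main__":
    # Hypothetical clip lengths; 30x is the speedup quoted for tiny.en.
    for clip in (15.0, 30.0, 60.0):
        print(f"{clip:>5.1f} s of audio -> {processing_time(clip, 30.0):.2f} s at 30x")
    # An implementation running at half the speed (15x) takes twice as long.
    print(processing_time(30.0, 15.0) / processing_time(30.0, 30.0))
```

Being "twice as fast" simply halves the processing time for the same clip, which is what the last line illustrates.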

The current working hardware is a prototype kit with a Radxa ROCK 5A housed in a plastic case and connected to an HDMI display, a USB speaker, and a USB microphone.

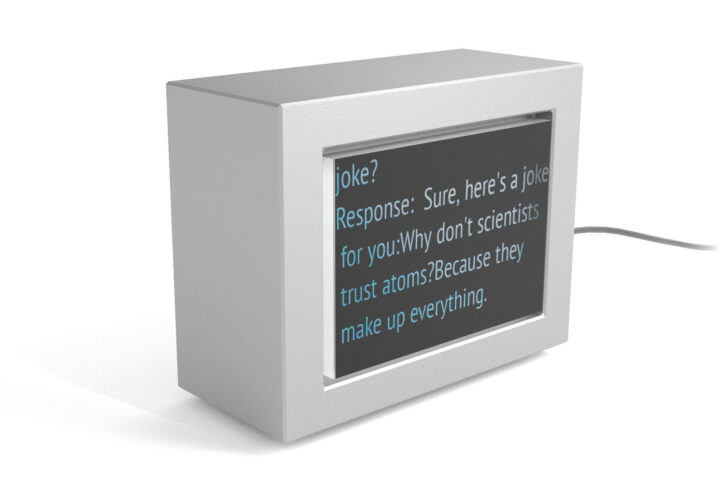

The final product, however, will be a fully enclosed solution with a Rockchip RK3588S SoC, 8GB RAM, a built-in display, speakers, and a microphone in a custom enclosure. The operating system is Ubuntu 22.04.

Useful Sensors has just launched the AI in a Box on Crowd Supply with a $30,000 funding target. Rewards start at $299 for the final product, scheduled to ship by January 31, 2024. There is also a $475 AI in a Box Prototype Kit with the ROCK 5A, enclosure, display, and other accessories, with delivery scheduled by December 1, 2023, for people who want to experiment earlier with LLMs on the Rockchip RK3588S processor. Shipping is $8 to the US and $18 to the rest of the world.

Thanks to TLS for the tip.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


I’ve already thought about using the RK3588 with Llama 2 models, but I’m using an Ampere Altra with lots of RAM and cores, and its Neoverse N1 cores are pretty much the same as the Cortex-A76 cores in the RK3588. While text generation doesn’t scale much with more cores and plateaus somewhere between 20 and 40 cores, text parsing scales well. I tried to analyze patches with only 4 cores and it took a very long time. So I tend to consider the RK3588 a bit tight for AI processing. Maybe Whisper is less CPU-intensive, but when you start asking the machine to process some tasks, you really want at least a 13B model (at least until Qwen-7B becomes usable), and a 13B model will not run in 8GB RAM and really needs 16GB. As such, I think such a board should ship with 16GB to be more versatile if it aims at playing with AI.
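The 8GB vs 16GB argument can be checked with back-of-the-envelope arithmetic: weight memory ≈ number of parameters × bits per weight / 8. The Python sketch below assumes 16-, 8- and 4-bit weights as example quantization levels. It counts the weights only, so real usage (KV cache, runtime buffers, the OS itself) is higher.

```python
GIB = 1024 ** 3  # bytes per GiB


def weight_memory_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GiB.

    This is a lower bound: it ignores the KV cache, activations,
    runtime overhead and memory used by the operating system.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / GIB


if __name__ == "__main__":
    # Assumed quantization levels, for illustration only.
    for params in (7, 13):
        for bits in (16, 8, 4):
            print(f"{params}B @ {bits:>2}-bit: {weight_memory_gib(params, bits):5.1f} GiB")
```

Under these assumptions, a 13B model needs about 6 GiB for 4-bit weights alone and about 12 GiB at 8-bit. That leaves little or no headroom on an 8GB board, which is consistent with the comment above.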

But here I understand they are using the 6 TOPS NPU rather than only the CPU cores, so the situation may be a bit different.

Maybe, but applications making use of the NPU are still quite rare, and they are mostly Python-based, consuming many times the required memory simply because they load large formats through memory copies instead of mmapping compact ones. Like everyone, I first tried the Python horrors found everywhere on the net and forced myself to persist despite the usual errors caused by constant API changes (whoever downloads a Python program more than a month old and finds that it still works is lucky), but I quickly realized it was not going to get me far given the disk and RAM usage! That’s why I think their product is probably tailored to their exact application and will not be able to evolve beyond that, simply due to the ecosystem and the extreme resource usage in this domain.
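The difference between copying a large file into RAM and memory-mapping it can be illustrated with Python's standard `mmap` module. This is a minimal sketch in which a hypothetical temporary file stands in for model weights; it does not reflect how any particular NPU runtime actually loads models.

```python
import mmap
import os
import tempfile


def load_by_copy(path: str) -> bytes:
    """Read the whole file into a new bytes object (a full copy in process memory)."""
    with open(path, "rb") as f:
        return f.read()


def load_by_mmap(path: str) -> mmap.mmap:
    """Map the file read-only. The OS loads pages lazily on access, and they are
    backed by the page cache instead of being copied into a private buffer."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file; the mapping stays valid after f is closed.
        return mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)


if __name__ == "__main__":
    # Hypothetical stand-in for a model file: a 4-byte header plus 1 MiB of zeros.
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"HDR0" + bytes(1024 * 1024))
    copied = load_by_copy(path)   # the full file is now duplicated in RAM
    mapped = load_by_mmap(path)   # only pages that are touched become resident
    print(copied[:4], mapped[:4], len(mapped))
    mapped.close()
    os.remove(path)
```

With the mapped version, reading the header touches a single page, whereas the copied version has already allocated and filled a buffer the size of the whole file.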

Personal Skynet. Just what every schizophrenic needs for a good time.

I like the idea:

With these considerations in mind, the asking price is acceptable IMO.

I will not purchase it (I already have a ROCK 5B), but I’ll take a closer look at Whisper, which I’ve just discovered. Thank you, JLA.

How does Whisper compare with Vosk?