Hey, Karl here. The timing couldn’t have been better when I was asked to review the TerraMaster F4-423 4-bay NAS. Let me explain why.

I run Unraid as my OS of choice for my home lab. I have found it easy to maintain and hard to break. My old rig had a 3900X with 3 cores (6 threads) dedicated to Docker containers and the remaining cores running VMs. It has been a fun learning experience.

I have run it with several different VM configurations over the past few years. Most of the time I ran two VMs, one personal and one for work, and I would RDP into the work VM. If I wanted to game, I had a third gaming VM and allocated all resources to it. It’s not super convenient, and it’s as convoluted as it sounds. But recently I moved back to my company-provided laptop and liked the idea of running bare metal again.

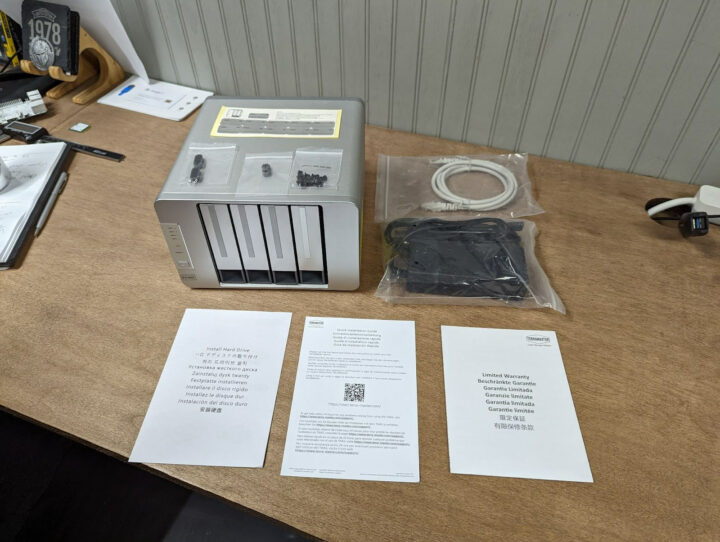

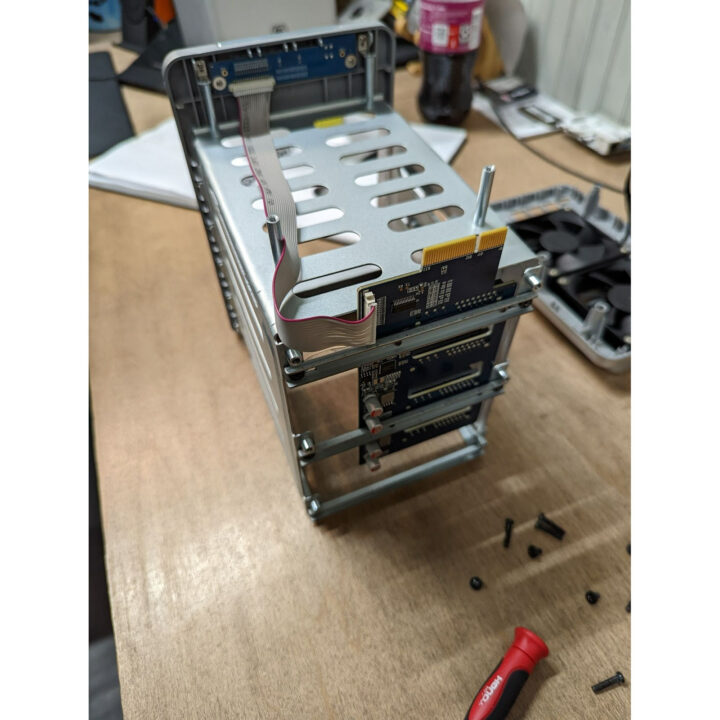

I wanted a dedicated server but had some reservations regarding space, power, and noise. I am not a data hoarder. I have a modest collection of movies and storage is mainly for pictures and home videos. I’m going to share my experience migrating my Unraid server over to the F4-423.

TerraMaster F4-423 specifications

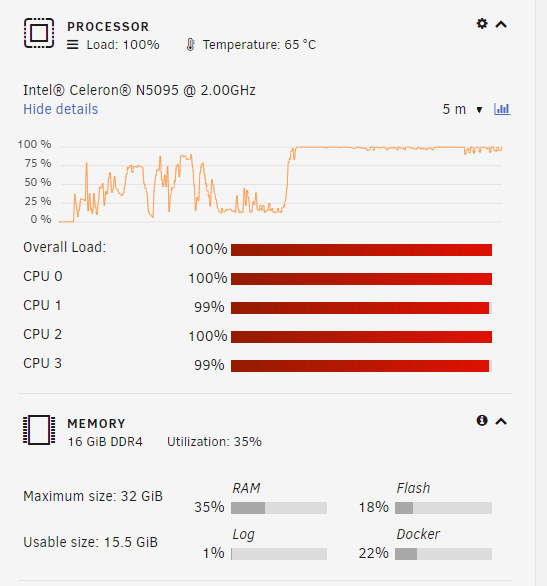

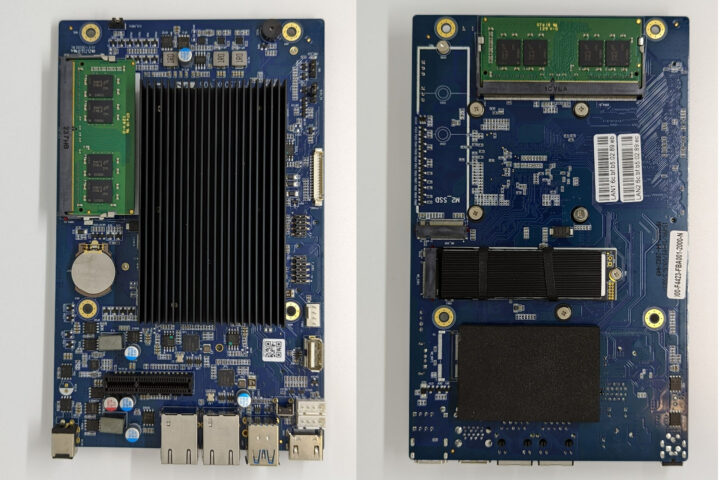

The NAS is driven by an Intel Celeron N5095-based board, which I found wholly adequate for my needs.

TerraMaster F4-423 specifications pulled from the Amazon store:

- Powerful hardware: N5105/5095 Quad-core 2.0GHz CPU, 4GB RAM DDR4 (expandable up to 32GB). Dual 2.5-Gigabit Ethernet ports – Supports up to 5 Gbps under Link Aggregation. 4-bay NAS designed for SMB high-performance requirements.

- Maximum internal raw capacity: 80TB (20 TB drive x 4). Compatible with 3.5-inch and 2.5-inch SATA HDD, and 2.5-inch SATA SSD. Supports RAID 0/1/5/6/10, Supports online capacity expansion, and online migration. The built-in M.2 2280 NVMe SSD slot can realize SSD cache acceleration and increase the storage efficiency of the disk array several times.

- Multiple Backup Solutions: Centralized Backup, Duple Backup, Snapshot, and CloudSync; enhance the safety of your data with multiple backup applications.

- Easily build file storage servers, mail servers, web servers, FTP servers, MySQL databases, CRM systems, Node.js, and Java virtual machines, as well as a host of other commercial applications.

- Small-sized compact design that can be used vertically and horizontally. Features an aluminum-alloy shell and intelligent temperature control ultra-quiet fan, good in heat dissipation and Very Quiet.

Unboxing and Teardown

Let’s review external connectivity first.

External connectivity includes HDMI 2.0 and USB 3.2 Gen 2×1 ports, the latter offering 10 Gbps of throughput per port. I only have one high-speed device, the Elgato Cam Link 4K, and I ran into no issues during testing. It is very sensitive to bandwidth limitations, so I would have known immediately. I tested it passed through to a Windows VM in Unraid.

Next are two 2.5 Gbps Ethernet ports, each using one PCIe lane and an Intel i225 controller.

The board only supports 2280 NVMe drives; it lacks standoffs for shorter drives. I had a couple of 2230 SSDs and tested them by holding them in place with Kapton tape.

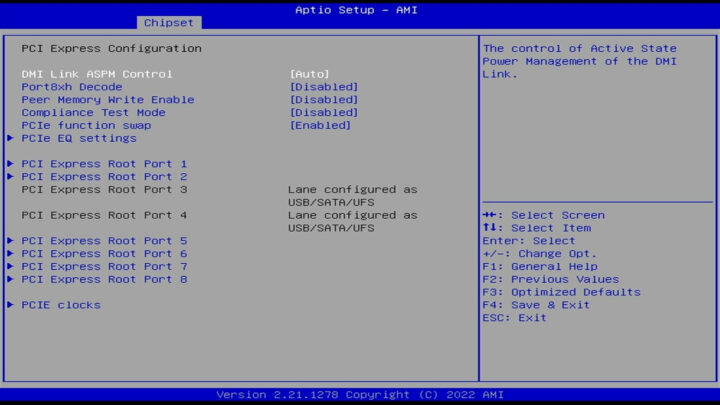

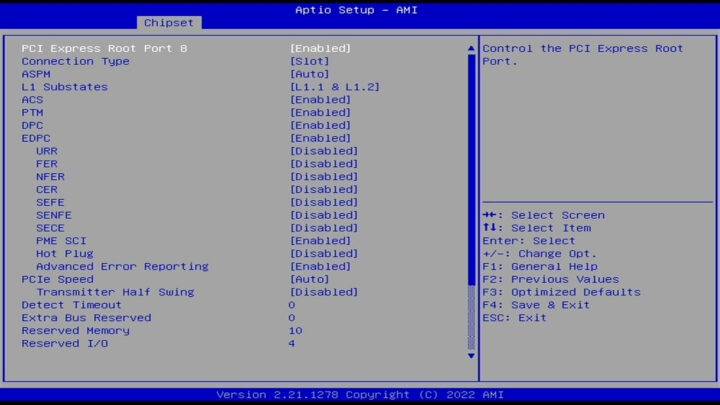

Now let’s look at the PCIe config in the BIOS.

Lanes 1 and 2 are the LAN ports mentioned above.

Lanes 3 and 4 are labeled USB/LAN/UFS. These appear to be used by onboard ports, which consume lanes.

Lanes 5 and 6 are the M.2 slots.

Lane 7 is the ASMedia ASM1061, providing two additional SATA ports.

Lane 8 has no useful description, just “PCI Express Root Port 8”. Unused?
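As a rough sanity check, the root-port assignments above can be tallied in a few lines of Python. This is a sketch that assumes one PCIe 3.0 lane per root port, as the BIOS numbering suggests; the labels are transcribed from the list above, and Root Port 8 is treated as free only because nothing appears to be attached to it.

```python
# BIOS root-port assignments as listed above (assumed: one PCIe 3.0 lane each).
# Lane 8 is only labeled "PCI Express Root Port 8" and looks unused.
LANE_MAP = {
    1: "2.5GbE LAN port 1",
    2: "2.5GbE LAN port 2",
    3: "USB/LAN/UFS",
    4: "USB/LAN/UFS",
    5: "M.2 NVMe slot 1",
    6: "M.2 NVMe slot 2",
    7: "ASMedia ASM1061 (2 extra SATA ports)",
    8: None,  # nothing identified on this root port
}


def lane_budget(lane_map):
    """Split lane numbers into (assigned, apparently free) sorted lists."""
    assigned = sorted(n for n, dev in lane_map.items() if dev is not None)
    free = sorted(n for n, dev in lane_map.items() if dev is None)
    return assigned, free


assigned, free = lane_budget(LANE_MAP)
print(f"{len(assigned)} of {len(LANE_MAP)} lanes assigned, free: {free}")
```

With every other lane spoken for, the only spare capacity is that single unlabeled root port.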

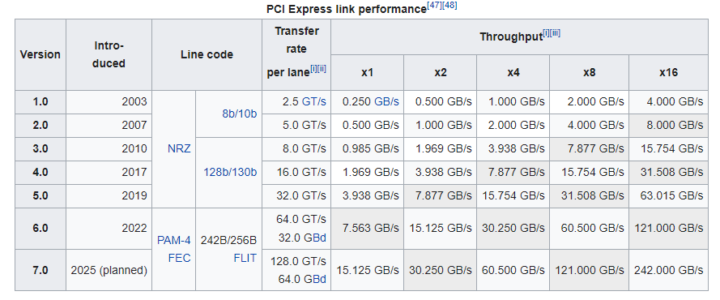

This is PCIe 3.0, which gives us about 0.985 GB/s (985 MB/s, or 7.88 Gbps) per lane.
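The per-lane figure follows from the PCIe 3.0 signaling rate of 8 GT/s and its 128b/130b line encoding. A minimal Python sketch of that arithmetic; it ignores packet and protocol overhead, so real drives will land somewhat lower.

```python
GT_PER_S = 8.0        # PCIe 3.0 transfer rate per lane (gigatransfers/s)
ENCODING = 128 / 130  # 128b/130b line encoding used by PCIe 3.0


def pcie3_lane_gbps(lanes=1):
    """Bit rate after line encoding, before packet/protocol overhead."""
    return GT_PER_S * ENCODING * lanes


def gbps_to_mb_per_s(gbps):
    """Convert gigabits/s to decimal megabytes/s."""
    return gbps * 1000 / 8


for lanes in (1, 2, 4):
    gbps = pcie3_lane_gbps(lanes)
    print(f"x{lanes}: {gbps:.2f} Gbps = {gbps_to_mb_per_s(gbps):.0f} MB/s")
```

This prints about 7.88 Gbps (985 MB/s) for x1, 15.75 Gbps (1969 MB/s) for x2 and 31.51 Gbps (3938 MB/s) for x4. One lane is roughly three times what a single 2.5 GbE port needs, so the network ports are not lane-starved.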

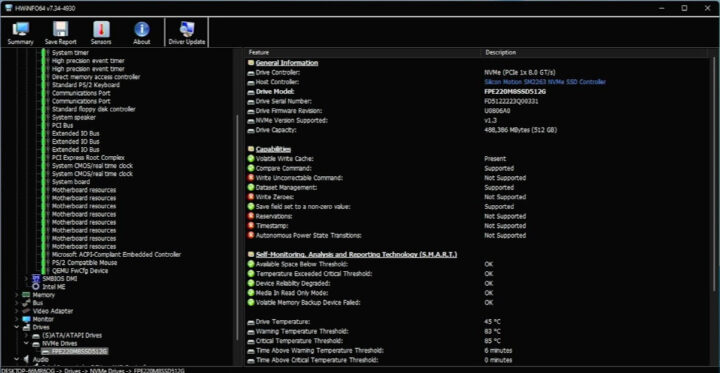

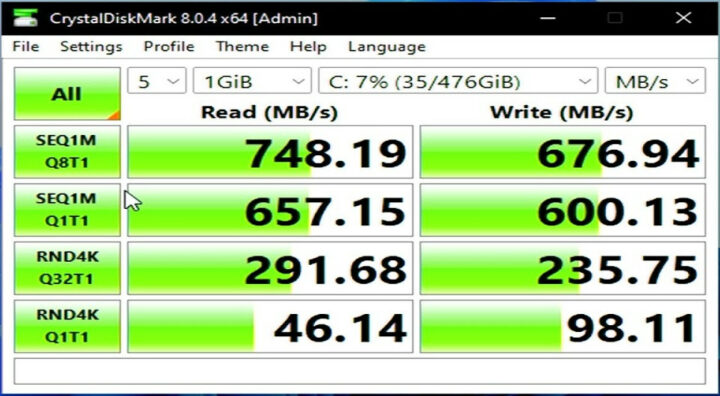

M.2 NVMe

This is the only engineering choice that raises a question for me. It looks like each of the two M.2 NVMe sockets gets only one PCIe lane. I tried three drives, and they all peak at around the same speed. If lane 8 really is unused, I wish TerraMaster had given that last lane to one of the M.2 slots.

```text
03:00.0 Non-Volatile memory controller: SK hynix BC511 (prog-if 02 [NVM Express])
    Subsystem: SK hynix BC511
    Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-
    Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
    Latency: 0, Cache Line Size: 64 bytes
    Interrupt: pin A routed to IRQ 255
    IOMMU group: 17
    Region 0: Memory at 80100000 (64-bit, non-prefetchable) [size=16K]
    Capabilities: [80] Power Management version 3
        Flags: PMEClk- DSI- D1+ D2- AuxCurrent=0mA PME(D0+,D1+,D2-,D3hot+,D3cold-)
        Status: D3 NoSoftRst+ PME-Enable+ DSel=0 DScale=0 PME-
    Capabilities: [90] MSI: Enable- Count=1/32 Maskable+ 64bit+
        Address: 0000000000000000  Data: 0000
        Masking: 00000000  Pending: 00000000
    Capabilities: [b0] MSI-X: Enable- Count=32 Masked-
        Vector table: BAR=0 offset=00002000
        PBA: BAR=0 offset=00003000
    Capabilities: [c0] Express (v2) Endpoint, MSI 00
        DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited
            ExtTag- AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 75W
        DevCtl: CorrErr- NonFatalErr- FatalErr- UnsupReq-
            RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+ FLReset-
            MaxPayload 256 bytes, MaxReadReq 512 bytes
        DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-
        LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 <64us
            ClockPM+ Surprise- LLActRep- BwNot- ASPMOptComp+
        LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
            ExtSynch- ClockPM+ AutWidDis- BWInt- AutBWInt-
        LnkSta: Speed 8GT/s, Width x1 (downgraded)
            TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
        DevCap2: Completion Timeout: Range B, TimeoutDis+ NROPrPrP- LTR+
             10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt+ EETLPPrefix-
             EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-
             FRS- TPHComp- ExtTPHComp-
             AtomicOpsCap: 32bit- 64bit- 128bitCAS-
        DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR+ 10BitTagReq- OBFF Disabled,
             AtomicOpsCtl: ReqEn-
        LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-
        LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-
             Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
             Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot
        LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+
             EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-
             Retimer- 2Retimers- CrosslinkRes: unsupported
```
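The key lines are LnkCap (what the drive supports: 8 GT/s, x4) and LnkSta (what was actually negotiated: x1, flagged “downgraded”). A small Python sketch that pulls those two widths out of `lspci -vv` text. It assumes the output format shown above; in practice you would feed it the output of something like `lspci -vv -s 03:00.0`, typically run as root so the capability lists are visible.

```python
import re

# Matches "LnkCap: Port #0, Speed 8GT/s, Width x4, ..." and
# "LnkSta: Speed 8GT/s, Width x1 (downgraded)". "LnkCap2:"/"LnkSta2:" are
# skipped because the colon must directly follow "LnkCap"/"LnkSta".
_LINK_RE = re.compile(r"(LnkCap|LnkSta):\s.*?Speed ([\d.]+)GT/s.*?Width x(\d+)")


def link_widths(lspci_text):
    """Return {"LnkCap": (speed_gt_s, width), "LnkSta": (speed_gt_s, width)}."""
    found = {}
    for kind, speed, width in _LINK_RE.findall(lspci_text):
        found[kind] = (float(speed), int(width))
    return found


def is_width_downgraded(lspci_text):
    """True if the negotiated width is narrower than the device supports."""
    w = link_widths(lspci_text)
    return w["LnkSta"][1] < w["LnkCap"][1]


sample = """
        LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 <64us
        LnkSta: Speed 8GT/s, Width x1 (downgraded)
"""
print(link_widths(sample))          # {'LnkCap': (8.0, 4), 'LnkSta': (8.0, 1)}
print(is_width_downgraded(sample))  # True
```

A drive that supports x4 but trains at x1 is capped at the single-lane ceiling of roughly 985 MB/s, which fits all three test drives peaking at about the same speed.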

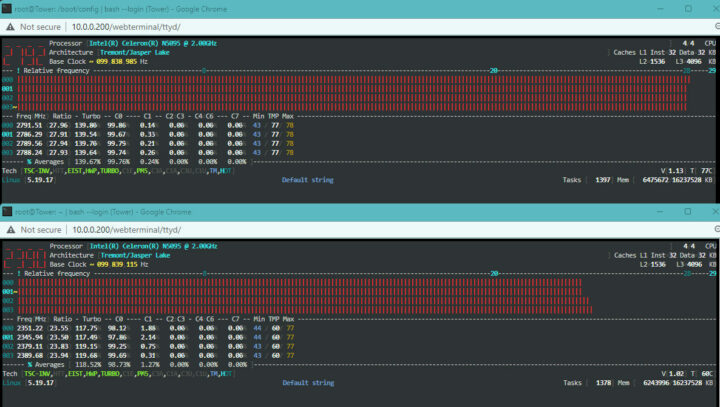

CPU usage & power consumption

The CPU boosts predictably and consistently to 2.8 GHz for a few seconds, then settles at 2.4 GHz.

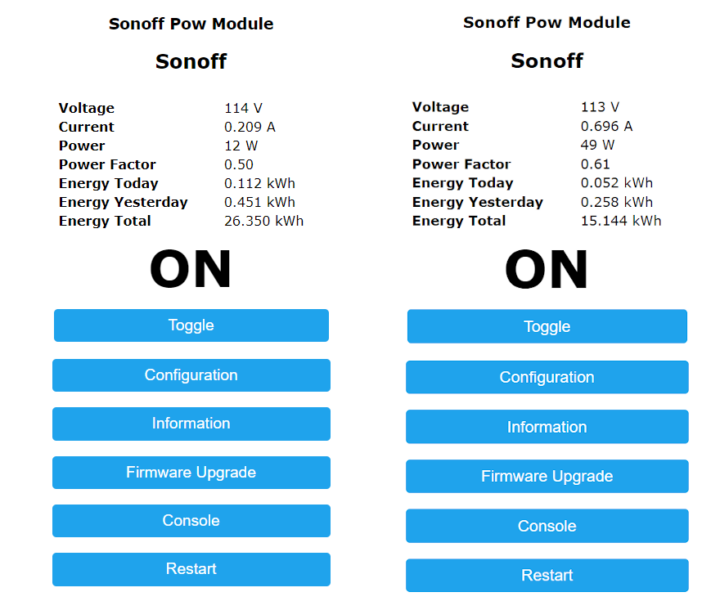

I also tracked power for several weeks with a SONOFF Pow.

Left: lowest power draw idling in Unraid with no drives spun up and C-states enabled. Right: maximum power draw at 100% load with all drives spun up (three hard drives and one SSD).

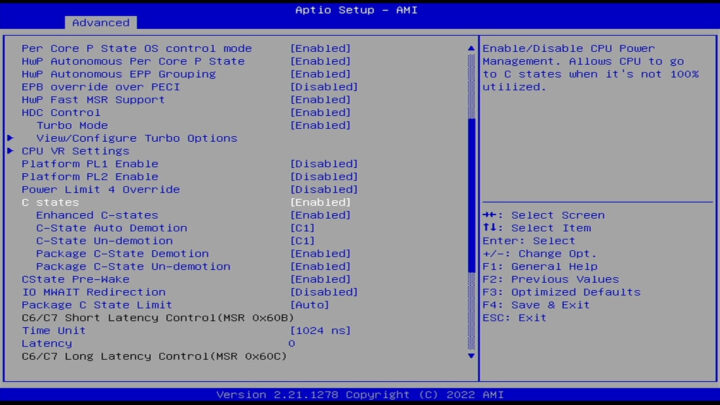

The daily average over 34 days is 0.449 kWh. This includes all the ups and downs over that period, and I expect my typical daily average to be under 0.4 kWh. I ran two parity checks, one for the migration and another after a power outage, and I believe that is why the figure looks a little heavy; each check takes about 11 hours to complete. I only recently enabled C-states in the BIOS, and that appears to have reduced power usage a little more. I will need more time to determine how significant the reduction is and to make sure there are no downsides. Regardless, it sips power in my opinion.
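To put those daily figures in more familiar units, the average draw in watts is kWh per day × 1000 / 24. A quick Python sketch; the yearly projection simply assumes the daily average holds for all 365 days.

```python
HOURS_PER_DAY = 24


def average_watts(kwh_per_day):
    """Mean continuous power draw implied by a daily energy total."""
    return kwh_per_day * 1000 / HOURS_PER_DAY


def yearly_kwh(kwh_per_day, days=365):
    """Naive projection: assumes the daily average stays constant."""
    return kwh_per_day * days


for label, kwh in (("measured (34 days)", 0.449), ("expected", 0.4)):
    print(f"{label}: {average_watts(kwh):.1f} W average, "
          f"{yearly_kwh(kwh):.0f} kWh/year")
```

That works out to roughly 18.7 W on average (about 164 kWh per year) for the measured period, and about 16.7 W (146 kWh per year) at 0.4 kWh per day.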

Unraid notes

Here are my notes from migrating my Unraid installation to the TerraMaster F4-423. It was mostly uneventful. First, I worked out how to boot Unraid. There is a female USB 2.0 Type-A port directly on the board, and the drive plugged into it boots TerraMaster’s software installer. I thought about extending it outside the case, but I have no need for that right now; it remains an option for the future. The only addition I can foresee is a Blu-ray drive to rip movies, and I still have a USB 3.2 port available for that. I tinker a lot, so for now I just removed the TerraMaster drive, stored it, and plugged my Unraid boot drive into one of the external USB ports. A second internal USB header also looks available if needed.

I started cautiously: I created a new Unraid USB drive to test with and installed one spare hard drive in the enclosure. I used typical BIOS boot options and Unraid booted, though POST takes a few seconds longer than on a consumer desktop. I booted and made sure everything was working. Once I was confident everything worked as expected, I cleaned up my production Unraid drive, removing the hardware-specific configs and prepping it for the move. I only missed removing the CPU pinning; otherwise it would have been a perfect first boot. I got an error, so I booted into safe mode, removed the CPU pinning, and all my Docker services were restored.

Transcoding videos on the TerraMaster F4-423

Transcoding was easy to enable in Unraid. I installed two plugins through the App Center: ich777’s Intel GPU-TOP, which is the only thing required, and b3rs3rk’s GPU Statistics for monitoring. Then I passed /dev/dri through to my Docker containers. This CPU has been out for a while, so the hard work has been done and the kinks worked out. I found some older threads and went down some rabbit holes, but ultimately the above is all that is required.
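If hardware transcoding does not kick in, one quick check is whether the container actually sees a GPU render node. A hypothetical Python helper, assuming the usual Linux layout where DRM render nodes appear as /dev/dri/renderD128 and up; run it inside the container.

```python
from pathlib import Path


def render_nodes(dri_dir="/dev/dri"):
    """List DRM render nodes (renderD128, ...) visible under dri_dir.

    An empty list usually means /dev/dri was not passed through to the
    container, or the host GPU driver is not loaded.
    """
    path = Path(dri_dir)
    if not path.is_dir():
        return []
    return sorted(p.name for p in path.iterdir() if p.name.startswith("renderD"))


if __name__ == "__main__":
    nodes = render_nodes()
    print(nodes if nodes else "no render nodes found; check /dev/dri passthrough")
```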

I mostly tested Jellyfin, and transcoding was largely a non-issue. The only caveat is H.265, where my experience did not exactly match what the documentation stated; it is probably a bug. The problem was a 4K H.265 file at 60 fps played in Chrome: according to the log, Jellyfin could only transcode it at a locked 45 fps, which caused issues. If I dropped it to 1080p, it transcoded at 30 fps without pausing. (After seeing a few Jellyfin updates, I decided to check again. This seems to be a moving target: some of the 60 fps H.265 files I had issues with now direct-play in Chrome. I had enabled HEVC direct playback for Chrome originally, but it didn’t work; now it works with some files.) I had no issues with any other codec. That same file streamed to my Shield fine, which it should according to the docs. My movies are all 1080p H.264, and I was able to get six H.264 direct streams going with Jellyfin. I will never need that many; one at a time is the maximum here.
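The reason a locked 45 fps causes pauses on a 60 fps source is simple arithmetic: the transcoder produces video slower than playback consumes it, so the buffer eventually runs dry. A minimal sketch, assuming the 45 fps in the log is the sustained output rate:

```python
def realtime_factor(transcode_fps, source_fps):
    """>= 1.0 means the transcoder keeps up with playback."""
    return transcode_fps / source_fps


def seconds_to_encode(clip_seconds, transcode_fps, source_fps):
    """Wall-clock time needed to transcode clip_seconds of video."""
    return clip_seconds / realtime_factor(transcode_fps, source_fps)


print(realtime_factor(45, 60))        # 0.75
print(seconds_to_encode(60, 45, 60))  # 80.0
```

At 0.75× real time, every minute of video takes about 80 seconds to produce, so playback has to stop and rebuffer regularly.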

Network Connectivity

I don’t have the network gear to test 2.5 GbE. Ethernet networking is handled by an Intel i225-V chip, and it was easy to max out at gigabit speeds. I did try link aggregation, but I could not get it to work with my UniFi switches. I am going to wait a little longer before I upgrade my switches. I want to go straight to 10 Gbps but will probably step up to 5 Gbps switches first.

Wrap-up and random notes

I have had the F4-423 for about 45 days of tinkering, and overall I like it. It is small, attractive, quiet, and sips power. I was expecting a low-power NAS, and that is what I got. Fully decked out, you could populate it with four 20 TB drives, and in Unraid, with one drive used for parity, you would have 60 TB of usable storage, plus a couple of NVMe drives for cache, Docker containers, and VMs. If we say an average Blu-ray is 35 GB, that would correspond to around 1,714 movies stored without re-encoding.
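The capacity math above, as a short Python sketch. It assumes a single parity drive and decimal units (TB = 1000 GB, as drives are sold); the 35 GB per movie is the same rough average used in the text.

```python
def unraid_usable_tb(drive_tb, drives, parity_drives=1):
    # Unraid parity drives hold no user data and must be at least as large as
    # the largest data drive, so with equal drives: usable = data drives × size.
    return drive_tb * (drives - parity_drives)


def movies_that_fit(usable_tb, movie_gb=35):
    # Whole movies only; decimal units (1 TB = 1000 GB).
    return int(usable_tb * 1000 // movie_gb)


usable = unraid_usable_tb(20, 4)
print(usable, movies_that_fit(usable))  # 60 1714
```

With two parity drives, the same four bays would give 40 TB of usable space.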

I upgraded the RAM with a 16GB DDR4-2933 Crucial kit.

Windows boots from an NVMe drive without issue. Device Manager was a mess of unknown devices, but it booted. I did not test any further than this.

I revisited setting up a LAN cache drive, and it worked pretty well, but I had forgotten the one thing that makes it bad for a permanent setup: DNS becomes a single point of failure.

I wanted to test ripping and transcoding but don’t have an optical drive right now. I think it would be a fun project to automate ripping and transcoding movies. From what I am reading, this can be completely automated, and the TerraMaster F4-423 should handle it fine with hardware transcoding.

The TerraMaster F4-423 NAS can be picked up on Amazon for $499 or purchased directly from TerraMaster for $499.

Karl is a technology enthusiast that contributes reviews of TV boxes, 3D printers, and other gadgets for makers.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress

I’ve been looking for a box to run Proxmox Backup Server, and this could be it since the main storage is going to be 3.5″ HDDs.

Karl, would you be willing to flash PBS to a USB drive and try booting it?

Thanks

But you understand that PBS is just an added repo and a bunch of packages added to an otherwise normal Debian or Proxmox installation?

I will test and get back to you

I bought the TerraMaster F4-423 in May 2022 and tested it with 32GB RAM and TrueNAS Scale (installed on the NVMe SSD, I believe), which ran nicely, but I returned it anyway.

The main reason I didn’t find it suitable: the BIOS setting for which device to boot from was reset after every boot cycle. This meant I could not reboot the device remotely, because I had to use a connected keyboard and monitor to enter the BIOS boot menu to actually boot TrueNAS Scale every time.

I contacted TerraMaster support and they informed me the resetting of the BIOS boot device every restart was intended behaviour, because the F4-423 was not meant to run other operating systems.

I decided to return the TerraMaster and buy a QNAP TS-364. It runs TrueNAS Scale just as nicely as the TerraMaster did (with one disk fewer), but doesn’t reset its boot device setting on every reboot.

Did you also encounter this behaviour? Or has TerraMaster changed it with an update anyway?

I will test and reply

To clarify: mine boots fine from USB without interaction and doesn’t reset the BIOS on every boot. I did find that it aggressively boots from the TerraMaster installation USB drive until that drive is removed.

I had the same problem with my TerraMaster F2-423 and OpenMediaVault. What helped me was reinstalling GRUB with:

grub-install /dev/sda --force-extra-removable

I also tried TrueNAS but didn’t have your issue. I’ve only had issues with the date/time settings.

Works with 2x32GB RAM (64GB total).

BIOS version 2.21.1278

BIOS settings do stick, with one exception.

Booting is not as straightforward. The boot order does appear to be unchangeable; it seems to be USB > NVMe > HDD. I was unable to boot from an HDD while an NVMe drive with a bootable partition was installed until I disabled the NVMe drive. This can be overcome, but as Job said, it is an attended boot every time: boot into the BIOS, set the order, and it will work for that boot only. If only one bootable partition is present, this should not be an issue.

Proxmox installs fine.

Conclusion:

Avoid it if you care about your data.

Maybe good as a cheap secondary backup of the main NAS, to hold replication snapshots.

After reading the CPU datasheet, I don’t see how it’s even possible to have 4× 2.5 GbE ports, 2 NVMe, 4 SATA and USB 3.1 Gen 2.

🤷🏿♀️🤷🏿♀️🙄🙄

PCIe 3.0, max 8 PCI Express lanes

No LAN integration

2 integrated SATA ports

And how is it possible that they say the maximum supported RAM is 32GB when the CPU spec says the maximum is 16GB?

Products like this are basically legal scams; they give specs that are really misleading for people with little technical understanding.

If you aren’t afraid of putting $1,000 of HDDs in a cheap case, go ahead.

> I don’t see how it’s even possible to have 4× 2.5 GbE ports, 2 NVMe, 4 SATA and USB 3.1 Gen 2

Just read the review above, since Karl explained everything. The NVMe SSDs are attached with a single lane only, and the two additional SATA ports come from an ancient ASM1061 (also using just a single PCIe lane), which is the same chip millions of motherboards with those Intel Atom thingies already used in the last decade to provide two more SATA ports.

> And how is it possible that they say the maximum supported RAM is 32GB when the CPU spec says the maximum is 16GB?

That’s because Karl missed that 64GB is possible. At least since Gemini Lake, the usual formula for those entry-level Intel SoCs is ‘max capacity by specs’ × 4.

But you need to be careful since in the past DIMMs that were too fast (CAS latency too low) resulted in the CPU not booting: Hardkernel wiki. Maybe that’s why Intel plays safe here?

The 16GB on the spec sheet threw me off too, but I checked, and it isn’t uncommon for Intel to underspec the actual supported RAM.

The review could not have come at a better time. I was looking for a new low-power NAS for home use, and if you want more than 2 drives (and you will want more than 2), you will have to make sacrifices because these Intel based Celeron SoCs (no matter the version) can only support 2 native SATA ports. I know that these days a low-power NAS is the one you want, especially with rising electricity prices. I ended up repurposing my old desktop, which has an ATX motherboard, and roughly doubled my consumption, but at least I am happy it can be a long-term solution.

I highly doubt any newer chipsets will support more SATA drives natively, but I will switch to them if and when they become available. Also, I prefer not to spend 500 USD/EUR when I can reuse existing hardware that is a lot more powerful than any of these NAS devices anyway. Anything I could find locally, used, in mini-ITX form factor and with enough power would cost about the same as or more than my old desktop (which uses the X99 chipset and has 6 cores with 12 threads). I preferred to keep it at the expense of higher power bills, which were not that big to start with.

> these Intel based Celeron SoCs (no matter the version) can only support 2 native SATA ports

Yep, and the simple, obvious solution for more SATA ports is to sacrifice a single PCIe lane for an ASM1061 (as on the F4-423), or nowadays preferably a JMB582 (Gen3 x1), to provide two more SATA ports. Or use a JMB585 or ASM1166 for 5 or 6 ports.

BTW: the Intel SoC’s native SATA ports support SATA port multipliers (PMs), so with a cheap JMB575 you can attach 5 SATA devices to each port.

On my oDroid H2+ it means I have to sacrifice NVMe, and I really love the fast boot times and the overall snappiness of the system when booting from NVMe. I recently learned that there are still ITX motherboards for recent Intel chipsets that have 4 SATA ports, but they sacrifice PCIe expansion, so adding more SATA drives would be difficult.

As written directly above: two (or even 5 or 6) additional SATA ports need just one PCIe lane if you add a SATA HBA, or you can connect an inexpensive SATA PM to an existing SATA port to get 4 additional SATA ports. On a data dumpster, performance is never an issue.
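The trade-off with a port multiplier is that all devices behind it share one SATA link. A rough Python sketch of the worst case, assuming a SATA III host port (6 Gbit/s line rate with 8b/10b encoding) and a 1-to-5 multiplier such as the JMB575, with every drive busy at once (e.g. during a parity check or scrub):

```python
def sata3_mb_per_s():
    # 6 Gbit/s line rate with 8b/10b encoding -> 4.8 Gbit/s of data bits.
    return 6e9 * 8 / 10 / 8 / 1e6  # = 600 MB/s


def per_device_mb_per_s(devices_behind_pm):
    """Worst case: every device behind one port multiplier busy at once."""
    return sata3_mb_per_s() / devices_behind_pm


native_ports, devices_per_pm = 2, 5  # JMB575: one host port -> five device ports
print(native_ports * devices_per_pm)        # 10
print(per_device_mb_per_s(devices_per_pm))  # 120.0
```

Two native ports can therefore serve ten drives, at roughly 120 MB/s each when all are active together; for a mostly idle media store that is usually fine, but it can be below what a single modern HDD manages sequentially.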

> snappiness of the system when booting from

…SSD. It’s the storage media’s technology that makes SSDs ‘snappy’ since they’re great with random I/O unlike HDDs. The protocol (NVMe, SAS, SATA, USB3, even USB2) doesn’t matter that much.

Unfortunately consumers are trained to look at the wrong metric (MB/s and not IOPS) so they make the wrong buying decisions all the time.

> more than 2 drives (and you will want more than 2)

Nope, why would I? Care to elaborate?

I started with a single 8 TB SATA drive, and it filled up sooner than I expected. I added a second drive with a slightly higher capacity, and that filled up too. Buying higher-capacity drives is not a solution, because in my country prices climb steeply with capacity. The best bang for the buck is around 12 TB (16 TB drives are sometimes priced as low as 14 TB ones, but very, very rarely). If I ever want to use RAID 1, then the number of drives doubles. Also, the performance of the low-power NAS sometimes dropped without any heavy disk activity, a lot more often than on my repurposed desktop, which has 6 usable SATA ports as we speak (using NVMe disables 2 SATA ports). This was my experience, and with 3 drives I reached a compromise.

Using several low power NAS devices is not really a solution, it makes sharing and management difficult.

> If I ever want to use RAID 1

Wow, it’s 2023 and ‘RAID is not backup’ is still a thing… and RAID-1 in particular is IMO the most horrible waste of disks possible. Do you back up your data? If not, your NAS is just a data dumpster, since obviously the data has no value and you’re OK losing it at any time.

With valuable data you need backup and that’s at least one other copy at another location with 120%-130% the original’s capacity to store versions.

That’s a point where I think we’ll always disagree 🙂

I’m replacing my home RAID-5 file server with a RAID-1 because it’s sufficient and less of a burden to maintain (i.e. 2 SSDs instead of 3, connected directly to the H2+ without needing any adapter). There are even a few new mobos with two M.2 slots that allow you to connect two NVMe devices, but they appeared just after I bought my H2+ board, otherwise I would have gone for one.

What I value here is indeed availability, since this machine is backed up every day. But when you say “who needs that at home?”, I would say “everyone”. Without RAID, you lose your whole data for as long as it takes to fully restore them, which can take from hours to days depending on the volume. That’s the kind of thing that can ruin your experience or even a whole weekend. With RAID you just go to the local store, buy a replacement for the failed device, take an hour to replace it if a screwdriver is needed, and let it rebuild in the background while the service is operational again. This is why I *do* value RAID1 and RAID5. And at work it has saved us something like 5 times in 20 years, which is not bad at all, considering the alternative of telling employees “go back home until the end of the week, a disk died, data are currently being restored”!

Where I agree with you is when some people consider RAID as backup, and sadly it’s indeed quite common. But I wouldn’t tell them “RAID is bad”, just “RAID is not backup”.

On the other hand, I’ve seen people choose and abuse RAID1 in data centers to speed up server deployments by replicating them. I was first horrified by the principle, but when they asked me “I dare you to indicate me a faster method to deploy a whole rack”, I had to admit that, sadly, they were right, because the deployment speed grows as 2^N without even needing network transfers, so you can deploy 32 servers with one install + 5 replication steps. This is a bit less interesting with today’s large disks, but when it takes 10 min to replicate a 60GB partition at 100MB/s, in less than one hour you’ve indeed done 32 servers. And nowadays with SSDs it’s even better: with 500MB/s replication, it only takes 10 min to replicate everything, so most of the time is spent on hardware manipulation.
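The doubling argument can be checked in a few lines of Python. This sketch assumes each replication step mirrors every already-installed disk onto one fresh disk (so the count doubles per step) and that all mirrors in a step run in parallel at the stated rate.

```python
def replication_steps(servers):
    # Starting from one installed disk, each RAID-1 mirroring step doubles the
    # count, so we need ceil(log2(servers)) steps (exact integer arithmetic).
    return (servers - 1).bit_length()


def total_minutes(servers, image_gb, mb_per_s):
    minutes_per_step = image_gb * 1000 / mb_per_s / 60
    return replication_steps(servers) * minutes_per_step


print(replication_steps(32))       # 5
print(total_minutes(32, 60, 100))  # 50.0
print(total_minutes(32, 60, 500))  # 10.0
```

At 100 MB/s a 60GB image takes 10 minutes per step, so 32 servers need 50 minutes; at 500 MB/s each step drops to 2 minutes, or 10 minutes in total.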

> Without RAID, you lose your whole data for as long as it takes to fully restore them

Nope since I’m neither using anachronistic filesystems like ext4/UFS nor anachronistic tasks like ‘full restore’. It’s 2023 after all 🙂

Already 25 years ago, the amount of data stored in the graphics industry, where I work(ed), exceeded any limit that could be restored in a reasonable time window. Back then we used scripted sync attempts or commercial alternatives like PresStore Synchronize to keep another copy of data on another machine (primitive versioning included) to switch over to that other server in case disaster struck. Of course combined with traditional RAID, since spinning rust fails unpredictably.

With filesystems developed in this century we can now have all of this as part of the filesystem’s inherent features. Both ZFS and btrfs allow for as many snapshots as you would like so you already have versioning as part of the filesystem as one integral building block of any backup. ‘rm -rf’ on a primitive RAID-1 and your data is gone, on ZFS/btrfs it’s a short laugh and reverting back to last snapshot. Same with ‘ransomware’, you simply laugh and don’t care.

To fulfil the other essential requirement of real backup (at least one copy of the data physically separated from the original), both filesystems have send|receive functionality that, unlike primitive rsync, doesn’t need to scan source and destination every time but simply sends the contents of the last snapshot to 1-n other locations in the most efficient way imaginable.

The fine-grained versioning is automagically inherited at the backup location, so all snapshots exist there too. We usually consolidate snapshots on the production storage to not go back further than 3 months but keep a lot more snapshots on the backup systems, so even user requests like ‘can we compare the versions of this doc in 2022 and 2021?’ can be fulfilled. Those versions need minimal additional disk space since snapshots only contain changed blocks, so they’re the same as a ‘differential backup’.
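For illustration, here is what one incremental ZFS replication step looks like. This Python sketch only builds the pipeline string (it does not run anything); the dataset, snapshot and host names are hypothetical placeholders, and it assumes the target already holds the older snapshot from an earlier full send. btrfs has an analogous `btrfs send -p <parent>` form.

```python
import shlex


def incremental_send_cmd(dataset, prev_snap, new_snap, remote, target):
    """Build (not run) a ZFS incremental replication pipeline.

    `zfs send -i` transfers only the blocks that changed between prev_snap
    and new_snap. All names passed in are placeholders.
    """
    send = ["zfs", "send", "-i", f"{dataset}@{prev_snap}", f"{dataset}@{new_snap}"]
    recv = ["ssh", remote, "zfs", "receive", target]
    return f"{shlex.join(send)} | {shlex.join(recv)}"


print(incremental_send_cmd("tank/data", "daily-01", "daily-02",
                           "backup-host", "backup/data"))
```

The printed command is `zfs send -i tank/data@daily-01 tank/data@daily-02 | ssh backup-host zfs receive backup/data`; no file tree is walked on either side, which is the contrast with rsync drawn above.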
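The different retention windows on production and backup can be expressed as a simple age cutoff. A minimal Python sketch: 90 days approximates the “3 months” above, while the 730-day backup window and the snapshot dates are hypothetical examples.

```python
from datetime import date, timedelta


def snapshots_to_prune(snapshot_dates, today, keep_days):
    """Return snapshot dates older than keep_days (candidates for deletion)."""
    cutoff = today - timedelta(days=keep_days)
    return sorted(d for d in snapshot_dates if d < cutoff)


today = date(2023, 3, 1)
snaps = [date(2022, 11, 1), date(2023, 1, 15), date(2023, 2, 28)]
print(snapshots_to_prune(snaps, today, keep_days=90))   # production: ~3 months
print(snapshots_to_prune(snaps, today, keep_days=730))  # backup: hypothetical 2 years
```

The November snapshot falls outside the 90-day window on production but survives on the backup side, which is what makes the year-over-year comparisons possible.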

All of the above are integral parts of the filesystems and all of this works flawlessly.

Maybe it’s time to rethink anachronistic backup strategies and rely on tech made in this century?

Almost forgot: unlike mdraid-1, with a zMirror or btrfs’ raid-1 you get ‘data integrity’ detection/correction for free, and a lot more sources of data loss are eliminated than with primitive/anachronistic RAID-1, which is designed to only take care of a hard failure of a single HDD. An HDD slowly dying while corrupting data en masse will not even be detected!

> Wow, it’s 2023 and ‘RAID is not backup’ is still a thing

Yes, I know about the controversy and want to have some backup (but I don’t have lots of money to throw at it). I have looked it up online, and I will at least back up everything to a secondary drive that will be used only for this purpose and will be spun down when not in use (which will be most of the time).

FWIW I’ve replaced my old backup machines with an Odroid-M1, which makes an excellent local backup machine: mainline support, a powerful but cool CPU (RK3568), gigabit Ethernet, NVMe, SATA, USB3, RTC, eMMC support, etc., and it’s not very expensive. What’s nice about using such a machine with any form of storage is that it doesn’t impose a performance constraint on your main NAS. You just rsync to it nightly, and even if it runs off old spinning rust, it takes the time it takes, but you have a backup to restart from in case the NAS dies, is stolen, or its disks die together. Also, since the CPU supports crypto extensions, you can have inexpensive encrypted on-disk storage.

> FWIW I’ve replaced my old backup machines with an Odroid-M1

I have an oDroid H2+ that I replaced because of the lack of native SATA ports. It could be used as a real, separate backup for the main NAS, but for now it will stay unused for lack of funding.

I read more today and I will use SnapRAID for the current setup instead of RAID 1. oDroid H2+ will have to wait a little longer.

> I have an oDroid H2+ that I replaced because of the lack of native SATA ports.

Huh? N2+?

H2+ is Gemini Lake refresh with the usual 2 native SATA ports that can be combined with SATA port multipliers to turn two SATA ports into ten.

> Huh? N2+?

My wording was wrong. I really meant H2+: it only has 2 native SATA ports, and I needed more than 2 while still using NVMe to boot the OS. I think I will use it in the future as a secondary backup system; 2 large HDDs should be enough for the data I have. I have to draw a line somewhere, otherwise backup costs will increase exponentially with every storage increase. Money is and will always be an issue.

I also think that the smaller case made ventilation a bit of a challenge during the hot season (I was using a Fractal Node 304).

Question:

I’m running a Google Coral in one of the NVMe slots on my current NAS. If I buy this NAS, would the Google Coral work in its NVMe slot? In other words, can you install anything other than NVMe drives in the two NVMe slots?

I run Frigate and I need the Coral to do the AI for me.

Thoughts?