Topton “N5105/N6005 NAS board” is a mini-ITX motherboard powered by an Intel Celeron N5105 or Pentium N6005 Jasper Lake processor, equipped with six SATA 3.0 ports, two M.2 NVMe sockets, and four Intel i226-V 2.5GbE controllers.

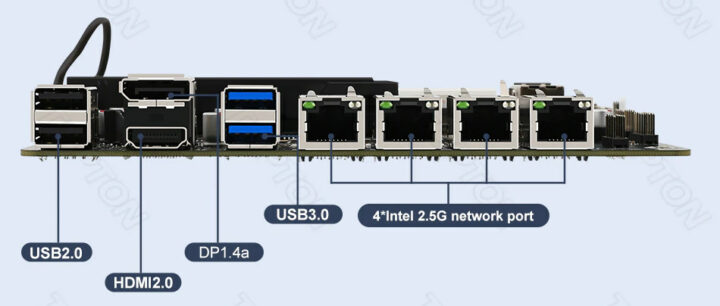

The board also comes with two SO-DIMM DDR4 slots for up to 64GB RAM, HDMI 2.0 and DisplayPort video outputs, and several USB 3.0/2.0 interfaces. As its name implies, it is designed for network-attached storage (NAS), but I could also see it used as a networked video recorder (NVR), a network appliance, or a multi-purpose machine.

Topton “N5105/N6005 NAS board” specifications:

- SoC (one or the other)

  - Intel Celeron N5105 quad-core Jasper Lake processor @ 2.0GHz / 2.9GHz (Turbo) with 24EU Intel UHD graphics @ 450 / 800 MHz (Turbo); 10W TDP

  - Intel Pentium N6005 quad-core Jasper Lake processor @ 2.0GHz / 3.3GHz (Turbo) with 32EU Intel UHD graphics @ 450 / 900 MHz (Turbo); 10W TDP

- System Memory – 2x DDR4 SO-DIMM slots for up to 64GB 2933 MHz (2x 32GB) non-ECC memory

- Storage

  - 2x M.2 NVMe PCIe 3.0 socket (2280)

  - 6x SATA 3.0 connectors, five of which are implemented through a JMicron JMB585 PCIe Gen3 x2 to 5x SATA 3.0 bridge

- Video Output

  - HDMI 2.0 up to 4Kp60

  - DisplayPort 1.4a up to 4Kp60

- Audio –

- Networking – 4x 2.5GbE RJ45 ports via Intel i226-V controllers

- USB – 2x USB 3.0 Type-A ports, 2x USB 2.0 Type-A ports, 2x internal USB 2.0 Type-A host ports, USB 2.0 interface(s) via header

- Misc

  - UEFI BIOS with support for auto power on, WoL, PXE

  - System and case fan connectors

  - Buzzer

  - TPM connector

  - “ESPi” (probably eSPI?) digital card debugging header

  - Front panel header

- Power Supply – 24-pin + 4-pin ATX connectors

- Dimensions – 17 x 17 cm (Mini-ITX motherboard)

The company will install Windows 10 Pro by default when ordered with storage and memory, but users can also request Windows 11 Pro. A system ordered with less than 32GB RAM will only come with one memory stick, so no dual-channel memory.

Topton also highlights that the Intel i226-V is a new network controller and that operating systems like pfSense may not include a compatible driver yet, and they ask users not to open a dispute in case of such an (operating) system issue.

Topton “N5105/N6005 NAS” Jasper Lake mini-ITX motherboard is sold for $195.76 to $472.18 depending on the selected processor, and memory & storage capacities.

Via Liliputing

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


Finally! Vendors are starting to hear clients asking for hardware redundancy on SSD! I’ve seen a number of ugly hacks when trying to find a solution to this, and it’s nice that the situation is evolving.

> hardware redundancy on SSD

For what exactly? Slapping into both M.2 slots identical SSDs with identical firmware and identical ‘power on hours’ to really ensure if those SSDs ever fail they will fail at exactly the same time?

SSDs die for totally different reasons than spinning rust, for example because of firmware bugs like this. (I know people who had downtime caused by this bug since they played RAID-1 with absolutely identical SSDs, which is IMO both wasted resources and a recipe for failure.)

I’ve heard this argument before. The obvious solution seems to be don’t use identical drives.

Can be a hard task if you’re doing a RAIDz out of many SSDs.

The alternative is to make sure all involved SSDs have different ‘power on hours’ (differing by at least 24 hours, preferably more) and that a substantial but differing amount of data has been written to each of them (the same stupid integer overflow bugs can happen with the amount of written data).
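As a rough sketch of that check, assuming the power-on hours have already been read from each drive's SMART data by whatever tool you prefer: the drive names, the readings, and the `too_close_pairs` helper below are all made up for illustration, and the 24-hour gap is simply the minimum suggested in the comment.

```python
from itertools import combinations


def too_close_pairs(power_on_hours, min_gap_hours=24):
    """Return pairs of drives whose power-on hours differ by less than min_gap_hours."""
    pairs = []
    # Compare every drive against every other drive exactly once.
    for (a, hours_a), (b, hours_b) in combinations(sorted(power_on_hours.items()), 2):
        if abs(hours_a - hours_b) < min_gap_hours:
            pairs.append((a, b))
    return pairs


# Hypothetical readings, e.g. copied by hand from each drive's SMART report.
drives = {"nvme0": 1200, "nvme1": 1210, "sda": 5400}

for a, b in too_close_pairs(drives):
    print(f"{a} and {b} have nearly identical power-on hours")
```

The same comparison could be repeated for the total amount of data written, since the comment points out that counter can trigger the same class of bug.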

I know this, and will be careful to use different batches or different ages (e.g. reuse one picked from another machine), but even without it, RAID already saved my data a few times in the past. While hard drives *sometimes* start to show signs of aging before dying, SSDs die without any warning. The device hangs in the middle of operation, and disappears forever. I absolutely want to have a chance of keeping my machine working for the time it takes to order a new one, especially given the ridiculous price of a 500GB SSD nowadays. I’d probably even mix a good one and a low-end one to be sure to have completely different components (hard+soft).

Always label each drive in an array with its first active on-line service start date. Replace any drive over 5 years old even if there is no failure.

If you’re just starting a new RAID 1 array, buy 3 drives and use one for off-site backup. Rotate the oldest drive to off-site backup every few years.
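For those who prefer a script to sticky labels, here is a minimal sketch of the same bookkeeping. The drive labels and dates are invented, and the helper names are hypothetical. The five-year threshold is the one from the comment above.

```python
from datetime import date

MAX_AGE_YEARS = 5  # replacement threshold suggested in the comment above


def age_years(start, today):
    """Approximate age in years between two dates."""
    return (today - start).days / 365.25


def drives_to_replace(start_dates, today):
    """Labels of drives that have been in service longer than MAX_AGE_YEARS."""
    return sorted(
        label for label, start in start_dates.items()
        if age_years(start, today) > MAX_AGE_YEARS
    )


def oldest_drive(start_dates):
    """Label of the drive with the earliest service start date (rotation candidate)."""
    return min(start_dates, key=start_dates.get)


# Hypothetical labels and first-service dates.
labels = {
    "disk-a": date(2017, 3, 1),
    "disk-b": date(2021, 6, 15),
    "disk-c": date(2023, 1, 10),
}
today = date(2024, 1, 1)

print("replace:", drives_to_replace(labels, today))
print("rotate to off-site backup:", oldest_drive(labels))
```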

There are always counter-examples. My home server was built in 2010 with 3 Intel X25-M 160GB SSDs. One of them died after 3 years, and I replaced it. Since then, no other incident. Knocking on wood, but 12 years of uptime with SSDs constantly writing traffic logs and incoming e-mails looks impressive. I don’t know how long new ones will last; MLC doesn’t seem to exist (or to be affordable) anymore and I’ll have to use TLC at best. It might even be possible that new ones would die before these ones if I changed them now. That’s always a difficult choice. But I’ll try with 2×500 in RAID1 I guess, even if I don’t need 500GB storage.

Two M.2 ports is nice for other reasons, too. E.g. on an NVR machine, I’d stuff an SSD in one and an NPU in another for on-NVR video analytics. (Ideally it’d support bifurcating into 4 separate 1x devices, and someone would design a 4x Coral TPU M.2 card that takes advantage of that.)

Speaking of NVR applications: where does one find specs on H.264/H.265 decode for Intel UHD graphics?

Hello my friends, I bought this motherboard to build a mini NAS, but I cannot install Xpenology. Does anyone know what the problem is? When I run the Xpenology bootloader (Tinycore-redpill V0.9.2.9), the system does not get a LAN IP, and I cannot find this PC on the LAN from another PC. Maybe LAN needs to be turned on in the BIOS? The bootloader works fine on another PC. I don’t know what the problem is. Please help me.

Hello, I had this problem. It was my ISP’s modem/router box that would not assign me an address (poor DHCP handling). I went through a TP-Link router instead and it works.

> As its name implies, it is designed for network access storage (NAS)

IMO it is strange to add four 2.5GbE controllers to a NAS. Why not, for the same money, use one Aquantia AQtion AQC107 (now Marvell) or one of its successors, to also be able to use 5GbE and 10GbE if needed?

The motherboard would also be nice to use for a firewall. However, the combination of Intel ME and Intel NIC is less nice.

How good are the NICs from Aquantia? How is the compatibility with FreeBSD and Linux?

These Aquantia chips appeared in inexpensive 10GbE cards from ASUS, QNAP and others years ago (example review) and are one of or even the main reason for bringing the price level of 10GbE gear down back then.

I saw the first in an iMac Pro we bought early 2018 and all my personal experiences are with macOS only (no problems). As far as I know no problems with Linux 4.11 or above either. No idea about other OS.

Marvell moved to a second generation last year, that’s more compact and doesn’t seem to run as hot. They also have an option to use a single PCIe 4.0 lane, or two PCIe 3.0 lanes, depending on the SKU. I’ve had a pair of Aquantia cards for a few years now, that required a firmware update to work with my Ryzen 7 1700 at the time, but haven’t missed a beat since.

Thanks.

From a power consumption perspective, it’s always better if the network chip is directly on the motherboard and you don’t have to use PCIe cards. With PCIe cards, power consumption always goes up, and you often can’t reach the deeper C-states anymore. This is usually only noticeable when you build really low-consumption x86_64 computers. The prerequisites are really low-consumption motherboards (of which there are only a few) and very efficient power supplies (ironically, exactly these are usually rated for more than 400 watts). But then you can get below 10 watts and even towards 5 watts at idle without hard disks, while having ECC RAM, the x86_64 software selection, and really a lot of power when needed. And then it is important to use Linux. With BSD you don’t really get deep into the C-states.
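One way to get a first impression of idle behaviour on Linux is to read the kernel's cpuidle counters from sysfs. The sketch below is only that, a sketch: it reports per-core idle states for a single CPU as a share of that CPU's total idle time, whereas the package-level C-states that add-in cards tend to block are better inspected with tools such as powertop or turbostat. The function names are made up for this example.

```python
from pathlib import Path


def read_cpuidle(cpu_dir):
    """Return {state_name: residency_in_microseconds} for one CPU's cpuidle directory."""
    residency = {}
    for state in sorted(Path(cpu_dir).glob("state*")):
        name = (state / "name").read_text().strip()
        # "time" holds the total time spent in this idle state, in microseconds.
        residency[name] = int((state / "time").read_text().strip())
    return residency


def residency_share(residency):
    """Convert absolute residencies into fractions of the total idle time."""
    total = sum(residency.values())
    return {name: (usec / total if total else 0.0) for name, usec in residency.items()}


if __name__ == "__main__":
    path = Path("/sys/devices/system/cpu/cpu0/cpuidle")
    if path.is_dir():
        for name, share in residency_share(read_cpuidle(path)).items():
            print(f"{name}: {share:.1%} of idle time")
    else:
        print("cpuidle sysfs interface not available on this system")
```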

If you care that much about power usage, then you simply wouldn’t be using 10 Gbps Ethernet, would you?

I have one of the similar firewall boards with 4 ports. The BIOS on them has a lot of settings, maybe some kind of debug mode. And there are a lot of ME settings too. I suspect it can be turned off:

https://evadim.ru/IMG20221014152434.jpg

https://evadim.ru/IMG20221014152456.jpg

If you can flash CoreBoot, then ME should be gone. Maybe your device is identical to Protectli and you can use their CoreBoot?

I flashed Coreboot to an X220t before, but now I don’t understand how to flash it. I see no manual for this.

If you give me a link, maybe I can try.

But such hardware changes rapidly.

https://protectli.com/kb/coreboot-on-the-vault/?intsrc=eu

In the link there is a tutorial.

I have flashed my small firewall box, which is identical in hardware to the 4fwb, with CoreBoot without any issues. But that was a long time ago and I don’t remember exactly how I did it.

If I ever buy new hardware again, it will probably be directly from Protectli, since I want to support them in CoreBoot development as well.

As far as I can see, they use different processors. I think it’s better to buy from them if Coreboot is needed.

I bought one a few months ago to test the 2.5G NIC of my Rock5B, and was pleased to see it work very well, with all regular offloading supported, saturating the 10G link without sweating and not running too hot (there’s a heatsink covering the board but it’s a small board). I found that it was a pretty good deal for the price, and if you factor in how hot some Intel 10G NICs run with RJ45, that’s pretty reasonable. However these NICs are particularly hard to find these days. Probably there isn’t that much demand.

Hard to find Aquantia NICs? Maybe where you live, but even TP-Link makes them these days and they seem to be the cheapest ones at the moment. Not seeing them as hard to find at all.

It was a TP-Link that I found and all the (rare) local ones were out of stock. I finally ordered it from China or somewhere like this while I live in France. Maybe they just became too popular at the moment I was interested in them and stocks went low for some time.

Asus, Gigabyte, Asustor, Edimax, Trendnet, Delock, QNAP, Synology, Zyxel, Sonet and StarTech all have 10 Gbps cards based on Aquantia/Marvell chips.

Good to know. For some it was not easy to figure what chip they were using. Others have/had insane 3-digit prices. It’s great that this chip arrives in consumer products, it’s likely one of the most reasonable options to generalize 10G at home.

Makes more sense to me

i think you mean ddr4 not ddr2?

Hm, I already ordered one of them 😀

Also, the shop on Aliexpress is named Topton, but in the article I see Topcon. Typo?

Please let us know what it’s like.

Biggest concern is UEFI updates.

For sure, no. I don’t even know who to ask about updates. And they revise the hardware very fast, so I’m concerned whether it would still work after an update.

Suddenly, I found the manufacturer: CWWK. And they do provide BIOS updates: https://www.changwang.com/down/list-159.html

And even have support, according to ServeTheHome forums.

GORGE = COM header

MAKER BURNER = LAN-status LED connector

Isn’t there AMD and ARM at that price point but with ECC?

I wish!

The rk3568 can theoretically get close to this at a similar price point, but no NAS-oriented rk3568 boards have shown up on the market, yet.

Main differences would be:

https://wiki.pine64.org/wiki/User:CounterPillow/NAS-Board

> ECC RAM

This looks good according to Rockchip_Developer_Guide_HAL_DDR_ECC_CN.txt, aside from the consequences of UE (BGA soldering or throwing away the board?).

Though side-band ECC RAM doesn’t seem to be available with RK3588 which otherwise would be a nice(r) RK3568 replacement.

BTW: ASM1166 also exists now so one SATA disk more 🙂

Think I can connect a GPU with some sort of adapter to one of those M.2 slots? If so, this would make a heck of a Plex server.

> Think I can connect a GPU with some sort of adapter to one of those M.2 slots?

Sure, PCIe is what’s spoken in this slot. But at only a fourth of the potential speed, since Jasper Lake SoCs feature only eight PCIe Gen3 lanes and there are already five PCIe-attached network and storage controllers, so each M.2 slot carries only a single Gen3 PCIe lane instead of four as usual.
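The lane arithmetic behind that answer can be written out as a back-of-the-envelope sketch. The eight-lane total comes from the comment and the x2 link for the JMB585 from the specifications. One lane per i226-V controller is an assumption made here, as is the idea that the leftover lanes are split evenly between the two M.2 slots.

```python
TOTAL_LANES = 8     # PCIe Gen3 lanes on Jasper Lake, per the comment above
NIC_COUNT = 4       # Intel i226-V controllers on the board
LANES_PER_NIC = 1   # assumption: each controller uses a single lane
JMB585_LANES = 2    # PCIe Gen3 x2 SATA bridge, per the specifications
M2_SLOTS = 2


def lanes_per_m2_slot():
    """Lanes left for each M.2 slot once NICs and the SATA bridge are served."""
    used = NIC_COUNT * LANES_PER_NIC + JMB585_LANES
    return (TOTAL_LANES - used) // M2_SLOTS


def gen3_bandwidth_mb_s(lanes):
    """Theoretical PCIe Gen3 throughput in MB/s: 8 GT/s per lane, 128b/130b encoding."""
    return lanes * 8e9 * (128 / 130) / 8 / 1e6


lanes = lanes_per_m2_slot()
print(f"lanes per M.2 slot: {lanes}")
print(f"x{lanes} link: about {gen3_bandwidth_mb_s(lanes):.0f} MB/s")
print(f"x4 link: about {gen3_bandwidth_mb_s(4):.0f} MB/s")
```

Under these assumptions each slot ends up with one lane, or roughly 985 MB/s before protocol overhead, which is the "fourth of the potential speed" compared to a regular x4 M.2 slot.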

https://github.com/ThomasKaiser/Knowledge/raw/master/media/rock5b-with-4x10gbe-nic.jpg