Sphery vs. shapes is an open-source 3D raytraced game written in C and translated into an FPGA bitstream that runs about 50 times more power-efficiently on FPGA hardware than on an AMD Ryzen processor.

The Verilog and VHDL languages typically used on FPGAs are not well suited to game development or other complex applications. Instead, Victor Suarez Rovere and Julian Kemmerer relied on Julian’s “PipelineC” C-like hardware description language (HDL) and Victor’s CflexHDL tool, which includes a parser/generator and a math types library, so that the same code runs on a PC with a standard compiler and on an FPGA through a custom C-to-VHDL translator.
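To give a feel for what "the same code on both targets" can look like, here is a minimal sketch of a per-pixel function written in plain C. It is not taken from the game: the names `vec3`, `ray_hits_sphere` and `shade_pixel`, the camera setup, and the sphere position are all hypothetical, and whether PipelineC/CflexHDL would accept this exact code unchanged is an assumption. The point is only that a pure function with no pointers, recursion, or dynamic memory compiles with any standard C compiler.

```c
#include <stdint.h>

/* Hypothetical 3D vector type, for illustration only. */
typedef struct { float x, y, z; } vec3;

static float dot3(vec3 a, vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

static vec3 sub3(vec3 a, vec3 b)
{
    vec3 r = { a.x - b.x, a.y - b.y, a.z - b.z };
    return r;
}

/* Returns 1 if the ray origin + t*dir (t >= 0) hits the sphere, else 0.
 * dir does not need to be normalized, so no square root is required:
 * the quadratic a*t^2 + 2*b*t + c = 0 has real roots when b*b - a*c >= 0. */
static int ray_hits_sphere(vec3 origin, vec3 dir, vec3 center, float radius)
{
    vec3 oc = sub3(origin, center);
    float a = dot3(dir, dir);
    float b = dot3(oc, dir);
    float c = dot3(oc, oc) - radius * radius;
    if (c <= 0.0f) return 1;           /* origin is inside the sphere */
    if (b * b - a * c < 0.0f) return 0; /* no real intersection */
    return b < 0.0f;                   /* both roots are in front only if b < 0 */
}

/* Pure per-pixel function: the same inputs always give the same output.
 * Camera at the origin looking along +z; one sphere at (0, 0, 3), radius 1. */
static uint8_t shade_pixel(int x, int y, int width, int height)
{
    vec3 origin = { 0.0f, 0.0f, 0.0f };
    vec3 center = { 0.0f, 0.0f, 3.0f };
    float half_w = (float)width / 2.0f;
    float half_h = (float)height / 2.0f;
    vec3 dir = { ((float)x - half_w) / half_h, ((float)y - half_h) / half_h, 1.0f };
    return ray_hits_sphere(origin, dir, center, 1.0f) ? 255 : 0;
}
```

On a PC, a frame is produced by calling `shade_pixel` in a loop over `x` and `y`; in hardware, the idea is that such a function becomes a pipeline that is fed one pixel coordinate per clock.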

More details about the game development and results are provided in a white paper.

Several math functions were needed, including floating-point addition, subtraction, multiplication, division, reciprocals, square roots, inverse square roots, vector dot products, and vector normalization. Fixed-point counterparts were also used for performance reasons and to make the design easier to fit in the target FPGA, with the corresponding conversions to and from other types (integers and floats).
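As an illustration of what fixed-point counterparts and their conversions involve, here is a minimal sketch in plain C. The Q16.16 format (16 integer bits, 16 fractional bits) and every name below are assumptions made for the example; the article does not say which formats or function names the project's math library uses.

```c
#include <stdint.h>

/* Hypothetical Q16.16 fixed-point type: value = raw / 65536. */
typedef int32_t fixed_t;
#define FIXED_FRAC_BITS 16
#define FIXED_ONE ((fixed_t)1 << FIXED_FRAC_BITS)

/* Conversions to and from integers and floats (truncating toward zero). */
static fixed_t fixed_from_int(int32_t i)   { return (fixed_t)(i * FIXED_ONE); }
static int32_t fixed_to_int(fixed_t a)     { return a / FIXED_ONE; }
static fixed_t fixed_from_float(float f)   { return (fixed_t)(f * (float)FIXED_ONE); }
static float   fixed_to_float(fixed_t a)   { return (float)a / (float)FIXED_ONE; }

/* Addition and subtraction are plain integer operations. */
static fixed_t fixed_add(fixed_t a, fixed_t b) { return a + b; }

/* Multiplication needs a 64-bit intermediate, then rescaling by 2^16. */
static fixed_t fixed_mul(fixed_t a, fixed_t b)
{
    return (fixed_t)(((int64_t)a * b) / FIXED_ONE);
}

/* Division pre-scales the dividend; the caller must ensure b != 0. */
static fixed_t fixed_div(fixed_t a, fixed_t b)
{
    return (fixed_t)(((int64_t)a * FIXED_ONE) / b);
}
```

The appeal for an FPGA is that these operations map onto integer adders and multipliers, which generally take far fewer logic resources than full floating-point units, at the cost of a fixed range and precision.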

They compiled the game twice: once to run on a 7nm Ryzen 4900H 8-core/16-thread processor @ up to 4.4GHz (45W TDP) running Linux, and once optimized to run on FPGA hardware, namely a Digilent Arty A7-100T board with a 101k LUT FPGA (Xilinx Artix-7 XC7A100TCSG324-1).

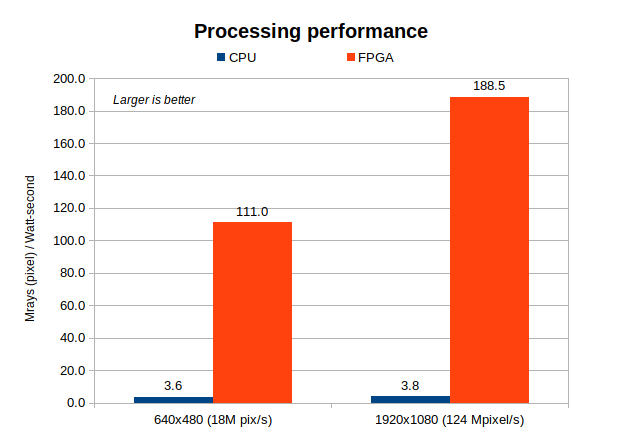

Both platforms could run the game smoothly, and the FPGA solution could render it at 60 fps at 1920×1080 resolution. The main difference was power consumption: the FPGA board consumed only 660 mW, while the PC drew 35W. Note that, as I understand it, the game does not use the GPU in the Ryzen CPU at all, but SIMD instructions were used to speed up the game. A similar game relying on the GPU for 3D graphics acceleration might consume less power, but still significantly more than the FPGA board. On the other hand, the FPGA used was fabricated on a 28nm process, and up to 6 times efficiency gains could be expected on an FPGA built on the same 7nm process as the Ryzen CPU.
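The "50 times" figure follows directly from the two power numbers, as the small sketch below shows. The function names are made up for the example, and the energy-per-pixel figure rests on two assumptions: that the whole 660 mW board power is attributed to rendering, and that only the 1920×1080 visible pixels are counted.

```c
/* Ratio between the PC power draw and the FPGA board power draw. */
static double power_ratio(double pc_watts, double fpga_watts)
{
    return pc_watts / fpga_watts;
}

/* Visible pixels rendered per second at a given resolution and frame rate. */
static double pixels_per_second(int width, int height, int fps)
{
    return (double)width * (double)height * (double)fps;
}

/* Energy spent per pixel, in nanojoules, for a given power draw. */
static double nanojoules_per_pixel(double watts, double pixels_per_sec)
{
    return watts / pixels_per_sec * 1e9;
}
```

With the article's numbers, `power_ratio(35.0, 0.66)` comes out at about 53, which matches the roughly 50x claim. At 60 fps and 1920×1080, the FPGA renders 124,416,000 pixels per second, so `nanojoules_per_pixel(0.66, pixels_per_second(1920, 1080, 60))` gives about 5.3 nJ per pixel under the assumptions above.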

You can watch the video below for an explanation of the design and a demo of the Sphery vs. shapes 3D raytracing game simulated on the CPU and running on the Arty A7 FPGA board.

You’ll find more details on the PipelineC-Graphics GitHub repository. While the graphics demo is pretty cool, the white paper also explains that PipelineC could be used for other projects or products with hard real-time and/or low power requirements. Those include aerospace applications where power and weight come at a premium, industrial control systems requiring high reliability and real-time processing, lighter virtual/augmented reality headsets, packet filtering in networking applications, and security & cryptographic applications.

In the future, examples for all the above-referenced applications will be implemented together with a RISC-V CPU and simulator. They also plan to design an ASIC with open source silicon IP and open source tools and do a tape out.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


FPGAs are slow! I suspect Linux is getting in the way!

AMD will have a Xilinx AI accelerator in Phoenix Point APUs. I’m not sure if that is an FPGA or more of an ASIC. They clearly want to put FPGAs in Xeons, but it seems like they could come to consumers in some form too.

Of course an FPGA is going to be more efficient. For one, once it’s programmed, it for all intents and purposes becomes a purpose-built device for running a specific task, whereas a PC remains a general-purpose device. Secondly, a PC is running a lot of extra stuff, both in terms of hardware-peripherals and in terms of software, including a big, complex OS.

A comparison between the FPGA and one or another high-performance microcontroller would’ve made more sense.

If I’ve understood the white paper correctly, they had to optimize the code to run on the AMD Ryzen processor at an acceptable frame rate. A microcontroller would not have been able to handle that game.

I was tracking with you until:

“A comparison between the FPGA and one or another high-performance microcontroller would’ve made more sense.”

Huh? I personally think it’s *very* illustrative to see a project that reminds us what price we’re paying for all that OS layer BS, and that asks us a provocative question: “Are you sure it’s worth it?” Certainly for many applications the tradeoff isn’t important (or the OS adds too much value), but for others, especially in this cloud era we’re in (some of the cloud vendors are making FPGAs available now), it very well might be an important design consideration. Put your OS where it matters, and then thunk over to another platform that doesn’t have that burden for high performance; the code pipeline can be the same, or similar, regardless of whether there’s an OS wasting time or not. That’s potentially an important option for a software engineer working on a tight performance budget.

Exactly. One is “compiled code” to run on a CPU, the other is “synthesized dedicated hardware” running in a gate array.

There is very little that you could compare and maintain relevance. For example an ASIC would be even faster. So what? I’ve heard engineers who couldn’t grasp that “HDL code” is not binary code. I don’t think it’s great to encourage this ambiguity through all this dubious terminology (C-like HDL???)

The operating system is totally irrelevant for the efficiency, as it likely uses significantly less than 1 percent of the CPU.

The FPGA is working in a massively parallel fashion, and only has to run at 148.5 MHz. All operations are hardwired and happen in a fixed order. The Ryzen has to run at 4.5 GHz, and at its highest core voltage. It needs very performant caches, branch predictors etc. to reach these frequencies.

Comparison with a GPU would be interesting.

Poorly worded, as the only impressive thing in this study is C to FPGA conversion.

Haha, are you gonna say trucks have more carrying capacity than race cars next? Try comparing it to a GPU.

Hi, author here. To compare with a GPU we’ll need to run the project in a device fabricated in the same process node (let’s say 7nm), something that we plan to do, and we expect about 6X more gains, as analyzed in the paper. But the main aspect here is that a GPU is really limited in the kind of work it can handle, always requiring a fully parallelizable task, while a circuit that you freely define doesn’t have any such limit. All digital computing nowadays is an interconnection of gates and you can express that in C with our tool, quickly testing your development advances with a regular compiler that works as a kind of logic simulator.

Thank you for this very interesting work!

Just thinking, is Intel behind this comparison? Because this looks more like a GPU task.

Nope, we’re independent developers and researchers