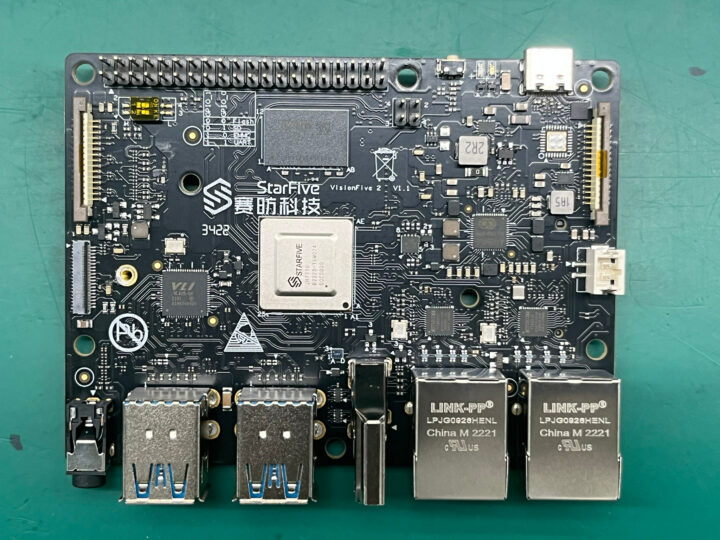

As expected, StarFive has officially unveiled the JH7110 quad-core RISC-V processor with 3D GPU and the VisionFive 2 SBC. I just did not expect the company to also launch a Kickstarter campaign for the board, and the version with 2GB RAM can be had for just about $46 for “early birds”.

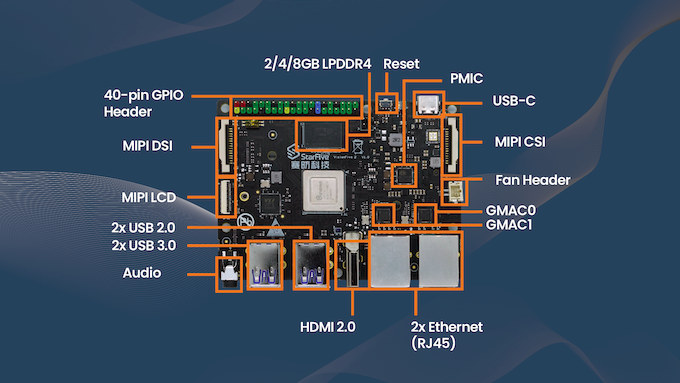

The VisionFive 2 ships with up to 8GB RAM, HDMI 2.0 and MIPI DSI display interfaces, dual Gigabit Ethernet, four USB 3.0/2.0 ports, a QSPI flash for the bootloader, as well as support for an eMMC flash module, M.2 NVMe SSD, and microSD card storage.

- SoC – StarFive JH7110 quad-core 64-bit RISC-V (SiFive U74 – RV64GC) processor @ up to 1.5 GHz with

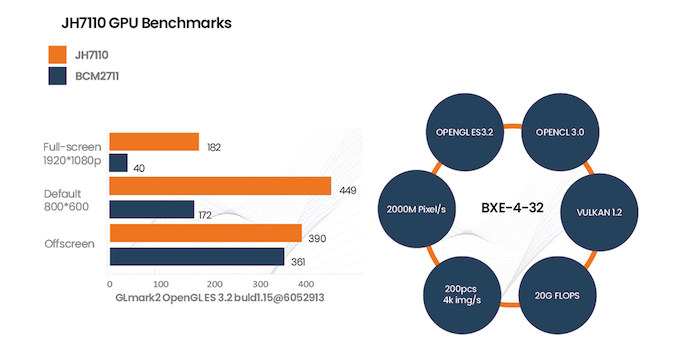

- Imagination BXE-4-32 GPU supporting OpenGL ES 3.2, OpenCL 1.2, Vulkan 1.2

- 4Kp30 H.265/H.264 video decoder

- 1080p30 H.265 video encoder

- System Memory – 2GB, 4GB, or 8GB LPDDR4

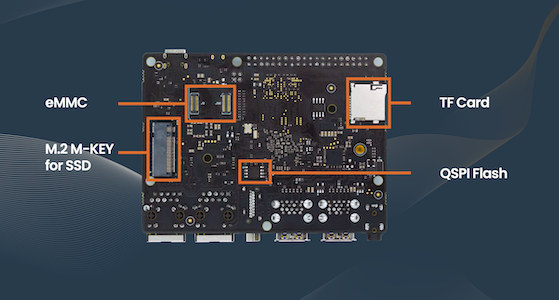

- Storage – MicroSD card slot, eMMC flash module socket, M.2 M-Key socket for NVMe SSD, QSPI flash for U-boot

- Video Output

- HDMI 2.0 port somehow limited up to 4Kp30

- 4-lane MIPI DSI connector up to 2Kp30

- 2-lane MIPI DSI connector

- Camera I/F – 2-lane MIPI CSI camera connector up to 4Kp30

- Audio – 3.5mm audio jack

- Networking – 2x Gigabit Ethernet RJ45 ports

- USB – 2x USB 3.0 ports, 2x USB 2.0 ports

- Expansion – 40-pin GPIO color-coded header

- Misc – Reset button, fan header, debug header

- Power Supply

- Via USB-C port with PD support up to 30W

- 5V DC via GPIO header (3A+ required)

- PoE via additional module

- On-board PMIC

- Dimensions – 100 x 72 mm (Pico-ITX form factor)

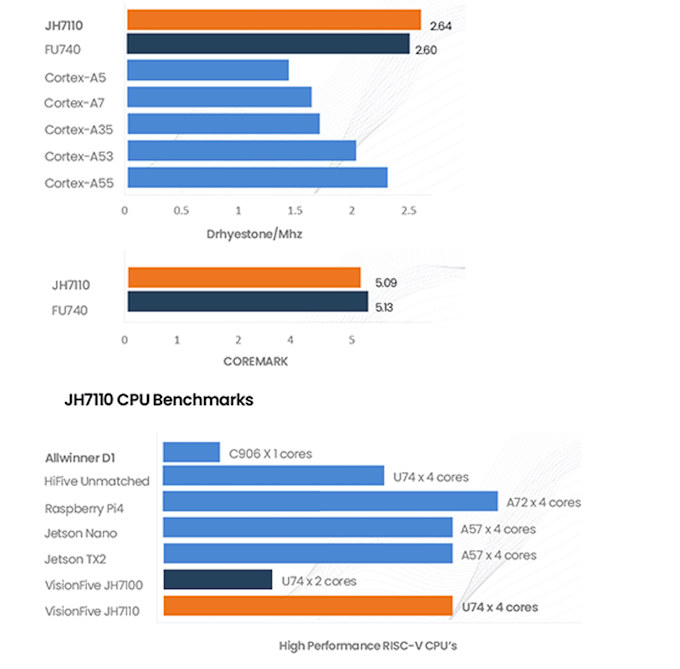

StarFive will provide Debian and Fedora operating system images for the board. The company also provides some interesting benchmarks showing the CPU performance is actually higher than Cortex-A55-based chips, but not quite up to the performance of the Broadcom BCM2711 quad-core Cortex-A72 processor used in the Raspberry Pi 4.

While the selection of an Imagination GPU will make some disappointed, considering the company’s history with regard to open source, StarFive has made sure to select a GPU whose performance puts the VideoCore VI to shame…

There are several options to get the board. The “Super Early Bird” comes with 4GB for only $49, but one of the Ethernet ports is limited to 10/100M. The early bird and Kickstarter special are the same and match the specifications listed above (including 2x GbE), but at different prices with 2GB, 4GB, or 8GB RAM options.

The Innovator Package offers the best price per unit, but you’d need to pledge over $10,000 for a bundle of 140, 180, or 200 boards.

The VisionFive 2 rewards with 4GB RAM are expected to ship in November 2022, while the 2GB and 8GB models should come out in February 2023, so you may want to take this into account. Shipping adds about $9, which is not excessive, and the StarFive VisionFive 2 SBC should be the first truly affordable, capable RISC-V Linux SBC on the market.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


> The company also provides some interesting benchmarks showing the CPU performance is actually higher than Cortex-A55

Huh? Dhrystone is a compiler benchmark, it’s pretty easy by using different compiler versions or flags to generate DMIPS scores on the same hardware that differ by 100% or even more.

When done correctly, as one of your readers did, the ARM ratings differ, but it’s impossible to use them for platform comparisons in the way presented here.
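For context on how such figures are produced: by convention, a DMIPS score is the measured Dhrystone iteration rate divided by 1757 (the VAX-11/780 reference machine, defined as 1 MIPS), and DMIPS/MHz divides that again by the core clock. The minimal sketch below assumes exactly that convention. The two "builds" are made-up placeholder rates, not real measurements. It shows that anything that changes the measured loop rate, compiler version and flags included, feeds straight into the published DMIPS/MHz figure.

```python
# Conventional Dhrystone normalisation: the VAX-11/780 ran 1757 Dhrystone
# iterations per second and is defined as 1 MIPS.
VAX_11_780_DHRYSTONES_PER_SEC = 1757


def dmips(dhrystones_per_sec: float) -> float:
    """Convert a measured Dhrystone rate into DMIPS."""
    return dhrystones_per_sec / VAX_11_780_DHRYSTONES_PER_SEC


def dmips_per_mhz(dhrystones_per_sec: float, clock_mhz: float) -> float:
    """Normalise DMIPS by core clock, the figure vendors usually quote."""
    return dmips(dhrystones_per_sec) / clock_mhz


if __name__ == "__main__":
    # Hypothetical loop rates for the same core at 1500 MHz built two ways;
    # these numbers are placeholders, not real results.
    for label, rate in [("build A", 5_000_000), ("build B", 7_500_000)]:
        print(f"{label}: {dmips_per_mhz(rate, 1500):.2f} DMIPS/MHz")
```

A build that runs the loop 50% faster reports a 50% higher DMIPS/MHz on identical hardware.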

> Huh? Dhrystone is a compiler benchmark, it’s pretty easy by using different compiler versions or flags to generate DMIPS scores on the same hardware that differ by 100% or even more.

So, for which benchmark this is not the case? Do you assume they compiled it on ARM with “-O0”, and “-O3”? Maybe they also used GCC 4 on ARM, and GCC 12 on RISC-V? Or maybe we can just assume they used the same compiler version, and the same optimization levels. The general rule for benchmarks is any parameter which is not listed stays the same.

The point is that you don’t know. And actually (particularly for RISC), the compiler is an important part that should not be dismissed (some people in the past used to say that RISC meant “Reject Important Stuff on Compiler”). The only way this could be used is by reporting the highest speed that could be achieved on a given platform by adjusting compiler options. I.e. if some options to support SIMD are enabled and produce the correct result faster, then that’s fine and one should use this to build their programs. But complete build options must be reported with such benchmarks to be meaningful.
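To illustrate the "report complete build options" point, here is a hypothetical sketch. `BenchmarkResult` and `comparable` are names invented for this example and do not belong to any benchmark suite. Each result carries the compiler and flags that produced it, and a comparison is refused when the toolchains differ. Target-specific flags such as `-march=` are ignored, because they necessarily differ between ISAs. The scores are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkResult:
    """Hypothetical record: a score is only reported with its build setup."""
    platform: str
    score: float
    compiler: str            # e.g. "gcc 12.2"
    flags: tuple[str, ...]   # full optimisation / target flags used


def _portable(flags: tuple[str, ...]) -> set[str]:
    # Drop ISA/CPU-specific flags, which cannot match across architectures.
    return {f for f in flags if not f.startswith(("-march=", "-mtune=", "-mcpu="))}


def comparable(a: BenchmarkResult, b: BenchmarkResult) -> bool:
    """Treat two results as comparable only if the toolchain setup matches."""
    return a.compiler == b.compiler and _portable(a.flags) == _portable(b.flags)


if __name__ == "__main__":
    # Placeholder scores, for illustration only.
    arm = BenchmarkResult("Cortex-A55", 100.0, "gcc 12.2", ("-O3", "-mcpu=cortex-a55"))
    rv = BenchmarkResult("U74", 110.0, "gcc 12.2", ("-O3", "-march=rv64gc"))
    old = BenchmarkResult("Cortex-A72", 120.0, "gcc 4.9", ("-O0",))
    print(comparable(arm, rv))   # same compiler and -O3: comparable
    print(comparable(arm, old))  # different compiler and flags: not comparable
```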

> Do you assume they compiled it on ARM with “-O0”, and “-O3”?

The RPi numbers provided by their ‘GPU benchmark’ are just the first Google hit for ‘glmark2 rpi4’ (a years-old gist rotting on the Internet).

As such I would assume they didn’t create those DMIPS numbers for ARM but simply googled them as well. With that assumption no way they ‘used the same compiler version, and the same optimization levels’.

The numbers may come from a Google search, or may not, and they may have been created with a different compiler version. But in the end, this no longer matters when the compiler optimizes the code well enough to match the theoretical limit.

For the ARM DMIPS number, we can fairly well assume this is near the theoretical limit – we get the same numbers from various sources, including ARM themselves. There is no magical tweak left which can alter this number in a significant way.

For the RISC-V numbers, we may assume they did their best to select a suitable compiler version and used the right optimization flags. Given RISC-V is fairly young, there *may* be some possible optimization steps left, but maybe not.

Both sets of numbers should be near the theoretical limit, and that’s what matters.

> Both sets of numbers should be near the theoretical limit, and that’s what matters.

I disagree since the only thing that matters is which use case is represented by a benchmark. For Dhrystone that’s easy: none that existed the last 3 decades.

Anyone talking about ‘benchmarking’ using Dhrystone is either clueless or in the marketing department (maybe both). At the very least, ignoring 4 decades of progress in hardware and software is pathetic.

I think it’s kind of pathetic that you would insist on such exacting standards for a fluff piece about a crowdfunding/Kickstarter project. Is this what you want to spend your energy on? You have some issues; I know, your spouse was telling me as we were running some Dhrystone benchmarks. ;-P

They should add an extra target to raise money for fully accelerated Linux desktop GPU and VPU drivers. Yes, I know some think VHS, etc.

Imagination is actively developing GPU support in the open this time. Please see:

– kernel driver: https://lore.kernel.org/all/20220815165156.118212-1-sarah.walker@imgtec.com/

– Vulkan Mesa side: https://gitlab.freedesktop.org/frankbinns/powervr/-/tree/powervr-next

– OpenGL will be available through Mesa’s Zink layer (https://www.phoronix.com/review/zink-radeon-august2022)

Of course several months are still needed, but GPU support looks promising.

> and unknown benchmark…

As such, it should be considered marketing BS. With this secret benchmark, the U74 cores are now as fast as a Cortex-A57, while the A57 has almost twice the DMIPS of the A53, which is shown above to be only marginally slower than the U74. Makes zero sense.

Also showing JH7100 with only two U74 cores at ~30% of JH7110’s performance (twice the cores, same clockspeed) makes no sense.

This ‘benchmark’ seems to be some RNG variant.

> Also showing JH7100 with only two U74 cores at ~30% of JH7110’s performance (twice the cores, same clockspeed) makes no sense.

Why not? HiFiveU is about twice as fast as the JH7100, which is expected: similar silicon revision (same age) with double the cores.

But the JH7110 reportedly has the nasty DMA bug fixed, which definitely affects performance.

And, if you did your homework, you would know clockspeeds *are* different: the VisionFive1 runs at 1 GHz, the HiFiveU at 1.2 GHz, and the VisionFive2 at 1.5 GHz. Jetson Nano runs at 1.4 GHz. So actually, the numbers match up fairly well.
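The clock-speed argument can be checked with naive scaling, assuming throughput is proportional to core count × clock at equal per-core efficiency. That assumption ignores memory, the DMA bug mentioned above, and everything else. The core counts and clocks are the ones quoted in this thread; the HiFive Unmatched’s FU740 has four U74 application cores. Treat this as a back-of-the-envelope sketch, not a performance model.

```python
# (cores, clock in GHz) as quoted in the discussion; per-core IPC assumed equal.
BOARDS = {
    "VisionFive (JH7100)": (2, 1.0),
    "HiFive Unmatched (FU740)": (4, 1.2),
    "VisionFive 2 (JH7110)": (4, 1.5),
}


def naive_throughput(cores: int, clock_ghz: float) -> float:
    """Aggregate 'core-GHz': a crude proxy for multi-threaded throughput."""
    return cores * clock_ghz


def relative_to(reference: str) -> dict[str, float]:
    """Each board's naive throughput as a fraction of the reference board."""
    ref = naive_throughput(*BOARDS[reference])
    return {name: naive_throughput(*spec) / ref for name, spec in BOARDS.items()}


if __name__ == "__main__":
    for name, ratio in relative_to("VisionFive 2 (JH7110)").items():
        print(f"{name:26s} {ratio:5.0%}")
```

On these assumptions the JH7100 lands at 2 × 1.0 / (4 × 1.5), or one third of the JH7110. That is close to the ~30% in StarFive’s chart.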

> you would know clockspeeds *are* different

Yep, my fault. I googled for the JH7100’s clockspeeds and got the ‘1500 MHz’ number that was advertised in the beginning, twice.

> the numbers match up fairly well.

This would mean the U74 being at A57 level while according to their own DMIPS/MHz chart the U74 shows 130% the performance of an A53 which according to DMIPS done correctly is at only 50%-55% of an A57.
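The inconsistency argued here is a chain of ratios. The U74 is 1.30× an A53 per MHz (StarFive’s DMIPS/MHz chart), and an A53 is 0.50–0.55× an A57 (the commenter’s figures). Multiplying the two gives the implied U74/A57 ratio. This small sketch uses only those quoted numbers:

```python
def chain(*ratios: float) -> float:
    """Multiply relative-performance ratios: (a/b) * (b/c) = a/c."""
    result = 1.0
    for r in ratios:
        result *= r
    return result


U74_OVER_A53 = 1.30                # from StarFive's DMIPS/MHz chart, per the comment
A53_OVER_A57_RANGE = (0.50, 0.55)  # commenter's "DMIPS done correctly" range

if __name__ == "__main__":
    low, high = (chain(U74_OVER_A53, r) for r in A53_OVER_A57_RANGE)
    print(f"implied U74/A57 per MHz: {low:.1%} to {high:.1%}")
```

The result is 65% to 71.5% of an A57 per MHz, clearly short of the parity implied by the ‘unknown benchmark’ chart.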

‘Unknown benchmark’ and this DMIPS BS are in massive conflict.

According to your link, DMIPS for A57 is 4.1, and the DMIPS of the U74 is given as 2.64. 2.64/4.1 = 64%.

The Jetson Nano is clocked at 1.4 GHz, while the U74 seems to be clocked at 1.5GHz, so only taking the DMIPS numbers, cores and clock frequency, the JH7110/VF2 would be at 70% of the Nano.
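The 64% and 70% figures come from multiplying per-core DMIPS/MHz by clock and core count. This minimal sketch uses the numbers quoted in this comment. Both chips are quad-core, and perfect scaling across cores is assumed.

```python
from dataclasses import dataclass


@dataclass
class Cpu:
    name: str
    dmips_per_mhz: float  # per-core Dhrystone figure quoted above
    clock_mhz: float
    cores: int

    def total_dmips(self) -> float:
        # Assumes perfect scaling: one independent Dhrystone copy per core.
        return self.dmips_per_mhz * self.clock_mhz * self.cores


jh7110 = Cpu("JH7110 (U74)", 2.64, 1500, 4)
nano = Cpu("Jetson Nano (A57)", 4.1, 1400, 4)

if __name__ == "__main__":
    print(f"per MHz: {jh7110.dmips_per_mhz / nano.dmips_per_mhz:.0%}")
    print(f"whole chip: {jh7110.total_dmips() / nano.total_dmips():.0%}")
```

The whole-chip ratio works out to 15,840 / 22,960 ≈ 0.69, which the comment rounds to 70%.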

But Dhrystone MIPS is just one single, very simple benchmark. If you look at various benchmark results for, e.g., AMD vs. Intel CPUs, there are sometimes significant differences: sometimes AMD scores better, sometimes Intel. You can’t infer the benchmark results from DMIPS alone.

Yes, more detailed information would be very welcome, but this is just the very first round of marketing material. It may be (and probably is) biased, but this does not mean it is wrong.

> DMIPS for A57 is 4.1, and the DMIPS of the U74 is given as 2.64. 2.64/4.1 = 64%

Who cares? DMIPS are BS. They already were 3 decades ago, and they definitely are in this century.

Dhrystone was a definite improvement over the older primitive ‘MIPS’ metric 4 decades ago. But as a synthetic benchmark tailored for the CPUs from 1970-1990, it’s 100% worthless today, since it is no longer representative of a single real-world task.

So Dhrystone MIPS are BS, but you still use it to “prove” the CPU benchmarks must be incorrect …

Apparently, the U74 is able to almost match A57 or A72 in real world benchmarks, and is significantly faster than e.g. the A53 on RPi3. So the JH7110 is a quite capable SoC, very usable for RISC-V development, and several other use cases. And the price point for the 4GB/8GB variants is very attractive.

> So Dhrystone MIPS are BS

Exactly. We’re not in the 1980s any more. Just like the hardware of that era, the software from back then belongs in a museum, not in benchmark charts made by marketing departments.

> but you still use it to “prove” the CPU benchmarks must be incorrect

DMIPS are definitely BS today and ‘unknown benchmark’ is obviously BS by design since you can design the graphs just as you like.

> the U74 is able to almost match A57 or A72 in real world benchmarks

If you have any links to real serious benchmarks I would love to read them.

BTW: I’m not talking about RISC-V, the U74, or the JH7110 at all. Just about the marketing department’s lousy job of generating graphs from numbers without meaning.

Pity, 4K at 30 Hz.

TBH, I do wonder if slotted RAM (for example, DDR4 SO-DIMM) is possible. It’d drag the price down and make it more competitive against Arm.

No DSP/AI this time?

AI accelerators are gone, but there’s still an audio DSP.

JH7110 specifications summary: https://www.cnx-software.com/2022/08/29/starfive-jh7110-risc-v-processor-specifications/

“Imagination GPU will make some disappointed…” to say the least. But then again, I have a couple of black holes that have been waiting for some time to be filled with headless Raspberry Pis. But by now it’s clear that’s never gonna happen due to the “forever” chip shortage. Having been burned more than once, I no longer consider crowdfunding an option either, especially with a November first ship date. These days November feels like a lifetime away. Heck, by then I might be on a ventilator with the latest SARS-CoV-2 mutant, or, God forbid, we’re at war with the CCP over Taiwan! Sigh… I’m going out for some ice cream at my local stripper bar 🙂 That’ll pick me up.

Imagination just submitted the first version of the DRM kernel driver today, which is definitely much more than any other embedded GPU vendor / IP provider has done so far.

As much as I hate to stand up for Intel and AMD, they’re both way beyond what IT has ever done. Even nVidia with all their stonewalling has released more code (only in the form of headers) than IT has.

Can’t wait to see this IT code get rejected because it’s for outdated chips that no one cares about anymore.

The code is for their very latest generation, more or less anything released from 2012 until now.

What do you expect? Imagination submitting in one year the same amount as Intel and AMD in more than 10 years? Let them start small. Also, I have been talking about embedded – e.g. Mali, Adreno, etc., only supported by reverse engineering without any vendor support.

Get your facts straight before posting your FUD.

Rogue is a historical family of GPUs. The current generations date back to late 2018. Rogue is hardly ‘their very latest generation’.

You said that IT had done more than any other GPU/IP provider had done and that’s not even remotely true. I called you out on it for lying. You moved the goalposts.

You’re the one who needs to get their facts straight. The fact is this driver is for *historical* GPUs which were last updated in 2018.

Rogue is an architecture and the name of the first GPU generation using that name. Current generations still use the Rogue architecture.

Quoting from https://lists.freedesktop.org/archives/mesa-dev/2022-March/225699.html

Are all current GPU supported? No. Are all current families supported? Yes.

You are deliberately mangling my words when quoting me – I said “embedded” (twice), but you keep ignoring that.

If they support other than historical Rogue GPUs, then they make the mistake of calling it a Rogue driver. The AXE-1-16M is from the Albiorix family, not Rogue. Similarly for BXS-4-64.

Your insistence on using ’embedded’ is a distinction without a difference. AMD, Intel, and nVidia all produce ’embedded’ GPUs as well. You’re attempting to create a distinction which does not exist as an attempt to make IT’s efforts more significant than they are. Why are you so invested in this?

Architecture is something different from family. See e.g. AMD RDNA(2).

Can you license an IP graphics core from Intel or NVidia, to combine it with your own CPU cores/IP? See the difference?

Providing an upstream, open-source driver is significant. AMD and Intel provide these, though only in the desktop segment, but NVidia, ARM (Mali), Qualcomm (Adreno), Vivante don’t do this.

> an attempt to make IT’s efforts more significant than they are. Why are you so invested in this?

Maybe because Stefan wants to clarify stuff? He has been very clear about his scope: ’embedded GPU vendor / IP provider’. Not full discrete GPUs but the stuff you license from someone to integrate it into your SoC.

As an example: In the past I really hated RealTek NICs since they were slow, buggy, resource hungry, whatever. Their HW changed over the decades and it took me (and many other networking people) way too long to realize this. Much time wasted…

As such, I appreciate Stefan’s attempts, since ‘those things from the past’ remain in the past, and if something significant has changed with Imagination now, it should be noticed.

Then he’s purposefully ignoring AMD (which I notice he left out of his previous reply), who does license their graphics IP for custom and semi-custom designs. nVidia does have a history of that as well, or are we forgetting previous generations of video consoles?

If this driver goes anywhere, maybe a lot of straight-to-landfill products using PowerVR graphics that have been produced over the last decade will become somewhat less useless.

Is this driver a good start? Sure, but it doesn’t go far in undoing the huge stain they’ve left on the embedded market for the last decade. Ask anyone who’s tried to get an open source desktop Linux distro working on Atom parts how they feel about IT’s IP. How about the many SBCs with SoCs containing PowerVR that never got any support and were abandoned?

This driver may become something useful at some point, but it won’t undo a decade of being a bad actor in this market segment.

Cores ending in XE and XS are Rogue.

XT cores are a different architecture.

Albiorix was the codename for AXT cores.

That is encouraging. Link? I did find this today but no mention of a driver update:

London, England – 23rd August – Imagination Technologies’ IMG BXE GPU IP has been integrated into StarFive’s latest RISC-V single-board computer (SBC), the VisionFive 2.

https://www.imaginationtech.com/news/imagination-gpu-selected-by-starfive-for-next-generation-visionfive-2-single-board-computer/

See the links Marcin posted.

I’d say that if we ignore the totally questionable benchmarks (and final frequency is not even certain, given that it’s written “up to” and the last one dropped by 30% once released), it can still be a reasonable deal for whoever wants to experiment with this platform. It will not be beefy but will be acceptable like many other entry-level platforms that people fiddle with all the time. So let’s give it its chance.

Same thoughts.

I usually write “up to” in application processor specs because of DVFS.

OK, but last time it was “up to 1.5” and it ended with 1.0, which is what makes me look carefully 😉

According to your mhz utility it’s 1750 MHz actually: http://ix.io/4a3s
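Whether “up to” ends up meaning 1.0, 1.5, or 1.75 GHz can also be checked on the board itself. On Linux, the cpufreq subsystem exposes per-CPU limits in sysfs, in kHz (`cpuinfo_min_freq`, `cpuinfo_max_freq`, `scaling_cur_freq`). The sketch below simply reads those files and assumes the board’s kernel has cpufreq enabled. It reports what the kernel is configured for, not a measured clock like the mhz utility’s.

```python
from pathlib import Path

CPUFREQ_FILES = ("cpuinfo_min_freq", "cpuinfo_max_freq", "scaling_cur_freq")


def cpufreq_mhz(cpu: int = 0, sysfs: Path = Path("/sys/devices/system/cpu")) -> dict[str, float]:
    """Read cpufreq values for one CPU and convert them from kHz to MHz.

    Files that are missing (e.g. cpufreq not enabled) are simply skipped.
    """
    base = sysfs / f"cpu{cpu}" / "cpufreq"
    result = {}
    for name in CPUFREQ_FILES:
        path = base / name
        if path.is_file():
            result[name] = int(path.read_text().strip()) / 1000  # kHz -> MHz
    return result


if __name__ == "__main__":
    for key, mhz in cpufreq_mhz().items():
        print(f"{key}: {mhz:.0f} MHz")
```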

Great board, I’ll join crowdfunding campaign.

Two open questions:

1. What about WiFi? Should a USB dongle be used for WiFi?

2. Which PCI Express version does the M.2 slot support, and how many lanes does it offer?

Thank you

It should be 1-lane PCIe 2.0
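For NVMe storage, the lane count matters. PCIe 2.0 signals at 5 GT/s per lane with 8b/10b encoding, so a single lane carries at most 4 Gbit/s, or about 500 MB/s per direction before protocol overhead. Here is the arithmetic, assuming the 1-lane PCIe 2.0 configuration stated above, with PCIe 3.0 x4 shown for comparison:

```python
# Per-lane signalling rate (GT/s) and line-code efficiency for each PCIe generation.
PCIE_GENS = {
    1: (2.5, 8 / 10),     # 8b/10b
    2: (5.0, 8 / 10),     # 8b/10b
    3: (8.0, 128 / 130),  # 128b/130b
}


def pcie_mb_per_s(gen: int, lanes: int) -> float:
    """Raw payload bandwidth per direction in MB/s (ignores TLP/DLLP overhead)."""
    gt_per_s, efficiency = PCIE_GENS[gen]
    gbit_per_s = gt_per_s * efficiency * lanes
    return gbit_per_s * 1000 / 8  # Gbit/s -> MB/s (decimal units)


if __name__ == "__main__":
    print(f"PCIe 2.0 x1: {pcie_mb_per_s(2, 1):.0f} MB/s")
    print(f"PCIe 3.0 x4: {pcie_mb_per_s(3, 4):.0f} MB/s")
```

If the 1-lane PCIe 2.0 answer is right, even a fast NVMe SSD would top out at roughly 500 MB/s on this board.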

> The company also provides some interesting benchmarks

For those who trust Geekbench (I don’t!), I prepared two comparisons of the JH7110 with ARMv8 SoCs that also lack any crypto acceleration here.

The “Machine Learning” score is also much different, I’d suspect NEON instructions are used on Arm, and SIMD/Vector instructions don’t seem to be available in the JH7110 RISC-V SoC.

It’s also probably impacting “Image Compression”, “Gaussian Blur”, etc…
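Whether a board offers SIMD at all can be checked from `/proc/cpuinfo` on Linux. arm64 kernels list Advanced SIMD (NEON) as `asimd` in the `Features` line, and 32-bit Arm kernels use `neon`. RISC-V kernels print an `isa` string such as `rv64imafdc`, where a `v` among the single-letter extensions would indicate the vector extension. The sketch below parses that text, but it only knows those two formats: x86 `flags` lines are ignored, and the exact RISC-V string varies between kernel versions. Treat it as a quick heuristic.

```python
import re


def has_simd(cpuinfo_text: str) -> bool:
    """Return True if /proc/cpuinfo text advertises Arm Advanced SIMD (NEON)
    or the RISC-V vector extension ("V")."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        key, value = key.strip().lower(), value.strip().lower()
        if key == "features":
            # arm64 kernels report "asimd"; 32-bit Arm kernels report "neon"
            if {"asimd", "neon"} & set(value.split()):
                return True
        elif key == "isa":
            # e.g. "rv64imafdc" or "rv64imafdcv_zicsr"; the regex stops at "_"
            m = re.match(r"rv(?:32|64)([a-z]*)", value)
            if m:
                # keep only single-letter extensions, which end where a
                # multi-letter extension (z..., s..., x...) begins
                single = re.split(r"[zsx]", m.group(1), maxsplit=1)[0]
                if "v" in single:
                    return True
    return False


if __name__ == "__main__":
    with open("/proc/cpuinfo") as f:
        print("SIMD/vector available:", has_simd(f.read()))
```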

I would have assumed “Speech Recognition” would be impacted, but the RISC-V processor is quite a bit faster here. Maybe the “Audio DSP” in the JH7110 is somehow used in that benchmark.

There is this PDF trying to explain what the individual tests do. Sometimes what happens simply depends on the libraries Geekbench pulled in like e.g. libjpeg-turbo that uses SIMD instructions where available but not on RISC-V (yet).

As such, at this time many of these benchmarks reflect the maturity of the RISC-V software ecosystem more than hardware capabilities…

Does anybody have a microSD card image for this VisionFive 2 board, so that you can easily boot from the microSD card into either Ubuntu 20.04.1 or Debian instead of having to start from the Baidu Cloud or Google Cloud version? Thank you so much.

Does anybody have a microSD card with the correct flash image file for this VisionFive 2 board, so that users can easily boot from the microSD card into either Debian or Ubuntu? I noticed the VisionFive 1 came with a 64GB microSD card, but it seems they dropped it for the VisionFive 2 board, I think to save costs. Thank you so much.