I’ve spent a bit more time with Ubuntu 22.04 on the Khadas VIM4 SBC (Amlogic A311D2), and while the performance is generally good, features like 3D graphics acceleration and hardware video decoding are missing. But I was pleased to see a Linux hardware video encoding section in the Wiki, as it’s not something we often see supported early on. So I’ve given it a try…

First, we need a video in the NV12 pixel format, which is what cameras commonly output. I downloaded a 45-second 1080p H.264 sample video from Linaro and converted it with ffmpeg:

    ffmpeg -i big_buck_bunny_1080p_H264_AAC_25fps_7200K.MP4 -pix_fmt nv12 big_buck_bunny_1080p_H264_AAC_25fps_7200K-nv12.yuv

I did this on my laptop. As a raw video, it’s pretty big, taking up 3.3GB of storage for a 45-second clip:

    ls -lh
    total 3.3G
    -rw-rw-r-- 1 jaufranc jaufranc  40M Aug  5  2011 big_buck_bunny_1080p_H264_AAC_25fps_7200K.MP4
    -rw-rw-r-- 1 jaufranc jaufranc 3.3G May 21 15:03 big_buck_bunny_1080p_H264_AAC_25fps_7200K-nv12.yuv
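The 3.3GB figure can be checked by hand: NV12 stores a full-resolution luma plane plus a half-size interleaved chroma plane, so each pixel takes 1.5 bytes. A minimal sketch of that arithmetic, where the helper name `nv12_video_bytes` is only an illustration and not part of any tool:

```shell
# Size in bytes of a raw NV12 video: width * height * 1.5 bytes per frame.
# nv12_video_bytes is a hypothetical helper name.
nv12_video_bytes() {
    echo $(( $1 * $2 * 3 / 2 * $3 ))   # width, height, number of frames
}

# 45 seconds at 25 fps = 1125 frames of 1920x1080
nv12_video_bytes 1920 1080 1125   # 3499200000 bytes, about 3.3 GiB
```

The per-frame size for 1080p is 3110400 bytes, so file size grows linearly with both resolution and duration.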

Now let’s try to encode the video to H.264 on the Khadas VIM4 board using the aml_enc_test hardware video encoding sample (the raw file is named 1080p.nv12 on the board):

    khadas@Khadas:~$ time aml_enc_test 1080p.nv12 dump.h264 1920 1080 30 25 6000000 1125 1 0 2 4
    src_url is : 1080p.nv12 ;
    out_url is : dump.h264 ;
    width is : 1920 ;
    height is : 1080 ;
    gop is : 30 ;
    frmrate is : 25 ;
    bitrate is : 6000000 ;
    frm_num is : 1125 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 4 ;
    codec is H264
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 1920x1080
    Encode End!width:1920

    real    0m26.074s
    user    0m1.832s
    sys     0m4.883s

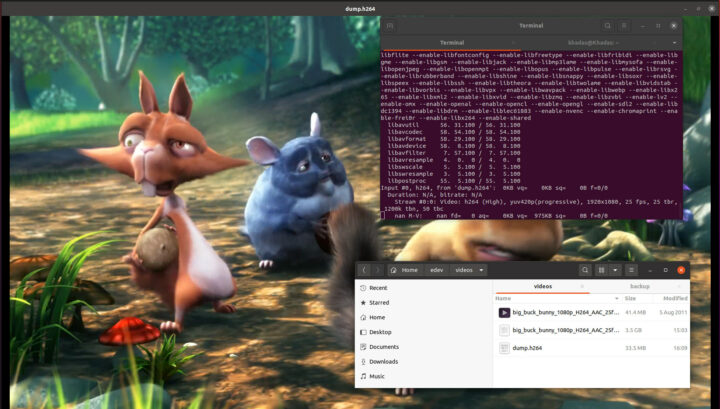

The output explains the parameters used. There are some error messages, but the video can be played back with ffplay on my computer without issues.
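Since the twelve positional arguments are easy to mix up, here is a small sketch that builds the command line from named settings. The argument order is inferred only from the parameters the tool echoes back, `build_enc_cmd` is a hypothetical helper, and it only prints the command rather than running it:

```shell
# Build an aml_enc_test command line from named settings.
# The argument order is inferred from the parameters the tool echoes back;
# build_enc_cmd is a hypothetical helper that only prints the command.
build_enc_cmd() {
    src_url=$1; out_url=$2
    codec=$3                              # 4 = H.264, 5 = H.265 (per the tool's output)
    width=1920; height=1080
    gop=30; frmrate=25; bitrate=6000000
    frm_num=1125                          # 45 s x 25 fps
    fmt=1; buf_type=0; num_planes=2       # values copied from the article's runs
    echo aml_enc_test "$src_url" "$out_url" "$width" "$height" \
        "$gop" "$frmrate" "$bitrate" "$frm_num" \
        "$fmt" "$buf_type" "$num_planes" "$codec"
}

build_enc_cmd 1080p.nv12 dump.h264 4
```

Piping the printed line to `sh` would run it on the board; the meaning of the `fmt`, `buf_type` and `num_planes` values is not documented here, so they are left exactly as used in the test runs.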

We can also see that encoding took 26 seconds, which is faster than real-time since the video is 45 seconds long.

Let’s try the same with H.265 encoding:

    time aml_enc_test 1080p.nv12 dump.h265 1920 1080 30 25 6000000 1125 1 0 2 5
    src_url is : 1080p.nv12 ;
    out_url is : dump.h265 ;
    width is : 1920 ;
    height is : 1080 ;
    gop is : 30 ;
    frmrate is : 25 ;
    bitrate is : 6000000 ;
    frm_num is : 1125 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 5 ;
    codec is H265
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 1920x1080
    Encode End!width:1920

    real    0m9.561s
    user    0m1.348s
    sys     0m2.576s

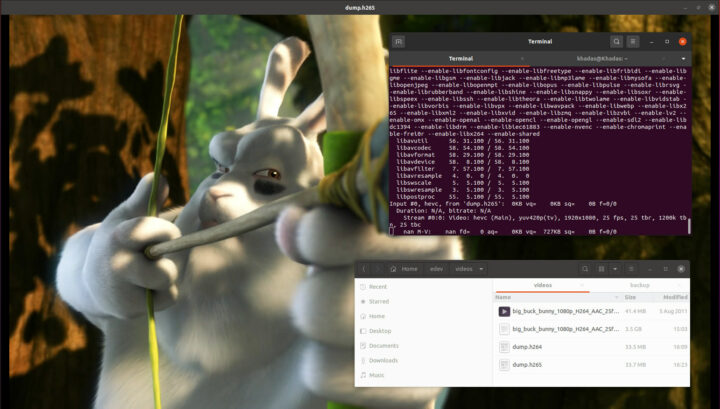

Surprisingly, H.265 video encoding is much faster than H.264 video encoding. Let’s try H.264 encoding again:

    $ time aml_enc_test 1080p.nv12 dump2.h264 1920 1080 30 25 6000000 1125 1 0 2 4
    src_url is : 1080p.nv12 ;
    out_url is : dump2.h264 ;
    width is : 1920 ;
    height is : 1080 ;
    gop is : 30 ;
    frmrate is : 25 ;
    bitrate is : 6000000 ;
    frm_num is : 1125 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 4 ;
    codec is H264
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 1920x1080
    Encode End!width:1920

    real    0m8.780s
    user    0m1.416s
    sys     0m2.274s

Ah. It now takes less than 9 seconds. The first run was slow because the data had to be read from the eMMC flash, but since the file is only 3.3GB, it fits into the cache, so on the second run there’s no bottleneck from storage.

In any case, the dump.h265 file also played fine on my computer, so the conversion was successful.
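To separate storage effects from encoder speed, one option is to check that the input can fit in memory and read it once before timing anything. This is only a sketch: it assumes a Linux system with `MemAvailable` in `/proc/meminfo` and GNU `stat`, and both function names are made up for the illustration.

```shell
# fits_in_ram FILE: succeed if FILE is smaller than the memory the kernel
# reports as available (MemAvailable in /proc/meminfo, in kB).
fits_in_ram() {
    file_kb=$(( $(stat -c %s "$1") / 1024 ))
    avail_kb=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
    [ "$file_kb" -lt "$avail_kb" ]
}

# warm_cache FILE: read FILE once so later reads can come from the page cache.
warm_cache() {
    cat -- "$1" > /dev/null
}

# Example with the file name used in the article:
# fits_in_ram 1080p.nv12 && warm_cache 1080p.nv12
```

With the cache warmed first, a single timed run should already resemble the second run above, as long as nothing else evicts the file in between.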

Amlogic A311D2 specifications say “H.265 & H.264 at 4Kp50” video encoding is supported. So let’s create a 45-second 4Kp50 video and convert it to NV12 YUV format. Oops, the size of the raw video is 27GB, and it won’t fit into the board’s eMMC flash… Let’s cut that to 30 seconds (about 18GB)…

Now we can encode the video to H.264:

    khadas@Khadas:~$ time aml_enc_test 4k.nv12 dump4k.h264 3840 2160 30 50 10000000 1501 1 0 2 4
    src_url is : 4k.nv12 ;
    out_url is : dump4k.h264 ;
    width is : 3840 ;
    height is : 2160 ;
    gop is : 30 ;
    frmrate is : 50 ;
    bitrate is : 10000000 ;
    frm_num is : 1501 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 4 ;
    codec is H264
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 3840x2160
    Encode End!width:3840

    real    2m10.611s
    user    0m5.819s
    sys     0m26.130s

Over two minutes to encode a 30-second video! That does not cut it, so let’s run the sample again:

    $ time aml_enc_test 4k.nv12 dump4k.h264 3840 2160 30 50 10000000 1501 1 0 2 4
    src_url is : 4k.nv12 ;
    out_url is : dump4k.h264 ;
    width is : 3840 ;
    height is : 2160 ;
    gop is : 30 ;
    frmrate is : 50 ;
    bitrate is : 10000000 ;
    frm_num is : 1501 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 4 ;
    codec is H264
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 3840x2160
    Encode End!width:3840

    real    2m22.420s
    user    0m6.543s
    sys     0m28.102s

It’s even slower… I really think the storage is the bottleneck here, because real-time encoding of that file would require a read speed of over 600 MB/s. In practice, the system would typically encode video from a camera stream, not from the eMMC flash. I should have run iozone first:
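The "over 600 MB/s" figure follows directly from the NV12 frame size (1.5 bytes per pixel) multiplied by the frame rate. A quick sketch of the calculation, with a helper name chosen just for this example:

```shell
# Bytes per second needed to feed raw NV12 frames at a given frame rate.
# nv12_bytes_per_sec is a hypothetical helper name.
nv12_bytes_per_sec() {
    echo $(( $1 * $2 * 3 / 2 * $3 ))   # width * height * 1.5 * fps
}

nv12_bytes_per_sec 3840 2160 50   # 622080000 bytes/s, about 622 MB/s
nv12_bytes_per_sec 3840 2160 30   # 373248000 bytes/s, about 373 MB/s
```

Even 4Kp30 needs roughly 373 MB/s of sustained reads when the source is a raw file.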

    $ iozone -e -I -a -s 1000M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
        Iozone: Performance Test of File I/O
                Version $Revision: 3.489 $
            Compiled for 64 bit mode.
            Build: linux

        Output is in kBytes/sec
        Time Resolution = 0.000001 seconds.
        Processor cache size set to 1024 kBytes.
        Processor cache line size set to 32 bytes.
        File stride size set to 17 * record size.
                                                            random   random     bkwd   record   stride
              kB  reclen    write  rewrite     read   reread     read    write     read  rewrite     read   fwrite frewrite    fread  freread
         1024000       4    42448    49401    33738    35273    30351    33959
         1024000      16    95388    84746    83386    87949    78675    72818
         1024000     512   109351    90438   166659   166804   144584    70463
         1024000    1024    68088    98663   175108   174902   164769    58980
         1024000   16384    71086   109715   178448   178144   182913    87181

    iozone test complete.

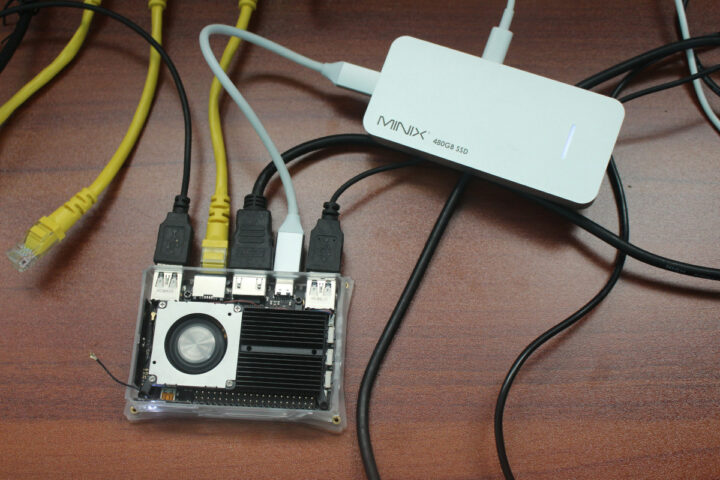

The sequential read speed is about 178MB/s. I have a MINIX USB Hub with a 480GB SSD that I had tested at 400MB/s. Not quite what we need, but we should see an improvement.
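Turning the calculation around gives an upper bound on the frame rate that storage alone allows for raw 4K NV12 input. The sketch below treats the measured speeds as MB/s of 10^6 bytes, and the function name is again just illustrative:

```shell
# Upper bound on frames per second when raw NV12 frames are read from storage.
# Arguments: read speed in MB/s (10^6 bytes), width, height.
max_fps_from_storage() {
    echo $(( $1 * 1000000 / ($2 * $3 * 3 / 2) ))   # integer division rounds down
}

max_fps_from_storage 178 3840 2160   # eMMC sequential read -> 14
max_fps_from_storage 400 3840 2160   # the USB SSD's tested speed -> 32
```

The eMMC bound of about 14 fps is in line with the two 4Kp50 runs above, which worked out to roughly 10.5 to 11.5 fps (1501 frames in 130 to 142 seconds).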

Sadly, the drive was not mounted, and was not even recognized by tools like fdisk and GParted. When double-checking the Khadas VIM4 specifications, I realized the USB Type-C port is a USB 2.0 OTG interface that should recognize the drive, but only supports 480 Mbps, so it’s a lost cause anyway… The only way to achieve over 600MB/s would be to use a USB 3.0 NVMe SSD, but I don’t have any.

So instead, I’ll make a 5-second 4Kp50 video that’s about 2.9GB in size.

First run using H.265:

    $ time aml_enc_test 4Kp50-5s.nv12 dump4k-5s.h265 3840 2160 30 50 10000000 249 1 0 2 5
    src_url is : 4Kp50-5s.nv12 ;
    out_url is : dump4k-5s.h265 ;
    width is : 3840 ;
    height is : 2160 ;
    gop is : 30 ;
    frmrate is : 50 ;
    bitrate is : 10000000 ;
    frm_num is : 249 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 5 ;
    codec is H265
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 3840x2160
    Encode End!width:3840

    real    0m6.905s
    user    0m0.661s
    sys     0m1.885s

Second run:

    $ time aml_enc_test 4Kp50-5s.nv12 dump4k-5s-2.h265 3840 2160 30 50 10000000 249 1 0 2 5
    src_url is : 4Kp50-5s.nv12 ;
    out_url is : dump4k-5s-2.h265 ;
    width is : 3840 ;
    height is : 2160 ;
    gop is : 30 ;
    frmrate is : 50 ;
    bitrate is : 10000000 ;
    frm_num is : 249 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 5 ;
    codec is H265
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 3840x2160
    Encode End!width:3840

    real    0m6.828s
    user    0m0.663s
    sys     0m1.822s

One last try with H.264:

    $ time aml_enc_test 4Kp50-5s.nv12 dump4k-5s.h264 3840 2160 30 50 5000000 249 1 0 2 4
    src_url is : 4Kp50-5s.nv12 ;
    out_url is : dump4k-5s.h264 ;
    width is : 3840 ;
    height is : 2160 ;
    gop is : 30 ;
    frmrate is : 50 ;
    bitrate is : 5000000 ;
    frm_num is : 249 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 4 ;
    codec is H264
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 3840x2160
    Encode End!width:3840

    real    0m6.422s
    user    0m0.644s
    sys     0m1.879s

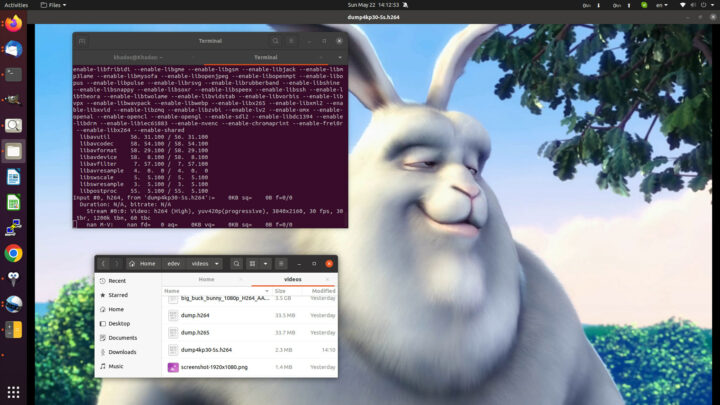

Not quite real-time, since each 5-second clip takes between 6.4 and 6.9 seconds to encode, but it’s getting closer, and that means 4Kp30 should be feasible. Here’s the result with a 5-second 4Kp30 NV12 video encoded with H.264:

    $ time aml_enc_test 4Kp30-5s.nv12 dump4kp30-5s.h264 3840 2160 30 30 5000000 150 1 0 2 4
    src_url is : 4Kp30-5s.nv12 ;
    out_url is : dump4kp30-5s.h264 ;
    width is : 3840 ;
    height is : 2160 ;
    gop is : 30 ;
    frmrate is : 30 ;
    bitrate is : 5000000 ;
    frm_num is : 150 ;
    fmt is : 1 ;
    buf_type is : 0 ;
    num_planes is : 2 ;
    codec is : 4 ;
    codec is H264
    Set log level to 4
    [initEncParams:177] enc_feature_opts is 0x0 , GopPresetis 0x0
    [SetupEncoderOpenParam:513] GopPreset GOP format (2) period 30 LongTermRef 0
    [vdi_sys_sync_inst_param:618] [VDI] fail to deliver sync instance param inst_idx=0
    [AML_MultiEncInitialize:1378] VPU instance param sync with open param failed
    [SetSequenceInfo:979] Required buffer fb_num=3, src_num=1, actual src=3 3840x2160
    Encode End!width:3840

    real    0m3.931s
    user    0m0.378s
    sys     0m1.161s

Less than four seconds for a 5-second clip. So real-time 4Kp30 H.264 hardware video encoding is definitely working on the Amlogic A311D2 processor.
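Dividing the frame count by the wall-clock time makes the 4K runs easier to compare. This is a rough sketch only: the `real` time also includes process startup and file reads, and `encode_fps` is a name invented for the example.

```shell
# Frames encoded per second of wall-clock time ("real" from the time output).
# encode_fps is a hypothetical helper; awk handles the floating-point division.
encode_fps() {
    awk -v frames="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", frames / secs }'
}

encode_fps 249 6.422   # 4Kp50 H.264 -> 38.8
encode_fps 249 6.828   # 4Kp50 H.265 -> 36.5
encode_fps 150 3.931   # 4Kp30 H.264 -> 38.2
```

By this measure the 4K runs all land at roughly 36 to 39 frames per second in this setup, which is above the 30 fps needed for 4Kp30 but below the 50 fps needed for 4Kp50.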

It’s playing fine on my PC too.

It’s also possible to encode NV12 YUV images into JPEG, but it won’t work as the khadas user:

    $ jpeg_enc_test screenshot-1920x1080.nv12 dump.jpg 1920 1080 100 3 0 16 16 0
    screenshot-1920x1080.nv12 dump.jpg
    src url: screenshot-1920x1080.nv12
    out url: dump.jpg
    width : 1920
    height : 1080
    quality: 100
    iformat: 3
    oformat: 0
    width alignment: 16
    height alignment: 16
    memory type: VMALLOC
    align: 1920->1920
    align: 1080->1088
    hw_encode open device fail, 13:Permission denied
    jpegenc_init failed

But no problem with sudo:

    khadas@Khadas:~$ time sudo jpeg_enc_test screenshot-1920x1080.nv12 dump.jpg 1920 1080 100 3 0 16 16 0
    screenshot-1920x1080.nv12 dump.jpg
    src url: screenshot-1920x1080.nv12
    out url: dump.jpg
    width : 1920
    height : 1080
    quality: 100
    iformat: 3
    oformat: 0
    width alignment: 16
    height alignment: 16
    memory type: VMALLOC
    align: 1920->1920
    align: 1080->1088
    mapped address is 0xffffb27f0000
    hw_info->mmap_buff.size, 0x2300000, hw_info->input_buf.addr:0x0xffffb27f0000
    hw_info->assit_buf.addr, 0x0xffffb466c000, hw_info->output_buf.addr:0x0xffffb46f0000
    frame_size=3110400
    rd_size=3110400, frame_size=3110400
    offset=2088960
    luma_stride=1920, h_stride=1088, hw_info->bpp=12

    real    0m0.044s
    user    0m0.004s
    sys     0m0.009s

Probably just a simple permission issue. The task was performed in 44ms, and I can open dump.jpg (a screenshot) without issues.

If I use ffmpeg to convert the NV12 file to jpeg, presumably with software encoding, it takes just under 200ms:

    khadas@Khadas:~$ time ffmpeg -pix_fmt nv12 -s 1920x1080 -i screenshot-1920x1080-nv12.yuv dump-ffmpeg.jpg
    ffmpeg version 4.4.1-3ubuntu5 Copyright (c) 2000-2021 the FFmpeg developers
      built with gcc 11 (Ubuntu 11.2.0-18ubuntu1)
      configuration: --prefix=/usr --extra-version=3ubuntu5 --toolchain=hardened --libdir=/usr/lib/aarch64-linux-gnu --incdir=/usr/include/aarch64-linux-gnu --arch=arm64 --enable-gpl --disable-stripping --enable-gnutls --enable-ladspa --enable-libaom --enable-libass --enable-libbluray --enable-libbs2b --enable-libcaca --enable-libcdio --enable-libcodec2 --enable-libdav1d --enable-libflite --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-libgme --enable-libgsm --enable-libjack --enable-libmp3lame --enable-libmysofa --enable-libopenjpeg --enable-libopenmpt --enable-libopus --enable-libpulse --enable-librabbitmq --enable-librubberband --enable-libshine --enable-libsnappy --enable-libsoxr --enable-libspeex --enable-libsrt --enable-libssh --enable-libtheora --enable-libtwolame --enable-libvidstab --enable-libvorbis --enable-libvpx --enable-libwebp --enable-libx265 --enable-libxml2 --enable-libxvid --enable-libzimg --enable-libzmq --enable-libzvbi --enable-lv2 --enable-omx --enable-openal --enable-opencl --enable-opengl --enable-sdl2 --enable-pocketsphinx --enable-librsvg --enable-libdc1394 --enable-libdrm --enable-libiec61883 --enable-chromaprint --enable-frei0r --enable-libx264 --enable-shared
      libavutil      56. 70.100 / 56. 70.100
      libavcodec     58.134.100 / 58.134.100
      libavformat    58. 76.100 / 58. 76.100
      libavdevice    58. 13.100 / 58. 13.100
      libavfilter     7.110.100 /  7.110.100
      libswscale      5.  9.100 /  5.  9.100
      libswresample   3.  9.100 /  3.  9.100
      libpostproc    55.  9.100 / 55.  9.100
    [rawvideo @ 0xaaaad7c07100] Estimating duration from bitrate, this may be inaccurate
    Input #0, rawvideo, from 'screenshot-1920x1080-nv12.yuv':
      Duration: 00:00:00.04, start: 0.000000, bitrate: 622080 kb/s
      Stream #0:0: Video: rawvideo (NV12 / 0x3231564E), nv12, 1920x1080, 622080 kb/s, 25 tbr, 25 tbn, 25 tbc
    Stream mapping:
      Stream #0:0 -> #0:0 (rawvideo (native) -> mjpeg (native))
    Press [q] to stop, [?] for help
    [swscaler @ 0xaaaad7c1d2a0] deprecated pixel format used, make sure you did set range correctly
    Output #0, image2, to 'dump-ffmpeg.jpg':
      Metadata:
        encoder         : Lavf58.76.100
      Stream #0:0: Video: mjpeg, yuvj420p(pc, progressive), 1920x1080, q=2-31, 200 kb/s, 25 fps, 25 tbn
        Metadata:
          encoder         : Lavc58.134.100 mjpeg
        Side data:
          cpb: bitrate max/min/avg: 0/0/200000 buffer size: 0 vbv_delay: N/A
    frame=    1 fps=0.0 q=10.8 size=N/A time=00:00:00.04 bitrate=N/A speed=4e+04x
    frame=    1 fps=0.0 q=10.8 Lsize=N/A time=00:00:00.04 bitrate=N/A speed=0.514x
    video:123kB audio:0kB subtitle:0kB other streams:0kB global headers:0kB muxing overhead: unknown

    real    0m0.190s
    user    0m0.150s
    sys     0m0.037s

aml_enc_test and jpeg_enc_test are nice little utilities to test hardware video/image encoding in Linux on Amlogic A311D2, but the source code would be needed to integrate this into an application. It does not appear to be public at this time, so I’d assume it’s part of the Amlogic SDK. I’ll ask Khadas for the source code, or the method to get it.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


> the second time there’s no bottleneck from storage.

That’s why I always run sbc-bench -m (monitoring mode) in parallel with such tests, to see which kind of task the system is spending time on (your 1st run surely showed an awful lot of %iowait).

When switching to the performance governor on all CPU clusters, running iostat 5 instead consumes fewer resources. Though an unadjusted cpufreq governor might be more interesting -> maybe low(er) CPU clockspeeds due to VPU busy and similar…

I’m not even surprised that one can’t use any of the well-known frameworks for utilizing hw-encoding/-decoding, whether it is OMX, VA-API, V4L2 or similar and instead would have to write device-specific software using Amlogic’s SDK.

Want to use some popular, already-existing open-source software? Nope, gotta fork it and (try to!) modify it to work with Amlogic’s libraries!

Software situation around media capabilities with Amlogic’s forward ported 5.4 mess explained more in detail: https://forum.khadas.com/t/khadas-vim4-is-coming-soon/15266/42?u=tkaiser

Not too surprising since the typical Linux userland has zero relevance for the ‘Android e-waste’ world…

BTW: if revisiting this topic it would be interesting to check SoC thermals while testing since Amlogic’s BSP kernel exposes half a dozen thermal sensors for A311D2: find /sys -name "*thermal"

With lm-sensors package installed this will work too ofc: while true ; do sensors; sleep 10; done

But exploring /sys a bit might be worth the effort (clockspeeds/governors of memory, gpu, vpu and such things)
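For anyone who wants to log temperatures without lm-sensors, here is a small sketch that reads the generic Linux thermal sysfs files. It assumes the usual `/sys/class/thermal/thermal_zone*/{type,temp}` layout with `temp` in millidegrees Celsius; whether Amlogic’s BSP kernel exposes all of its sensors there is not verified, and the function name is made up.

```shell
# Print each thermal zone's type and temperature in degrees Celsius.
# Assumes the generic Linux thermal sysfs layout: thermal_zone*/type and
# thermal_zone*/temp (millidegrees Celsius). The base directory can be
# overridden, which also makes the function easy to try on a fake tree.
print_thermal_zones() {
    base=${1:-/sys/class/thermal}
    for zone in "$base"/thermal_zone*; do
        [ -r "$zone/temp" ] || continue        # also skips an unmatched glob
        zone_type=$(cat "$zone/type" 2>/dev/null || echo unknown)
        millic=$(cat "$zone/temp")
        echo "$zone_type $(( millic / 1000 )).$(( millic % 1000 / 100 ))"
    done
}

# Poll every 10 seconds while an encode is running:
# while true; do print_thermal_zones; sleep 10; done
```

Running the loop in a second terminal during a long encode would show whether any zone climbs towards a throttling point.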

Would it help to run these tests with the media files on a ramdisk/tmpfs, forcing them to be in RAM regardless of any caching?

Nope for the following simple reasons:

The first time I tried doing YUV encoding for a video codec I was using a PowerMac G5 and a brand new firewire 800 external disk drive. I was taking an 854×480 NTSC MPEG2 stream from a DVD, and writing it to the hard drive at the same time I was reading the YUV (YUV4MPEG) file back in with the encoder software and writing the encoded file to the same external HDD. The drive had a physical failure in under 2 hours and was forevermore unusable.

I switched to two different approaches.

1) mkfifo will make a file that will take input from a process, and block until another process picks up that data to use it. This is great for running ffmpeg for the transcode to the raw format, but not actually using any space as the encoder picks it up.

2) *NIX loves streams. You can have ffmpeg do the transcode to nv12 and pipe the output of that into your encoder. If your encoder can’t get data from stdin, you can get it from /dev/stdin.

All of this is at the cost of some CPU and I/O that is handling the transcode to nv12 (or yuv4mpeg, or other) at runtime.
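The named-pipe approach can be sketched as a small wrapper: one command writes into a FIFO while another reads from it, so the raw frames never touch storage. The function name and the `"$1"` convention for passing the FIFO path are choices made for this example.

```shell
# stream_through_fifo PRODUCER CONSUMER
# Run PRODUCER writing into a named pipe while CONSUMER reads from it, so the
# intermediate data never lands on storage. Both arguments are shell command
# strings that receive the FIFO path as "$1".
stream_through_fifo() {
    dir=$(mktemp -d) || return 1
    fifo=$dir/raw.fifo
    mkfifo "$fifo" || return 1
    sh -c "$1" producer "$fifo" &      # blocks until the consumer opens the pipe
    sh -c "$2" consumer "$fifo"
    status=$?
    wait                               # reap the producer
    rm -rf "$dir"
    return $status
}

# Hypothetical use with the tools from the article (untested; it assumes
# aml_enc_test reads its input sequentially and accepts a FIFO path,
# and input.mp4 is a placeholder file name):
# stream_through_fifo \
#   'ffmpeg -y -i input.mp4 -f rawvideo -pix_fmt nv12 "$1"' \
#   'aml_enc_test "$1" dump.h264 1920 1080 30 25 6000000 1125 1 0 2 4'
```

The trade-off is the one mentioned above: the CPU now decodes and converts to NV12 while the encoder runs, so the timing would measure the whole pipeline rather than the hardware encoder alone.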

I’d pay $199 only if I get the encoding and decoding source code; otherwise I’ll keep my RK3568, which has reasonable encoding performance.

GOP should be something like 250 or 300 for better compression. It sets how often you want key frames (frames encoded without reference to other frames).
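To put those numbers in perspective, the key-frame spacing in seconds is simply the GOP length divided by the frame rate. The sketch assumes the `gop` argument of aml_enc_test is the key-frame period in frames (the log’s "period 30" suggests so, but this is not confirmed), and `gop_seconds` is a made-up helper name.

```shell
# Seconds between key frames for a given GOP length (frames) and frame rate.
# gop_seconds is a hypothetical helper name.
gop_seconds() {
    awk -v gop="$1" -v fps="$2" 'BEGIN { printf "%.1f\n", gop / fps }'
}

gop_seconds 30 25    # the 1080p runs in the article -> 1.2
gop_seconds 250 25   # -> 10.0
gop_seconds 300 50   # -> 6.0
```

Longer intervals spend fewer bits on key frames, at the cost of coarser seeking and slower recovery after a lost frame.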