This article looks at the effect that running a different operating system or installing more memory has on gaming performance with similarly spec’d Intel and AMD mini PCs. Note: This article has been updated and corrected as a result of reader feedback and additional testing.

It was inspired by having built and tested a pseudo ‘Steamdeck’ running Manjaro on an AMD-based mini PC with 16GB of memory, which made me wonder what the performance would be like using Windows 11.

Initial results were surprising because Windows appeared much slower. As I’d previously heard of performance improvements when using 64GB of memory I swapped out the currently installed 16GB memory and immediately saw improved results.

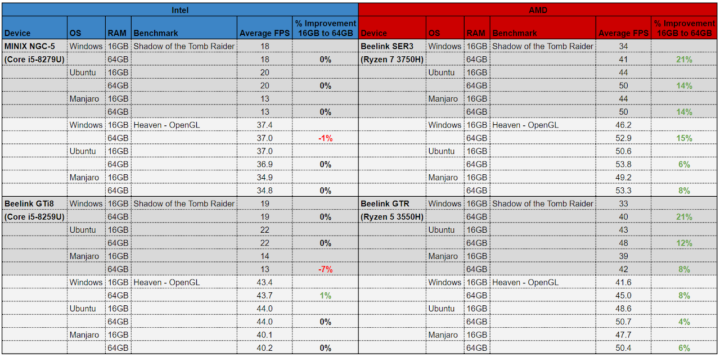

As I’d never observed such a dramatic performance increase on Intel mini PCs just through increasing the memory, I decided to explore further. I tested gaming performance on similar Intel and AMD mini PCs using either 16GB or 64GB of memory, and compared Windows with Linux on each. Given the ‘Steamdeck’ used Manjaro, I also tested with Ubuntu to see whether the choice of distribution made any difference.

Hardware under test

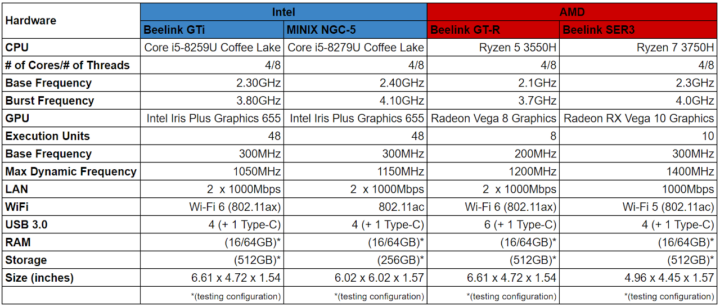

Recent AMD mini PCs have been notable for including the more powerful Radeon integrated graphics whereas Intel mini PC iGPUs are typically much weaker with the exception of the now-dated Intel Iris Plus Graphics 655. As quite a few recent mini PCs have been released using CPUs with these integrated graphics this was the logical choice for my test Intel devices. Limited by what I had available, the following four mini PCs (Intel: GTi & NGC-5, AMD: GT-R & SER3) were selected for testing as they were the most similarly spec’d:

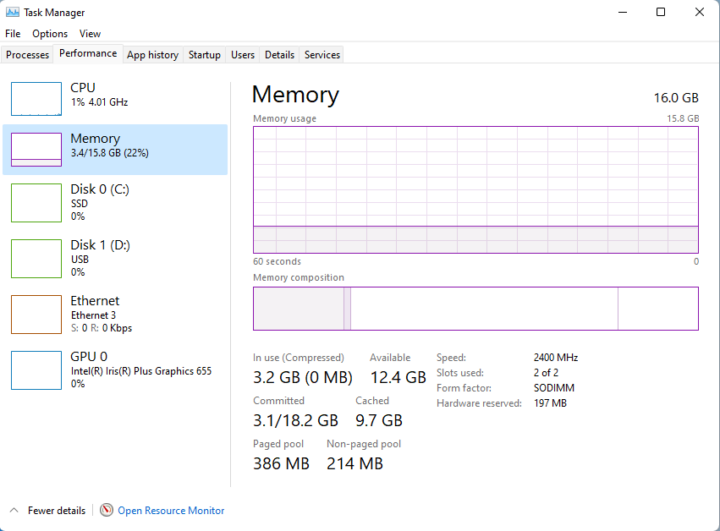

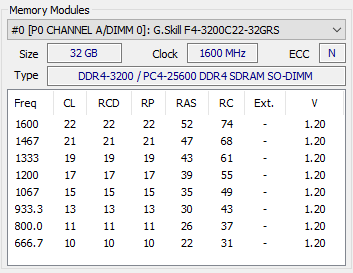

Given memory was the key hardware component being tested, I chose to reuse the same memory in each device to ensure consistency. Both the support for various memory speeds and the ability to overclock the memory were limited in the BIOS of each device. The Intel devices were restricted to running memory at a maximum speed of 2400 MHz, whereas the AMD BIOS allowed a slight memory overclock to 2666 MHz. Running in dual-channel, I used two sticks of Crucial 8GB DDR4-2666 CL19 (CT8G4SFS6266) and two sticks of 32GB DDR4-3200 CL22 (F4-3200C22D-64GRS):

The additional testing used a single stick of Crucial 16GB DDR4-2666 CL19 (CT16G4SFD8266.M16FRS) and therefore ran in single-channel together with two sticks of 8GB DDR4-2400 CL16 (F4-2400C16D-16GRS) running in dual-channel:

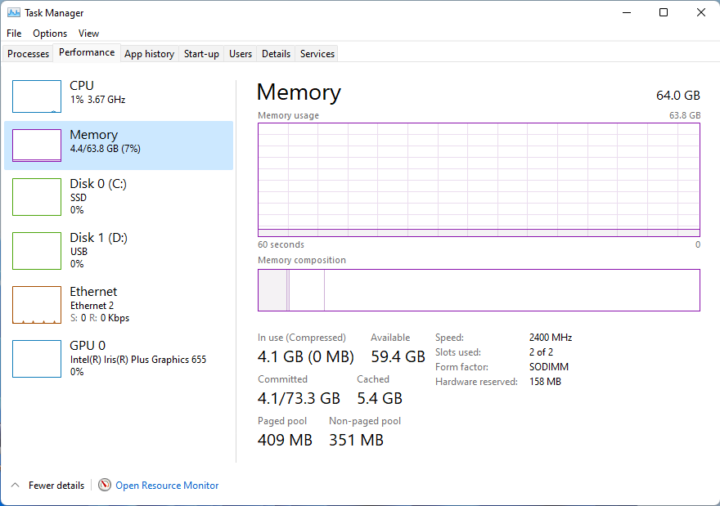

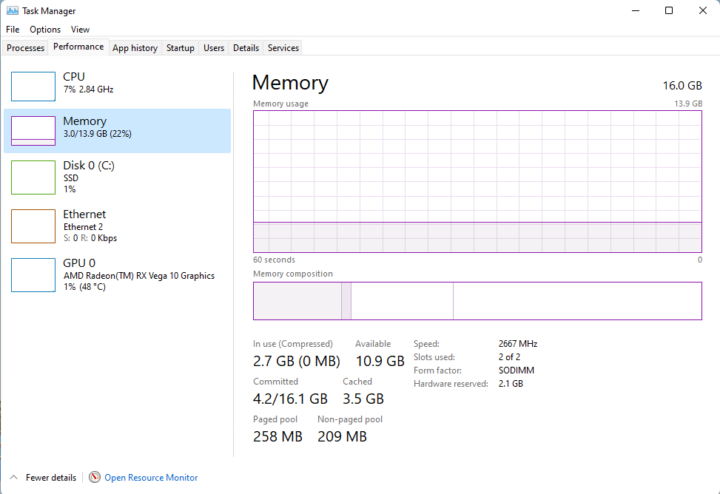

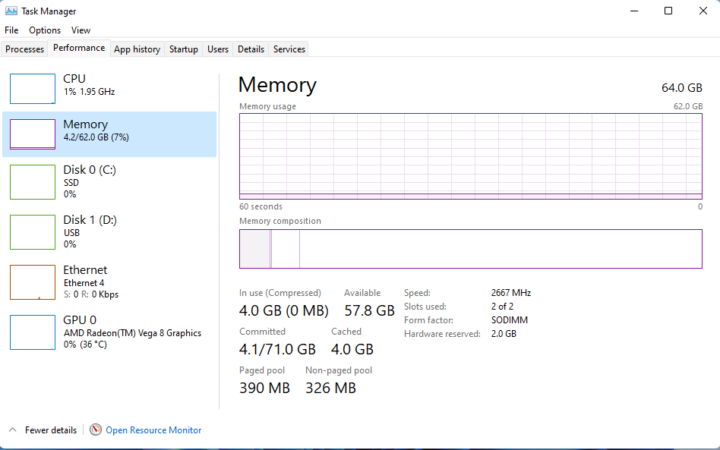

So for the Intel devices, the memory ran at 2400 MHz:

and for the AMD devices, it ran at 2666 MHz:

noting that the DDR4-3200 memory runs at CAS latency 19 when clocked at 2666 MHz:

For the additional testing on the AMD SER3 device the memory was not overclocked and was run at 2400 MHz.
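To put the memory speeds above in context, here is a minimal sketch of the usual back-of-the-envelope arithmetic. It assumes the "MHz" figures quoted are the DDR4 transfer rate in MT/s (so DDR4-2400 has a 1200 MHz clock) and a 64-bit bus per channel. The function names are my own, and the results are theoretical peaks, not measured values.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, channels: int,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = bus_width_bits // 8  # 8 bytes on a 64-bit channel
    return transfer_rate_mts * 1_000_000 * bytes_per_transfer * channels / 1e9


def cas_latency_ns(cl_cycles: int, transfer_rate_mts: int) -> float:
    """CAS latency in nanoseconds; the DDR clock is half the transfer rate."""
    clock_mhz = transfer_rate_mts / 2
    return cl_cycles / clock_mhz * 1000


if __name__ == "__main__":
    # Configurations mentioned in the article: (transfer rate, channels)
    for rate, channels in [(2400, 1), (2400, 2), (2666, 2)]:
        print(f"DDR4-{rate} x{channels} channel(s): "
              f"{peak_bandwidth_gbs(rate, channels):.1f} GB/s peak")
    # The DDR4-3200 CL22 kit at its rated speed versus CL19 at 2666
    print(f"CL22 @ 3200: {cas_latency_ns(22, 3200):.2f} ns")
    print(f"CL19 @ 2666: {cas_latency_ns(19, 2666):.2f} ns")
```

By this arithmetic, the step from 2400 to 2666 adds roughly 11% to the theoretical peak, while going from single-channel to dual-channel doubles it.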

Software

Fresh installations of each OS were performed on each device and updated to the latest versions and then benchmarking software was installed. Additionally ‘RyzenAdj’ was installed on the AMD devices to configure the power limits.

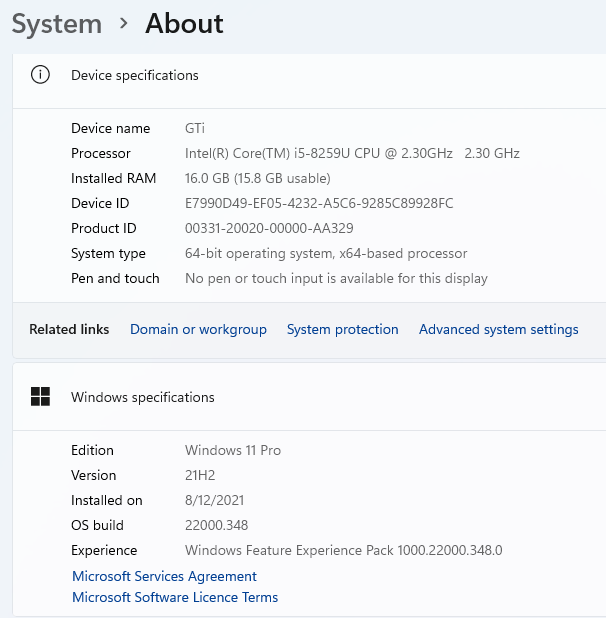

For Windows, Windows 11 Pro Version 21H2 build 22000.348 was used on each mini PC:

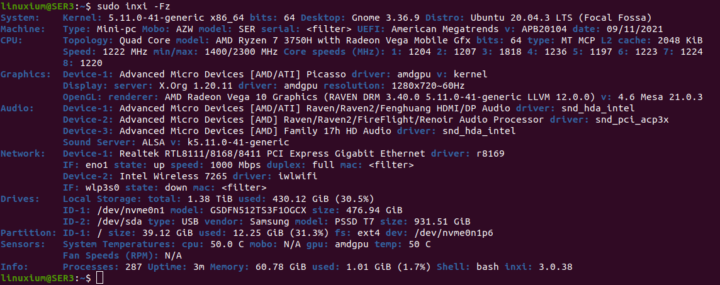

and for Ubuntu, Ubuntu 20.04.3 with the 5.11.0-41-generic kernel was used:

For the additional testing, the latest updates resulted in upgrading Windows to build 22000.376 and the Ubuntu kernel to 5.11.0-43-generic.

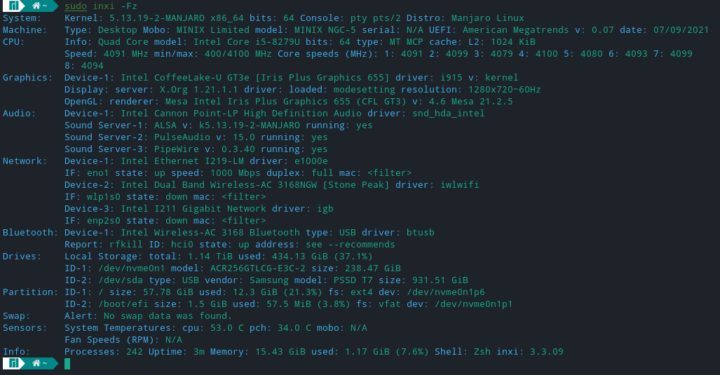

Then for Manjaro, Manjaro 21 KDE Plasma was used. However, as Manjaro is a rolling release, the exact version differed between rounds of testing. For the first round of testing on the Intel NGC-5 and AMD SER3 mini PCs, Manjaro 21.1.6 with the 5.13.19-2 kernel was used:

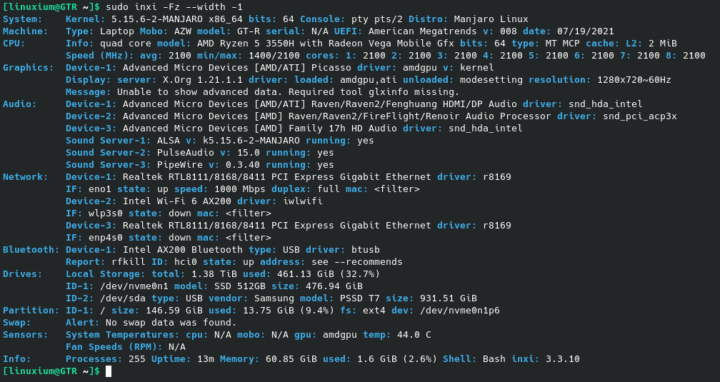

and for the second round of testing on the Intel GTi and AMD GT-R, Manjaro 21.2rc1 with the 5.15.6-2 kernel was used:

I also confirmed that the change in release point and kernels did not appear to influence the results by briefly running some additional benchmark checks.

Finally, Valve’s Steam and Unigine’s Heaven were installed and used for testing, together with FPS monitoring software: MSI Afterburner with RivaTuner Statistics Server on Windows and MangoHud on Linux.

Systems Configuration

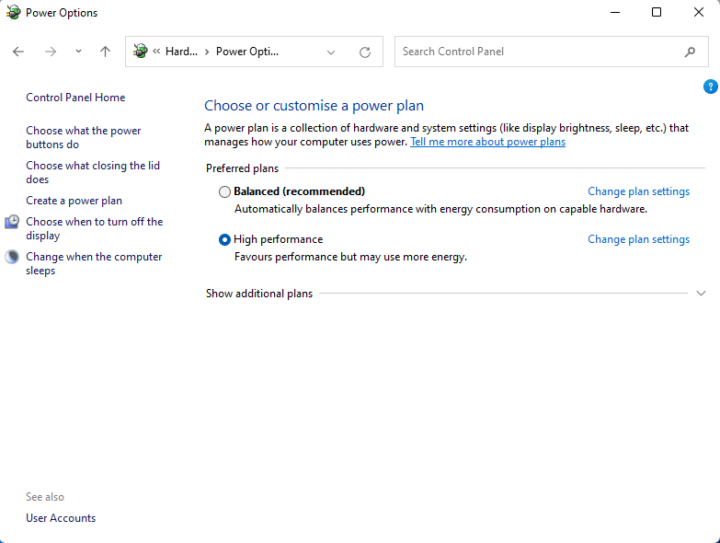

On Windows the power plan was set to ‘High performance’ on each device:

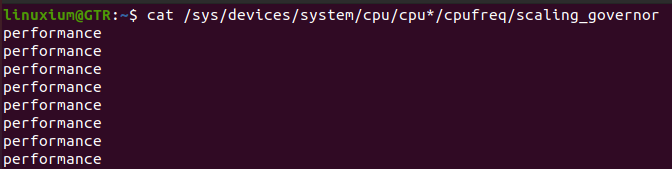

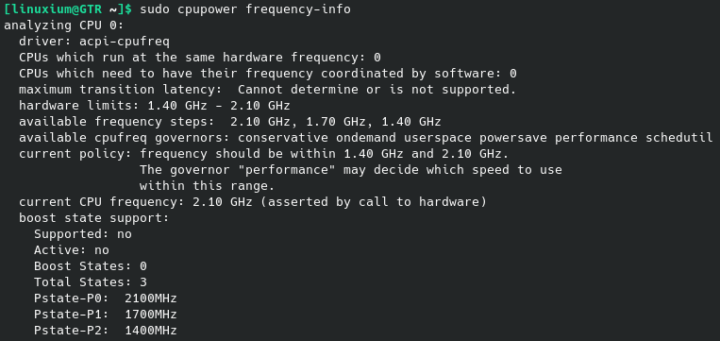

and similarly, on Ubuntu and Manjaro the CPU Scaling Governor was set to ‘performance’:

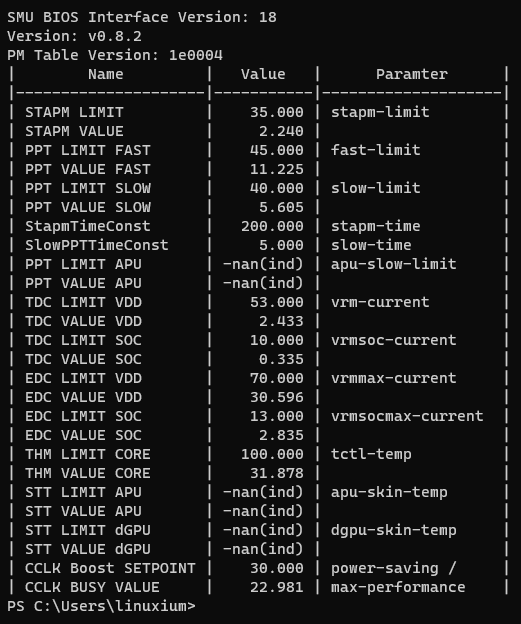

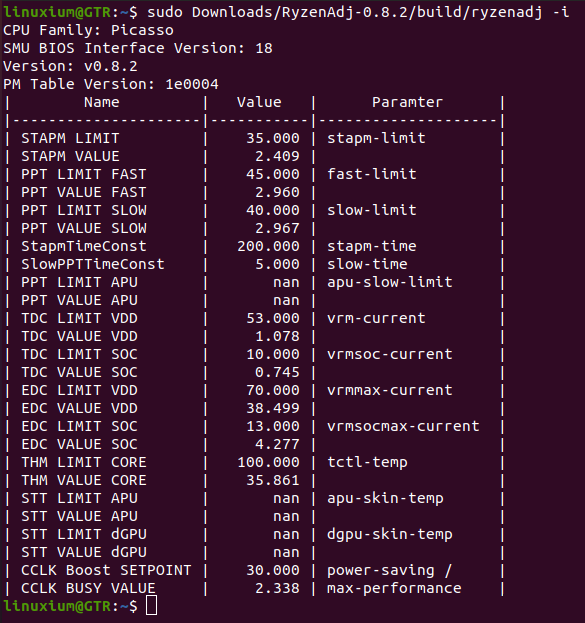

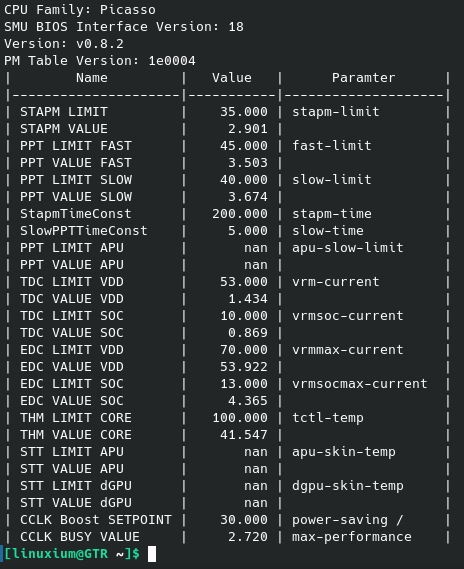

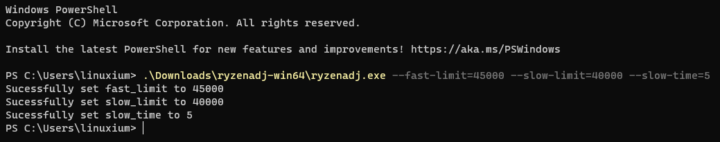
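As a quick way to confirm the governor setting on Linux before a benchmark run, the following is a minimal sketch that reads the standard cpufreq sysfs files. The helper names are my own. It assumes the kernel exposes `scaling_governor` under `/sys/devices/system/cpu/cpuN/cpufreq/`, which is the usual location when a cpufreq driver is loaded.

```python
from pathlib import Path
from typing import Dict


def read_governors(cpu_root: str = "/sys/devices/system/cpu") -> Dict[str, str]:
    """Return a mapping such as {'cpu0': 'performance', ...} from sysfs."""
    governors = {}
    # cpu[0-9]* skips sibling directories such as 'cpufreq' and 'cpuidle'
    pattern = "cpu[0-9]*/cpufreq/scaling_governor"
    for path in sorted(Path(cpu_root).glob(pattern)):
        governors[path.parent.parent.name] = path.read_text().strip()
    return governors


def all_performance(governors: Dict[str, str]) -> bool:
    """True only if at least one CPU was found and all use 'performance'."""
    return bool(governors) and all(g == "performance" for g in governors.values())


if __name__ == "__main__":
    found = read_governors()
    for cpu, governor in found.items():
        print(f"{cpu}: {governor}")
    print("ready to benchmark" if all_performance(found) else "governor not set")
```

Checking every core, not just `cpu0`, guards against a governor change that was applied to only some of them.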

On both the AMD devices ‘RyzenAdj’ was used to set the Actual Power Limit (PPT Limit Fast) to 45W, the Average Power Limit (PPT Limit Slow) to 40W and the Slow PPT Constant Time (SlowPPTTimeConst) to 5 seconds:

for each OS:

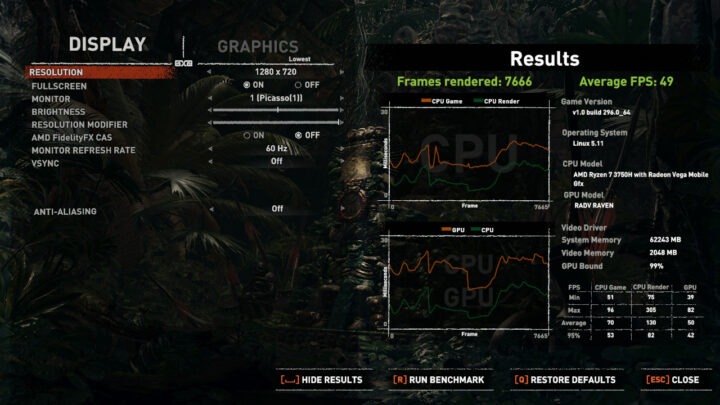

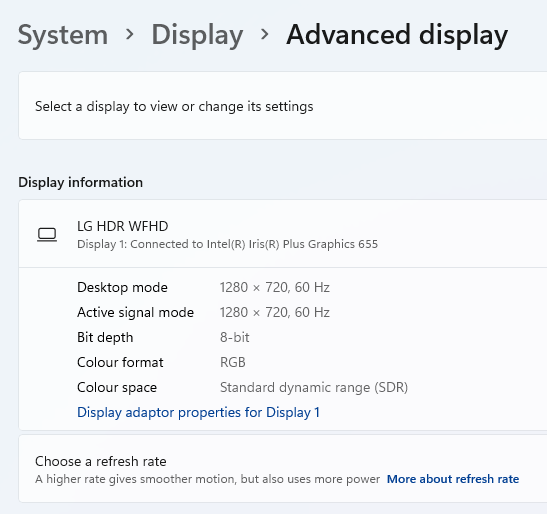

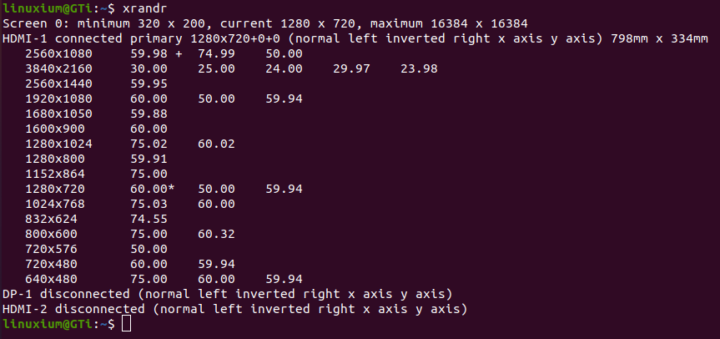
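For anyone wanting to reproduce the power limits, here is a minimal sketch that builds a RyzenAdj command line from the values above. The exact command used in testing is not given in this article, so this is an assumption on my part: it relies on RyzenAdj's `--fast-limit`, `--slow-limit` and `--slow-time` options, with the power limits expressed in milliwatts, and it is worth checking `ryzenadj --help` for your version. The helper name is hypothetical, and the sketch only prints the command without running it.

```python
import shlex
from typing import List


def ryzenadj_command(fast_limit_w: float, slow_limit_w: float,
                     slow_time_s: int, binary: str = "ryzenadj") -> List[str]:
    """Build an argument list; power limits are converted from W to mW."""
    return [
        binary,
        f"--fast-limit={int(fast_limit_w * 1000)}",   # PPT Limit Fast, in mW
        f"--slow-limit={int(slow_limit_w * 1000)}",   # PPT Limit Slow, in mW
        f"--slow-time={int(slow_time_s)}",            # Slow PPT Constant Time, in s
    ]


if __name__ == "__main__":
    # Values from the article: 45W fast, 40W slow, 5 second time constant
    command = ryzenadj_command(45, 40, 5)
    print(shlex.join(command))  # typically run with administrator/root rights
```

Building the arguments as a list keeps the values in watts in one place and avoids shell-quoting mistakes if the command is later passed to `subprocess.run`.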

Finally, the ‘Display’ resolution was set to 1280×720 on each device:

Testing Methodology

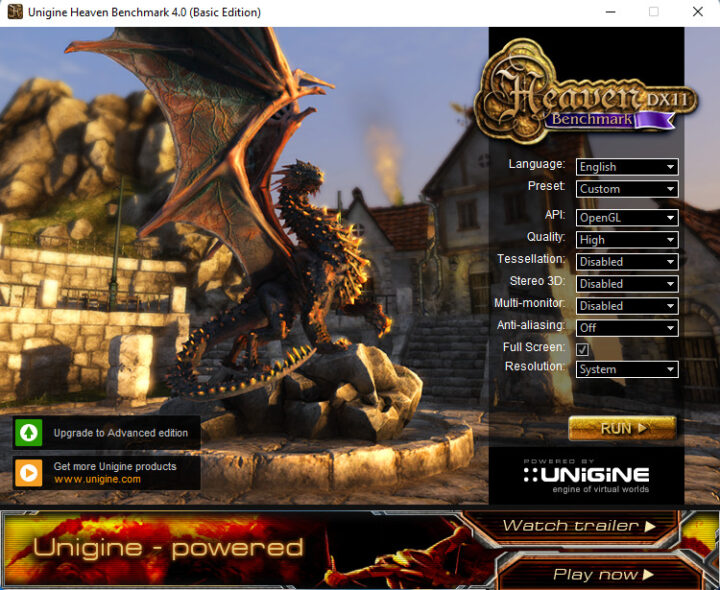

Initially, I tested several games under Steam in both Windows and Linux including Counter-Strike: Global Offensive (CS:GO), Grand Theft Auto V (GTA V), Horizon Zero Dawn (HZD), and Shadow Of The Tomb Raider (SOTTR). Whilst I noticed consistent performance in line with the conclusions below, I dropped testing CS:GO and GTA V in favor of the more consistent in-game benchmarks of HZD and SOTTR. I also added testing with Heaven using the ‘OpenGL’ API:

as this is available on both Windows and Linux and was also consistently repeatable. However, I’ve only tabulated the SOTTR and Heaven results as these sufficiently demonstrate the trends seen in all the results.

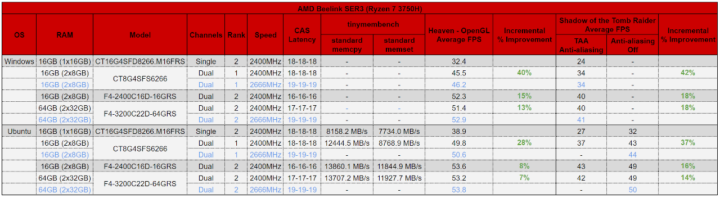

As a result of a comment from Yuri below: “Were both memory kits single-ranked or dual-ranked?” additional testing was undertaken to clarify whether the observed performance improvement on the AMD devices was a result of increasing the amount of memory or increasing the number of memory ranks.

The testing presented in the original article used 16GB as two (dual-channel) single-rank 8GB sticks (CT8G4SFS6266) and compared it with 64GB as two (dual-channel) dual-rank 32GB sticks (F4-3200C22D-64GRS) with the memory clocked at 2400MHz on the Intel devices and 2666MHz on the AMD devices.

The additional testing was performed only on the AMD SER3 device and used 16GB consisting of one (single-channel) dual-rank 16GB stick (CT16G4SFD8266.M16FRS) and 16GB consisting of two (dual-channel) dual-rank 8GB sticks (F4-2400C16D-16GRS) together with retesting the original memory. As the 16GB dual-rank sticks were only 2400MHz the additional testing was undertaken without overclocking the memory and so all the memory was clocked at 2400MHz.

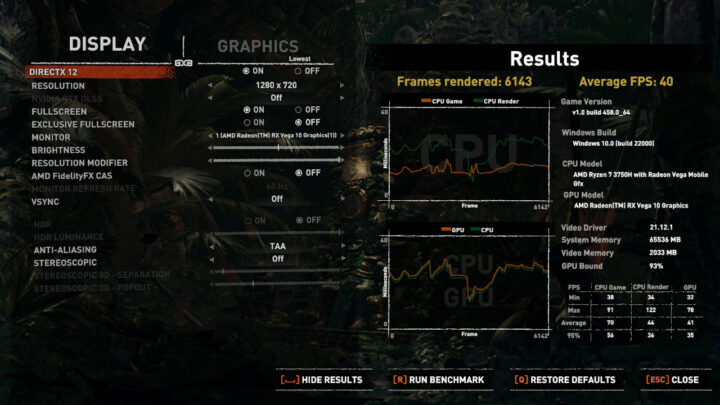

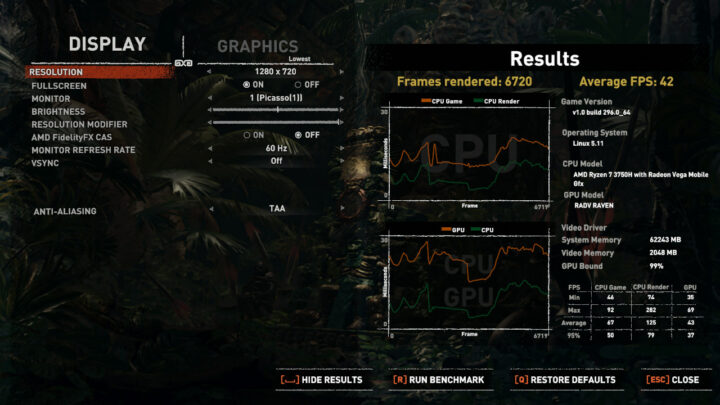

One final point concerns the SOTTR benchmark. On Windows it uses DirectX 12, and using the default settings together with the lowest graphical preset results in anti-aliasing being set to ‘TAA’:

However, on Ubuntu, the default settings turn anti-aliasing off:

So in the additional testing I’ve also included running the Ubuntu SOTTR benchmark with ‘TAA’ set for comparison purposes:

Results

Additional testing results with original testing shown in blue for comparison:

OS Observations

A direct comparison of gaming performance between Windows and Linux cannot be drawn from such limited data, and it should also be noted that some games run natively whilst others use compatibility tools like ‘Proton’. However, what was interesting is that on the Intel mini PCs SOTTR on Manjaro was much slower than on Ubuntu. This was not the case for the AMD devices, where the performance was similar using the ‘OOTB’ experience. There could well be a simple solution for this. However, it highlights a common issue in ‘gaming’ on Linux, where it often seems necessary to search for fixes just to get things to work.

Memory Observations

The most obvious impact was that increasing the memory from 16GB to 64GB on the AMD devices resulted in a noticeable improvement to FPS. The benefits appear to favor Windows more than Linux which, although lower, still saw consistent increments. Conversely, there was effectively no consequence of increasing the memory on the Intel devices with the few minor differences being within the margin of testing variance.

For the additional testing, this same noticeable improvement resulted after changing from using single-rank 16GB to using dual-rank 16GB in dual-channel mode. As expected when going from using 16GB memory installed as a single stick and therefore running in single-channel to the same amount of memory but installed as two 8GB sticks running in dual-channel there was a significant improvement to FPS.

The additional testing shows that on AMD mini PCs going from single-rank memory to dual-rank memory will also result in a noticeable improvement unlike on the Intel mini PCs where no difference was observed. Interestingly, retesting the 64GB memory resulted in very slightly lower results suggesting that the slight drop in clock speed from 2666 MHz to 2400 MHz might be responsible. The effect of CL timings has not been evaluated.

Conclusion

Gaming performance may differ between Windows and Linux so the choice of OS will likely depend on whether the desired games have ‘native’ versions or are supported by an appropriate compatibility layer.

However, increasing the memory appears to improve gaming performance on AMD mini PCs with notable FPS increments especially under Windows whereas no perceptible improvements were observed on Intel mini PCs. Whether these findings justify the extra expense of purchasing more memory is debatable. However, if you have it available it makes sense to use it.

To get the best gaming performance from AMD mini PCs dual-rank memory should be used in dual-channel mode.

Ian is interested in mini PCs and helps with reviews of mini PCs running Windows, Ubuntu and other Linux operating systems. You can follow him on Facebook or Twitter.


Interesting observations. Note an additional point I don’t see addressed here: Were both memory kits single-ranked or dual-ranked? Often, larger kits, e.g. your 64 GB kit, are dual-ranked, which can provide some performance advantage from that alone; the increased memory capacity itself may not necessarily be the main factor.

Yes, this is the real reason, not “more ram makes computer faster”

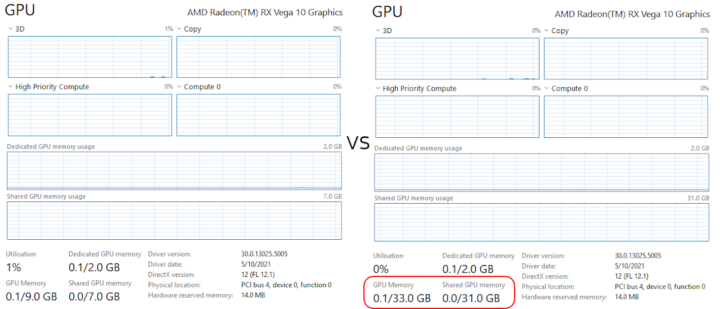

I stand corrected. Before I thought the extra RAM increased the ‘Shared GPU Memory’ and this was leveraged during rendering leading to increased FPS.

Thanks for your additional point. I will perform additional testing as outlined in my comment.

I’m confused. There’s a Q&A on stackoverflow/stackexchange saying that single-ranked dual-channel is more performant than dual-ranked dual-channel. Could you please enlighten us? Thanks.

I haven’t seen the thread, so can’t comment directly. But, generally speaking:

With a dual-ranked setup, you get improved memory bandwidth, at a (tiny) cost of latency. Performance impact is going to vary by application; games tend to benefit from the added bandwidth.

(And of course there are other factors, such as the platform/memory controller, as we can see in this article.)

The article has been updated with my additional testing which confirms the performance was the result of dual-rank memory. Thanks again for your clarification.

Very cool article

It’s a cool article to dunk on because I think Yuri nailed it. AMD APUs are known to scale well with better memory performance and dual-rank could provide that. I doubt any of the games are trying to use more than 16 GiB of memory.

If we want to be charitable, I think you’re much more likely to get dual rank on accident if you pick up a couple of 32 GiB sticks. And while it’s an overspend of perhaps $200, you’ll never have to worry about memory again in your mini PC life.

As long as you don’t plan on living too long 🙂 Memory capacity requirements go up exponentially… I think in 2001, I bought a computer with 256 MB RAM. In 2021, 8GB is quite common for a usable computer. If the trend continues, we’ll need 256-512 GB RAM in computers in 2041.

I understand there are reasons to believe the trend will stop, as for instance, 8K monitors are starting to reach the limits of the human eye. Unless in 2041, we’ll all be lying in pods wearing Metaverse headsets, in which case I reckon higher resolution should help 🙂
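The extrapolation in this comment can be checked with a little arithmetic. This is a minimal sketch that assumes steady exponential growth between the two data points quoted (256 MB in 2001, 8 GB in 2021). The function names are my own.

```python
import math


def doubling_period_years(start_mb: float, end_mb: float, years: float) -> float:
    """Years per doubling, assuming steady exponential growth."""
    return years / math.log2(end_mb / start_mb)


def extrapolate_mb(current_mb: float, years_ahead: float,
                   doubling_years: float) -> float:
    """Project capacity forward using the same doubling period."""
    return current_mb * 2 ** (years_ahead / doubling_years)


if __name__ == "__main__":
    period = doubling_period_years(256, 8 * 1024, 2021 - 2001)
    projected_gb = extrapolate_mb(8 * 1024, 2041 - 2021, period) / 1024
    print(f"doubling every {period:.1f} years -> {projected_gb:.0f} GB in 2041")
```

Going from 256 MB to 8 GB is a factor of 32, or five doublings in 20 years, so the same rate carried forward lands at the lower end of the 256-512 GB range mentioned.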

https://jcmit.net/mem2015.htm

There has been ongoing stagnation in the $/GiB pricing of memory since around 2012, which has prevented memory requirements from increasing exponentially. I expanded my 4 GiB laptops to 8 GiB around 2010. Today 8 GiB is still sufficient for many users and 16 GiB is usually more than enough (cheap Chromebooks are still packing a measly 4 GiB). I believe Windows has actually gotten better about memory usage since around Windows 8, rather than demanding further “exponential” increases.

You could easily make the case for lots more. For example, 256 GiB to hold the contents of an entire ~200 GB AAA game in memory, which is what you would want for maximum performance. It’s just unlikely until we see memory prices crash to $1/GiB and under. Which will require another round of price fixing class action lawsuits.

Ideally, we will live to see the introduction of a universal memory that can combine the density of NAND with the speed/latency of DRAM. Unfortunately, promises of universal memory have been vaporware so far.

Ian, can you please provide decode-dimms output for both SO-DIMM configs from at least one of the AMD boxes (usually part of the i2c-tools package)?

And if time permits also tinymembench output as well (single vs. dual rank should always be a trade-off between latency and bandwidth)?

Sure. I will perform additional testing as outlined in my comment.

Just added decode-dimms to sbc-bench (maybe it helps saving some time to fire up sbc-bench with each DIMM combination instead of testing individually).

Quick test with decode-dimms on 2 Mini PCs and a laptop showed appropriate details. With 4 servers it was hit-and-miss and required at least a modprobe eeprom.

I also had to ‘modprobe eeprom’ on this device. If you or anyone else is interested in the ‘decode-dimms’ output for each memory configuration tested I’ve uploaded the files to http ix.io as 3Kh8, 3Kh9, 3Kha and 3Khb

Thank you for the additional data points though I miss information about latency. 🙂

IMO a missed opportunity especially to check tasks that favour memory latency over bandwidth but I understand this is all very time-consuming…

Just in case you have some spare time left 😉 sbc-bench now writes to a continuous log so to test again through a bunch of different RAM configs it’s possible to use stuff like this from /etc/rc.local (or something similar):

[ -f /root/.bench-me ] && (/usr/local/bin/sbc-bench -c ; shutdown -h)

I did run ‘tinymembench’ for each combination of memory in the additional testing and included the bandwidth ‘memcpy’ and ‘memset’ results in the new table however for the latency test results please see actual test output files which I’ve uploaded to http ix.io as 3Kwa, 3Kwb, 3Kwc and 3Kwd (concatenate to form four URLs which if posted explicitly would get this comment blocked).

Thank you! Tried to create a table from this additional data (all the links included): https://github.com/ThomasKaiser/sbc-bench/blob/master/results/Memory-Timings.md

At the bottom why I still call it a ‘missed opportunity’ to not benchmark the difference memory latency makes (of course nothing to do with the topic of this review or ‘gaming’ in general)

It’s hard to understand ‘memset’ being lower bandwidth than ‘memcopy’, while many benchmarks (tinymembench) on different platforms/cpus and configurations can show also often (up to nearly) double bandwidth numbers for memset instructions (32bit and 64bit).

What’s the difference (lower latencies for memcopy, changed memcopy instructions or microcode, memory buffering on larger (L2/L3) caches for memcopy, etc.)?

Opportunity realised as I’ve now run ‘sbc-bench’ using the same combination of memory as in the additional testing with the results uploaded to http ix.io as 3KJy, 3KJW, 3KKb and 3KKC.

The average 7-Zip compression for each of the memory configurations respectively was 17089,16566,18508,18240, decompression was 23156,23019,22957,22953 with the totals being 20123,19792,20732,20596.

As the testing also included rerunning ‘tinymembench’ your table can also be updated with the corresponding results as there is a slight difference due to expected testing variation.

Note that the CPU temperature during system health is not reflected correctly in this latest version of ‘sbc-bench’ and I didn’t notice this until after the testing was complete.
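The 7-Zip figures quoted in this comment can be summarized with a short calculation. This is a minimal sketch using only the numbers given above. It does not assume which memory configuration each position corresponds to, and the function names are my own.

```python
# Average 7-Zip scores as quoted, one entry per memory configuration
compression = [17089, 16566, 18508, 18240]
decompression = [23156, 23019, 22957, 22953]
totals = [20123, 19792, 20732, 20596]


def pairwise_means(first, second):
    """Mean of the compression and decompression score per configuration."""
    return [(a + b) / 2 for a, b in zip(first, second)]


def spread_percent(values):
    """Gap between the best and worst value, relative to the worst."""
    return (max(values) - min(values)) / min(values) * 100


if __name__ == "__main__":
    for mean, total in zip(pairwise_means(compression, decompression), totals):
        print(f"mean {mean:.1f} vs reported total {total}")
    print(f"compression spread:   {spread_percent(compression):.1f}%")
    print(f"decompression spread: {spread_percent(decompression):.1f}%")
```

The reported totals sit within one point of the mean of the two scores, and the compression results vary far more across the memory configurations than the decompression results do.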

Thanks to everyone for their comments and especially to Yuri for pointing out single-ranked vs dual-ranked.

The testing above for 16GB used two (dual-channel) single-rank 8GB sticks (CT8G4SFS6266) and for 64GB used two (dual-channel) dual-rank 32GB sticks (F4-3200C22D-64GRS). The memory ran at 2400MHz on the Intel devices and 2666MHz on the AMD devices.

I propose to perform some additional testing based on using:

16GB consisting of one (single-channel) dual-rank 16GB stick (CT16G4SFD8266.M16FRS)

16GB consisting of two (dual-channel) dual-rank 8GB sticks (F4-2400C16D-16GRS)

together with the originally tested memory because the 16GB dual-rank sticks are only 2400MHz so I will test with all the memory clocked at 2400MHz.

I only plan to repeat the testing on the AMD Ryzen 7 3750H device (Beelink SER3), and just on Windows and Ubuntu using SOTTR and Heaven (OpenGL), additionally running tinymembench and decode-dimms on Ubuntu only.

This will provide results for:

16GB single-channel vs 16GB dual-channel single-rank

16GB single-channel vs 16GB dual-channel dual-rank

16GB dual-channel single-rank vs 16GB dual-channel dual-rank

16GB dual-channel single-rank vs 64GB dual-channel dual-rank

16GB dual-channel dual-rank vs 64GB dual-channel dual-rank

which should be enough to clarify where any FPS gains are derived from.
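To see which of these comparisons isolate a single variable, here is a minimal sketch that models the four memory configurations and lists what differs between each pair. The class and function names are my own, and "ranks" here means ranks per stick as described above.

```python
from itertools import combinations
from typing import List, NamedTuple


class MemConfig(NamedTuple):
    capacity_gb: int
    channels: int
    ranks: int  # ranks per stick


CONFIGS = {
    "16GB single-channel dual-rank": MemConfig(16, 1, 2),
    "16GB dual-channel single-rank": MemConfig(16, 2, 1),
    "16GB dual-channel dual-rank": MemConfig(16, 2, 2),
    "64GB dual-channel dual-rank": MemConfig(64, 2, 2),
}


def differing_fields(a: MemConfig, b: MemConfig) -> List[str]:
    """Names of the attributes whose values differ between two configs."""
    return [f for f in MemConfig._fields if getattr(a, f) != getattr(b, f)]


if __name__ == "__main__":
    for (name_a, a), (name_b, b) in combinations(CONFIGS.items(), 2):
        changed = differing_fields(a, b)
        note = "isolates one variable" if len(changed) == 1 else "confounded"
        print(f"{name_a} vs {name_b}: {', '.join(changed)} ({note})")
```

Only pairs that differ in exactly one attribute attribute an FPS change cleanly to that attribute, which is why the 16GB dual-channel single-rank versus dual-rank comparison is the key one for the rank question.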

If anyone has anything else to add I’m happy to consider within reason.

The article has been updated and additional testing confirms the FPS gains are from using dual-ranked memory.