As a former software engineer who’s mostly worked with C programming, and to a lesser extent assembler, I know in my heart that those are the two most efficient programming languages since they are so close to the hardware.

But to remove any doubts, a team of Portuguese university researchers attempted to quantify the energy efficiency of different programming languages (and of their compiler/interpreter) in a paper entitled Energy Efficiency across Programming Languages published in 2017, where they looked at the runtime, memory usage, and energy consumption of twenty-seven well-known programming languages. C is the uncontested winner here being the most efficient, while Python, which I’ll now call the polluters’ programming language :), is right at the bottom of the scale together with Perl.

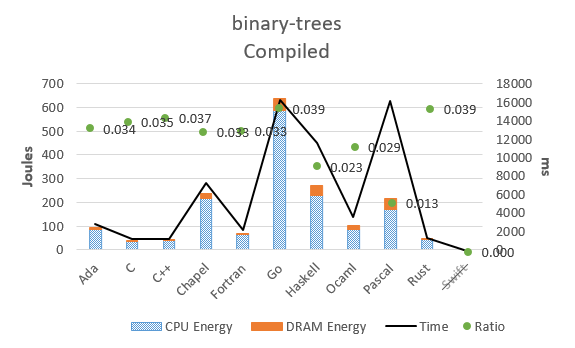

The study goes through the methodology and various benchmarks, but let’s pick the binary-trees results to illustrate the point starting with compiled code.

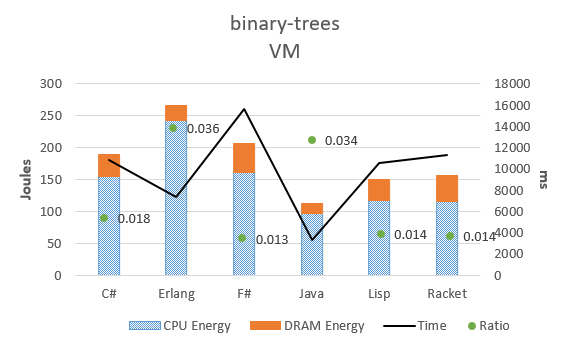

To the surprise of no one, the study concludes that “compiled languages tend to be, as expected, the fastest and most energy-efficient ones”. C and C++ are the most efficient and fastest languages. Go is the worst language in the compiled languages category, and it’s even worse than languages relying on a VM like Java or Erlang, at least with the binary-trees sample used.

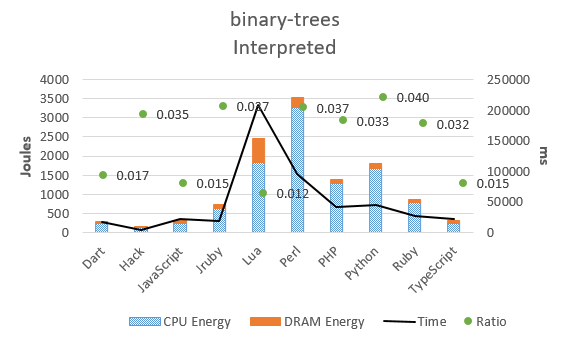

But the crown of the most inefficient languages goes to interpreted languages like Perl, Lua, or Python, and that’s by some margin.

It should be noted all tests were performed on a machine based on an Intel Core i5-4460 Haswell CPU @ 3.20GHz with 16GB of RAM, and running Ubuntu Server 16.10 operating system with Linux 4.8.0-22. Considering MicroPython is now running on a wide range of microcontrollers, I suspect it may not be as bad on those platforms with a smaller footprint, and it would be interesting to find out the difference.

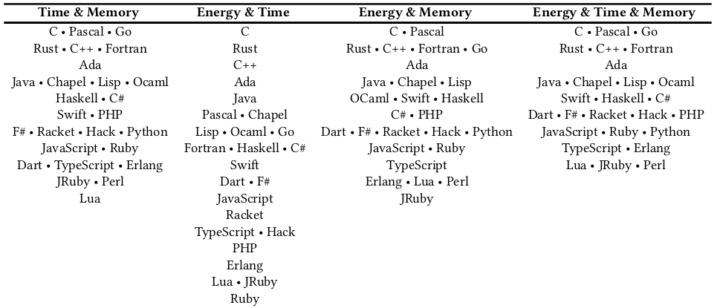

The study also ranked each language with different combinations of objectives mixing time, memory, and energy parameters, and C is always at the top with those metrics. That’s been known for years, but if you want to optimize your program for battery life/low power, some of the routines would have to be optimized in C, assembler, SIMD instructions, or custom instructions for accelerators.

Via Hackaday

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress

C is probably the most dangerous language – did the researchers factor in the amount of downtime and damage caused by badly written C that crashes?

I’m not going to claim that C is perfect or anything but whatever language you use it runs on the same machine. If the machine allows for badness it will eventually happen whether you use so called safe languages or not.

I think a lot of new languages introduce issues of their own. You might be avoiding stupid memory errors (which we have lots of tooling to find and debug now even for C) but now you’re adding a ton of untrustable code from the built in package manager. Why bother searching high and low for a memory error you need other exploits to make use of when you can just trick developers into shipping your malware in their code. In a lot of cases they won’t notice that your library they blindly imported is mining crypto, that the package repo has been compromised etc.

Bingo!

Do new languages add “a ton of untrustable code from the built in package manager”? Eh, maybe. Yes, many programs use lots of dependencies from random people with essentially no review, so malicious code is worth considering. But there are ways to address it. You don’t have to use external packages at all. More realistically, you can use packages sparingly only from trusted/vetted authors. You can (and should) check in lockfiles (which include dependency version numbers and content hashes) so that new, potentially malicious versions aren’t picked up by accident. You can vendor dependencies. There are young but promising tools like cargo-crev for shared code review. (Getting serious about cargo-crev is on my own todo list for when I’m closer to my application’s 1.0 release…)
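To make the lockfile point concrete, here is a minimal Python sketch of the idea behind content hashes: a downloaded package is accepted only if its digest matches the one pinned earlier. The function name `matches_pinned_hash` and the sample payloads are hypothetical, and real package managers implement this check themselves with their own lockfile formats.

```python
import hashlib
import hmac


def matches_pinned_hash(artifact: bytes, pinned_sha256_hex: str) -> bool:
    """Return True only if the artifact's SHA-256 digest equals the pinned one."""
    actual = hashlib.sha256(artifact).hexdigest()
    # compare_digest performs a constant-time comparison of the two strings
    return hmac.compare_digest(actual, pinned_sha256_hex.lower())


if __name__ == "__main__":
    # Hypothetical package contents, standing in for a downloaded archive
    original = b"example package contents v1.0.0"
    pinned = hashlib.sha256(original).hexdigest()  # what a lockfile would record

    tampered = b"example package contents v1.0.0 plus a crypto miner"
    print(matches_pinned_hash(original, pinned))   # accepted
    print(matches_pinned_hash(tampered, pinned))   # rejected
```

The check only proves that the content has not changed since it was pinned; it says nothing about whether the pinned version was trustworthy in the first place.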

Well that’s exactly what Linux distribution packaging systems do. They manage dependencies, so you can see which libs are used, mainly in C, sometimes in other languages with C-made libs having bindings in other languages. Happily, Python also uses CCI to reduce Python scripting waste and use more compiled code in the CPU-intensive (or other processor-intensive) parts.

“You don’t have to use external packages at all”, you must be doing your CS 101 homework. No, it’s inevitable in the industry.

Badly written code shouldn’t count into things. Trying to protect people from themselves is where a good part of those inefficiencies come from. There’s something called, “skill,” that needs to be accounted for. SERIOUSLY. I don’t want something with a safety net because it leads to devs being sloppy.

Your paper is 4, almost 5 years old. Although Python has made good strides in speed with some branches near C speeds, it’s a moot point with CPU power steadily increasing.

But its big advantage over C is that almost everyone can pick it up and use it. It is more maintainable, and runtime errors in code can be quickly located and squashed. But bad code is still bad code and will still be present.

The other advantage is speed of development vs C. Adding a new feature takes 3 or more times that of Python. So over time the speed of development vs C on monolithic apps will match the efficiency of speed and development with a lower cost over time. That’s a factor that the bean counters look at and while Python may take 5 seconds to 30+ minutes slower, upgrading the hardware or cost of a higher cloud tier is cheaper than costs of additional in-house and occasional ramp ups with contract workers that can read the code quickly and be productive faster vs a C programming environment.

Statistics such as these look good, but cost is what drives development and maintenance in the C suite. Unless raw speed is the goal, then C programming is the answer. But the need makes up a lower segment of the market today and I don’t see growth in that segment to increase in the future sadly enough. I like C myself but it no longer pays as well and makes up the minority of employment opportunities. Money talks and most programmers will graduate to it in order to better support their families and the young single ones like a wider range of choices to blow their money on.

And as an IT Director, I have to do more with less if we can’t show the payback of both tangibles and intangibles of a project that will generate more revenue and pay the project costs within 3 years. So getting a larger budget and raises for the department is based on how we can generate revenue for the company. That’s my bottom line. We have to be a profit-generating unit, not just an expense. Otherwise the company will just outsource the department as so many others have.

You can write bad code in every language. Getting a crash because of an uncaught exception is not better. Catching it and not reacting to it correctly is even worse, because you are hiding the real error source. C programmers know to check malloc result and handle OOM. I don’t know any high level Java or C# programs that can deal with OOM. The devs silently assume mem is an infinite resource.

Also critical software in satellites, airplanes etc. is still written in C, because it is deterministic and can be proven to be correct. Every language with a garbage collector is unusable for such use cases.

It’s been a long time since compsci but I think it’s been proven that it’s impossible to prove any arbitrary complex program to be correct.

When looking for statistics on this in WAF products, you’ll notice that C is almost not represented in remotely exploitable bugs, contrary to all the horrors you can do in languages supporting evaluation or exec, such as PHP, which is by far the most feared in hosting environments.

WAF as in Web Application Firewall? Not surprised to hear C is almost not represented in WAF statistics; almost no web applications are written in C. Code that doesn’t exist is secure but maybe not so functional…

For things that are written in C/C++, about 70% of security errors are due to memory safety problems. (Strikingly, Microsoft and Chromium gave this same percentage for their respective security bugs.)

Memory-safe languages are effective at eliminating most of these errors. I particularly like Rust. In safe Rust (and I use “unsafe” in <1% of the Rust lines I write), I have memory safety for almost no runtime cost. (Why “almost”? Mostly because of bounds checking on array indexes, which the compiler doesn’t elide as often as I’d like.) YMMV, but I actually write more efficient code in Rust than in C++. In C++, I often am conservative and allocate+copy rather than risk someone misunderstanding the lifetime of a reference. In safe Rust, I am fearless.

It’s fun to read that it doesn’t exist or is not secure, because virtually *all* the products that are put at the edge and that are there to serve the ones that need a WAF are actually written in C. NGINX, Apache, Varnish, HAProxy, Lighttpd, Litespeed. Thus they are the first ones taking all the attacks and the least represented in the vuln database. And one can wonder what it would be like if they were not at the front to deal with the horrors they’re dealing with, protecting the next level…

Out of interest, what vulnerability database are you looking at?

You might be right that they’re least represented, but they’re not immune. I remember some high-profile vulnerabilities in Apache, for example.

There’s been a tremendous effort put into each line of those six http servers. They’re standard, well-respected, open-source software, some of them developed over decades, and that’s nearly a complete list of the ones folks use.

They’re completely dwarfed in line count by web application code, which again just isn’t written in C. I couldn’t list just six web apps that are all most sites use. In fact, most sites have at least some custom web application code, often thrown together by less experienced developers under deadline. How well do you think it’d go if those web apps were all written in C also?

I’m aware of at least one banking application written in C and it’s not that buggy. However it has always cost a lot in upgrades. I mean, there’s virtually no maintenance. It works, there are no moving parts, period. But when a new feature is requested, it costs more to add than in newer languages. And developers are 3 times as expensive as those you need for Java or others found in such environments.

In that case the issue is the programmer, not the language. Even badly written code vs good code can have a big impact on performance and efficiency.

When you see a self-described high-level software engineer dividing an integer by 2^n instead of using a bit shift, you understand that the issue is not the language.
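For readers unfamiliar with the trick, a minimal Python sketch of the equivalence being alluded to follows. It holds for Python integers, where floor division by 2**n and a right shift by n agree even for negative values; the helper names are hypothetical. In C the two are not interchangeable for negative signed operands (division truncates toward zero), and optimizing compilers commonly perform this substitution themselves when the divisor is a constant, so the sketch illustrates the arithmetic rather than a guaranteed speed-up.

```python
def divide_by_power_of_two(value: int, exponent: int) -> int:
    """Floor-divide value by 2**exponent using the division operator."""
    return value // (2 ** exponent)


def shift_right(value: int, exponent: int) -> int:
    """Compute the same quotient with an arithmetic right shift."""
    return value >> exponent


if __name__ == "__main__":
    # Check that both forms agree on a few positive and negative samples
    for sample in (0, 1, 7, 1024, -7, -1024):
        quotient = divide_by_power_of_two(sample, 3)
        shifted = shift_right(sample, 3)
        print(sample, quotient, shifted, quotient == shifted)
```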

Absolutely! The sad thing is that bad developers tend to restrict what they can use to what Google or StackOverflow can propose them as a starter.

What a load of shite.

I program in c++ and the universe is written in C. It does not matter if you have a Lamborghini if you don’t know how to drive. 🙂

Stop spreading bullshit, unoptimized C is many times resource intensive, error prone, less secure. You could die before making a C program behave well, smooth, green and fast.

There are a lot of better candidates like nim-lang, which is close to C in performance and as easy as Python.

Fortunately over the last 50 years there were thousands of people who had way less difficulty than you imagine and could build the whole internet that you can use today to post such uneducated messages 🙂

Seems like Rust should be the “go to” language if you want speed, efficiency and safety.

No, because it’s got complex constructs that are hard to follow, etc. The problem with Rust is that it’s trying to do the same things that the latest C++ standards are doing coupled with what Haskell does well (The efficiency in Rust comes at other prices)- with its own new syntax and ROE that do not give you the same things.

So far I have never seen a piece of software in Rust that was as fast as the C version. And they generally consume a lot of HDD space, due to the bad habit of statically linking all the libs, with the security issues and compilation waste that come with it when an update is needed.

I agree, we should demand speed, efficiency and safety… but you misspelled Ada

That’s what some say, but finding developers who are able to decipher that smiley-based language is not easy yet.

The low energy consumption of C/C++ only became relevant when programs moved from client side to provider/cloud … because only then the provider had to pay himself for the energy consumed. That caused a rise in popularity of C/C++ in the past 1-2 decades.

It actually came into play much earlier on. The notion that C/C++ wasn’t popular prior to this and all is a silly notion out of box. You just came late to the party. That’s what over 35 years in the industry brings you. Comprehension. Of a lot of things.

The grandparent simply does not have the same viewpoint of the battlefield that you do. Pull your head in just a bit.

Let me check your comprehension of my comprehension: I did my first C programming in 1988 or so. Pascal before that. And assembly before that (1985).

You mean compared to PL/1, assembly or COBOL maybe ? Because C was the language the systems and tools were written with by then. When communication tools started to appear with the growth of the internet, C was used to write most applications. Mail clients & servers, IRC clients and daemons, browsers & web servers, news readers & servers, and of course, all CGI applications were all written in C because the above languages weren’t made for this. Perl started to displace C for CGI applications as it was easier (and trivially exploitable most of the time due to unreadable code full of badly approximative regex). Then Java appeared and was long considered a client-side language until it shockingly started to appear on the server side in 1999-2000, when RAM started to become cheap. PHP replaced Perl and Java replaced C on server-side applications, just due to greater accessibility. So no, I wouldn’t say that C became more popular in the last 20 years. It just hasn’t disappeared because it’s ultimately the only transparent interface to the processor, and other languages are written on top of it, so those looking for efficiency finally make the effort of learning it.

C/C++ was king in the 1990´s, but then came Java as absolute king in 2002, and then C rose again in late 2000´s.

I haven’t read the whole thing but based on your write up I’m sceptical that there is any real-world insight to be gained.

Even in the high-level VM-based languages like Java you wouldn’t be writing these micro functional blocks in the language itself as you should have decentish compiled versions of those things provided already. Otherwise what’s the point? Python is basically hot glue over C libraries in a lot of cases. I doubt it makes a huge amount of difference for a lot of use cases.

And the use case for these languages tends to be different too.. perl is probably more often used for infrequent short lived tasks so the actual energy saving from rewriting all of the perl in the world in Rust probably isn’t going to make Greta happy.

It’s somewhere between their take and yours. Seriously. It’s premature optimization for most cases, to be sure- but there’s a lot more done in Perl and Python than ought to be and I consider some of the inefficiencies they “measured” to be a lack of comprehension of the language and proceeding to implement something as if you’d do it in C. Lua’s not that inefficient, for example- you couldn’t use it in games as a scripting language, for example, if it were as “bad” as they’re describing there. Some of that you talk to- but you kind of gloss it over a bit too.

The closer you get to C in that hierarchy there in their study, the more efficient you got. This doesn’t get into the relative merits or issues (and there ARE in many of them) of the various languages. They just proceeded to implement <X> and then tested it, without contemplation of the features of the languages in question. After having learned, and professionally used over 20 programming languages over the 35+ years in the industry, there’s good choices, good fits and poor ones. Both in terms of developer efficiency, system efficiency, scale out, etc. It’s a bit of an indication of a likely poor execution of the whole thing when you see the thing done as it was here.

In terms of efficiency in CPU and power consumption while balancing off things like verbosity and complexity in coding with Assembly, Forth beats C. So, does that lead to using it? Heh. That’s a BIG, “it depends,” thing. I’d go there. Some devs? Oh, H*LL, NO! They lack the discipline and seem to think Rust is God’s gift to programming. They seem to feel the need to have safety nets that let them just be sloppy. Esp. at the embedded systems levels.

It’s about design trade-offs, folks. It’s another metric and nothing more. And I see this as needing more study. It highlights the need to think about it in something resembling the study’s attempt- but I see it as flawed. Like everything, you have to get the methodology right…and I see issues with what they did and how. Some of the rest of us see some of it too.

>inefficiencies they “measured” to be a lack of comprehension of the language

Or they knew that but the findings would be much less *interesting* if they benchmarked a full real world app written in C against basically the same app implemented as Python glue over mostly the same C libraries.

Actually, one of the problems is that people tend to either consider the micro-benchmark case or the big picture, but not how time is spent in applications. The reality is that most extremely slow applications suffer from either:

Very often applications are considered sufficiently optimized when unit tests show acceptable wait time. But 100ms to compute a shortest path between multiple geolocated points might seem nothing for a developer testing his application, however it’s terrible if this needs to be done often in an application server because you won’t be able to do it more than 10 times a second. And when you build a site that grows, you don’t have the option of rewriting the application, so instead you scale horizontally by adding servers. Just for a shitty function that cannot run efficiently in your language. In this case it’s very common to conclude “that language is shitty”, let’s switch to another one that will run more efficiently on such algorithm. But the real problem is the lack of application design. If scripting languages were only used as a glue between low-level functions they wouldn’t be that bad. It just turns out that many developers don’t know lower level languages anymore or don’t trust them and will prefer to use their high-level scripting languages as the language for everything, and rely on the interpreter’s optimizer, just-in-time compiler and whatever to partially compensate for their misdesign.
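The arithmetic behind the “10 times a second” figure can be sketched in a few lines of Python. This assumes one request is handled at a time per worker, and the target load of 500 requests per second and the 1 ms alternative are made-up numbers used only for illustration.

```python
import math


def max_requests_per_second(latency_ms: float, workers: int = 1) -> float:
    """Upper bound on throughput when each worker handles one request at a time."""
    return workers * 1000.0 / latency_ms


def workers_needed(target_rps: float, latency_ms: float) -> int:
    """Number of sequential workers required to sustain the target request rate."""
    return math.ceil(target_rps / max_requests_per_second(latency_ms))


if __name__ == "__main__":
    # 100 ms per call caps a single worker at 10 calls per second
    print(max_requests_per_second(100))
    # Hypothetical load of 500 requests per second at 100 ms versus 1 ms per call
    print(workers_needed(500, 100), workers_needed(500, 1))
```

Under these assumptions the slow function needs fifty workers where the fast one needs a single worker, which is the horizontal scaling cost the comment describes.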

As I always say, silicon is cheaper than humans, and as long as this will remain true we won’t get out of this situation. If only electricity became 100 times more expensive, suddenly we’d see a boost in efficiency in a lot of programs (starting with browsers).

I tend to agree. We have always done UI in VB because it’s painless, and everything else in C or assembler.

That’s the best approach, but it’s quite rare because it requires more skills. Congrats for this 🙂

We once had a system with the real time part written in Verilog (FPGA) and C (DSP), then a level of C code on the x86 PC for basic operations, and the UI was VB with a COM link to Python for easily customized program logic. It all worked pretty well.

Informative article

Thanks

What about .net core 5?

I am well aware of how much heating javascript causes, I can close tabs in my browser for the fan to stop and have many 100MB’s released each time. Javascript’s natural habitat is the web browsers and html needs to be rendered at the end. We need a richer set of default web controls and frameworks renderable by the OS in the browser so that the endless javascript frameworks and abstractions are not needed as much.

> in a paper entitled Energy Efficiency across Programming Languages published in 2017

The group published an update in 2021: http://repositorium.uminho.pt/bitstream/1822/69044/1/paper.pdf

Many thanks for that. All this is quite close to the area of my PhD research, and I wasn’t aware of the 2021 paper

I took the data from the tables given in the paper and made some additional evaluations based on the geometric mean of the time data normalized to C, see http://software.rochus-keller.ch/Ranking_programming_languages_by_energy_efficiency_evaluation.ods.

Interestingly I get a different order than the one in Table 4 of the paper. The paper seems to have used arithmetic instead of geometric means, which is questionable given http://ece.uprm.edu/~nayda/Courses/Icom5047F06/Papers/paper4.pdf.
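A small Python sketch shows how the choice of mean can flip a ranking when results are normalized to C. The two languages and their ratios below are invented for illustration and are not taken from the paper; only `statistics.mean` and `statistics.geometric_mean` (Python 3.8+) are real library calls.

```python
from statistics import geometric_mean, mean

# Hypothetical run times normalized to C (C = 1.0) on three benchmarks
normalized_times = {
    "lang_a": [1.0, 1.0, 10.0],  # matches C twice, one large outlier
    "lang_b": [3.0, 3.0, 3.0],   # consistently three times slower than C
}


def rank_by(summary, results):
    """Return language names ordered from best (lowest) to worst summary value."""
    return sorted(results, key=lambda name: summary(results[name]))


if __name__ == "__main__":
    for name, ratios in normalized_times.items():
        print(name, mean(ratios), geometric_mean(ratios))
    print("arithmetic ranking:", rank_by(mean, normalized_times))
    print("geometric ranking:", rank_by(geometric_mean, normalized_times))
```

With these made-up numbers the arithmetic mean puts lang_b first, because it is dominated by the single outlier, while the geometric mean puts lang_a first.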

but this means killing of poetry… as latin, as Perl…

How did Go use so much CPU?

There is something wrong there because every script I did in Go had good CPU and memory usage.

Compared to what?

So is Rust’s, there have been discoveries of rather substantial improvements to such common algorithms, but they are not so obvious and I doubt that they are all in there.

As for Go, this is often cited: https://blog.discord.com/why-discord-is-switching-from-go-to-rust-a190bbca2b1f

You probably mean “good enough for you”. For plenty of tasks it’s generally better than interpreted languages, but I’ve seen some people use it for network tools or log processing and it lacked at least one digit compared to regular C code.

I wrote a milter to subsample bounce messages based on MySQL settings and fed the result to a ruby program. The milter was written in C with pthreads and never failed or crashed or ran out of memory. The ruby program had to use a sleep of one second to prevent it from crashing due to threading issues. Both programs were multi-threaded. I agree that bad programming practices can doom any project. But, when well written, C will beat them all.

Waouh, that means low level programming languages are more efficient than higher level ones…just kind of wheel re-discovery uh… Nothing about assembly language which should relegate C and C++ to the summits of inefficiency, and why not go to hexa code or directly in binary…good luck to program even if only a simple compression software. Maybe you should consider only the global cost of development, which is currently the only items all companies are focusing on. Yeah, develop a complex software in C, at the good level of security and user friendly, will be 5 times longer than in python. Nevertheless, it remains that C, and C++ are really good schools to learn and understand how software and hardware are closely related and sweat a lot with memory and stack management.

They didn’t look at Smalltalk, which they should have. Also, the OS used is a significant factor.

Windows, for example, is forever indexing all of the time. Updates are measured in gigabytes.

Hundreds of processes are going on all of the time. Applications are not self-contained and use resources from all over the place. Installing is an elaborate process when simply copying a self-contained application onto the computer as a simple file would be a much more practical alternative taking a few seconds rather than rebooting a machine many gigabytes in size and trying to resolve thousands of potential conflicts which are not always successful. Also, there is no doubt that reorganizing, diagnosing, and repairing the machine while it is running is a nutty solution compared to allowing static updates and installing/deinstalling before the OS is even started.

A key point about this study: it’s not a direct comparison of programming languages. It’s a comparison of implementations of The Computer Language Benchmarks Game challenges in a variety of programming languages. Some of the implementations are painstakingly optimized with SIMD and sophisticated algorithms. Some aren’t. It’s benchmarking N different programs written in M languages (where M<N) that satisfy a given challenge, not the same program ported to N languages. Which languages have high-quality implementations might say more about how obsessed a given language’s community is with this particular set of benchmarks and rules than the actual efficiency of the language in question.

For example, look at the difference between JavaScript and TypeScript on fannkuch-redux. Wow, JavaScript is an order of magnitude more energy-efficient than TypeScript! But…that doesn’t make sense. You can just “cp fast-solution.js fast-solution.ts” and that difference evaporates. Any valid JavaScript program is a valid TypeScript program; the type annotations are optional, and it’s ultimately compiled by the same JavaScript compiler anyway.

(That said, in general the ranking matches my intuition about which languages are fast, and I feel good that my favorite language Rust did quite well. 😉 )

The usual ‘casual benchmarking: you benchmark A, but actually measure B, and conclude you’ve measured C’ 🙂

Oh yes, let’s reinvent the Erlang/OTP in C just to save CPU cycles (I bet that won’t be the case). Let’s waste millions of hours of programmers’ life, just to make a point.

Can you read graphs? According to the results you present, Go is less energy efficient than some “interpreted languages” like Dart, Hack, JavaScript and TypeScript (which all use JIT compilers, so I don’t know why they are classified as “interpreted”).

Also, please note that the fastest C, C++, Rust, and Pascal programs (at least) use a custom bump allocator, whereas the others use the standard memory management facilities of their language. While this might or might not be an acceptable choice for the problem, it is certainly abnormal, and doesn’t “generally” work.

A post that makes an environmentalist statement about computer energy consumption, but then ends by requesting donations via cryptocurrencies, which are the number one contributor to energy crises in the IT world.

Sorry, but this is ignorant of how Python is actually used. The compute-intensive parts are written in compiled languages, so the differences in power consumption are much less. The data are from irrelevant benchmarks in which an entire compute-intensive problem is coded in Python. No actual professional would do that.
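As a rough illustration of that point, the Python sketch below compares a loop executed by the interpreter with the built-in `sum()`, which CPython implements in C. The function names `loop_sum` and `builtin_sum` are hypothetical, the input size is arbitrary, and the timings depend on the machine and interpreter, so no particular ratio should be read into it.

```python
import timeit


def loop_sum(values):
    """Add the numbers one by one in interpreted Python bytecode."""
    total = 0
    for value in values:
        total += value
    return total


def builtin_sum(values):
    """Delegate the same work to the built-in sum(), implemented in C in CPython."""
    return sum(values)


if __name__ == "__main__":
    data = list(range(100_000))
    # Both functions compute the same result; only where the loop runs differs
    assert loop_sum(data) == builtin_sum(data)
    loop_time = timeit.timeit(lambda: loop_sum(data), number=20)
    builtin_time = timeit.timeit(lambda: builtin_sum(data), number=20)
    print(f"interpreted loop: {loop_time:.4f} s, built-in sum: {builtin_time:.4f} s")
```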

> The data are from irrelevant benchmarks in which an entire compute-intensive problem is coded in Python. No actual professional would do that.

I’m sorry but I’m seeing that all the time. A lot of admins use it to process logs or transform data, and they adapt to the sluggishness to the point of finding it totally normal to wait 1 minute to average response times in 1 GB of HTTP logs or count the status codes, because, well, “for sure it should take time, it’s 1 GB after all, that’s 16 MB per second, wow!”. And this is not going to change anytime soon because it’s being taught in schools as a programming language instead of a scripting language.

Most people consider fast a program that counts faster than they can. It’s natural. But it’s forgetting that hardware performance has grown by more than one million over the last 40 years and that it would be about time to think differently and benefit from these improvements for everything and not just games or coding speed.

Who is this Frank Earl guy who’s so high on his own farts and 35 years of experience sucking codex?

Probably one who, like many of us here, started with real computers at a time when virtual machines were not even a name nor concept, and when you would declare DATA statements in BASIC to place native machine code for the stuff that you wanted to be fast, writing that code in assembly under the DEBUG utility, carefully copying the bytes, and calling them using CALL VARPTR(foo)… Yeah, old times. At least by then savings were measurable to the naked eye (or ear when you wanted to make sounds on a crappy buzzer).

Virtual machines like Pascal p-code didn’t exist then? Or even SWEET16 for Apple/Wozniak’s Integer BASIC?

Ahhh yes machine code in data statements.. thanks for reminding me. Those were the days 🙂

FYI the language is called assembly, not assembler. An assembler is a tool that translates assembly into machine code.

LOL, the author of MASM32 made this very mistake.

But in many use-cases, code in Python/Perl is just some glue/wrapper calls around libraries where the most critical parts are written in C (e.g. numpy…). Besides, remember Greenspun’s tenth rule: “Any sufficiently complicated C or Fortran program contains an ad hoc, informally-specified, bug-ridden, slow implementation of half of Common Lisp”.

I find declaring variable types exhausting. While I agree with everything in the article about C and similar languages being faster because they are closer to the hardware, I just feel like the amounts of lost electricity are too minuscule. I couldn’t do many of the tasks that I need to do as a Linux server administrator and internet marketer without Perl and Python. I use the Python module scrapy and selenium webdriver (with chromedriver) to perform so many daily tasks it’s scary to even think about doing that stuff in something I’d have to compile every time I wanna run it!

I do envy you though as I could never wrap my brain around x86 assembler further than a “hello world” app. My brain just won’t allow me to understand the documentation even when it’s in crayon!

> I just feel like the amounts of lost electricity are too minuscule

Per user this is often true. But when you start to pack all that code into tens of VMs running on real hardware that fills datacenters, I can assure you that’s not the case. I’ve met several times people who had to change to a new DC because they reached their maximum electricity capacity. And when that happens, it’s too late to think “if I had known, I’d have hired a few more developers to rewrite all that in the background and made amazing savings”.

I’m delighted to finally see a comparison by energy, I’ve long been saying I would eventually try to produce something like this! Having seen performance ratios of 6000 between C and another language in the past on some algorithms really disgusted me about what we were doing with all these joules… I remember having shown some “normal” performance levels on certain things running on very small machines (like a USB-powered gl.inet machine running a MIPS at 400 MHz), and people were asking where the cheat was because they considered it impossible given that for the same task they needed a big dual-Xeon system. Then you can’t help but think about the thousands of machines eating power in datacenters that are probably wasted with the same way of thinking!

This is the dumbest shit I read today

That’s surprising that Go underperforms. I left the community because giving good free work to bad people doesn’t make any sense to me, not because I expected the implementation to be THAT deficient. The very simple idea at the beginning was to just knock the sharp edges off of C, add a garbage collector, and implement the Hoare “communicating sequential processes” paper so that Google staff would have something like a restricted C++ to rewrite all of the Python code in after Guido had left.

You can always run your Python code in the Jython VM, though I don’t know that these language constructs will necessarily manifest the benefits evidenced by Java.

The personalities at Google veer toward passive aggression and the Bay Area is cached in my view. Someone at Google likely decided that they wanted to replace everything Guido had ever done, and hired Rob to be the bigger and better version of him with a bigger and better idea. Pissing contest and cargo cult management, nasty people. Not really a bad design, but who will work for you?

Obviously C is cheaper than Python on a single run, but to develop a C program one needs way more compile-and-test iterations than with Python, thanks to Python’s programmer-friendly language and libraries. It’s about the same as for high-performance optimization: don’t spend (CPU) time optimizing code that isn’t expensive at run time.

While this seems to be true for most developers, for me it has actually always been the opposite. Having spent countless hours trying to make a very trivial Python script serve a page and emit certain headers in HTTP responses drove me totally crazy. There are countless variants of the HTTP server, some easy to use but not extensible, and others where you don’t even understand what you have to feed them. Now I know what drives so much traffic to Stack Overflow: I spent my time reading there and blindly copy-pasting junk to see if that allowed me to progress. At the end of the day I realized that it had never taken me that long to write a trivial network server in C! My perception of such scripting languages is that it’s easy to get close to what you need, but the last mile is never reached and you have to readjust your objective to match the reality of the options offered to you.

Tesla’s autonomous driving software seems to rely mostly on C++, Java, VHDL/Verilog, and Python. (And, for web interfaces, PHP, CSS, Ruby, React, and TypeScript, with SQL for database management?)

Think efficiency comes with priorities for workflow of expert or community tasks (and their sizes and intensity on interaction for development changes) and infrastructure dependencies (drivers experiences database, surrounding acoustics recognition vs. local computation abilities with AI based hardware).

Nonetheless multiples of 30-70 for speed comparison or energy consumption differences for executables are more than expected (on 2021 hardware levels)?

The basic idea is flawed. It doesn’t figure in the extra programming effort to write efficient C. This may be far worse than the runtime gains.

It really depends. I can’t count the number of times I wrote C programs for a single use because it took less time than the difference with another language for a single run (typically data processing). And when your applications scale you value hosting costs a lot, to the point that servers can quickly outpace your developers’ costs.

Computers (including servers) make up about 1% of the world’s energy demand.

Negligible

“[…] in 2015, ICT accounted for up to 5% of global energy demand”

“By 2030, ICT is projected to account for 7% of global energy demand.”

https://arxiv.org/pdf/2011.02839.pdf

Most of the energy consumption is in the production of devices (grey energy), not in their use.

C is a low level programming language.

Python and the others are high-level, and encapsulate stuff for the programmer at a cost.

If you can afford that cost, go for it.

Yeah sure, Rust as efficient as C… I believe this as much as I believe a Wayland benchmark on Phoronix: zero.

I looked at some asm out of Rust. It’s pretty decent, really. The problem this language has is that it’s extremely strict and prevents you from doing what you want, which is also how the compiler can infer some simplifications and optimizations. So you spend 3 times more time trying to write your program in a way that pleases the compiler, which at this point makes me prefer assembly, which is way more readable than Rust for me!

Yea but you’re assuming that the programmer takes 0 seconds to write perfect code…

What about the energy needed to keep the machine running…?

Huh? That energy (when it exists) is proportional to the code’s inefficiency. When you can run your application on a sub-watt machine you don’t need to start the dual-socket octa-core at 400W for the same stuff. Just an example: my backup server is an Armada385 with 1GB of RAM. It needs to have some decent peak performance during backups but is not used the rest of the time, so that hardware is perfect. It consumes around 2W in idle. It was suggested that I use some horribly inefficient backup tools written in Go that simply cannot fit on this machine and would force me to replace it with a big x86 machine equipped with 16G of RAM just due to the resource wastage. I’m not willing to have that large a machine (possibly with a fan) running 24×7 just to upload backups once a day to a remote server, so I’m still relying on ugly tools instead. If such tools were written in a more efficient way (like rsync, chasing every single byte of memory) that wouldn’t be an issue.

The author of this article seems to personally dislike Python. At least that’s the feeling I get.

Or maybe he only had to pay the power bill to make his opinion.

As with all these environmental statements about what should be done… they completely avoid the economics behind everything… there is no way to replace our energy infrastructure with green energy today at a decent cost, and the same goes here… building and maintaining complex C/C++ software isn’t economically rational in many cases.

I would compare the current world’s economics to a criminal activity: damaging nature and the environment just for some people to live in luxury. To say nothing of the enormous world debt that, to the best of my understanding, will need to be repaid by the next 4 generations. It is said that many IT companies have moved to this trend as well. Personally I am very disappointed by the current software trend of using more .NET, Python, Java … based products; I have had quite disappointing experiences with such products.

I hope more programmers and software engineers will look at what is important to protect nature and the environment, and less at what is economical. And also think of computer and software engineering as creating something like art, not just some business to make some money.

Many of those generations ahead maintain employment structures that force them to build cookie pop-up messages and data tracking, which require attention, knowledge of ‘rebound effects’, time and energy, to make their living, because that’s the way this culture of ‘economy first’ created law?

Economics is about being rational. If powering an entire country with green energy instead of other sources costs 3 times as much, then you are 3 times poorer on average just because of being “green”. Energy prices affect every other price.

If every program were written in C/C++, the energy consumption and other resources needed to develop all those apps would increase sharply, something not taken into account in this article.

Economics is about not being stupid. Smart environmental policies should focus on striking the best balance between environment and energy cost, and the same goes for software. Higher energy prices, like we have now, will make other options arise, and we are taking steps toward making green a rational decision rather than a dumb idea.

In my opinion, the time for balancing between economy and environment passed a long time ago. Currently, we see the devastating effects of climate change, disasters, increased UV radiation from the sun, etc. If humanity does not want to live in an environment like the one presented in the recent movie Finch (2021), very strict measures are required.

The resources for developing apps in C/C++ are nothing in comparison to the resources users are required to have in order to use those apps. Just look at the old Windows XP apps: great responsiveness, limited use of RAM, storage space … If an old small XP program was, let’s say, 100 KB, nowadays a similar app could be at least a few MB. Recently I was looking at some comments claiming that an 80 MHz microcontroller does not have enough processing power to do some simple FFT and that the developer needed a 500 MHz microcontroller. Many programmers got quite lazy, etc. This also applies to web programming: huge web sites doing nothing, but requiring tremendous computer resources …

Economics, finance and laws are tools for making people do what is right. Unfortunately some misuse them.

We always do the math. You are willing to be far poorer just to be “green”?? Maybe you have enough resources to afford that… not many do.

The thing is, we always affect the environment with any action, even green energy facilities have an impact! We always strike that balance. We need to mix solar/wind/hydro facilities with nuclear. But we need the last one for now. And the EU is closing them, and they will have to reopen worse options to supply the demand (coal?) or prices will skyrocket.

For the C/C++ comparison, it would require a bigger study to determine how much energy we waste, taking into account development costs and consumers’ waste at execution. But to be honest… how much energy do current laptops use??? Very little. We have the resources to spare, and those Windows XP PCs consume far more than current hardware.

What about Julia?

There is a reason different programming languages exist. They simply serve different purposes. For example, I would never try to implement anything OS related with Python, and for the same reasons, I would never choose C for many application programming tasks either, it is simply a bad choice. Let’s consider the dictionary/Map data structure needed for application programming. Trying to implement that in a language that does not support it inherently (C) is horrendous, inefficient and buggy.

In addition, I certainly hate the fact that you are mixing up your preferences with saving-the-planet nonsense. You are making this a political issue rather than a scientific issue. As my beloved George Carlin put it once, the planet is fine, it has been here long before we arrived and it will be here after we are long gone, it is us, the humans, that will vanish from its face as the other 98.5% of species did 🙂 Don’t tell me your troubles old man, put an honest paper out that says “as an old dude, I don’t want to learn new programming languages” 🙂

IMHO the trendy architecture and frameworks are wasting far more than the language alone. Trillions of instructions are literally turned into NOP equivalent to achieve the buzzwords like cloud and microservice …

totally agreed.

Electrical power and compute resources are something humans can produce more of in an increasingly efficient and clean manner. Higher level programming languages use more of those in exchange for saving something that cannot be produced – time.

That’s not always true. When some programs become popular and make 1 billion people waste one second of their life, it’s equivalent to 30 years of a single person. And some badly written programs waste wayyyyy more than 1 second of their users’ lives during all the time the program is used. Some are more like 1 second lost per second of usage, counting several hours a day (think browsers and fat javascript frameworks that make you wait long for websites to render). If the time lost in my life due to programs written in some languages totals 1 yr every 20 yr, and that’s the same for 100 million people, should we consider that the total of 100 million person-years lost, which equals 1.2 million people killed, constitutes a crime against humanity? I mean, let’s be serious, the only reason for using a high-level language is laziness or hurry. You need something done quickly right now for your own use, that’s fine. If its performance has any impact on users, at some point it ought to be rewritten more efficiently.
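The arithmetic above can be checked with a minimal Python sketch. The 80-year lifespan is an assumption that isn't stated in the comment (it is what makes the "1.2 million people" figure come out), and the names `person_years` and `ASSUMED_LIFESPAN_YEARS` are hypothetical, chosen only for this illustration.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # average year length in seconds

def person_years(people, seconds_each):
    """Total time lost, in years, when each person loses `seconds_each` seconds."""
    return people * seconds_each / SECONDS_PER_YEAR

# 1 billion people each losing 1 second: roughly 30 years of one person's life.
one_second_each = person_years(1_000_000_000, 1)

# 100 million people each losing 1 year (per 20 years of use).
lost_person_years = 100_000_000 * 1

# Assumption: an average lifespan of 80 years (not given in the comment).
ASSUMED_LIFESPAN_YEARS = 80
equivalent_lifetimes = lost_person_years / ASSUMED_LIFESPAN_YEARS

print(f"{one_second_each:.1f} years, {equivalent_lifetimes:,.0f} lifetimes")
```

With these assumptions the first figure lands a little above 30 years and the second at about 1.25 million lifetimes, in line with the rounded numbers quoted.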

I would absolutely love to see an elaborate web application written in C. I could die happy.

As I mentioned above, I know at least one bank application rewritten in C after JS had been a terrible performance and reliability failure. It then progressively moved some parts to Java for ease of maintenance, but the parts that rarely change remain in C since there’s no need to risk introducing bugs just for fun. No need to die for this 🙂

Save the planet, don’t use computers.