As machine learning moves to microcontrollers, a trend referred to as TinyML, new tools are needed to compare different solutions. We’ve previously posted some TensorFlow Lite for Microcontrollers benchmarks (for single board computers), but a benchmarking tool specifically designed for AI inference on resource-constrained embedded systems could prove useful for obtaining consistent results and covering a wider range of use cases.

That’s exactly what MLCommons, an open engineering consortium, has done with the MLPerf Tiny Inference benchmark suite, designed to measure how quickly a trained neural network can process new data on tiny, low-power devices. The suite also includes an optional power measurement mode.

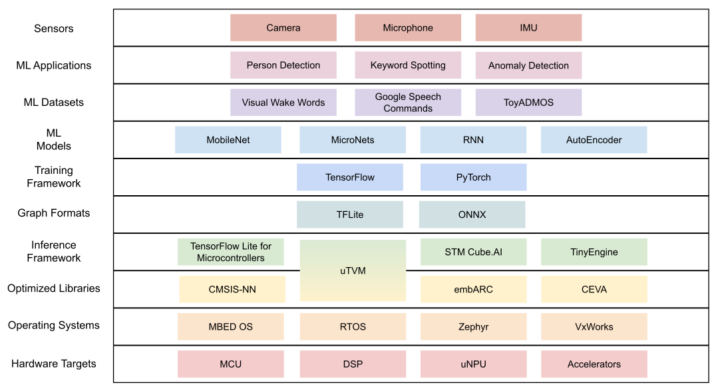

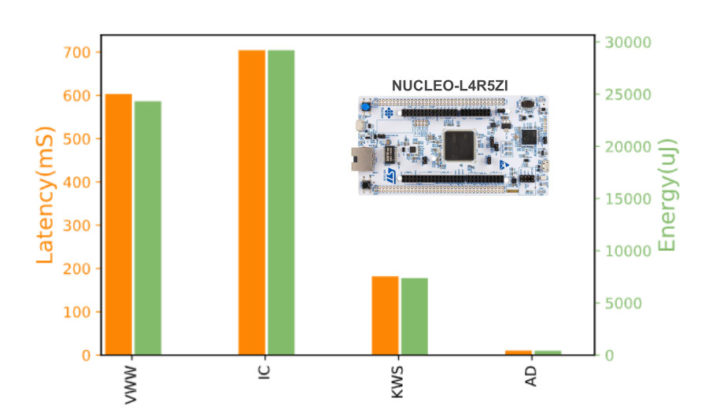

MLPerf Tiny v0.5, the organization’s first inference benchmark suite designed for embedded systems, consists of four benchmarks:

- Keyword Spotting – Small-vocabulary keyword spotting using a DS-CNN model. Typically used in smart earbuds and virtual assistants.

- Visual Wake Words – Binary image classification using a MobileNet model. In-home security monitoring is one application of this type of AI workload.

- Image Classification – Small image classification using the CIFAR-10 dataset and a ResNet model. There are many use cases in smart video recognition applications.

- Anomaly Detection – Detecting anomalies in machine operating sounds using the ToyADMOS dataset and a Deep AutoEncoder model. One use case is preventive maintenance.

MLPerf Tiny targets neural networks that are typically under 100 kB, and will rely on EEMBC’s EnergyRunner benchmark framework to connect to the system under test and measure power consumption while the benchmarks are running. This feature appears to be a work in progress.
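To give an idea of how power readings taken during a run can be turned into a comparable figure, here is a minimal sketch, assuming power is sampled at a fixed interval while the device performs a known number of back-to-back inferences. Energy is approximated as the sum of power samples multiplied by the sampling interval, then divided by the inference count. This is not EnergyRunner code or its API: the function names and the sample numbers are hypothetical and for illustration only.

```python
# Hypothetical sketch (not EnergyRunner's API): estimate energy per inference
# from power samples taken at a fixed interval during a benchmark run.

def energy_joules(power_w, dt_s):
    """Approximate energy (J) as the sum of power samples (W) times the sample interval (s)."""
    return sum(power_w) * dt_s

def energy_per_inference_uj(power_w, dt_s, n_inferences):
    """Energy per inference in microjoules, assuming the samples cover exactly n_inferences."""
    if n_inferences <= 0:
        raise ValueError("n_inferences must be positive")
    return energy_joules(power_w, dt_s) / n_inferences * 1e6

if __name__ == "__main__":
    # Made-up example: 1,000 samples of 5 mW taken at 1 kHz (one second of data)
    # while the device ran 50 inferences.
    samples = [0.005] * 1000
    print(energy_per_inference_uj(samples, 0.001, 50))
```

In this made-up example, one second at 5 mW is 5 mJ, or roughly 100 µJ per inference. Expressing results per inference rather than as average power makes a fast chip that draws more power comparable with a slower, lower-power one.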

You’ll find more details about the MLPerf Tiny benchmark in a white paper. The open-source reference implementation can be found on GitHub, and some results are posted and updated in Google Docs.

We’ll have to see if MLPerf Tiny truly becomes an industry standard, but various organizations and companies are already involved in the project, including Harvard University, EEMBC, CERN, Google, Infineon, Latent AI, ON Semiconductor, Peng Cheng Laboratories, Qualcomm, Renesas, Silicon Labs, STMicroelectronics, Synopsys, Syntiant, UCSD, and others.

Via Hackster.io and the MLCommons press release.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.
