Rockchip RK3399 and RK3328 are typically used in Chromebooks, single board computers, TV boxes, and all sorts of AIoT devices, but if you have ever wanted to build a cluster based on those processors, Firefly Cluster Server R2 leverages the company’s RK3399, RK3328, or even RK1808 NPU SoMs to bring 72 modules to a 2U rack cluster server enclosure, for a total of up to 432 Arm Cortex-A72/A53 cores, 288 GB RAM, and two 3.5-inch hard drives.

Firefly Cluster Server R2 specifications:

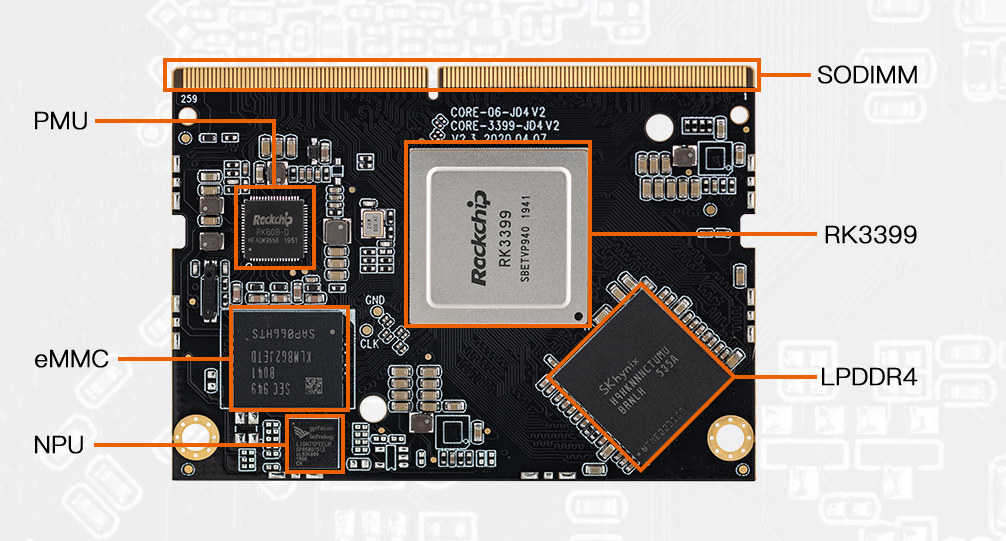

- Supported Modules

- Core-3399-JD4 with Rockchip RK3399 hexa-core Cortex-A72/A53 processor up to 1.5 GHz, up to 4GB RAM, and optional on-board 2.8 TOPS NPU (Gyrfalcon Lightspeeur SPR5801S)

- Core-3328-JD4 with Rockchip RK3328 quad-core Cortex-A53 processor up to 1.5 GHz, up to 4GB RAM

- Core-1808-JD4 with Rockchip RK1808 dual-core Cortex-A35 processor @ 1.6 GHz with integrated 3.0 TOPS NPU, up to 4GB RAM

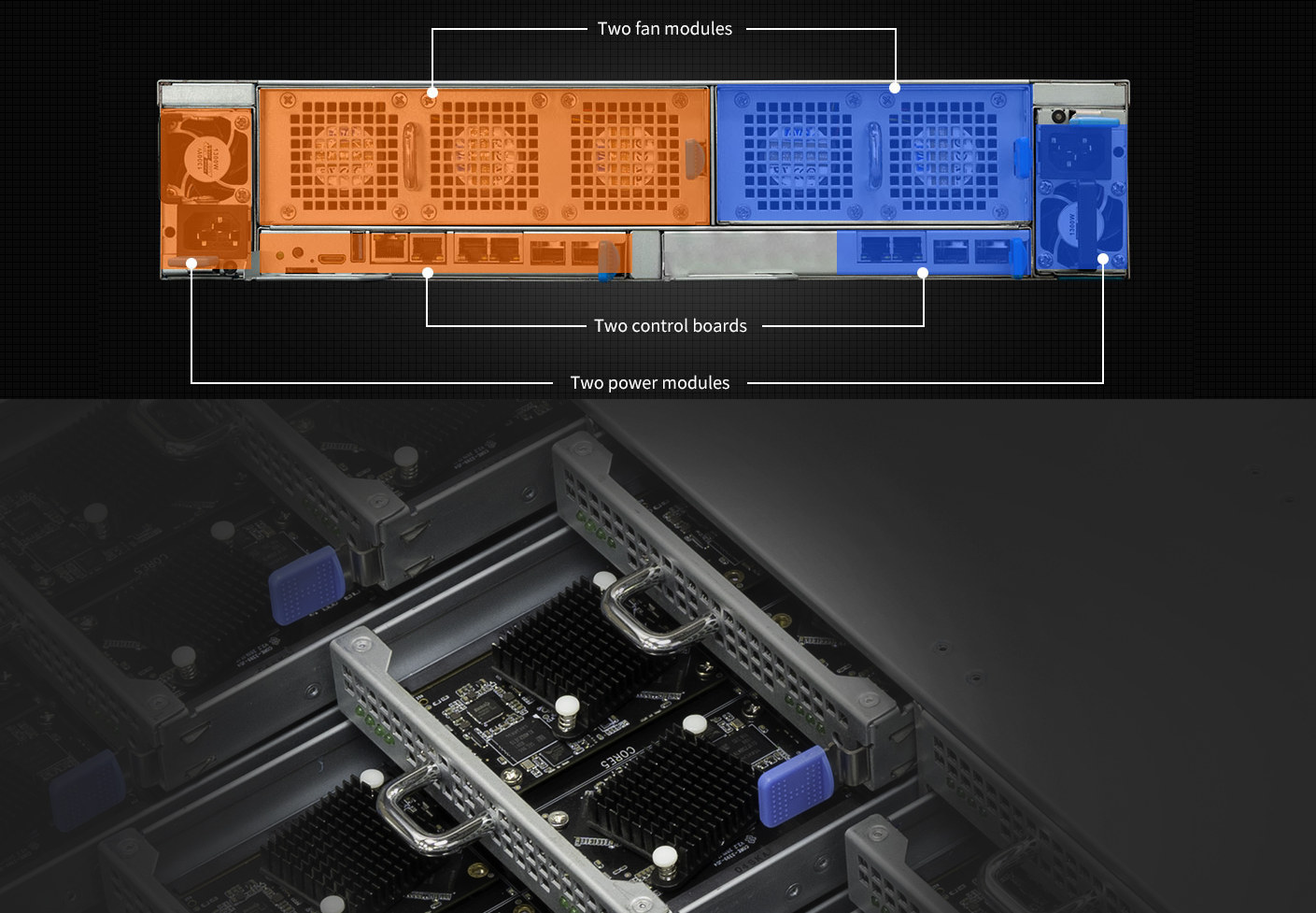

- Configuration – Up to 9x blade nodes with 8x modules each

- Storage

- 32GB eMMC flash on each module (options: 8, 16, 64, or 128 GB)

- 2x hot-swappable 3.5-inch SATA SSDs / hard disks on blade node #9

- SD card slot

- BMC module:

- Networking

- 2x Gigabit Ethernet RJ45 ports (redundant)

- 2x 10GbE SFP+ cages

- Support for network isolation, link aggregation, network load balancing and flow control.

- Video Output – Mini HDMI 2.0 up to 4Kp60 on master board

- USB – 1x USB 3.0 host

- Debugging – Console debug port

- Misc

- Indicators – 1x master board LED, 8x LEDs on each blade (one per board), 2x fan LEDs, 2x power LEDs, 2x switch module LEDs, UID LED, BMC LED

- Reset and UID buttons

- Fan modules – 3×2 redundancy fan module, 2×2 redundancy fan module

- Networking

- Power Supply – Dual-channel redundant power design: AC 100~240V 50/60Hz, 1300W / 800W optional

- Dimensions – 580 x 434 x 88.8mm (2U rack cluster server)

- Temperature Range – 0ºC – 35ºC

- Operating Humidity – 8%RH~95%RH
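The headline totals follow directly from the blade layout in the list above. As a rough sketch, the snippet below multiplies the per-module figures by 9 blades of 8 modules each. It assumes every slot holds the same module type in its maximum configuration, and that every Core-3399-JD4 carries the optional NPU; the summed TOPS figure is a naive total, not a performance claim. The `Module` class and `cluster_totals` function are names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    name: str
    cpu_cores: int
    max_ram_gb: int
    npu_tops: float  # 0.0 when the module has no NPU


BLADES = 9             # from the spec list
MODULES_PER_BLADE = 8  # from the spec list

MODULES = [
    Module("Core-3399-JD4", 6, 4, 2.8),  # assumes the optional NPU is fitted
    Module("Core-3328-JD4", 4, 4, 0.0),
    Module("Core-1808-JD4", 2, 4, 3.0),
]


def cluster_totals(module, blades=BLADES, per_blade=MODULES_PER_BLADE):
    """Totals for a chassis filled with one module type (an assumption)."""
    count = blades * per_blade
    return {
        "modules": count,
        "cpu_cores": count * module.cpu_cores,
        "ram_gb": count * module.max_ram_gb,
        "npu_tops": round(count * module.npu_tops, 1),
    }


for m in MODULES:
    print(m.name, cluster_totals(m))
```

With RK3399 modules this gives the 72 modules, 432 cores, and 288 GB RAM quoted in the introduction.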

Firefly says the cluster can run Android, Ubuntu, or some other Linux distributions. Typical use cases include “cloud phone”, virtual desktop, edge computing, cloud gaming, cloud storage, blockchain, multi-channel video decoding, app cloning, etc. When fitted with the AI accelerators, it looks similar to the SolidRun Janux GS31 Edge AI server, which is designed for real-time inference on multiple video streams for the monitoring of smart cities & infrastructure, intelligent enterprise/industrial video surveillance, object detection, recognition & classification, smart visual analysis, and more. There’s no Wiki for Cluster Server R2 just yet, but you may find some relevant information on the Wiki for an earlier generation of the cluster server.

There’s no price for Cluster Server R2, but for reference, Firefly Cluster Server R1 is currently sold for $2000 with eleven Core-3399-JD4 modules equipped with 4GB RAM and 32GB eMMC flash storage, so a complete NPU-less Cluster Server R2 system might cost around $8,000 to $10,000. Further details may be found on the product page.
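For a sense of scale, the R1 reference price can be turned into a per-module figure and compared with the estimate. The sketch below uses only the numbers quoted in this post; the straight-line extrapolation is an assumption made for comparison, not how the estimate was derived.

```python
R1_PRICE_USD = 2000   # R1 with eleven Core-3399-JD4 modules
R1_MODULES = 11
R2_MODULES = 72       # 9 blades x 8 modules
ESTIMATE_USD = (8000, 10000)  # the rough R2 estimate given in the post


def per_module_cost(total_usd, modules):
    """Whole-system price divided by module count (chassis cost included)."""
    return total_usd / modules


r1_per_module = per_module_cost(R1_PRICE_USD, R1_MODULES)
linear_r2 = r1_per_module * R2_MODULES  # naive extrapolation, an assumption
est_per_module = [per_module_cost(p, R2_MODULES) for p in ESTIMATE_USD]

print(f"R1 cost per module:        ${r1_per_module:.0f}")
print(f"R2 at R1's per-module cost: ${linear_r2:.0f}")
print(f"R2 estimate per module:    ${est_per_module[0]:.0f} to ${est_per_module[1]:.0f}")
```

The estimate works out lower per module than the R1 price, which would fit with the chassis being shared by more modules, although nothing here confirms that.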

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


their website is like a joke – can’t wait to spend 10k $ there – NOT !

Interesting design.

> up to 18 storage device in total ( 180TB storage using 10TB drives)

I don’t think so since physically impossible. The product page talks about ‘Blade node 9 can be installed with two 3.5 SATA/SSD hard disks (supports hot swag)’. Still wondering how hot swap should be possible if the HDDs are neither accessible from the front nor the back.

Also a bit disappointing is the lack of care for some details (SoC heatsink form/orientation vs. air flow for example)

From private experience: vertically mounted disk drives with a high power-on count (taller than 2U rack height) that had started having problems with head positioning (probably) behaved within spec again when positioned horizontally (maybe for a couple of weeks at most before the risk of data loss increases).

The opposite happened with a disk failing at start-up: it did not spin up horizontally, but did in a vertical position.

Diagonal positioning should be avoided for stationary disks on 3.5″ size?

(And times reformatting disks after changed positioning was on stepper motor heads positioning, considering hotswapping difficulties in the old days …)

For it, separating SoMs and disks clusters for improved cooling efficiency (one major durability/longevity factor for disk drives)?

Why talk about HDDs? The thing above allows two piles of spinning rust to be integrated somehow into the chassis, most probably routing a single PCIe lane from one of the 72 SoMs to an ASM1061 controller providing the two SATA ports. Maybe it’s even worse and the two disks are USB attached (using a JMS561 or something like that).

… article mentioned 18 hds for 9 nodes and based on diskprices analysis on data from amazon.[com, Europe] there’s 5 times lower cost /TB compared to (>7.68TB?) ssds, so for a 180TB storage $2700 or up to $15000 (for cheapest regular, low volume ssd prices)

… powerless data storage (not with server io) and data refresh powering for ssds?

> article mentioned 18 hds for 9 nodes

You can’t cram 18 hot-swappable 3.5″ HDDs in a 2U rack enclosure since the maximum is 8 on the front and 8 on the back which is 16 in total and then there’s no room left for anything that makes up this R2 blade thing. In 580 x 434 x 88.8 mm you get either 18 x 3.5″ HDDs or 9 blades + 2 switch modules.

Thx to Your explanation and http://en.t-firefly.com/themes/t-firefly/public/assets/images/product/cluster/clustersever2/6.mp4

Shown SoMs are “69.6mm × 55 mm” so one layer of 144 cpu cores are on 320x434mm (and around <12mm for heatsink mounting on printed circuit board)

3.5″ will last long like hdds are conceptionally included?

Well, picture shows 9 on the front, not 8, but by the looks of it, it is the whole blade, that is hot-pluggable, not HDDs. The handle on the front looks nothing like a handle for a light HDD, but for a heavy, 800-1000 mm long blade.

I’m fully aware that these are blades and not hot swap HDD trays. Yesterday the post claimed (and still claims in the 1st paragraph) that 18 3.5″ HDDs could be included in this R2 thing which is impossible due to space constraints alone.

And while I was wrong about 8 HDDs max on each side of a 2U enclosure (it’s 12), it’s still impossible to fit 9 such SoM blades, 2 switch modules, 2 PSUs, a BMC and an additional 18 3.5″ HDDs into such a small thing like the R2.

> 800-1000 mm long blade

To fit into an enclosure 580 mm deep? 😉

Good point. I misread that part as “9 blades can be installed with two SATA drives”. It would have been nice if the website explained a bit more about the internal design.

BTW: you mention everywhere on your blog RK3328 would be a 1.5GHz CPU which might only be true if the RK3328 device in question runs a ‘community OS’ like ayufan’s Rock64 builds, Armbian and the like.

Rockchip’s DVFS table ends at 1296 MHz but crappy tools like CPU-Z or Geekbench always only report the max. cpufreq OPP. As such RK3328 at ‘2.0 GHz’ and the real 1.3 GHz perform identically since with Android every reported cpufreq above 1.3 GHz usually is fake.

A s922X som would run cooler and use less power

Wrt a price estimate based on R1 pricing… The older 1U model most probably relies on a simple internal 16-port GbE switch IC to interconnect the 11 SoMs with 4 externally accessible RJ45 GbE ports.

On this model the costs for the switch(es) must be a lot higher. I would assume 4 and 5 blades are each connected to an own 48-port switch IC that has one 10GbE uplink port (SFP+) and exposes another GbE port via RJ45.
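The port budget behind that guess can be sketched quickly. Everything here is an assumption taken from the comment above (a 4/5 blade split, one 48-port switch IC per group, one 10GbE uplink each), plus the further assumption that each SoM uses a single Gigabit Ethernet link; none of it is confirmed by Firefly.

```python
MODULES_PER_BLADE = 8   # from the spec list
SWITCH_PORTS = 48       # assumed switch IC size (commenter's guess)
MODULE_LINK_GBPS = 1    # assumed: one GbE link per SoM
UPLINK_GBPS = 10        # one SFP+ uplink per switch (commenter's guess)


def switch_load(blades):
    """Ports used and uplink oversubscription for one switch group."""
    downlinks = blades * MODULES_PER_BLADE
    return {
        "downlinks": downlinks,
        "spare_ports": SWITCH_PORTS - downlinks,
        "oversubscription": downlinks * MODULE_LINK_GBPS / UPLINK_GBPS,
    }


for blades in (4, 5):
    print(blades, "blades:", switch_load(blades))
```

Under these assumptions both groups fit a 48-port part with room for the uplink and RJ45 port, at roughly 3:1 to 4:1 oversubscription on the 10GbE uplink.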

Strange. This brings the solution to many hundreds of $ per RK3399. It’s unclear who could benefit from this compared to any other solution in the same price range. You can have far more power with the same CPU from other vendors for the same price, or way more power using mainstream CPUs in the same form factor. There’s probably a niche market here that I’m not seeing.

> You can have far more power with the same CPU from other vendors for the same price

But not in the same rack space, right? Wrt ‘niche market’ think about the ‘cloud phone’ use case. If your business is review fraud (e.g. 60% of electronics reviews on Amazon being fake) then administering a bunch of centrally manageable Android devices might make a lot of sense compared to handing out several smartphones to the people writing those fake reviews 😉

Exactly!

The company where I work should be niche then, as I’m looking for a “reasonable price” solution to virtualize Android through a native platform. I don’t want x86 to emulate Android; I want Arm to run Android VMs and make them accessible over the local network through adb, and it should be capable of running 80-100 VMs. Administration-wise, that’s a very good thing, as tkaiser said.

I think you can easily pack 72 NanoPI NEO4 boards in such a large enclosure. They will of course not be hot-pluggable. If you absolutely need a SOM, FriendlyElec’s RK3399v2 is only $80, we’re still half way from $10k for 72 boards. I don’t understand why some makers design their own SOMs when others already did so. It comes with a significant low-volume cost which could easily be cut by using more standard stuff.
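The arithmetic behind “half way from $10k”, as a quick sketch using only the figures from this comment and the article’s upper estimate:

```python
SOM_PRICE_USD = 80            # FriendlyElec RK3399v2 price quoted above
MODULE_COUNT = 72
SYSTEM_ESTIMATE_USD = 10_000  # upper end of the article's R2 estimate

som_total = SOM_PRICE_USD * MODULE_COUNT
remaining = SYSTEM_ESTIMATE_USD - som_total
share = som_total / SYSTEM_ESTIMATE_USD

print(f"72 SoMs: ${som_total}")
print(f"Left for chassis, switches, PSUs, BMC: ${remaining}")
print(f"SoMs as share of $10k: {share:.1%}")
```

That leaves a bit over $4,000 of the $10k figure for everything that is not a SoM.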

> pack 72 NanoPI NEO4 boards in such a large enclosure.

To be accompanied by 2 Ethernet switches which need at least one or even two additional rack units. Since I would believe one of the popular use cases for this cluster thing will be mass surveillance (camera stream + AI/NPU), most probably the target audience wants more robust housing than just 72 SBCs flying around in some case. BTW: enclosure depth seems to be optimised for telco/network racks.