Traverse Ten64 is a networking platform designed for 4G/5G gateways, local edge gateways for cloud architectures, IoT gateways, and network-attached storage (NAS) devices for home and office use.

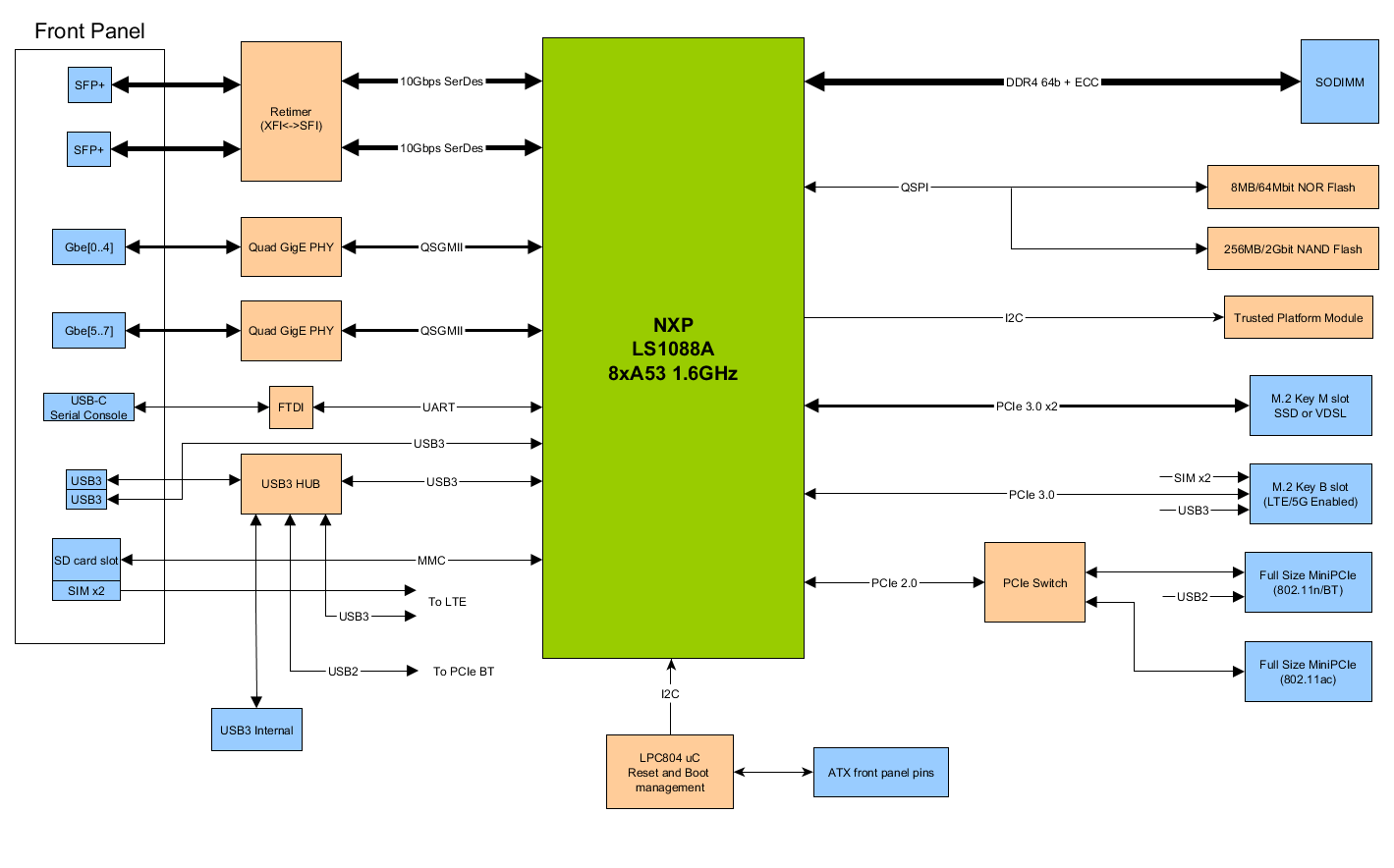

The Ten64 system runs mainline Linux on an NXP Layerscape LS1088A octa-core Cortex-A53 communication processor with ECC memory support. It offers eight Gigabit Ethernet ports, two 10GbE SFP+ cages, and mini PCIe and M.2 expansion sockets.

Traverse Ten64 hardware specifications:

- SoC – NXP QorIQ LS1088 octa-core Cortex-A53 processor @ 1.6 GHz, 64-bit with virtualization, crypto, and IOMMU support

- System Memory – 4GB to 32GB DDR4 SO-DIMMs (including ECC) at 2100 MT/s

- Storage

- 8 MB onboard QSPI NOR flash

- 256 MB onboard NAND flash

- NVMe SSDs via M.2 Key M

- microSD socket multiplexed with SIM2

- Networking

- 8x 1000Base-T Gigabit Ethernet ports (RJ45)

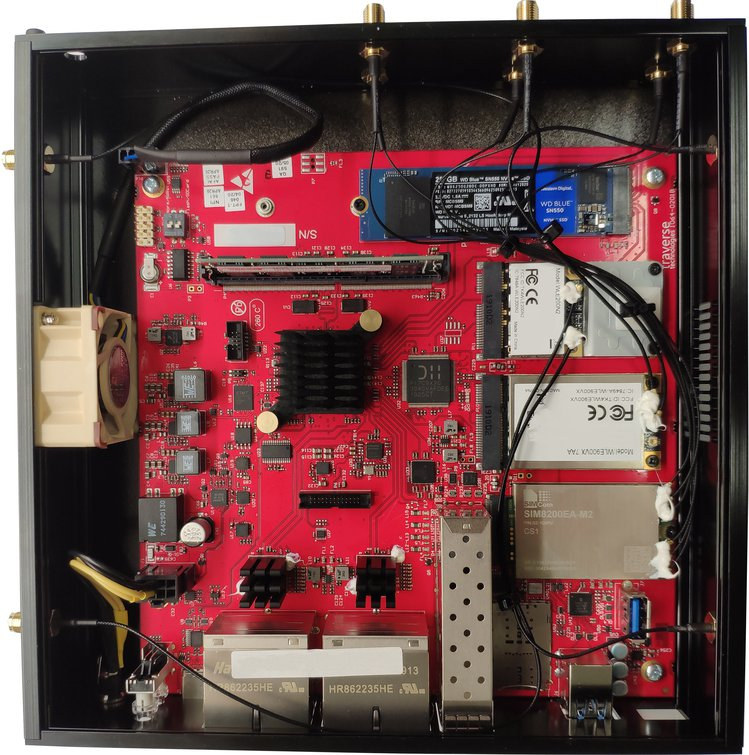

- 2x 10GbE SFP+ cages

- Optional wireless M.2/mPCIe card plus 3-choose-2 nanoSIM/microSD socket and up to 11x SMA connectors for antennas

- USB – 2x USB 3.0 ports, 1x USB 3.0 interface via internal header, USB-C port for serial console

- Expansion

- 1x M.2 Key M (PCIe 3.0×2)

- 2x miniPCIe (PCIe 2.0)

- 1x M.2 Key B (PCIe 3.0×1 + USB 3)

- Power Supply – 12 VDC via 8-pin connector, ATX 12V and 2.5 mm DC adapters available

- Power Consumption – > 20 W (typ.)

- Dimensions

- Board 175 mm x 170 mm (compatible with Mini-ITX)

- Enclosure – 200 mm x 200 mm x 45 mm (approximately 1U high, metal construction, internal cooling fan)
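To put some of those interface figures into perspective, here is a back-of-the-envelope sketch of theoretical peak bandwidth. It assumes a 64-bit DDR4 data bus (ECC bits excluded), PCIe 3.0 at 8 GT/s per lane with 128b/130b encoding, and PCIe 2.0 at 5 GT/s per lane with 8b/10b encoding, with miniPCIe being a single lane. These are textbook peak numbers, not measured Ten64 results.

```python
# Theoretical peak bandwidth for a few Ten64 interfaces (not measurements).
# Assumptions: 64-bit DDR4 data bus (ECC bits excluded);
# PCIe 3.0 = 8 GT/s per lane, 128b/130b; PCIe 2.0 = 5 GT/s per lane, 8b/10b.

def ddr_peak_gbytes(mt_per_s: float, bus_bits: int = 64) -> float:
    """Peak DDR bandwidth in GB/s (10^9 bytes per second)."""
    return mt_per_s * 1e6 * bus_bits / 8 / 1e9

def pcie_gbits(gen: int, lanes: int) -> float:
    """Usable PCIe link rate in Gbit/s after line encoding overhead."""
    rates = {2: (5.0, 8 / 10), 3: (8.0, 128 / 130)}
    gt_per_s, efficiency = rates[gen]
    return gt_per_s * efficiency * lanes

if __name__ == "__main__":
    print(f"DDR4-2100, 64-bit bus: {ddr_peak_gbytes(2100):.1f} GB/s")
    print(f"M.2 Key M (PCIe 3.0 x2): {pcie_gbits(3, 2):.2f} Gbit/s")
    print(f"M.2 Key B (PCIe 3.0 x1): {pcie_gbits(3, 1):.2f} Gbit/s")
    print(f"miniPCIe (PCIe 2.0 x1): {pcie_gbits(2, 1):.2f} Gbit/s")
    print(f"Ethernet total (8x 1G + 2x 10G): {8 * 1 + 2 * 10} Gbit/s")
```

Under these assumptions, DDR4-2100 peaks at about 16.8 GB/s, while the M.2 Key M slot tops out around 15.75 Gbit/s, so an NVMe SSD there is capped at roughly 2 GB/s before any protocol overhead.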

The system runs U-Boot v2019.X+, Linux kernel 5.0+ (mainline), OpenWrt, and standard Linux distributions via EFI, and supports NXP DPAA2 (Data Path Acceleration Architecture Gen2) with all drivers provided. Documentation is available on the Traverse website, where you'll also find firmware images. The source code has been committed to GitLab, but there does not seem to be any active development there.

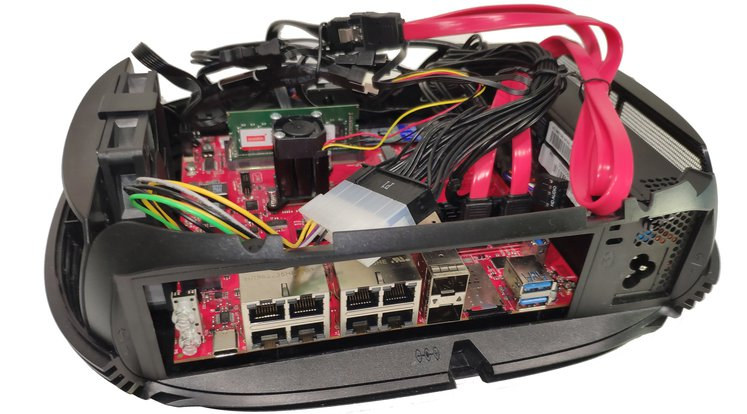

You may have noticed that one of the use cases listed in the introduction is NAS, but with no SATA ports on the board, Ten64 does not seem that well-suited to it. That's why Traverse also offers a NAS DIY Kit with a heatsink and fan, an ATX power adapter, a four-port SATA controller on an M.2 card, and an I/O faceplate. That looks like overkill for a NAS, but some applications may benefit from a NAS with 10 Ethernet interfaces.

Ten64 provides an alternative to platforms like the MacchiatoBin mini-ITX networking board, the Turris Omnia open-source hardware router, or the ClearFog CX LX2K networking board, with a different set of features and price points.

Traverse Ten64 has just launched on Crowd Supply with a $60,000 funding target. The networking platform is offered for $579 and up with the mainboard installed in the metal enclosure with a fan, a 60 W power supply with regional power cord, a USB-C console cable, a recovery microSD card, a SIM eject tool, and a hex key. ECC RAM is available as an option, from $60 for 8GB to $240 for 32GB. The DIY NAS kit is listed for $70, but will only be useful if you purchase the $579 system. Shipping is free to the US, and $40 to the rest of the world. Backers should expect Ten64 to ship in March 2021.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

> 8x 1000Base-T Gigabit Ethernet (ports)

If it had 8x 2.5GbE or 5GbE ports, I would have been interested :(

My thoughts exactly. If they were faster than 1Gb, this might have made a nice core for a home network for the sort of people who read this site.

It's still only 8x A53 at 1.6 GHz, about the same as the NanoPi Fire3. At some point, there are limits to how much traffic the CPU can process. Let's say I'd rather pick 4×2.5 than 8×1 🙂
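The CPU limit mentioned above can be made concrete with a rough per-packet cycle budget for pure software forwarding at line rate. This sketch assumes minimum-size 64-byte frames plus 20 bytes of preamble and inter-frame gap on the wire, and that all eight 1.6 GHz cores do nothing but forward packets; both are simplifications, and real forwarding paths have other overheads.

```python
# Rough per-packet CPU budget for software forwarding of minimum-size frames.
# Optimistic assumption: all 8 Cortex-A53 cores at 1.6 GHz spend every cycle
# on forwarding, with no other work.

WIRE_OVERHEAD_BYTES = 20  # 8-byte preamble/SFD + 12-byte inter-frame gap
MIN_FRAME_BYTES = 64

def line_rate_pps(gbps: float, frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """Maximum frames per second at a given aggregate link speed."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return gbps * 1e9 / bits_per_frame

def cycles_per_packet(gbps: float, cores: int = 8, ghz: float = 1.6) -> float:
    """CPU cycles available per frame, summed over all cores."""
    return cores * ghz * 1e9 / line_rate_pps(gbps)

if __name__ == "__main__":
    for label, gbps in (("8x 1GbE", 8 * 1.0), ("4x 2.5GbE", 4 * 2.5)):
        print(f"{label}: {line_rate_pps(gbps) / 1e6:.2f} Mpps, "
              f"~{cycles_per_packet(gbps):.0f} cycles/packet")
```

With these assumptions, 8x 1GbE leaves roughly 1,075 cycles per packet across all cores and 4x 2.5GbE roughly 860, which is tight for a software path and is why the hardware offload discussed below matters.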

I think it would be OK for my use case. I don't need 2.5Gb full duplex on all ports all the time. I need 2.5Gb over NFS on one or two ports a few times a day, and enough juice for PPPoE and internet routing.

Seems like the new H2 would work for this.

Yeah, my current setup is actually two of the older H2s. The issue is that I'd still need a switch. If this had 2.5Gb ports, I could chuck out the switch, Wi-Fi APs, H2s, etc. and just stick this box on a shelf instead.

This SoC supports full hardware offload for packet forwarding via DPAA2. If you're using their SDK, or DPDK on mainline Linux, you should be able to forward at 10Gbps with minimal CPU load. That being said, neither is supported in mainline OpenWrt, so you'd be stuck with software forwarding for now.

For pure forwarding, sure. But once you want to do more than forwarding (proxying, NFS server etc) that doesn’t count anymore. Network accelerators are only usable in very specific use cases, typically routing and switching. This is perfect when you want to daisy-chain servers and get rid of switches for example.

Is it possible to use the 10GbE SFP+ cages to connect to a 2.5 Gbps switch with some kind of adapter? A quick web search does not return any useful results.

As far as I remember, there are some 10GBase-T modules that support 10 Gb/s speed.

I suppose they can also handle 2.5 Gb/s.

Thanks. I did not use the best terms the first time I searched. Using the terms in your comment, I could find a "SFP+10GBASE-T Transceiver Copper RJ45 Module" on Amazon.

It's around $40, and does not take up much space once inserted in the SFP cage.

This is a passive media converter. Unless IEEE 802.3bz is mentioned, I wouldn't trust its NBase-T capabilities. This thing here, for example, is IEEE 802.3bz capable and in fact not just a media converter but a 2-port switch.

Thanks. I’m now pretty sure the $40 thing will not work.

I found a similar-looking one that does support 802.3bz multi-gigabit Ethernet, but it's close to $500. So there does not seem to be a cost-effective way to connect 10GbE to 2.5GbE.

There are definitely such SFP+ adapters; the problem is that they need to be supported by the device you plug them into. While most fiber-based SFP+ modules supporting the same type of medium should work (unless the vendor ID is blacklisted), copper-based ones advertise a different link mode and are not always recognized as up by the switch. I personally wouldn't invest that much just to test 🙂

The entire ecosystem for 5GbaseT and 2.5GbaseT is a shit show currently, and it's even worse when trying to get them working in an SFP form factor.

We (the telco I work at) have also been trying to find a 10GbaseT SFP+ that works in 2.5GbaseT and 5GbaseT operation. Currently, the only one we've found that works is Methode's DM7052 SFP+, which can run in 2.5GbaseT operation when plugged into another 2.5GbaseT device.

The big caveat is that the host interface will flip from 10G to 2.5G operation when a 2.5GbaseT-capable device is plugged in. Unfortunately, this tends to cause problems, as most SFP+ ports can only run in 1G or 10G operation (2500BaseT, 5000BaseT, 2500BaseX, and 5000BaseX are still, sadly, very exotic and rare).

We’re able to get Methode’s 10G SFP+ working in 2.5GbaseT mode on our Armada 3720 board (1G or 2.5G capable), but only after PHYLINK changes in Linux.
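The mismatch described in the last two paragraphs can be captured in a toy model: the module's host-side speed follows the copper line rate, and the link only comes up if the host port supports that speed. The function name and speed sets are illustrative assumptions, not a real driver or PHYLINK API. The `module_follows_line_rate=False` branch models a hypothetical module that keeps a 10G host side regardless of line rate; whether any particular module behaves that way is not stated above.

```python
# Toy model of the SFP+ host-interface mismatch described above.
# Hypothetical names; this is not a Linux driver or PHYLINK interface.
from __future__ import annotations

def host_link_up(host_speeds_mbps: set[int], copper_speed_mbps: int,
                 module_follows_line_rate: bool = True) -> bool:
    """Return True if the host port supports the module's host-side speed."""
    if module_follows_line_rate:
        # e.g. the module flips its host side to 2500 for a 2.5GBASE-T peer
        host_side = copper_speed_mbps
    else:
        # hypothetical module that always presents a 10G host interface
        host_side = 10_000
    return host_side in host_speeds_mbps

typical_sfp_plus_port = {1000, 10_000}  # "can only run in 1G or 10G operation"
armada_3720_port = {1000, 2500}         # "1G or 2.5G capable"

if __name__ == "__main__":
    print(host_link_up(typical_sfp_plus_port, 2500))  # False
    print(host_link_up(armada_3720_port, 2500))       # True
```

This is why the Armada 3720 board, whose port accepts a 2.5G host interface, could be made to work (with the PHYLINK changes mentioned), while a typical 1G/10G-only SFP+ port would not bring the link up in this scenario.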

Hi, Matt from Traverse here. Yes, you can use a 10G SFP+ to do 2.5/5/10G. I've used the Mikrotik 10G SFP adaptor to connect to the Intel X540-T2 and the QNAP-UC5G1T 2.5/5G USB.

At the moment, there isn't enough competition around 2.5/5/10GBase-T chipsets to offer it at an acceptable price. That said, the 2.5G market is starting to heat up.

> I’ve used the Mikrotik 10G SFP adaptor to connect to the Intel X540-T2 and the QNAP-UC5G1T 2.5/5G USB.

Seems you’re talking about their S+RJ10, right?

ServeTheHome has an SFP+ to 10GbaseT buyer's guide that includes 2.5 and 5 Gbps compatibility:

https://www.servethehome.com/sfp-to-10gbase-t-adapter-module-buyers-guide/

Correct, S+RJ10.

We will have an in-depth post about 10G performance next week on Crowd Supply, but there are some benchmarks here: https://ten64doc.traverse.com.au/network/network-performance/

I'd note that not all A53 implementations are equal: the LS1088 has 2MB of L2 cache (1MB per four-core cluster), while many smaller ones only have 512KB or 1MB.

The DPAA2 hardware is quite good at load balancing packet flows between cores, whereas the inbuilt Ethernet (if any) in ‘media’ SoCs might only support one input/output queue which can only be serviced by a single core.
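The difference between spreading flows across cores and a single queue can be sketched with generic hash-based flow distribution. This is an RSS-style illustration using CRC32 over the 5-tuple, not the hash or key configuration DPAA2 actually uses; the point is only that every packet of a flow lands on the same queue (preserving ordering) while different flows spread over all queues.

```python
# Generic hash-based flow distribution across RX queues (RSS-style sketch).
# CRC32 over the 5-tuple is an illustrative choice, NOT DPAA2's actual hash.
import zlib
from collections import Counter

def queue_for_flow(src_ip, dst_ip, proto, src_port, dst_port, num_queues):
    """Map a flow's 5-tuple to a queue index; same flow -> same queue."""
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % num_queues

def distribute(flows, num_queues):
    """Count how many flows land on each queue."""
    return Counter(queue_for_flow(*flow, num_queues) for flow in flows)

if __name__ == "__main__":
    # 1000 hypothetical TCP flows from one client to one server on port 443
    flows = [("192.168.1.10", "10.0.0.1", 6, 40000 + i, 443) for i in range(1000)]
    print("8 queues:", sorted(distribute(flows, 8).items()))
    print("1 queue: ", sorted(distribute(flows, 1).items()))
```

With one queue, all 1000 flows pile onto a single core; with eight, they are spread across cores, although a single heavy flow still cannot use more than one core under this scheme.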