[Update Sep 3, 2020: The post has been edited to correct Google Coral M.2 power consumption]

If you were to add an M.2 or mPCIe AI accelerator card to a computer or board, you’d mostly have the choice between Google Coral M.2 or mini PCIe cards based on the 4 TOPS Google Edge TPU, or one of AAEON AI Core cards based on Intel Movidius Myriad 2 (100 GOPS) or Myriad X (1 TOPS per chip). There are also some other cards like Kneron 520 powered M.2 or mPCIe cards, but I believe the Intel and Google cards are the most commonly used.

If you ever need more performance, you’d have to connect cards with multiple Edge TPU or Movidius accelerators, or use one M.2 or mini PCIe card equipped with the Hailo-8 NPU delivering a whopping 26 TOPS on a single chip.

Hailo-8 M.2 accelerator card key features and specifications:

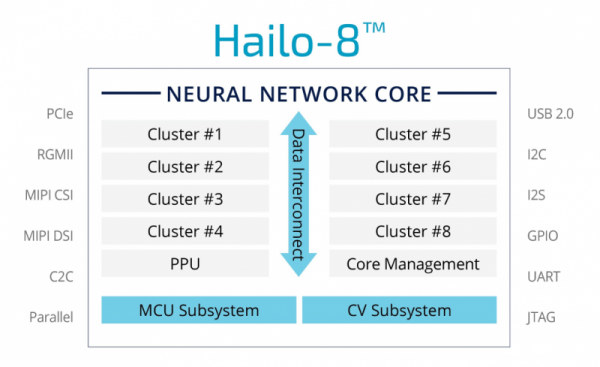

- AI Processor – 26 TOPS Hailo-8 with 3 TOPS/W efficiency, using a proprietary novel structure-driven Data Flow architecture instead of the usual Von Neumann architecture

- Host interface – PCIe Gen-3.0, 4-lanes

- Dimensions – 22 x 42 mm with breakable extensions to 22 x 60 mm and 22 x 80 mm (M.2 M-key form factor)

We don’t have specs for the mini PCIe card because it will launch a little later, but besides the form factor, it should offer the same features. The card supports Linux, and the company is working on Windows compatibility. Despite the different architecture, the card supports popular frameworks like TensorFlow and ONNX.

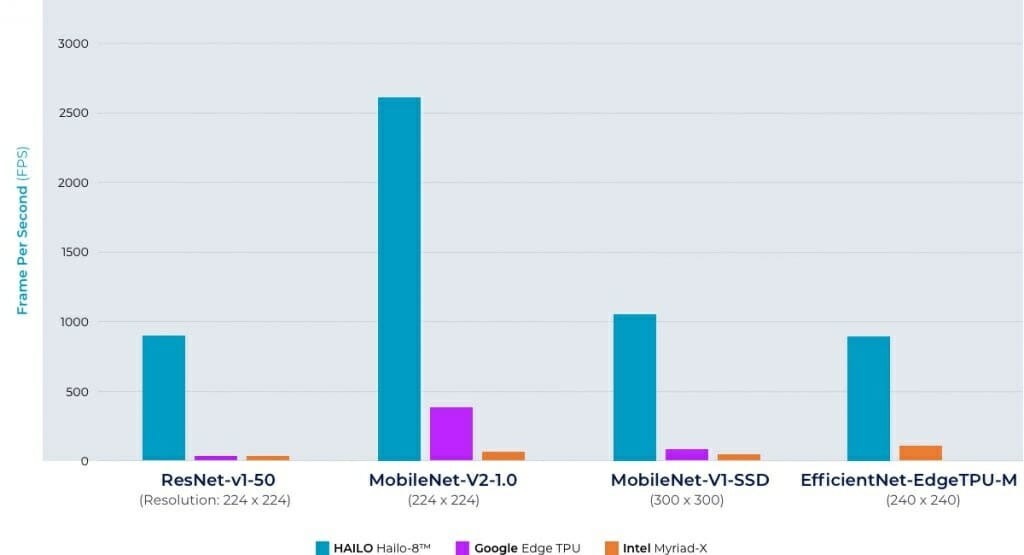

Looking at the performance chart released by Israeli startup Hailo, which pits Hailo-8 against Google Edge TPU and Intel Myriad X using popular ResNet and MobileNet models, I might as well have titled this article “Hailo-8 M.2 card mops the floor with Google Edge TPU and Intel Movidius Myriad X AI accelerators”.

The amount of AI processing power packed in such a tiny M.2 card is impressive, and it’s made possible by the higher 3 TOPS per watt efficiency compared to the typical 0.5 Watt per TOPS (or 2 TOPS per Watt) advertised by competitors. So a 26 TOPS Hailo-8 card will consume around 8.7 W under a theoretical maximum load, while a 4 TOPS Google Coral M.2 card should consume about 2 W. [Update 2: After a conference call with Hailo, the company disputes Google efficiency numbers. You can find more details in a follow-up article discussing Hailo-8 AI processor and AI benchmarks.]
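The power estimates above are simply peak throughput divided by advertised efficiency. Here is a minimal Python sketch of that arithmetic, using only the TOPS and TOPS/W figures quoted in this post. The function and dictionary names are made up for illustration, and the results are theoretical maximums derived from vendor claims, not measurements.

```python
def power_at_full_load(tops: float, tops_per_watt: float) -> float:
    """Theoretical power draw (watts) at peak throughput.

    Assumes the advertised efficiency holds all the way up to peak load,
    which is a simplification.
    """
    return tops / tops_per_watt


# (peak TOPS, advertised TOPS per watt) as quoted in the article
accelerators = {
    "Hailo-8 M.2": (26.0, 3.0),
    "Google Coral M.2": (4.0, 2.0),  # 0.5 W per TOPS is 2 TOPS per W
}

for name, (tops, efficiency) in accelerators.items():
    watts = power_at_full_load(tops, efficiency)
    print(f"{name}: {tops:g} TOPS at {efficiency:g} TOPS/W is about {watts:.2f} W")
```

Real-world consumption depends on the model being run and how well it uses the chip, so these numbers are best read as upper bounds implied by the marketing figures.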

As noted by LinuxGizmos, who alerted us to the new cards, Hailo-8 was previously seen integrated into Foxconn’s fanless “BOXiedge” AI edge server, powered by a SynQuacer SC2A11 SoC with 24 Cortex-A53 cores and capable of analyzing up to 20 streaming camera feeds in real-time.

More details about Hailo-8 M.2 card may be found on the product page, as well as the announcement mentioning the upcoming mini PCIe card.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

I don’t get it.

If the Hailo-8 is so powerful why would anyone still use Google Edge TPUs?

We don’t know the price of Hailo-8. Also, if an application works just fine with a 4 TOPS chip, why get a 26 TOPS chip? It’s possible many applications don’t need that much power, so why pay more?

I know I’ve applications that will leverage the 4. I also know I’ve got applications that will leverage the 26. Thing is? If you want that, a Xavier is in your future for less TDP. Especially if you’re needing a bit less TOPS and all. The NX is the same price point as the TX2 right now and weighs in slightly less than this for a total TDP of about 10-12 watts total for that. That’s SOC total. This thing runs HOT and if I’ve got a TDP of 10-ish for my SOC and add that? Riight. I’ll believe it when I see it and the price point is something sane. It’d need to be a bit less than $100 to be comparable and you can daisy chain Edge TPUs either via USB or PCIe to scale.

You can actually buy Google Edge TPUs at a known, reasonable price. They come in a USB3 stick form you can use in anything, including a Raspberry Pi. And (as of recently) the TensorFlow driver is open-source. It’s as hobbyist-friendly as it gets. (Full disclosure: I work for Google. Not on anything ML-related.)

If Hailo-8 can match all that, I’ll buy a pile of them. But I’m not optimistic. Announcements like this are so frustrating—the hardware looks so promising but it doesn’t seem to be really available to hobbyists. The Gyrfalcon Lightspeeur 2803S is the same way.

We won’t get into the fact that you can get PCIe edge ones as well…

~25 USD for the mini-PCIe, M.2 models.

Programming info is proprietary like NVidia’s GPU, but that’s a different discussion… And yes, the drivers for all of it are open, as are the APIs to hook drivers to code.

If you guys didn’t insist in that Blaze clone or made it more Yocto friendly, I’d have already coupled it to Yocto and had a PoC where there’s an Edge TPU coupled to a Jetson Nano (The only PCIe M.2 providing SoC I have in hand right now…).

Not true according to the Coral TPU website:

“The on-board Edge TPU coprocessor is capable of performing 4 trillion operations (tera-operations) per second (TOPS), using 0.5 watts for each TOPS (2 TOPS per watt).”

Oops, I read that wrong yesterday. I read 0.5 TOPS per watt, instead of 0.5 Watts per TOPS.

You can see what they did — they put 8 of the existing 3 TOPS designs onto a single die and then added interconnect. Maybe they didn’t even do that, this could be eight 3TOPS dies mounted on a substrate.

You can buy the RK1808 which has a 3TOPS unit for $10. So you could clone this by using eight RK1808 chips/dies.

Someone want to clone this for $100?

Is it possible to fit 8 RK1808 chips on a small M.2 2242 card?

Only if you repackage the dies, which is probably not worth the effort.

This chip is pretty similar to the Jetson NX. You can buy a Jetson NX dev board for $400. Jetson is going to have much more mature dev tools.

Also, RTX3080 is 238TOPS for $700. But it needs 320W power.

The problem’s always one of what you’re trying to do. If it’s mobile, you can’t use that RTX3080 unless you have a HUGE honkin’ supply for power.

24-ish TOPS at 10 Watts for something vehicle mounted where you can afford that level of budget (there are a LOT of things like that…): it’s a bit of a game changer. It should be noted that the NX has two NVDLA type cores to get its speed, so one could get a similar scale with something like four Edge TPUs off of a PCIe switch, etc.

I’m now in contact with somebody at Hailo. There may be an interview, so if anybody has specific questions, please go ahead.

As usual: availability of evaluation boards and single item / volume pricing. That’s the business part. If there is nothing to hear on that, it’s the usual vaporware, and we stick to Jetson / Google offerings.

If that’s not unobtanium, any data on the flexibility of the compiler for non-CV workloads (running transformers / time series analysis workloads) would be great to know.

TIA

Does it support Darknet Yolo? Which version? Both v3 and v4?

What is the performance of the 8-bit quantized Yolo network in terms of both detection quality and frames per second?

Thank you

So this is a solution for running pre-trained models, where the weights are quantized to 8-bit. Do they have results on how much the ‘recognition’ performance drops compared to non-quantized fp32 NNs?

Do they plan on something able to accelerate training with fp32 precision?

Training is not possible on Hailo-8, it’s only for AI inference. I haven’t seen any AI edge solutions work with floating-point data. AFAIK, all use 4-bit or 8-bit data.

I bought a Coral M.2 E-key module, but I’m struggling to find an adapter for a standard PCI Express slot. Do you have any suggestions?

That one may work: https://amzn.to/38sH7jh

PCIe card for Key-E M.2 module.

Since the latest Dual Edge TPU supports two instances of PCIe x1, this might be a great solution.

https://github.com/magic-blue-smoke/Dual-Edge-TPU-Adapter/issues/4