Last June, I reviewed a Ryzen Embedded SBC running Windows 10, and the USB hard drive I normally use for reviews had all sorts of problems, including very slow speeds and/or stalled transfers, but no error messages.

Last week, I tried again after installing Ubuntu 20.04 on the same Ryzen Embedded SBC, and the USB hard drive had trouble again. So I just assumed there was some hardware incompatibility between the SBC and the drive, and that there might not be a fix or workaround.

Sometimes it’s indeed a hardware issue, with the drive developing too many bad blocks. If that’s the case and the drive is still under warranty, you can return it and get a new (or refurbished) drive for free. But this drive still worked fine with my laptop, at around 100MB/s.

So I was out of ideas until numero53 commented that he had similar problems with many USB-SATA adapters. The trick was to disable UAS (USB Attached SCSI), which, in theory, should improve performance. The problem has been reported by Raspberry Pi and ODROID users, who normally get error messages like these:

```
Jun 16 04:59:18 feynman kernel: sd 1:0:0:0: [sdb] tag#29 uas_eh_abort_handler 0 uas-tag 30 inflight: CMD IN
Jun 16 04:59:18 feynman kernel: sd 1:0:0:0: [sdb] tag#29 CDB: opcode=0x88 88 00 00 00 00 01 5c 00 13 f8 00 00 00 08 00 00
Jun 16 04:59:18 feynman kernel: sd 1:0:0:0: [sdb] tag#28 uas_eh_abort_handler 0 uas-tag 29 inflight: CMD IN
Jun 16 04:59:18 feynman kernel: sd 1:0:0:0: [sdb] tag#28 CDB: opcode=0x88 88 00 00 00 00 01 5c 00 13 f0 00 00 00 08 00 00
Jun 16 04:59:18 feynman kernel: sd 1:0:0:0: [sdb] tag#27 uas_eh_abort_handler 0 uas-tag 28 inflight: CMD IN
Jun 16 04:59:18 feynman kernel: sd 1:0:0:0: [sdb] tag#27 CDB: opcode=0x88 88 00 00 00 00 01 5c 00 13 e8 00 00 00 08 00 00
```
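Abort messages like these are easy to miss in a long log. The sketch below counts `uas_eh_abort_handler` lines per disk in kernel log text read from standard input. It assumes a POSIX shell with grep, sed, sort and awk. `uas_abort_summary` is a hypothetical helper name, not an existing tool.

```shell
# Hypothetical helper: summarize UAS abort-handler events per SCSI disk.
# Reads kernel log text on stdin and prints "<disk> <count>" lines.
uas_abort_summary() {
    # Keep only abort-handler lines, extract the "[sdX]" disk name,
    # then count occurrences per disk.
    grep 'uas_eh_abort_handler' \
        | sed -n 's/.*\[\(sd[a-z]*\)\].*/\1/p' \
        | sort | uniq -c \
        | awk '{ print $2, $1 }'
}
```

On a live system, you would pipe `dmesg` into it. For the six log lines above, it should print `sdb 3`. An empty output means no aborts were logged.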

I did not have any such messages, but I still tried to disable UAS on this particular drive, without much conviction that it would work. First, we need to find the USB VID:PID of the adapter:

```
lsusb | grep Seagate
Bus 002 Device 004: ID 0bc2:2312 Seagate RSS LLC
```
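If several adapters are affected, you can build the quirks entries from the lsusb output instead of typing them by hand. The sketch below assumes the usual `ID vvvv:pppp` field in lsusb lines. It appends the `u` flag, which tells usb-storage to ignore UAS for that device, and joins multiple entries with commas, the separator the usb-storage quirks parameter expects. `uas_quirks_from_lsusb` is a hypothetical helper.

```shell
# Hypothetical helper: turn lsusb lines such as
#   "Bus 002 Device 004: ID 0bc2:2312 Seagate RSS LLC"
# into a comma-separated usb-storage quirks list with the "u" (ignore UAS) flag.
uas_quirks_from_lsusb() {
    # Find the field following "ID" on each line and append ":u".
    awk '{ for (i = 1; i < NF; i++) if ($i == "ID") { print $(i + 1) ":u"; next } }' \
        | paste -s -d ',' -
}
```

`lsusb | grep Seagate | uas_quirks_from_lsusb` should print `0bc2:2312:u` for the drive above. Make sure the grep pattern only matches the drives you want to change, since every matching line becomes a quirk.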

Now we can create a file that sets the "u" (ignore UAS) quirk for this VID:PID, so that the drive/adapter is handled by the usb-storage driver instead of UAS. We then rebuild the initramfs:

```
echo options usb-storage quirks=0bc2:2312:u | sudo tee /etc/modprobe.d/blacklist_uas_0bc2.conf
sudo update-initramfs -u
```

After a reboot, we can see that UAS is blacklisted for the drive:

```
dmesg | grep -i uas
[    0.425573] squashfs: version 4.0 (2009/01/31) Phillip Lougher
[    1.975254] usbcore: registered new interface driver uas
[    2.271540] usb 2-2.4: UAS is blacklisted for this device, using usb-storage instead
```
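Another way to confirm which driver ended up handling the drive is `lsusb -t`, which prints a `Driver=` field for each USB interface (`uas` or `usb-storage` for disks). The sketch below extracts that field for mass storage interfaces. It assumes the `Class=Mass Storage, Driver=...` layout of current usbutils output, which may differ between versions. `mass_storage_drivers` is a hypothetical helper.

```shell
# Hypothetical helper: print the driver bound to each mass storage
# interface listed in `lsusb -t` output read from stdin.
mass_storage_drivers() {
    # Keep mass storage interface lines, then capture the text after
    # "Driver=" up to the next comma.
    grep 'Class=Mass Storage' \
        | sed -n 's/.*Driver=\([^,]*\).*/\1/p'
}
```

`lsusb -t | mass_storage_drivers` should print `usb-storage` once the quirk is active, and `uas` without it.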

Let’s see if that fixed my issue by running the iozone storage benchmark:

```
iozone -e -I -a -s 1000M -r 1024k -r 16384k -i 0 -i 2
                                                              random    random     bkwd    record    stride
              kB  reclen    write  rewrite    read    reread    read     write     read   rewrite      read   fwrite frewrite    fread  freread
         1024000    1024    90423    91456                           2724687    78287
         1024000   16384    91281    98600                           1916998    92524

iozone test complete.
```

It could write a 1GB file at just over 90MB/s, which is pretty much normal for this drive. Issue fixed! The ~2GB/s random read numbers are clearly influenced by the cache…
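iozone reports throughput in kBytes/sec, with 1 kB = 1024 bytes (note how `-s 1000M` shows up as 1024000 kB). A small awk filter can convert the result rows to MiB/s. The sketch below assumes the six-column rows produced by `-i 0 -i 2`: file size, record length, write, rewrite, random read and random write. `iozone_to_mib` is a hypothetical helper.

```shell
# Hypothetical helper: convert iozone "-i 0 -i 2" result rows from kB/s to MiB/s.
iozone_to_mib() {
    awk '
        # Result rows start with two integers (file size and record length in kB)
        # and, for "-i 0 -i 2", contain exactly six fields.
        $1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ && NF == 6 {
            printf "reclen=%sk write=%.1f rewrite=%.1f randread=%.1f randwrite=%.1f\n",
                $2, $3 / 1024, $4 / 1024, $5 / 1024, $6 / 1024
        }'
}
```

For the output above, it should print `reclen=1024k write=88.3 rewrite=89.3 randread=2660.8 randwrite=76.5` and `reclen=16384k write=89.1 rewrite=96.3 randread=1872.1 randwrite=90.4`. Random reads at over 1800 MiB/s are far beyond what a USB hard drive can deliver, which points to the cache.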

It’s apparently quite a common problem with Seagate (VID: 0x0bc2) and Western Digital (VID: 0x1058) USB drives, and a few years ago Thomas Kaiser simply disabled UAS for all such drives by default in Armbian.

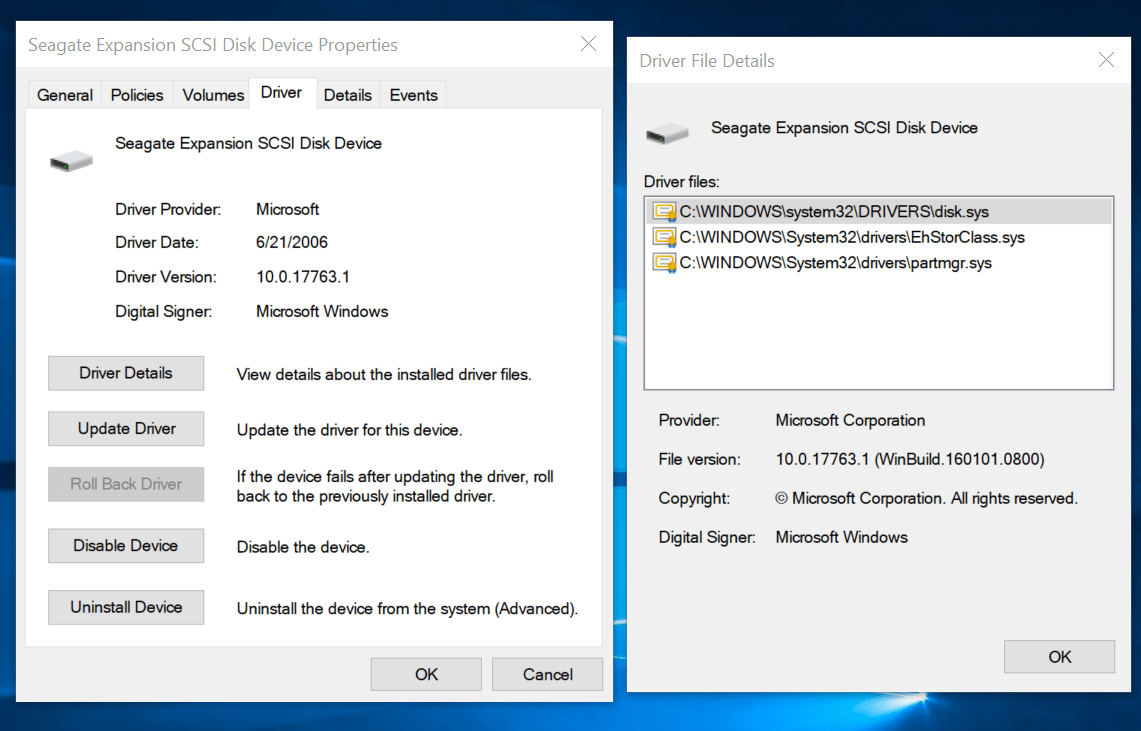

OK, so we’ve found a workaround in Linux/Ubuntu. But what about Windows 10, since I had the same problem there? There’s no obvious solution that I could find. There’s a uaspstor.sys file in the %WinDir%\System32\drivers folder, which is the USB Attached SCSI (UAS) driver for Windows 10, but it’s not used directly by my drive.

I suppose it might be possible to move or rename driver files until the issue is solved. But that’s not ideal, since other drives that actually work properly with UAS would also be affected. I looked for a way to blacklist UAS for specific drives in Windows 10, like we did in Linux, but there doesn’t seem to be one. If you do have a workaround, please share.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


> The ~200MB/s read numbers must be influenced by the cache…

In fact it’s 1.9GB/s (1916998 kB/s), most probably because your filesystem is exFAT handled by FUSE. All FUSE filesystems avoid direct I/O, so filesystem benchmarks usually show how fast the OS can read from the filesystem cache. The following call shows more realistic numbers:

I ran the test on an NTFS partition, but if I remember correctly, it also avoids direct I/O.

On Odroid H2, N2, N2+ and C4, using:

iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2

In most cases, I see UAS being slower than BOT (Bulk-Only Transport) for record lengths of 16k, 512k and 1024k, with both SSDs and HDDs, on Ubuntu 18.04 or 20.04.

I started running these tests systematically when I discovered that a cassandra-stress session ran about 5% faster with BOT than with UAS. So this is not specific to the iozone methodology.

So while there are plenty of cases where UAS provides higher numbers, there are also plenty of cases where it does not. I did not find a specific pattern allowing one to predict what the performance will be prior to testing.

Understanding why is beyond my skills.

CONCLUSION: The ubiquitous “wisdom” on the Net that you should use UAS because it is “faster” is not accurate. Using USB disks with SBCs is quite common, so my suggestion is to test both UAS and BOT with the workload the SBC will run most in production, and choose accordingly.

That’s where I am at this point. Suggestions welcomed.

> I see in most cases UAS to be slower than BOT

With a wide variety of USB-to-SATA bridges, or just a few, or even only one?

(1) USB SATA Bridge from Hardkernel: Bus 002 Device 003: ID 152d:0578 JMicron Technology Corp. / JMicron USA Technology Corp.

(2) USB SATA Bridge from NexStar external enclosure: Bus 002 Device 004: ID 174c:1351 ASMedia Technology Inc. USB3.0 Hub

Longer answer (could no longer edit the previous post)

If you know of external enclosures with a chipset that doesn’t show this behavior, I’m all ears and ready to buy one or more for testing.

I tested two SSDs and two HDDs:

As for the SSDs, I know they are not the best of the crop, but I only spend extra $$$ on Samsung Pro drives for my PCs.

The only case where I saw UAS dramatically leaving BOT in the dust:

was with an Odroid C4 and an HDD. The same hardware with an SSD returned:

What was particularly vexing is that on an Odroid H2+ the behavior was reversed. Here is the SSD:

Here is the HDD:

Methodology:

1) For each test, I ran iozone 3 times with UAS and 3 times with BOT, then computed the average for each mode and compared them.

2) I use empty HDDs so that inner vs. outer tracks are not part of the equation.

3) I do not control what the OS does (e.g. CRON) during the tests.

To minimize a possible effect from (3), I might redo the spreadsheet using the maximum of each set of 3 results instead of the average. I don’t know how that will change the results, though.
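Once each run’s result for a given record length is on its own line, comparing the mean with the maximum is straightforward. The reasoning behind using the maximum is that background activity can only slow a run down, so the best run is arguably the closest to an undisturbed one. In the sketch below, `run_stats` is a hypothetical helper, and values are assumed to be in iozone’s kB/s.

```shell
# Hypothetical helper: print mean, maximum and count of numeric values
# read one per line from stdin (e.g. the write column of repeated runs).
run_stats() {
    awk '
        { sum += $1; if (NR == 1 || $1 > max) max = $1 }
        END { if (NR > 0) printf "mean=%.1f max=%.1f n=%d\n", sum / NR, max, NR }'
}
```

Feed it one column from the three UAS runs, then the same column from the three BOT runs, and compare both statistics.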

As I wrote earlier, I cannot find a pattern, so I have to run the software that will represent 90% of the production usage with UAS or BOT to find out which one to use.

I’m not talking about your results (too hard to read without proper formatting, and without access to the raw data usually not worth the effort anyway) but about methodology.

If you don’t control what’s going on in the background you’re just generating numbers without meaning. I always run at least sbc-bench -m (monitoring mode) in parallel when doing any sort of benchmarking and afterwards I always check dmesg output for anomalies (like e.g. bus resets or similar stuff).
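The dmesg check can be scripted around a benchmark run. The sketch below compares a kernel log snapshot taken before the run with one taken after. It prints only the new lines that match a few assumed trouble patterns: resets, UAS aborts and I/O errors. Because it relies on line counts, it assumes the kernel ring buffer did not wrap during the run. `new_kernel_anomalies` is a hypothetical helper.

```shell
# Hypothetical helper: $1 = kernel log captured before the benchmark,
# $2 = kernel log captured after it. Prints suspicious new lines only.
new_kernel_anomalies() (
    # Number of lines already present before the benchmark started.
    before_lines=$(wc -l < "$1")
    # Look only at lines appended since then, filtered by trouble patterns.
    tail -n "+$((before_lines + 1))" "$2" \
        | grep -E -i 'reset|uas_eh_abort_handler|I/O error' || true
)
```

For example, run `dmesg > /tmp/dmesg.before`, then the benchmark, then `dmesg > /tmp/dmesg.after`, and finally `new_kernel_anomalies /tmp/dmesg.before /tmp/dmesg.after`. An empty output only means none of these patterns appeared.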

Cheap consumer SSDs in particular implement write caches, and once this capacity is exceeded, they get dog slow (I measured below 60 MB/s with cheap Intel and Crucial consumer SSDs). So if the benchmark setup does not take this into account, it’s just generating worthless data. If you run 3 times with 5 different reclens and 100MB each, that’s 1.5GB of data written to the SSD in a batch. I own several consumer SSDs with write caches smaller than that.

Unpredictable SSD write cache behavior is one potential bottleneck, USB issues are another, and host performance is yet another. What do you end up benchmarking if you add a bunch of potential bottlenecks to a setup?

BOT mode is less efficient than UAS and as such generates higher CPU load. So if you benchmark BOT and UAS on a system with the ondemand cpufreq governor, the CPU cores might be clocked at a rather low speed while testing UAS but sit at maximum cpufreq while testing BOT. This will result in meaningless numbers suggesting that BOT is faster than UAS.

For a proper test setup, you need to eliminate as many potential bottlenecks as possible, and you need to verify that as well. That means switching to the performance cpufreq governor, using a drive that is never the bottleneck (an SSD with sustained write and read performance above 500 MB/s), and checking the environment before and after the execution.
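You can verify the governor part of that checklist before each run. The sketch below assumes the standard cpufreq sysfs layout (`/sys/devices/system/cpu/cpuN/cpufreq/scaling_governor`) and lists every core not using the performance governor. `non_performance_cpus` is a hypothetical helper, and it accepts an alternative root directory as an optional argument.

```shell
# Hypothetical helper: list CPUs whose cpufreq governor is not "performance".
# $1 (optional) = sysfs CPU directory, default /sys/devices/system/cpu.
non_performance_cpus() (
    root=${1:-/sys/devices/system/cpu}
    for gov in "$root"/cpu[0-9]*/cpufreq/scaling_governor; do
        # Skip if the glob matched nothing or the file is unreadable.
        [ -r "$gov" ] || continue
        current=$(cat "$gov")
        if [ "$current" != "performance" ]; then
            cpu=${gov#"$root"/}
            # Print "cpuN <governor>".
            echo "${cpu%%/*} $current"
        fi
    done
)
```

An empty output means all cores use the performance governor. On most systems, `echo performance | sudo tee /sys/devices/system/cpu/cpu*/cpufreq/scaling_governor` switches them.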

If you click on Read More, the formatting is clear enough.

I understand what you mean… BUT I’m not trying to reach a state of pure synthetic benchmarking to compare X vs. Y. I’d rather see which mode is best in a regular production environment.

If during a synthetic benchmark X is better than Y, fine. But if this is never going to happen in production, all the synthetic benchmarks of the world are useless to me.

The reason I started to test UAS vs BOT is because my DB app was running faster with BOT compared to UAS.

To conclude, I redesigned the tests, repeating each one six times and eliminating outliers. The pattern of UAS being slower than BOT is confirmed in “my” production environment.
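The comment doesn’t say how the outliers were eliminated. One common approach, shown here only as an assumption, is a trimmed mean: sort the six results, drop the lowest and the highest, and average the rest. `trimmed_mean` is a hypothetical helper.

```shell
# Hypothetical helper: trimmed mean of numbers read one per line from stdin,
# dropping the single lowest and single highest value.
trimmed_mean() {
    sort -n | awk '
        { v[NR] = $1 }
        END {
            # Need at least three values to have anything left after trimming.
            if (NR < 3) exit 1
            for (i = 2; i < NR; i++) sum += v[i]
            printf "%.1f\n", sum / (NR - 2)
        }'
}
```

It exits with status 1 if given fewer than three values, so a missing run doesn’t silently produce a misleading average.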

Disassemble the disk and check out what chip is used?

Older Seagate USB3 disks use an ASMedia ASM1053, and since the end of 2014, an ASM1153 or a JMicron JMS577. But regardless of the chip, the firmware is the problem, since it’s never ASMedia’s or JMicron’s standard firmware.

Defective by Seagate.

I read this was a faulty implementation of SATA over USB by Seagate, but my memory is bad.

This is a super annoying problem, and disabling UAS didn’t fix it for the Seagate drives I currently need to access.

Had to buy a damn laptop for them.

Thank you for this article – it helped me to get my ADATA SE800 work on my AMD ThinkPad T14s.

I think you’re right that it isn’t possible to just blacklist a specific drive on Windows.

Replacing uaspstor.sys with usbstor.sys (located in C:\Windows\System32\Drivers) solved the issue for me.

This removes UASP support for all devices, but for me it doesn’t really matter, because I rarely use Windows and I still get decent read speeds without UASP (around 250MB/s when copying a video file to my laptop).

Still, it’s weird that it works with UASP on both my desktop and my old ThinkPad X260, though both are powered by Intel CPUs.