SolidRun's CEx7-LX2160A COM Express module, built around the NXP LX2160A 16-core Arm Cortex-A72 processor, has already been used in the company’s Janux GS31 Edge AI server in combination with several Gyrfalcon AI accelerators. Now another company, Bamboo Systems, has launched its own servers based on up to eight CEx7-LX2160A modules, providing 128 Arm Cortex-A72 cores, support for up to 512GB DDR4 ECC memory, up to 64TB NVMe SSD storage, and a maximum of 160Gb/s network bandwidth in a single rack unit.

Bamboo Systems B1000N Server specifications:

- B1004N – 1 Blade System

- B1008N – 2 Blade System

- N series Blade with 4x compute nodes each (i.e. 4x CEx7 LX2160A COM Express modules)

- Compute Node – NXP LX2160A 16-core Cortex-A72 processor for a total of 64 cores per blade.

- Memory – Up to 64GB ECC DDR4 per compute node or 256GB per blade.

- Storage – 1x 2.5” NVMe SSD PCIe up to 8TB per compute node, or 32TB per blade

- Network

  - 16-port 10/40Gb Ethernet non-blocking Layer 3 switch per blade

  - Uplink – 2x 10/40Gb QSFP ports per blade

- Misc – Six dual counter-rotating fans

- Power supply – 1x or 2x 1300W AC/DC PFC 48V DC power supplies

- Dimensions – 778 x 483 x 43 mm (1U 19″ rack – Bamboo B1000 chassis)
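The headline figures in the introduction follow directly from the per-node and per-blade numbers listed above. Here is a minimal Python sketch of that arithmetic. The `Blade` class and `system_totals` function are names made up for illustration, and the sketch assumes the 160Gb/s figure refers to the aggregate QSFP uplink bandwidth at 40Gb/s per port, which the specifications do not state explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blade:
    """Per-blade maximums taken from the specification list (illustrative model)."""
    nodes: int = 4                 # CEx7-LX2160A modules per blade
    cores_per_node: int = 16       # Cortex-A72 cores per LX2160A
    max_ram_gb_per_node: int = 64  # ECC DDR4 per compute node
    max_ssd_tb_per_node: int = 8   # one 2.5" NVMe SSD per compute node
    uplinks: int = 2               # QSFP uplink ports per blade
    uplink_gbps: int = 40          # assumption: each uplink running at 40Gb/s


def system_totals(blades: int, blade: Blade = Blade()) -> dict:
    """Aggregate the per-blade maximums for a chassis with `blades` blades."""
    nodes = blades * blade.nodes
    return {
        "nodes": nodes,
        "cores": nodes * blade.cores_per_node,
        "ram_gb": nodes * blade.max_ram_gb_per_node,
        "ssd_tb": nodes * blade.max_ssd_tb_per_node,
        "uplink_gbps": blades * blade.uplinks * blade.uplink_gbps,
    }


if __name__ == "__main__":
    for name, blades in (("B1004N", 1), ("B1008N", 2)):
        print(name, system_totals(blades))
```

With two blades, this gives 8 nodes, 128 cores, 512GB RAM, 64TB of SSD storage, and 160Gb/s of uplink bandwidth, matching the B1008N numbers quoted at the top of the article.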

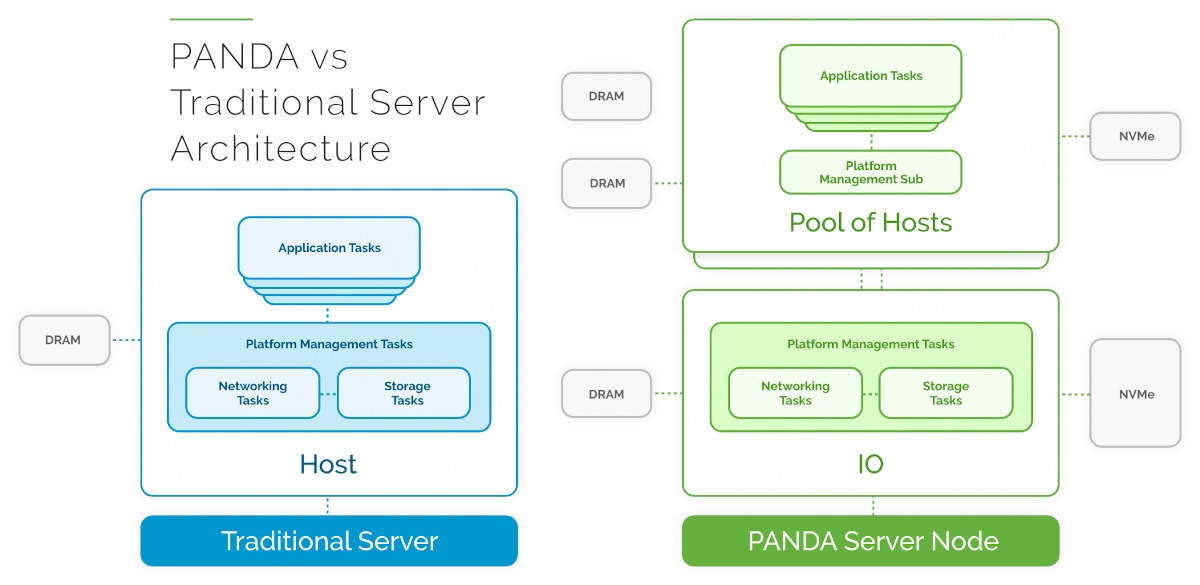

The Bamboo B1008N is more like eight Linux servers in a box than a single 128-core server. All eight servers are connected through the Bamboo PANDA (Parallel Arm Node Designed Architecture) system architecture, “utilizing embedded systems methodologies, to maximize compute throughput for modern microservices-based workloads while using as little power as possible and generating as little heat as possible”.

The Bamboo Pandamonium System is a management module with a UI and REST API used for configuration and control, for example, to turn off individual nodes to save power when they are not needed. The Bamboo B1000N server is especially suited to tasks such as Kubernetes, edge computing, AI/ML simulation, and Platform-as-a-Service (PaaS).

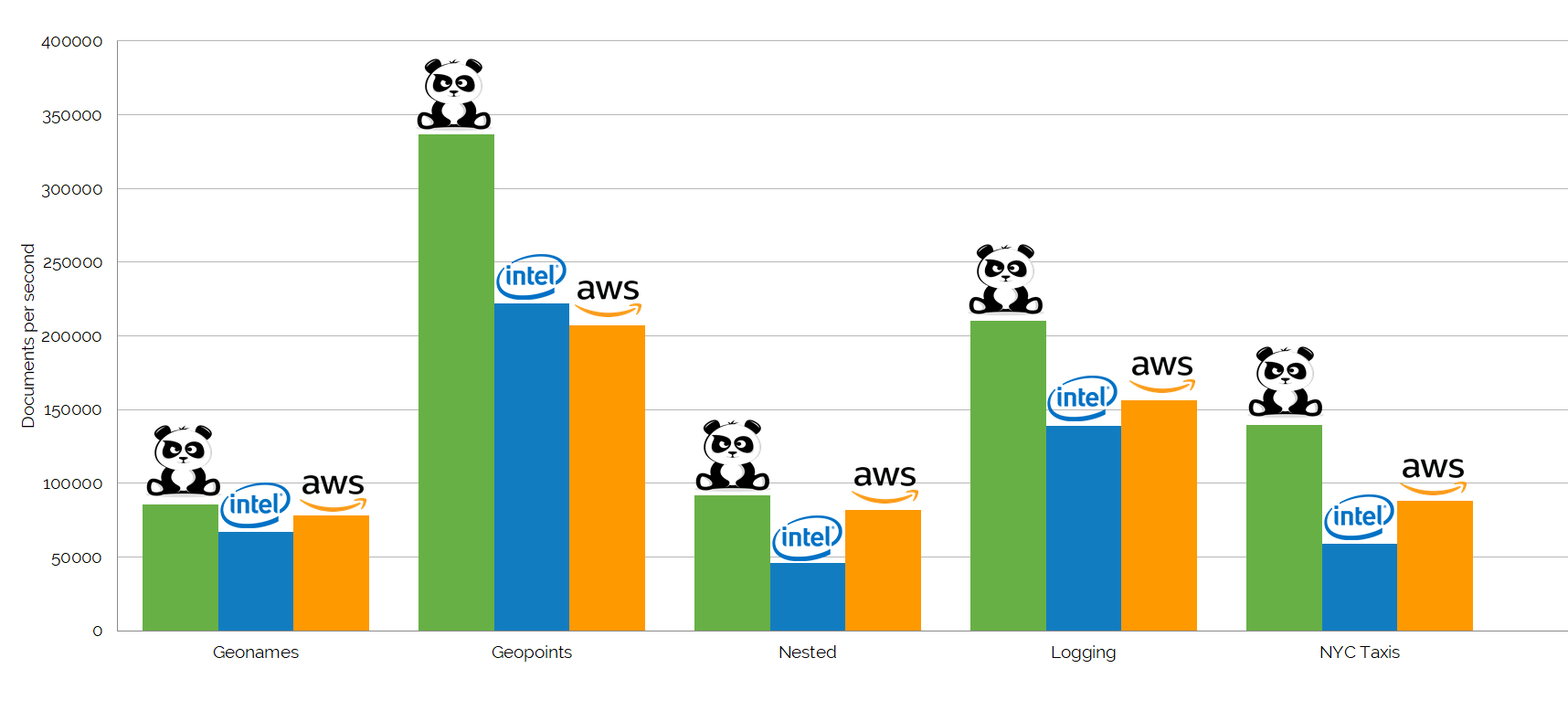

The company compared a B1008N server fitted with 64GB RAM and 32TB SSD against a server with three Xeon E3-1246v3 processors @ 3.5GHz and 32GB RAM, and against two AWS m4.2xlarge instances (8x Intel Xeon vCPU @ 2.3 GHz, 32GB RAM), using the industry-standard Elasticsearch benchmark.

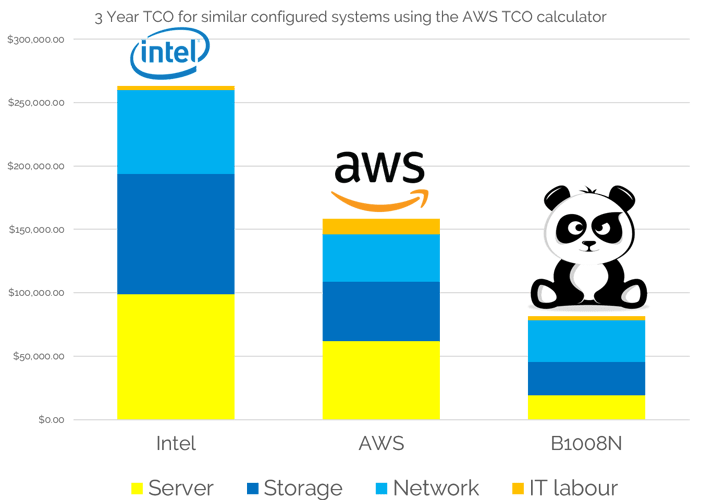

The Bamboo Arm server’s performance is clearly competitive with those Intel solutions, but the real advantages are the lower acquisition cost (50% cheaper), the much lower energy consumption (75% lower), and the much more compact design, which saves up to 80% of the rack space. This shows in the total cost of ownership calculated over a period of three years using the AWS TCO calculator.

The B1008N costs about $80,000 to operate over 3 years, against around $155,000 for AWS and over $250,000 for the Intel Xeon servers. The server costs are quite straightforward, as they are the one-time cost of the hardware or the AWS subscriptions over three years. Bamboo Systems explains that the B1008N network costs are 50% lower than Intel's because traffic is contained within the system thanks to the built-in Layer 3 switches. However, I don’t understand the higher storage costs for the Intel servers.
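To put the three-year totals in relative terms, here is a small Python sketch that works out how much cheaper the B1008N comes out against each alternative. It uses only the rounded figures quoted above ("about", "around", and "over"), so the percentages are rough estimates rather than Bamboo's own numbers. The `savings_pct` helper is just an illustrative name.

```python
# Approximate three-year TCO figures quoted in the article, in USD.
tco_usd = {
    "Bamboo B1008N": 80_000,
    "AWS": 155_000,
    "Intel Xeon servers": 250_000,  # the article says "over" $250,000
}


def savings_pct(baseline: float, candidate: float) -> float:
    """Percentage saved by choosing `candidate` instead of `baseline`."""
    return round(100 * (baseline - candidate) / baseline, 1)


bamboo = tco_usd["Bamboo B1008N"]
savings = {
    name: savings_pct(cost, bamboo)
    for name, cost in tco_usd.items()
    if name != "Bamboo B1008N"
}

for name, pct in savings.items():
    print(f"B1008N vs {name}: about {pct}% lower three-year TCO")
```

On these rounded inputs, the B1008N works out to roughly 48% cheaper than AWS and at least 68% cheaper than the Intel Xeon servers over three years.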

There’s no availability or pricing information on the product page, but Cambridge Network reports that the Bamboo Systems B1000N Arm server will be available in the US and Europe in Q3 2020, with prices starting at $9,995.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


Yeah i really dont need one of those.

But boy do i want to have one so i open htop and see all those bad boys

> But boy do i want to have one so i open htop and see all those bad boys

Just how? You would need to establish 8 SSH sessions and run htop in each of them just to see a maximum of 16 cores at once.

Better grab a decade old Sun server with two UltraSPARC T2 CPUs and you get htop showing 128 CPUs at once (threads in reality since those ‘Niagara’ CPUs could handle 8 threads per core)

You need a large terminal window to display these 128 ‘CPUs’ at once in htop and it looked really impressive until you realized the horrible ‘per thread performance’ of these SPARC implementations back then. 😉

It’s not just about the number of cores. At Bamboo, we build well-balanced systems. So, for example, we have a high ratio of memory controllers to cores. Each processor has 20Gb/s of networking. Each has 22GB/s PCI access to the SSDs. This gives us a very high throughput capability, as we can make the processor work very hard because we have reduced the bottlenecks. It also scales linearly, as each application processor you switch on comes with its own resources, unlike in a traditionally architected server.

Lastly, to take a large processor and then carve it up, which has been the way we’ve done things until now, requires a hypervisor… which consumes CPU and memory. So, if you require smaller machines than the CPU, the reason for virtualisation, why not build smaller machines?

Seems arm is doing well.

“Fujitsu and the Riken research institute ended up packing 152,064 A64FX chips into what would become the Fugaku system, which is now the world’s fastest supercomputer.”

“For the first time, a machine built with ARM chips has achieved the top ranking as the world’s fastest supercomputer.

On Tuesday, the Top500 supercomputing organization crowned a new champion: Japan’s “Fugaku” system. Based in Kobe, Japan, the machine is able to achieve 2.8 times the processing power of the previous record holder, the US’ Summit supercomputer.”

Fujitsu’s A64FX are special in two ways:

So this is not about ARM in general or a specific instruction set but using super fast memory and super wide SIMD extensions.

And there’s absolutely no relationship at all between this irrelevant supercomputing copy&paste job and what this blog post is about.

You better tell arm that tkaiser knows better.

“We are incredibly proud to see an Arm-based supercomputer of this scale come to life and thank RIKEN and Fujitsu for their commitment and collaboration,” said Rene Haas, president, IP Group, Arm. “Powering the world’s fastest supercomputer is a milestone our entire ecosystem should be celebrating as this is a significant proof point of the innovation and momentum behind Arm platforms making meaningful impact across the infrastructure and into HPC.”

>Seems arm is doing well.

You’ve probably jinxed them now..

Hi there. On the Bamboo web site, you will find a link to the AWS TCO calculator and the inputs used to get the Intel and AWS info. When you run the TCO calculator it has a whole section that explains its reasoning and how it calculates. From there, you can get the Storage information.

Obviously AWS tries to make themselves look like a good financial choice. A couple of things AWS does not include in their calculation are things like the IOPS charge (which is there when you buy your own storage) or a charge for Backup, which they include in On-Prem but not in their solution (so I added that to AWS using their own Backup service charges).

Also, with on-prem you get all the storage upfront, whereas AWS assumes you start at 6TB and build…but they don’t provide the algorithm they used to calculate that.

Lastly, AWS used read-intensive SSDs, whereas ours are read/write. When I used Amazon’s figures for read/write-intensive (io1) storage, they became more expensive than Intel on-prem.