Silicon vendors have been cheating in benchmarks for years, mostly by detecting when popular benchmarks are running and boosting the performance of their processors for the duration of the test, without regard to battery life, in order to deliver the best possible score.

There was a lot of naming and shaming a few years back, and we have not heard much about benchmark cheating in the last couple of years, but AnandTech discovered that MediaTek was at it again while comparing results between the European Oppo Reno3 (MediaTek Helio P95) and the Chinese model (MediaTek Dimensity 1000L): contrary to expectations, the older P95 model delivered much higher performance.

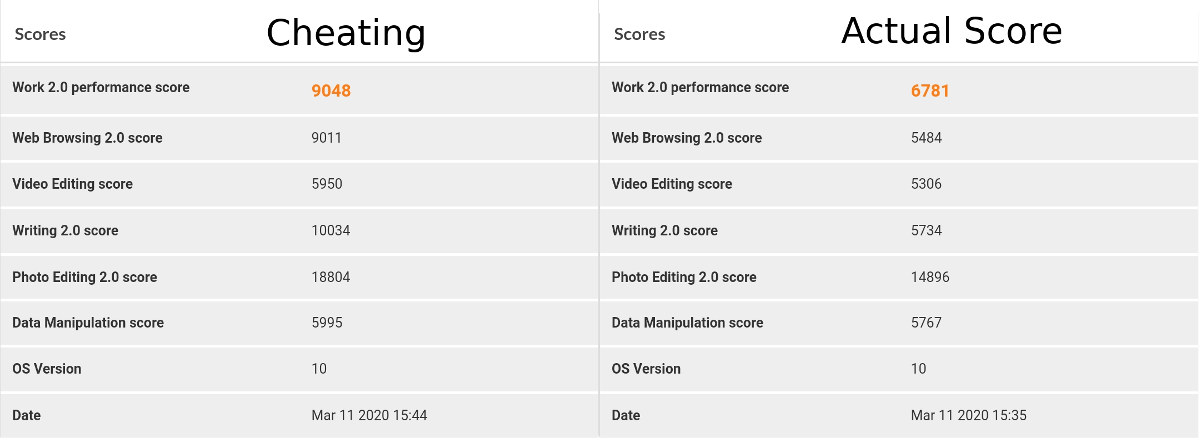

Cheating was suspected, so they contacted UL to provide an anonymized version of PCMark so that the firmware could not detect that the benchmark was running. Here are the before and after results.

As noted by Anandtech, the differences are really stunning: a 30% difference in the overall score, with up to a 75% difference in individual scores (e.g. Writing 2.0).

After digging into the issue, they found that the power_whitelist_cfg.xml text file, usually located in the /vendor/etc folder, contained some interesting entries:

```xml
...
<Package name="com.futuremark.pcmark.android.benchmark">
    <Activity name="Common">
        <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
        <PERF_RES_GPU_FREQ_MIN Param1="0"/>
    </Activity>
</Package>
<Package name="com.antutu.ABenchMark">
    <Activity name="Common">
        <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
    </Activity>
</Package>
<Package name="com.antutu.benchmark.full">
    <Activity name="Common">
        <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
    </Activity>
</Package>
...
```

I've just found that the UMIDIGI A5 Pro has similar parameters, but uses “CMD_SET_SPORTS_MODE” instead of “PERF_RES_POWERHAL_SPORTS_MODE”.
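To check whether a given firmware contains such entries, one could scan the whitelist for both tag variants. This is a minimal sketch using Python's standard `xml.etree.ElementTree`; it assumes the `<Package>` elements shown above sit inside some root element (the real root is elided in the excerpt, so the `<WhiteList>` wrapper used here is hypothetical).

```python
import xml.etree.ElementTree as ET

# The two sports-mode directives seen so far: the MediaTek PowerHAL
# resource name and the variant found on the UMIDIGI A5 Pro.
SPORTS_MODE_TAGS = {"PERF_RES_POWERHAL_SPORTS_MODE", "CMD_SET_SPORTS_MODE"}


def boosted_packages(xml_text: str) -> dict[str, list[str]]:
    """Map each package name to the boost directives found under it."""
    root = ET.fromstring(xml_text)
    result: dict[str, list[str]] = {}
    for pkg in root.iter("Package"):
        directives = [
            elem.tag
            for elem in pkg.iter()
            if elem.tag.startswith(("PERF_RES_", "CMD_SET_"))
        ]
        if directives:
            result[pkg.get("name")] = directives
    return result


def uses_sports_mode(xml_text: str) -> list[str]:
    """Return the packages that get a sports-mode boost, whichever tag is used."""
    return [
        name
        for name, directives in boosted_packages(xml_text).items()
        if SPORTS_MODE_TAGS.intersection(directives)
    ]


if __name__ == "__main__":
    # Hypothetical <WhiteList> root. The first entry is copied from the excerpt
    # above; the second uses the UMIDIGI-style tag for illustration.
    sample = """
    <WhiteList>
      <Package name="com.futuremark.pcmark.android.benchmark">
        <Activity name="Common">
          <PERF_RES_POWERHAL_SPORTS_MODE Param1="1"/>
          <PERF_RES_GPU_FREQ_MIN Param1="0"/>
        </Activity>
      </Package>
      <Package name="com.antutu.ABenchMark">
        <Activity name="Common">
          <CMD_SET_SPORTS_MODE Param1="1"/>
        </Activity>
      </Package>
    </WhiteList>
    """
    print(uses_sports_mode(sample))
```

Matching on the tag prefix rather than a fixed list means other `PERF_RES_*` resources, such as the GPU minimum frequency entry, also show up in the per-package report.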

AnandTech went on to check other MediaTek phones from Oppo, Realme, Sony, Xiaomi, and iVoomi and they all had the same tricks!

The way it works is that smartphone makers must find the right balance between performance and power consumption, which they do by adjusting frequency and voltage with DVFS (dynamic voltage and frequency scaling) to get the best tradeoff. Apps listed in power_whitelist_cfg.xml are whitelisted so that they run at full performance. So in some ways, the benchmarks still represent the real performance of the smartphone, as it would be if the phone were fitted with a large heatsink and/or a fan 🙂
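A toy model of the mechanism described above: a governor normally picks the highest operating point that fits a sustained power budget, but whitelisted packages get the top operating point regardless. The OPP table, the power budget, and the `Opp`/`PowerPolicy` names are all hypothetical; this only illustrates the idea and is not how MediaTek's PowerHAL is actually implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opp:
    """One DVFS operating performance point (hypothetical values)."""
    freq_mhz: int
    volt_mv: int


# Hypothetical OPP table, from lowest to highest performance.
OPP_TABLE = [
    Opp(1000, 650),
    Opp(1500, 750),
    Opp(2000, 850),
    Opp(2600, 1000),
]


def relative_power(opp: Opp) -> float:
    """Dynamic power is roughly proportional to V^2 * f (constants folded in)."""
    return (opp.volt_mv / 1000) ** 2 * opp.freq_mhz


@dataclass
class PowerPolicy:
    power_budget: float         # sustained budget, same arbitrary units as relative_power
    whitelist: frozenset[str]   # package names that bypass the budget

    def pick_opp(self, package: str) -> Opp:
        if package in self.whitelist:
            return OPP_TABLE[-1]  # "sports mode": ignore the budget entirely
        allowed = [o for o in OPP_TABLE if relative_power(o) <= self.power_budget]
        return allowed[-1] if allowed else OPP_TABLE[0]
```

With a budget of 1500 (arbitrary units), an ordinary app is capped at 2000 MHz, while a whitelisted benchmark package gets 2600 MHz: the same silicon, two very different scores.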

MediaTek's answer basically boils down to that same argument (the scores show what the chipset can do at full speed), plus “everybody is doing it anyway”:

We want to make it clear to everyone that MediaTek stands behind our benchmarking practices. We also think this is a good time to share our thoughts on industry benchmarking practices and be transparent about how MediaTek approaches benchmarking, which has always been a complex topic.

…

MediaTek follows accepted industry standards and is confident that benchmarking tests accurately represent the capabilities of our chipsets. We work closely with global device makers when it comes to testing and benchmarking devices powered by our chipsets, but ultimately brands have the flexibility to configure their own devices as they see fit. Many companies design devices to run on the highest possible performance levels when benchmarking tests are running in order to show the full capabilities of the chipset. This reveals what the upper end of performance capabilities are on any given chipset.

…

We believe that showcasing the full capabilities of a chipset in benchmarking tests is in line with the practices of other companies and gives consumers an accurate picture of device performance.

So is that cheating, or should it just be accepted as business as usual or best practices? 🙂

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


Reminds me of the VW emissions scandal.

I guess it's in a grey area between cheating and not. Personally, it would be acceptable to me if, as a user, I was able to add the applications of my choice to that whitelist, agreeing in doing so that this may affect battery life and maybe overall stability. Of course, giving users super powers like these is like rolling the dice when it comes to warranty, but to me that would be honest and transparent to the end customer.

> is that cheating, or should it just be accepted as business as usual or best practices?

Given that the target audience of these Android devices looks at benchmark results in the most stupid way possible (comparing numbers or the length of bar charts without thinking about the meaning of the numbers for their use cases) what’s the alternative?

Even the choice of benchmarks (PCMark in this case) already suggests this is all about generating numbers without meaning. How large is the percentage of people doing video and photo editing on their smartphones? I guess about as large as the number of people affected by a better ‘Writing 2.0 score’: close to zero.

Smartphone users passively consume media and actively play games, so they should look at how fast and efficient video decoding and 3D acceleration on the GPU are, since they're on a mobile device and not on a PC plugged into a wall outlet. Instead they compare useless bar charts produced by kitchen-sink benchmarks targeting ‘office use cases’.

Due to the nature of DVFS (higher frequencies require disproportionately more energy), reaching the upper 25%-30% of the performance scale easily wastes twice the energy compared to remaining at the 70%-75% level. Smartphone users who have to charge their device twice a day will certainly not buy this brand again.

How many consumers will understand that? How many will understand that a benchmark targeted at ‘doing boring work on a PC plugged into a wall outlet’ (AKA PCMark) is pretty much useless for their use case on a battery powered device doing something entirely different?
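The DVFS argument above can be made explicit with the usual first-order model of CMOS dynamic power. This is a simplified sketch: it ignores leakage (static) power, which also grows with voltage and temperature, and assumes a task needs a fixed number of cycles $N$:

$$
P_{\text{dyn}} = \alpha C V^2 f, \qquad t = \frac{N}{f}, \qquad E = P_{\text{dyn}}\,t = \alpha C V^2 N
$$

where $\alpha$ is the activity factor, $C$ the switched capacitance, $V$ the supply voltage and $f$ the clock frequency. Energy per task therefore depends on $V^2$ rather than directly on $f$, but the top operating points need a higher voltage. Assuming, purely for illustration, that the highest operating point needs 1.4 times the voltage of the 70%-75% one:

$$
\frac{E_{\text{top}}}{E_{70\%}} = \left(\frac{V_{\text{top}}}{V_{70\%}}\right)^2 = 1.4^2 \approx 1.96
$$

which is roughly the "twice the energy" figure above. The 1.4 voltage ratio is an assumption, not a measured value for any particular SoC.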

So because most consumers are stupid, companies have the right to cheat? There’s no excuse for cheating, ever.

And if you follow Andrei’s reviews on Anandtech he goes well beyond the benchmarks he highlighted here.

You are completely misunderstanding what is happening. There is no actual “cheating” going on: the scores are not fake, they are real, they are just achieved by ignoring the regular thermal and power limits.

You can also do this manually on the same phone by switching to a high performance mode. Yes, it's sloppy and not very transparent, especially if you don't understand what's going on technically, but it's not cheating as such, just showing the device at its best.

As TK pointed out, there is no such thing as a static stock level of performance, because continually changing thermal conditions feed adjustable kernel and user space policies that adjust power and frequencies.
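As a rough illustration of why there is no static performance level, here is a simplified thermal policy that lowers the frequency cap one step each time the temperature exceeds a trip point, and raises it back once the SoC has cooled down. It is loosely modeled on the idea behind the Linux step_wise thermal governor, but the trip temperature, hysteresis, frequency steps and the `ThermalCap` class are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical cooling states: index 0 is unthrottled.
FREQ_STEPS_MHZ = [2600, 2300, 2000, 1700, 1400]


@dataclass
class ThermalCap:
    trip_c: float = 75.0        # start throttling above this temperature
    hysteresis_c: float = 5.0   # only unthrottle once this far below the trip point
    state: int = 0              # index into FREQ_STEPS_MHZ

    def update(self, temp_c: float) -> int:
        """Adjust the cooling state from one temperature sample; return the cap in MHz."""
        if temp_c > self.trip_c and self.state < len(FREQ_STEPS_MHZ) - 1:
            self.state += 1
        elif temp_c < self.trip_c - self.hysteresis_c and self.state > 0:
            self.state -= 1
        return FREQ_STEPS_MHZ[self.state]
```

Feeding it a rising then falling temperature trace moves the cap from 2600 to 2300 to 2000 MHz and back, so the same workload scores differently depending on how warm the phone already is when the run starts.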

You're discovering marketing, Laurent. It's a way to say a certain truth from a certain perspective, using general terms that are commonly understood as having a general meaning, and making one think it always applies. Listen to radio ads about cars: “our car can run for 900 km without refilling”. With how large a tank? Or “our car only consumes 3.5 L/100km in cities”. Maybe when driving at night with all green lights? The principle is that such tests must be reproducible if challenged, but their meaning is usually irrelevant to the consumer's usage.

> because most consumers are stupid

Why should consumers be stupid? They’re just mostly clueless and trained to look at the totally wrong metrics (that was the ‘looking at benchmark results in the most stupid way possible’ part).

Over the last decade, PC users obsessed with CPU benchmark scores (especially the irrelevant multi-threaded numbers) who wanted to spend extra money on a faster system, and had to choose between ‘SSD instead of HDD’ and ‘faster CPU’, chose the more expensive CPU and ended up with a system that was slower in most if not all ‘PC use cases’.

And here it's the same. People obsessed with numbers without meaning, mostly not willing or able to understand that you can't have high sustained performance without thermal constraints and compromises on battery life, and wanting to pay as little as possible. How should device vendors deal with this ‘benchmark dilemma’?

And the majority of these kitchen-sink benchmarks are crappy anyway. They show BS numbers like the max CPU frequency taken from some device tree definitions instead of simply measuring the clock speeds, they do not monitor benchmark execution, and so on. And this is the basis consumers rely on when choosing a new gadget they throw away within two years anyway.

I've been doing performance analysis of upcoming CPUs for 20 years in design teams. So thanks guys for teaching me about marketing and why people are clueless about perf 😉

I know the performance landscape and how people, even well-educated ones, react to and perceive it, and how they misunderstand what they see even when it hasn't been made to look nicer with various tricks. Part of the job is to educate people, and you don't get anywhere by cheating. I agree the word cheating is perhaps strong in this context, but it nonetheless misrepresents what an SoC is able to achieve in a device under normal conditions.

Oh well in fact it’s likely because my job is performance analysis that I just can’t stand all of that and that it drives me mad 😀

My job has also involved a long history of benchmarking over the last 20 years, but on network products, including some benchmarks I authored myself. What I can say is that as technology evolves, the number of dimensions increases and the relevant ones change over time. CPUs progressively switched from being rated in MHz (including PR-rating) to battery life plus top speed for a short period before throttling. Users are not prepared for this, as it is too abstract.

In my area, some vendors used to measure bandwidth while packet rates, and later connection rates, were mostly what mattered; nowadays, even connection rates are not enough because you have to add TLS in various versions and flavors, as you never know which case will be relevant to your users. While I've tried a lot to teach people how to read such numbers and not be misled by them, I continuously hear people report just one number out of nowhere as if a single metric were possible, not understanding why their projected use case differs from someone else's.

And I came to the conclusion that it's human. We can only compare single-dimensional items (“the tall guy”, “the small car”, etc.). If you're not expert enough in an area to continuously follow all improvements, you can only remember one dimension, often the one that helped you make your decision, just like your height is the one body measurement on your ID card.

Thus it remains the responsibility of those producing benchmark results to educate their readers about how the results are produced, what they mean, and how they can be influenced and twisted compared to real use cases. Typically, in network tests, what you measure in the lab is always better than reality because your test equipment is local and the latency is much lower. Once you're aware of it, it's not a problem. For application benchmarks, the possible presence of a background browser eating 80% of one core because of 50 open “idle” tabs is rarely taken into account, yet it will often significantly affect real use cases by limiting the CPU's top frequency on the other cores, or even heating the SoC enough to make it throttle much sooner.

Willy, I suggest the clue is in the phrase “To give informed consent, the person needs to be able to read, comprehend and understand”.

When explaining to an audience, remember KISS and the Plain English Campaign. (There might be similar campaigns in other people's native languages too.)

Remember too: “Pinky: ‘Gee Brain, what are we gonna do tonight?’ Brain: ‘The same thing we do every night, try to take over the world!’”

>Oh well in fact it’s likely because my job is performance analysis

This is called an “appeal to authority”[0]. There are plenty of people who have been doing a job for a long time and doing it badly or just wrong. If you disagree with what tkaiser is saying, that the benchmarks are not outright cheating but actually show the best possible case, then come up with an argument against that, and not “well, I'm a totally anonymous internet person, but here are my credentials you can't verify, and I'm super important and always right”.

[0] The same tactic used by people trying to claim that 5G towers cause COVID-19

> what tkaiser is saying, that the benchmarks are not outright cheating but actually the best possible case

Seems I failed to express myself. My point is that this type of benchmarks is irrelevant anyway. But consumers love to compare numbers or the length of bar charts, don’t care that those numbers say nothing about their use case (mobile device) and as such somehow device vendors have to deal with this.

For me personally an important benchmark is how long those things get security/kernel updates and how much privacy can be preserved with a specific device (family).

>how long those things get security/kernel updates

>how much privacy can be preserved with a specific device (family).

You probably need to be spied on for revenue generation after the phone purchase to be deemed worthy of getting any updates past the initial 6 months.

> getting any updates past the initial 6 months.

The last phone I bought new (back in Sep. 2012) got updates for 5 consecutive years, the one I used since Sep. 2017 got security/kernel updates for 6 years so I hope that the one I’m using now (also used) will be supported at least until the end of 2022.

Nexus/Pixel or iPhone?

> Seems I failed to express myself. My point is that this type of benchmarks is irrelevant anyway. But consumers love to compare numbers or the length of bar charts, don’t care that those numbers say nothing about their use case (mobile device) and as such somehow device vendors have to deal with this.

I still fail to see the logic which leads from “consumers have no clue about what this represents with their use case” to “a vendor has the right to play games with benchmarks”.

But I agree that many perf benchmarks are useless (and misleading) for many users. That still doesn’t give a SoC vendor the “right” to do what is described here (again that’s my personal opinion twisted by my job).

> For me personally an important benchmark is how long those things get security/kernel updates and how much privacy can be preserved with a specific device (family).

I agree but I thought we were talking performance benchmark.

> I still fail to see the logic which leads from consumers have no clue about what this represents with their use case to a vendor has the right to play games with benchmarks.

If the consumer doesn’t understand what the benchmarks mean then whether they have been gamed or not doesn’t matter anymore. We’re talking “Schrödinger’s benchmark” at that point.

> I thought we were talking performance benchmark.

I guess that’s your job description and partially mine. But here it’s solely about marketing numbers 🙂

What you are doing, dgp, is known as “attacking the player, not the ball”, as his skill exceeds you.

>as his skill exceeds you.

That could be true. He could also be a toilet cleaner at an ASIC designer and think that puts him on the same level as the CTO. Neither situation would change whether the benchmarks are actually cheating or not or whether or not it’s right to do so.

He could also be the server room janitor or server room bell boy. Every time the server rings the trouble bell he comes running.

Every deceiver always justifies their actions.

I wasn’t talking out of authority, I’m not into dick games. I was in fact explaining why my view on this matter is likely very biased by my job which is to provide trustable performance results to design teams.

>I wasn’t talking out of authority, I’m not into dick games.

Which is why you went off on one about being taught about marketing and dropped in that you work in an impressive field that has nothing to do with marketing?

It's not that black and white. The problem mostly is the total lack of transparency, and in this case the utility running the benchmark is at fault for not collecting enough info about the environment it's running in. Indeed, users who will be tempted by the device after seeing a benchmark may rightfully expect to see the same level of performance on the tested application. At the very least, if the benchmark utility indicated “I'm running with the following settings”, the user could actually apply them to their beloved applications and get the expected performance at the expense of battery life.

I'd say that an ideal operating system should assign just the right performance to an application: not too much, to save battery life, and not too little, in order to provide a nice experience. After all, that's why we already have big.LITTLE. Some people like to slow down their PC when running on battery, for example. So it's fine to assign performance based on the application and conditions. It's just not fine not to be clear about it.

And typically, when I build a kernel, I increase my CPU's max temperature so that it throttles less and builds faster. The rest of the time I don't, because the fan makes a terrible noise when this happens (think about starting Firefox or Chrome). If my system automatically adapted to my use case by detecting what I'm doing, that would be awesome. In some regards this is what we're seeing in this benchmark, except that the performance boost is granted to a measuring tool and not to a measured application, which makes the situation much less honest.
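On Linux-based systems, including Android, a benchmark could at least record the per-CPU frequency policy it ran under by reading the standard cpufreq sysfs attributes (`scaling_governor`, `scaling_min_freq`, `scaling_max_freq`, `scaling_cur_freq` under `/sys/devices/system/cpu/cpuN/cpufreq/`). This is a minimal sketch: an unprivileged Android app may not be allowed to read these files, and a vendor PowerHAL boost may also act on things not visible here (GPU, memory, scheduler), so treat the snapshot as partial.

```python
from pathlib import Path

CPUFREQ_ATTRS = (
    "scaling_governor",
    "scaling_min_freq",
    "scaling_max_freq",
    "scaling_cur_freq",
)


def snapshot_cpufreq(base: str = "/sys/devices/system/cpu") -> dict[str, dict[str, str]]:
    """Return {cpu name: {attribute: value}} for every CPU exposing cpufreq.

    Missing or unreadable attributes are skipped, so this still returns
    something sensible on systems without cpufreq or with restricted sysfs.
    """
    snapshot: dict[str, dict[str, str]] = {}
    for cpu_dir in sorted(Path(base).glob("cpu[0-9]*")):
        values: dict[str, str] = {}
        for attr in CPUFREQ_ATTRS:
            try:
                values[attr] = (cpu_dir / "cpufreq" / attr).read_text().strip()
            except OSError:
                continue
        if values:
            snapshot[cpu_dir.name] = values
    return snapshot


if __name__ == "__main__":
    for cpu, attrs in snapshot_cpufreq().items():
        print(cpu, attrs)
```

Printing this before and during a run, next to the score, is the kind of “I'm running with the following settings” report that would let readers spot a boosted configuration.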

Personally, at this point I think the benchmark users and creators are partly at fault.

Rather than benchmark the speed alone, they should focus on how fast a device can go for how long.

For instance, create scenes of varying complexity, set an upper bound on the framerate and see how long the device keeps going until the battery is depleted.

That would more closely resemble a device's practical performance…
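The “upper bound for the framerate” part is essentially frame pacing: render a frame, then sleep for whatever is left of the frame budget, so a fast device gets no credit for frames above the cap and idles instead. A minimal sketch, where `render_frame` is a hypothetical stand-in for the actual scene; the battery side of the idea would need a platform API and is left out here.

```python
import time
from typing import Callable


def run_capped(render_frame: Callable[[], None], max_fps: float, duration_s: float) -> dict[str, float]:
    """Render frames for duration_s seconds without exceeding max_fps.

    Returns the achieved average fps and the fraction of frames that missed
    their deadline, i.e. how often the device could not sustain the cap.
    """
    budget = 1.0 / max_fps
    frames = missed = 0
    start = time.perf_counter()
    next_deadline = start + budget
    while time.perf_counter() - start < duration_s:
        render_frame()
        frames += 1
        now = time.perf_counter()
        if now > next_deadline:
            missed += 1
            next_deadline = now + budget  # resynchronize instead of trying to catch up
        else:
            time.sleep(next_deadline - now)  # idle time is where energy is saved
            next_deadline += budget
    elapsed = time.perf_counter() - start
    return {"avg_fps": frames / elapsed, "missed_ratio": missed / frames if frames else 0.0}
```

Run over a whole battery charge, a rising `missed_ratio` would show the moment the device stops sustaining the cap, which says more about practical performance than a single peak score.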

I was thinking exactly the same and totally agree with you. Still, some will complain that the benchmark is purposely run at low speed to maximize battery life or run at full speed to minimize wait time. There’s a trade-off there that only the end user can choose, and synthetic benchmarks will never cover all corner cases, because they’re… benchmarks.

I can imagine much better performance when you have big heatsink on SOC 🙂

What do benchmark devs have to say about this?

It's their job, after all, to maintain the context in which easily digestible, but otherwise meaningless numbers can be more or less meaningfully compared.

Everybody cheating or nobody cheating does not disrupt the context; somebody cheating does.

> What do benchmark devs have to say about this?

This for example: http://www.brendangregg.com/ActiveBenchmarking/bonnie++.html

You always need to benchmark the benchmark first to see what it pretends to test and what it actually tests. Of course none of this happens with any ‘commonly accepted’ benchmark for consumer devices.

What it actually tests is irrelevant here. It may as well be a blackbox.

Think of the popular benchmark results as a hall of fame in a classic arcade game: another metric taken as a goal.

What does Donkey Kong measure? Quite a lot, yet it's not directly applicable to measuring your “real-world” performance of any kind.

Is it possible to extract real-world benefits by cheating? Billy Mitchell shows it’s absolutely possible.

Would the playing field be completely different if everyone were using emulators to get best chance at top performance? Sure, just look up MAME leaderboards.

Once enough people start taking numbers seriously, what they actually measure takes a distant second place to how exactly they are measured (see any established sport, from curling to classic tetris tournaments for additional examples).

I’ve thought about it and I have to apologize that my wording misled you. I meant specifically the developers of big enough benchmarks that can serve as a goal for manufacturers. I thought I put ‘the’ in there, but I didn’t.

Your point about active benchmarking is valid. I think I’ve read it a year ago or so following the link you once posted in comments on this site.

Following the spirit of active benchmarking, not its letter, phones and SBCs should have different benchmarks, not number-centric, but use case-centric.

Both are hard problems to tackle and even harder to automate, but make for an interesting thought experiment.

Is it even possible to 80/20 phone use cases, so the result will be short enough to make it readable?

Consider notebookcheck.net listings for mobile SoCs. I find them unreadable and barely useful, although they tend to have several screens worth of test data (its accuracy is irrelevant at the moment).

Is it possible to have a relatively short list of pass/fail criteria for phones, with a few numbers on how long a phone can do each thing on a full charge? I wonder.

Like, can I shoot and record 1080@60? Can I play PUBG? For how long? Can I play PUBG competitively? And so on.

> Is it possible to have a relatively short list of pass-fail criteria for phones with a little bit of numbers of how long it can do it on full charge or no

Since I fear you're asking me: dunno. And I'm a ‘use case first’ guy anyway, so my list of criteria looks totally different from others'. Also, I'm not interested in buying a new phone anytime soon or at all, since my requirements are too low to justify it (email, chat, receiving monitoring notifications, some web browsing and watching cat videos).

I've been happy with 512/1024 MB of RAM for the last decade and had a dual-core ARM CPU until recently (for the last two years, supposedly the first 64-bit ARM SoC available in a consumer device, without me even knowing or caring), and the only performance metric I could have thought of is ‘rendering complex websites’ (funnily, cnx-software being the gold standard, since it's one of the few sites where I allow ads).

Or the other way around: when I first saw reviews/benchmarks of the iPad Pro some years ago, with the ‘phenomenal’ IO throughput numbers due to this device using really fast NVMe storage, I thought: what's the point of looking at these numbers? There's no use case benefiting from this, and most probably the only real benefit of this ultra-fast storage implementation is ‘race to idle’ (extending battery life because storage-related tasks finish in a fraction of the time, allowing everything storage-related to be sent to deep sleep 99.9% of the time).

This changed a year later when I watched a friend of mine working on such a tablet (she's a graphic designer) and thought about the ‘camera capabilities’ of these mobile gadgets, which require an extensive amount of processing power $somewhere (not necessarily the CPU).

Still the kitchen-sink benchmarks consumers base their decision on have nothing to do with all of this.

Yup, the good old “90% of users use just 10% of the features, but each one uses a different 10%”.

I use an IP68 phone as a substitute for Nexus 7 2013 as they don’t do 7″ tablets anymore. I don’t even have a SIM in it, let alone play games.

But the problem looks hardly surmountable for me too.

The bewildering range of use cases makes it very non-trivial to provide a relevant list for the user of that benchmarking service.

Using real cases instead of synthetic loads all but ensures a lot of manual time invested into testing (and retesting after a year or so) of each phone.

I struggle to find a sustainable monetization model for such a service, which would require at least one full-time tester to remain relevant, not even counting the cost of the phones themselves.

Benchmarks like Antutu or GeekBench avoid all these problems at the cost of their results being relevant only within their own database.

But they don't have to buy the phones or test them themselves, as it's all up to the user to download an app, wait ten to fifteen minutes and look at the score.

So, I guess, these are the benchmarks we deserve.

Meh, if you're not going to buy a MediaTek-based phone because of this, then you might as well not buy any phone, as they are literally all doing this stuff, including western companies like Apple.

Never believe benchmarks or amazon reviews.

In my opinion this is not cheating.

PCMark is as much a benchmark as “professional wrestling” is a sport. Both are “a form of performance art and entertainment” [1]. It also seems a lot of people believe both to be “real”. 😉

The article cites a difference between “9048” and “6781” as evidence of foul play. However, these numbers lack any units of measure. What is the difference of 2267 representative of? This is similar to a graph with no units: it does not provide any meaningful information. I simply do not believe it's possible to cheat when dealing with abstractions.

[1] https://en.wikipedia.org/wiki/Professional_wrestling

The computing press benchmarks are 99+% marketing, in contrast to scientific measurements. For instance, they rarely express results as percentages.

In a recent article a benchmark result of VRMark and 3DMark reported 8774 for a Ryzen 3 3200G and 8642 for a Core i3-9100. Mmm… 8642/8774*100 = 98.5%. Now, what is the precision of these measurements, 5%? More? Less? In other words there is no difference for this particular measurement. Yet, the uncareful reader will conclude a Ryzen 3 3200G is better than a Core i3-9100 because in the chart the bar for the former is longer than the latter.
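The arithmetic above generalizes into a quick sanity check before declaring a winner: express the gap as a percentage and compare it with the measurement uncertainty. The 5% default below is only the hypothetical figure raised in the comment; the real run-to-run variation has to be measured for each benchmark and device.

```python
def relative_gap(a: float, b: float) -> float:
    """Gap between two scores, as a fraction of the larger one."""
    return abs(a - b) / max(a, b)


def is_meaningful(a: float, b: float, rel_uncertainty: float = 0.05) -> bool:
    """Treat a difference as real only if it exceeds the measurement uncertainty."""
    return relative_gap(a, b) > rel_uncertainty


ryzen, core_i3 = 8774, 8642
print(f"{core_i3 / ryzen:.1%}")               # 98.5%
print(f"{relative_gap(ryzen, core_i3):.1%}")  # 1.5%
print(is_meaningful(ryzen, core_i3))          # False: within the assumed 5%
```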

It is also not rare to see charts where the y axis does not start at zero, emphasizing the differences even though, in percentage terms, they are still within the uncertainty of the measurements.

In other words, a large part of these benchmarks are pure BS practiced by media people who think they are doing scientific and statistical analysis of the products they review.
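How much a truncated axis exaggerates a gap is easy to quantify: the drawn bar lengths are measured from the axis origin, not from zero. The 8500 baseline below is a hypothetical choice, applied to the two 3DMark/VRMark scores quoted in the comment.

```python
def apparent_ratio(a: float, b: float, axis_start: float = 0.0) -> float:
    """Ratio of drawn bar lengths when the value axis starts at axis_start."""
    low, high = min(a, b), max(a, b)
    if axis_start >= low:
        raise ValueError("axis_start must be below both values")
    return (high - axis_start) / (low - axis_start)


scores = (8774, 8642)
print(f"{apparent_ratio(*scores):.3f}")        # 1.015: axis from zero, ~1.5% apart
print(f"{apparent_ratio(*scores, 8500):.3f}")  # 1.930: axis from 8500, one bar looks ~2x longer
```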

It is easy to make numbers say things that are utterly wrong. Using the PC benchmark industry standard, it is obvious that the people of Zimbabwe is an exceptional people. The average number of legs per Zimbabwean is less than two because there are people with only one leg, e.g. as the result of illness or accident. Yet most Zimbabweans have two legs.

Conclusion: according to this result, the people of Zimbabwe is an exceptional people.

Your comparison with the number of legs is interesting because indeed, what most of such benchmarks measure is comparable to the average number of legs per country, seeing some at 1.995 and others at 1.997 and then declaring you’d rather live in the country with 1.997 as it’s so much better, but at the same time they’d never report the number of samples used nor the standard deviation in the measure, would not care about measurement errors resulting in one country reporting 2.01, and they’d probably make their graph start at 1.98 to emphasize the difference between countries!

Exactly. And for those who did not get the joke, the sentence I purposely omitted was: “…therefore most Zimbabweans are above average (in terms of number of legs), hence the people of Zimbabwe is an exceptional people” 🙂
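The joke is the classic mean-versus-median trap, and Python's standard `statistics` module makes it concrete. The population below (999 people with two legs, one with one leg) is made up for illustration.

```python
import statistics

legs = [2] * 999 + [1]  # hypothetical population of 1,000 people
mean = statistics.mean(legs)
median = statistics.median(legs)
above_average = sum(1 for x in legs if x > mean) / len(legs)

print(mean, median, f"{above_average:.1%}")  # 1.999 2.0 99.9%
```

The median reports the typical case (two legs), while the mean lets 99.9% of the population be “above average”.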

Benchmarks would also greatly benefit from using the median and percentiles when comparing products. This would eliminate the BS about sub-5% differences.
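A sketch of such a summary for repeated runs of one benchmark, using only the standard library (`statistics.quantiles` needs Python 3.8 or later). The run scores are invented for illustration.

```python
import statistics


def summarize(scores: list[float]) -> dict:
    """Robust summary of repeated benchmark runs."""
    # 19 cut points (5th, 10th, ..., 95th percentile); "inclusive" keeps
    # them within the observed range instead of extrapolating.
    q = statistics.quantiles(scores, n=20, method="inclusive")
    return {
        "n": len(scores),
        "median": statistics.median(scores),
        "p5": q[0],
        "p95": q[-1],
        "stdev": statistics.stdev(scores),
    }


device_a = [8790, 8701, 8812, 8655, 8774, 8720, 8801, 8690]  # hypothetical runs
device_b = [8642, 8760, 8610, 8735, 8700, 8680, 8751, 8629]
for name, runs in (("A", device_a), ("B", device_b)):
    print(name, summarize(runs))
```

If the p5 to p95 ranges of two devices overlap, as they do with these invented numbers, that is a strong hint the benchmark cannot tell them apart, whatever the bar chart suggests.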

The benchmark software should just add another test that loops the tests from a full charge down to 5% battery and reports the runtime and average score. Sure, some companies will just solder the battery leads to an external supply and cool the board with external fans… But that will be offset by the many private home users running the tests, as well as by the (video-recorded) reports of journalists and bloggers.
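The loop described above could look like the sketch below. `run_benchmark_once` and `read_battery_percent` are hypothetical callables supplied by the benchmark and the platform (on Linux, the battery level is often exposed as a `capacity` file under `/sys/class/power_supply/`, but names vary per device), so they are passed in as parameters; the 5% stop threshold comes from the comment.

```python
import statistics
import time
from typing import Callable


def battery_rundown(
    run_benchmark_once: Callable[[], float],
    read_battery_percent: Callable[[], float],
    stop_at_percent: float = 5.0,
) -> dict[str, float]:
    """Loop a benchmark from the current charge down to stop_at_percent.

    Reports total runtime, number of iterations, average score and the
    ratio between the last and first score (a crude throttling indicator).
    """
    scores: list[float] = []
    start = time.monotonic()
    while read_battery_percent() > stop_at_percent:
        scores.append(run_benchmark_once())
    runtime = time.monotonic() - start
    if not scores:
        raise RuntimeError("battery already at or below the stop threshold")
    return {
        "runtime_s": runtime,
        "iterations": len(scores),
        "avg_score": statistics.mean(scores),
        "sustained_ratio": scores[-1] / scores[0],
    }
```

Reporting the runtime next to the average score means a whitelisted “sports mode” that drains the battery faster shows up as a shorter runtime rather than only as a higher number.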

No, the solution is quite easy: the package name should be randomized upon each installation. This would defeat the logic they use to detect when a benchmark is installed or running.
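A sketch of the idea and of why it defeats an exact-match whitelist such as the power_whitelist_cfg.xml entries above (assuming the firmware matches package names exactly). The `randomized_package` helper and its suffix scheme are hypothetical.

```python
import secrets

WHITELIST = {"com.futuremark.pcmark.android.benchmark", "com.antutu.ABenchMark"}


def randomized_package(base: str) -> str:
    """Append a random segment; it starts with a letter to stay a valid name part."""
    return f"{base}.r{secrets.token_hex(4)}"


def is_boosted(package: str) -> bool:
    """Exact-match lookup, as the firmware's whitelist is assumed to do."""
    return package in WHITELIST


original = "com.futuremark.pcmark.android.benchmark"
renamed = randomized_package(original)
print(is_boosted(original), is_boosted(renamed))  # True False
```

Note that on Android the package name is baked into the APK, so randomizing it per installation would in practice mean shipping differently named builds, which is presumably close to what the anonymized PCMark build provided by UL did. Firmware could also fall back on signals other than the package name.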

There is no question here.

It is cheating and is therefore a con.

A con is fraudulent and in many nations, fraudulent behaviour is illegal.

It does not matter whether it is in line with industry practice because wrong is wrong, no matter how widespread the practice.

My LeEco Android TV (MStar SoC) does this too. I dug into the system and found out that it sets a property to “benchmark” while a benchmark application is running, and while that property is set, there is a method which manipulates the DVFS values based on a value in /proc.

Laughably, look at the name of the script 🙂

```sh
root@DemeterUHD:/ # cat /system/bin/benchmark_boost_monitor.sh
#!/system/bin/sh

# Keep boosting for as long as the system property says a benchmark is running
while [ "$(getprop mstar.cpu.dvfs.scaling)" = "benchmark" ]
do
    # 0x5566 is a magic number which means that we want to extend the expiration timer
    echo 1 0x5566 > /proc/CPU_calibrating
    sleep 60
done
```

UL did not appreciate MediaTek’s little trick and delisted several SoCs and phones from their benchmark rankings.

https://benchmarks.ul.com/news/ul-delists-mediatek-powered-phones-with-suspect-benchmark-scores