When Arm set out to enter the server market, it realized it had to provide systems that could boot standard operating system images without modifications or hacks, just as x86 servers do. So in 2014 the company introduced the Server Base System Architecture (SBSA) specification so that a single OS image can run on all ARMv8-A servers.

Later on, Arm published the Server Base Boot Requirements (SBBR) specification describing standard firmware interfaces for servers, covering the UEFI, ACPI and SMBIOS industry standards, and in 2018 it introduced the Arm ServerReady compliance program for Arm servers.

While those are specific to Arm servers, some people are pushing for SBBR compliance on Arm PCs, and one project aims to build an SBBR-compliant (UEFI+ACPI) AArch64 firmware for the Raspberry Pi 4.

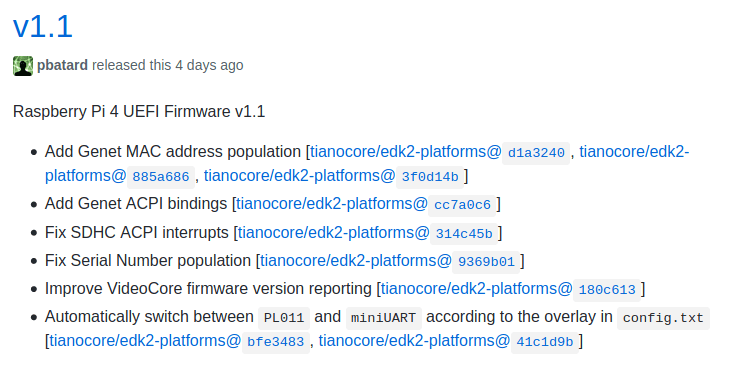

The firmware is a build of a 64-bit TianoCore UEFI port, and version 1.1 was just released on February 14, 2020.

Note that the Raspberry Pi 4 UEFI firmware is still experimental, so there will be bugs, and some operating systems such as Windows 10 may not boot at all. It can, however, boot Debian 10.2 for ARM64 from USB, with the caveat that Ethernet and the SD card won’t be available because up-to-date drivers are missing.

After download, the firmware image can be installed in two steps:

- Create an SD card in MBR mode (GPT and EFI are not supported) with a single partition of type 0x0c (FAT32 LBA) or 0x0e (FAT16 LBA). Then format this partition as FAT.

- Extract all the files from the archive onto the partition
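The partition requirements above can be sanity-checked before booting. The following is only a minimal sketch, not something shipped with the firmware: it reads the first 512-byte sector of a card or image and inspects the four classic MBR partition entries. The function names (`partition_types`, `check_layout`, `make_mbr`) are hypothetical, and it assumes the standard MBR layout (partition table at byte offset 446, 16 bytes per entry, type byte at offset 4 of each entry, 0x55AA signature at the end).

```python
from typing import List

MBR_SIZE = 512
PARTITION_TABLE_OFFSET = 446   # start of the four primary partition entries
PARTITION_ENTRY_SIZE = 16
BOOT_SIGNATURE = b"\x55\xaa"   # last two bytes of a valid MBR sector
ACCEPTED_TYPES = {0x0C: "FAT32 LBA", 0x0E: "FAT16 LBA"}
GPT_PROTECTIVE_TYPE = 0xEE     # a GPT disk exposes this type in its protective MBR


def partition_types(sector: bytes) -> List[int]:
    """Return the type bytes of all non-empty primary partition entries."""
    if len(sector) < MBR_SIZE:
        raise ValueError("need at least 512 bytes")
    if sector[510:512] != BOOT_SIGNATURE:
        raise ValueError("missing 0x55AA boot signature")
    types = []
    for i in range(4):
        start = PARTITION_TABLE_OFFSET + i * PARTITION_ENTRY_SIZE
        ptype = sector[start + 4]  # type byte sits at offset 4 of the entry
        if ptype != 0x00:          # 0x00 marks an unused entry
            types.append(ptype)
    return types


def check_layout(sector: bytes) -> str:
    """Describe whether the sector matches the layout listed in the steps above."""
    types = partition_types(sector)
    if GPT_PROTECTIVE_TYPE in types:
        return "GPT detected: not supported"
    if len(types) != 1:
        return f"expected a single partition, found {len(types)}"
    if types[0] not in ACCEPTED_TYPES:
        return f"unsupported partition type 0x{types[0]:02x}"
    return f"ok: {ACCEPTED_TYPES[types[0]]}"


def make_mbr(ptype: int) -> bytes:
    """Build a synthetic MBR sector with one partition, for demonstration only."""
    sector = bytearray(MBR_SIZE)
    sector[PARTITION_TABLE_OFFSET + 4] = ptype
    sector[510:512] = BOOT_SIGNATURE
    return bytes(sector)


if __name__ == "__main__":
    print(check_layout(make_mbr(0x0C)))
    print(check_layout(make_mbr(0xEE)))
```

To check a real card, pass in the first 512 bytes read from the device or image file (opened in binary mode) instead of the synthetic sector. The sketch only looks at the partition table; it does not verify that the partition was actually formatted as FAT.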

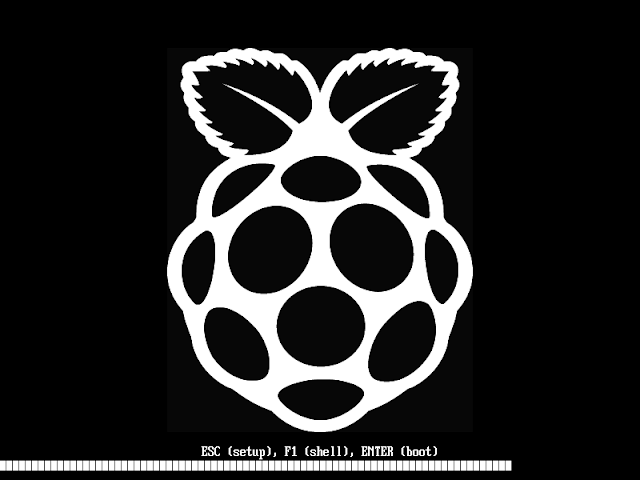

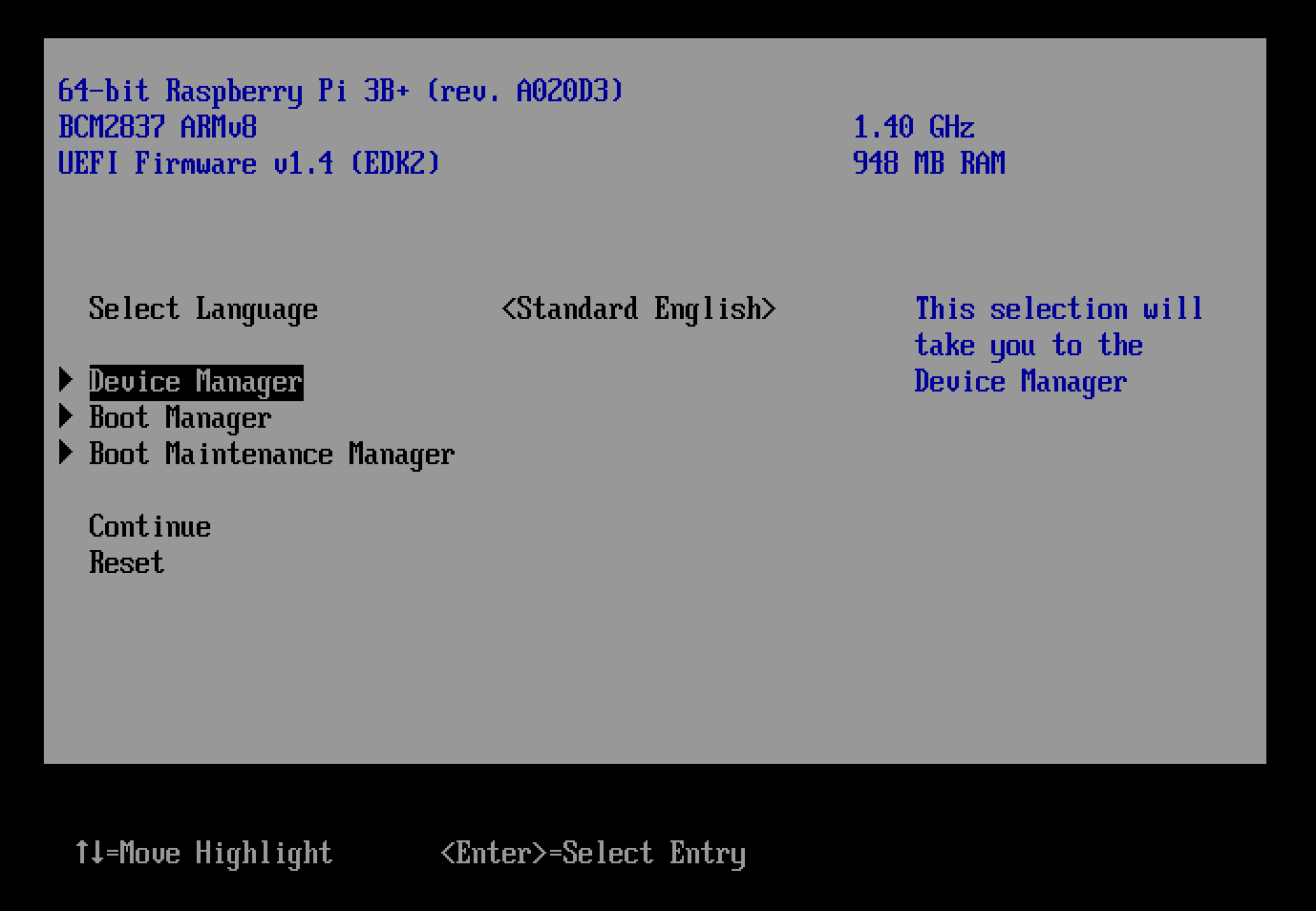

Now insert the SD card and/or plug in the USB drive, then power up your Raspberry Pi 4. A multicolored screen should soon show up, followed by a black & white Raspberry Pi logo, meaning the UEFI firmware is ready. You can then press Esc to enter the firmware setup, press F1 to launch the UEFI Shell, or let it boot whatever compatible operating system (ISO image) is present on the SD card or USB drive (default behavior).

You can follow the project on the dedicated website, and get involved with the developers on Discord. You may also consider following WhatAintInside on Twitter for updates about the project and everything ARM64.

As a side note, the Raspberry Pi 3 model B/B+ is already EBBR (Embedded Base Boot Requirement) compliant with its own UEFI firmware available on Github. As I understand it, the RPi 3 firmware relies on the device tree file instead of ACPI required for SBBR compliance.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

One operating system in particular really likes UEFI compliance. But it can be a pane to use at times.

I think 20H1 will be interesting for that particular OS on Pi…

> I think 20H1 will be interesting for that particular OS on Pi

Why exactly?

I’ve used the UEFI firmware on the Pi3. It worked fairly well, although 64-bit Debian consistently benchmarked below Raspbian, so I went back. I will give this a try on my Pi4 though, and it will be interesting to see how it runs.

Did you reset the stock firmware clock rate after installing it? The setting is in the “device menu” shown in the screenshot above. By default it’s something like 1/2 the rated frequency, which makes everything really slow.

Yes. 64 bit on the pi 3 is unfortunately just slower in most things. We’re talking benchmark slower by maybe 5%, not watching the system churn butter slower.

> I’ve used the UEFI firmware on the Pi3.

Why?

To try stock 64-bit Debian. UEFI makes it easier to run mainline kernels. This was before the foundation had a change of heart on 64-bit support.

The VideoCore based RPi is really a sick platform 🙂

To be able to run a ‘stock’ 3rd OS like arm64 Debian you need to chainload two other obscure OS first. ThreadX that is in charge of everything, then UEFI that has not even a chance to bring up the hardware but has to emulate everything relevant (ACPI, NVRAM, etc.).

Yeah, I get it. It’s a hobby thing. It’s fun to experiment. It’s far cheaper than a real UEFI Arm64 board.

Do you find it necessary to mention ThreadX in every article you reply on? You’re obsessed…

I appreciate tkaiser’s comments. Other newcomers would too

How about the Pi4, how does it run?

Well, on the web page at the bottom they say “Visitors are welcome and contributors are highly encouraged” yet they secretly hide everything behind yet-another-closed-door-discussion-channel which requires you to create an account just to follow the discussions. That’s nonsense. This also means that nothing of the possible design discussions exchanged there will ever be archived anywhere. The world is getting sick…

> The world is getting sick

The RPi world is already totally sick. Where does the requirement to format the SD card with MBR/FAT originate from, and why can neither GPT nor a 0xef partition type (EFI System Partition) be used? Because they conceal that in reality they do not just ship some UEFI emulation layer but also pull in the primary OS, and so have to ship two operating systems with their releases: https://github.com/pftf/RPi4/blob/c43c1ed8b95bf44458d5fb59ac3f76efa8093cc6/appveyor.yml#L16-L19

Those are the BLOBs containing ThreadX to run on the VideoCore (that still can only cope with partitions and filesystems from the past) and the stuff for ACPI emulation.

I have a different view on this. As much as I dislike UEFI and ACPI, these could actually improve cross-platform compatibility in the future for the ARM world, hopefully reaching a point close to the x86 server world where, provided that your kernel has the relevant storage drivers, you actually manage to boot to a system which can then load the other hardware-specific drivers. And in this perspective, what’s better than a misdesigned RPi as an experimentation playground? As you say, it does require some emulation layers, it causes some difficulties that regular systems will not face, and it has hundreds of thousands of enthusiast beta-testers available! I mean, once it starts to work there, we’ll know the solution will be robust enough to be ported to more serious platforms with much less effort.

> we’ll know the solution will be robust enough to be ported to more serious platforms with much less effort.

I don’t get it since I thought ‘we’ would be already there, see the NetBSD 9.0 announcement here from today and ‘Compatibility with “Arm ServerReady” compliant machines (SBBR+SBSA) using ACPI. Tested on Amazon Graviton and Graviton2 (including bare metal instances), AMD Opteron A1100, Ampere eMAG 8180, Cavium ThunderX, Marvell ARMADA 8040, QEMU w/ Tianocore EDK2’.

So serious ARM hardware tries to be SBBR+SBSA compliant and has real ACPI/UEFI, for the random Android SoC on our SBCs this never will be an issue since why should their vendors stop with their ‘port and forget’ mentality? If it works with their own outdated BSP bootloader/kernel to get a somewhat recent Android booting mission is accomplished, isn’t it?

And on the RPi I would believe the majority of ‘enthusiasts’ who would love to see UEFI support for their platform are keen on Windows (and a few want ‘stock 64-bit Debian’ or something like that). Most of them are probably not able to understand that Windows on ARM without proper GPU support is worthless…

> why should their vendors stop with their ‘port and forget’ mentality?

Actually the main driver behind this is cost: you do the job once, pay once and that’s all. With UEFI this can go back more or less to this, the vendor will figure he’s developing a BIOS for his machine then the machine is supposed to be compatible with whatever. Of course the driver porting effort still remains to be done, but let’s be honest, the same IP blocks are regularly found on most SoCs. You generally find designware UARTs and MACs for example. So over time it could possibly allow some vendors not to need shitty BSPs anymore because the alternative will be good enough.

>some vendors not to need shitty BSPs anymore because the alternative will be good enough.

I don’t think it’ll happen. Everyone can make ARM chips so vendors have to add something specific and weird to differentiate their offerings and hopefully keep you locked on their product lines. Usually that’s some hardware or some horrific hacks they’ve made to their kernels to pull off something.

> let’s be honest, the same IP blocks are regularly found on most SoCs. You generally find designware UARTs and MACs for example.

Sure. Neither you nor me ever wants to attach a display to an ARM device or is interested in any of the use cases those things are developed for in the first place (some media stuff running with Android). And as @dgp said: why should different ARM SoC vendors try to be compatible to each other when their only goal is to differentiate from their competitors by improving in one or the other area (superior video engine, codec support and the like).

With their business cases in mind I also don’t think that ‘upstreaming’ the relevant SoC parts is even possible since sometimes the stuff they hack into their BSP kernels does not even exist as a concept in mainline.

A little over 4 years ago I got my first 64-bit SBC (Pine64) and started soon after working on improving DVFS/thermal settings for A64 based on then already outdated Allwinner’s 3.10 BSP kernel. Just recently this DVFS/THS stuff landed in mainline which is simply just a few years too late or in other words: ‘Linux software support is ready once the hardware is obsolete’.

> why should different ARM SoC vendors try to be compatible to each other when their only goal is to differentiate from their competitors

In the x86 world this problem disappeared 25 years ago. And we’re still seeing tons of atom, celeron and whatever small board being sold everywhere, where operating system support out of the box is a critical selling point. Still vendors differentiate themselves based on real features, not on “I have my own unreliable hack in my driver”. At the moment the facial value is placed where it’s not.

> Linux software support is ready once the hardware is obsolete

We all know this, and part of the problem is spending weeks trying to get something barely working by hacking the system everywhere. I remember when I tested my mirabox with Marvell’s BSP, haproxy wouldn’t work because they managed to break the splice() syscall by doing dirty things inside! That was completely nonsense! Vendors should never have to touch these areas, but we all know how they end up doing this: “ah finally it boots, commit now!”. I’m pretty sure that most board vendors would prefer to pay engineers and contractors to bring some value than to figure how to boot, or how to work around BSP issues with unresponsive SoC vendors.

There’s no reason the ARM ecosystem has to be different from anything else. It’s just in this current state because there’s nothing common between all devices. At least boot loaders have mostly converged, so we’ve made some small progress in two decades…

>In the x86 world this problem disappeared 25 years ago.

There are only two real x86 vendors and while they share (most) the same ISA they are not competing by trying to sell basically the same synthesized code from another company (ARM) in different shapes.

The x86 market is comparable to the ARM vendors with implementer licenses that are outdoing each other with how badass they can make their version of ARMv8 and not how much junk they can bolt onto the same Cortex A7 source tree they’ve been strapping junk onto for decades.

The way to have stopped this from happening was for ARM to license their cores as part of a basic SoC that vendors extend, or for the license to come with requirements for how the base system looks to software. Instead the ship sailed and now they’re trying to retroactively fix it.

The irony with all this is that everyone said X86/Intel was the bad guy and ARM was the future because it was made of totally vegan RISC instructions and something to do with a BBC micro blah blah and now we have the horrendous ghetto that is the ARM eco-system that is going to haunt us for generations to come while the big bad Intel are probably the only vendor out that can actually claim their stuff works in mainline from top to bottom.

> Linux software support is ready once the hardware is obsolete

Here you’re speaking about the CPU vendors, not the board vendors. The difference is subtle, but in the x86 world, only CPU support has to be compatible and the rest around it is enumerated (BIOS, ACPI, etc). In the ARM world, most CPU cores are standardized and compatible nowadays. Probably 90% of cores on the market are A53 and are exactly the same. These are not the ones causing trouble. The trouble is what to put into the DTB, what IP blocks are connected around the cores and where they are located. In the x86 world some of them are standard (or backwards compatible, e.g. COM1 at 0x3F8+irq4 emulating an old 8250), and the rest gets enumerated.

I think that with ACPI+UEFI we can reach that point in the ARM world, at least if any machine using standard cores (A53, A72 etc) and standard IP blocks can work out of the box without requiring shitty OS-specific DTBs to be flashed by the user and changed with every kernel upgrade. I’m pretty certain there will still be lots of problems, bad implementations (who ever saw an ACPI table without errors?) and bad support for new IP, but that would already be progress.

> The irony with all this is that everyone said X86/Intel was the bad guy and ARM was the future… Intel are probably the only vendor out that can actually claim their stuff works in mainline

I 100% agree with you on this! I’m still dreaming of the day I can boot any ARM board from a random bootable USB stick. That day I may migrate my file server from my old Atom to my macchiatobin!

>I 100% agree with you on this! I’m still dreaming of the day I can boot any ARM board from a random bootable USB stick.

I think RISC-V (or something else) will have taken over long before that happens. Doesn’t RISC-V mandate a devicetree in ROM or something? If so, that’s a good start.

I would suggest to you, dgp, that it is more yin and yang than that. (In Ancient Chinese philosophy, yin and yang is a concept of dualism, describing how seemingly opposite or contrary forces may actually be complementary, interconnected, and interdependent in the natural world, and how they may give rise to each other as they interrelate to one another.)

On one side, Arm leaves you free to do whatever your hardware licenses allow, which brings constant change, little to no support, and an unknown product lifetime.

On the other side you get a fixed product life and software that is mature but possibly aging and open to attack, and designs can be dated by unforeseen hardware evolutions or drastically changing customer needs.

The PC world also went through this, but a long evolution has mostly weeded out the dead ends, at least in the desktop market.

Apple is an example of support up to a point, but you are told what you can have.

> The irony with all this is that everyone said X86/Intel was the bad guy and ARM was the future… Intel are probably the only vendor out that can actually claim their stuff works in mainline.

And everyone was right. Arm (as in desktop/server class hw) will be mainline soon the way x86 is. Yet, there will always be the small and/or embedded arm SoC vendor who will take a BSP and run with it. The reason we’re not seeing that with x86 is (you guessed it right) that there are no small vendors. It’s a stagnated duopoly with two incumbents. If that had the turmoils of arm’s rich market then god help us.

>And everyone was right. Arm (as in desktop/server class hw) will be mainline soon the way x86 is.

You’re delusional. Before that happens the desktop and server markets will be long since dead. ARM’s market is highly proprietary locked-down devices that only the vendor can build an OS for, and that’s where everything is going because the money in generic stuff is going to dry up.

> ARMs market is highly proprietary locked down devices that only the vendor can build an OS for

RedHat and Fedora (booting off UEFI) build arm devices? And the vendor of the device is who, exactly? Asus, Acer, Samsung et al ship win10 and chromeos devices without Asus or Samsung SoCs inside. Hey, look, it’s just like PC vendors shipping intel/amd-based devices!

YAWN!

Discord and Telegram seem to be gaining traction for developers’ discussions, as I’ve had to join such services several times to get more details about products/projects.

I find it absolutely pathetic that developers have to hide behind such secret places, especially for opensource development. They’re probably ashamed to say stupidities in public and look dumb, but there it is even worse, their work remains totally confidential and nobody can help nor improve upon their work. I really fail to see their motivation to do that!

Discord I can understand the complaint but why are we whining about Telegram? Isn’t it open source as well? Why complain that an open source app is being used for open source development?

I don’t care how the discussion tool itself is developed. What matters to me is that I cannot read messages exchanged there without creating an account on yet-another-platform! THIS is utter nonsense. Opensource development happening behind closed doors is not exactly what I would call opensource. How do you share links to discussions in commit messages, how do you bring other developers to the discussion? Let’s put it simply: when you do it in public, 7 billion people have access to the discussion to get some background about a design choice. When you do it on such a crap platform, only a few hundred people have access to it. This makes a huge difference in terms of expected quality and reliability.

Not saying you’re wrong, but if you look at how much nonsense happens in the “open source community” now, i.e. people that have no part in a project trying to get people ejected from a project for thought crimes, entitled orbiters that think they have some right to tell actual contributors what they should be doing with their time, the incredibly twitter-vocal crazies and so on and so on, you’ll have some empathy for people that say you can have their code but you don’t get a direct line into their work space to stink it up with whatever the voices in your head are saying that day.

Actually scratch that, I do think you’re wrong. Unless you’re paying their salary, you don’t really have any right to complain about how they decide to do their work. It would be nice if it was all out in public, but you’ve done exactly what puts people off being transparent like that with strangers: assumed you know best, and accused them of having something to hide and of being incompetent, when it’s more likely than not that they just want to do their work in peace.

They’re absolutely allowed to do their work secretly and may even be paid for this, which is still fine. But just don’t call this opensource. We all know that design and discussions are way more important than the final code, which is just one possible outcome of many discussions. And actually thousands of kernel developers, as well as developers on many other opensource projects do not seem to have any problem with sharing their work on public places like mailing lists or issue trackers, it’s even the opposite, they’re constantly looking for better tools to improve indexing and better find past discussions (have a look at lore.kernel.org for example).

I can accept that one has to create an account somewhere to interact. But not just to read.

>But just don’t call this opensource.

Opensource means you get the source, not that you get to backseat drive the development process. You get the source so that if you think you can do better you can go and do that without having to beg the original developers.

>We all know that design and discussions

Doesn’t matter. There is no opensource requirement to make all of that stuff public.

>And actually thousands of kernel developer

Thousands of kernel developers now work for commercial entities and do a huge amount of their work in private and only the last mile makes it to the LKML if it ever is released to the public.

And there are “secret” kernel lists where things like how to handle the whole CoC situation are discussed.

> Thousands of kernel developers now work for commercial entities and do a huge amount of their work in private and only the last mile makes it to the LKML if it ever is released to the public.

Actually these typically are the patch sets that get rejected by maintainers asking to redesign the stuff totally differently and restart from the white board. Look for example at the hot patching mechanisms, Red Hat implemented one, SuSE another one, both pushing to get theirs merged, in the end none was taken and they were forced to work together on a third one that got merged. You can work in your garage on your own driver, nobody will complain if you merge it as-is. But once it’s basic infrastructure supposed to be generic, there’s little hope to change anything without design discussions.

So open source works just like I said. People work in vacuums and you see just the tip of what is actually going on, and sometimes it’s a waste of work. I wouldn’t be surprised if even those vendors you mentioned work together in secret on stuff… and you still have no right to tell them to change how they work unless you are paying their bills.

It’s not written in any of the licenses that your workflow also needs to be open, and if it was, open source would be totally unusable except for hobby projects.

Can Raspberry Pi 4 SD card images (non-squashed) be converted to ISO?

And can they be set up on an external USB key or hard drive while keeping SD card boot, so that it recognizes the USB hard drive or key like bootberry, and also self-expands from the original Pi image swap file?

Regards

Paul