Last month I received an NVIDIA Jetson Nano developer kit together with a 52Pi ICE Tower cooling fan, and the main goal was to compare the performance of the board with the stock heatsink against the 52Pi heatsink + fan combo.

However, the stock heatsink does a very good job of cooling the board, and typical CPU stress tests do not make the processor throttle at all. So I had to stress the GPU as well, and since it takes some effort to set everything up, I’ll report my experience configuring the board and running AI test programs, including object detection on an RTSP video stream.

Setting up NVIDIA Jetson Nano Board

Preparing the board is very much like what you’d do with other SBCs such as the Raspberry Pi, and NVIDIA has a nicely put together getting started guide, so I won’t go into too many details here. To summarize:

- Download the latest firmware image (nv-jetson-nano-sd-card-image-r32.2.3.zip at the time of the review)

- Flash it with balenaEtcher to a MicroSD card, since the Jetson Nano developer kit does not have built-in storage.

- Insert the MicroSD card in the slot underneath the module, connect HDMI, keyboard, and mouse, and finally power up the board.

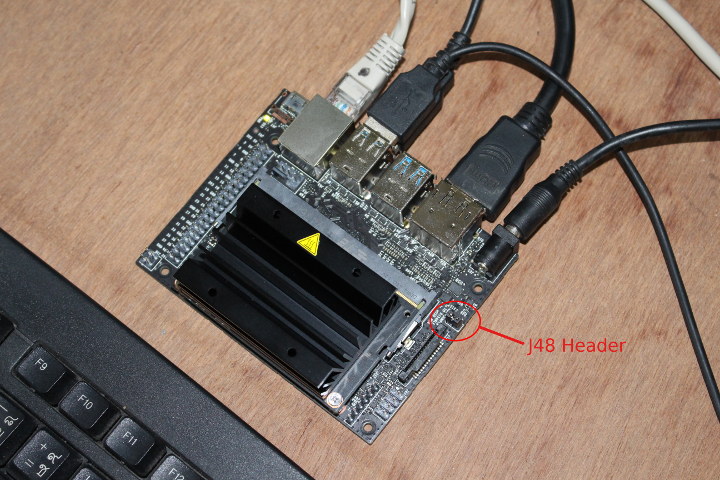

By default, the board expects to be powered by a 5V power supply through its micro USB port, but to avoid any potential power issues, I connected a 5V/3A power supply to the DC jack and fitted a jumper on the J48 header to switch the power source.

It quickly booted to Ubuntu. After going through the setup wizard to accept the user agreement, select the language, keyboard layout and timezone, and set up a user, the system performed some configuration, and within a couple of minutes we were good to go.

Jetson Nano System Info & NVIDIA Tools

Here’s some system information after updating Ubuntu with dist-upgrade:

```
cnxsoft@Jetson-Nano:~$ uname -a
Linux Jetson-Nano 4.9.140-tegra #1 SMP PREEMPT Tue Nov 5 13:43:53 PST 2019 aarch64 aarch64 aarch64 GNU/Linux
cnxsoft@Jetson-Nano:~$ cat /etc/lsb-release
DISTRIB_ID=Ubuntu
DISTRIB_RELEASE=18.04
DISTRIB_CODENAME=bionic
DISTRIB_DESCRIPTION="Ubuntu 18.04.3 LTS"
cnxsoft@Jetson-Nano:~$ df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/mmcblk0p1   30G   10G   18G  36% /
none            1.7G     0  1.7G   0% /dev
tmpfs           2.0G  4.0K  2.0G   1% /dev/shm
tmpfs           2.0G   30M  2.0G   2% /run
tmpfs           5.0M  4.0K  5.0M   1% /run/lock
tmpfs           2.0G     0  2.0G   0% /sys/fs/cgroup
tmpfs           396M   20K  396M   1% /run/user/120
tmpfs           396M  136K  396M   1% /run/user/1000
cnxsoft@Jetson-Nano:~$ free -h
              total        used        free      shared  buff/cache   available
Mem:           3.9G        1.7G        449M         49M        1.8G        2.0G
Swap:          1.9G        904K        1.9G
cnxsoft@Jetson-Nano:~$ cat /proc/cpuinfo
processor       : 0
model name      : ARMv8 Processor rev 1 (v8l)
BogoMIPS        : 38.40
Features        : fp asimd evtstrm aes pmull sha1 sha2 crc32
CPU implementer : 0x41
CPU architecture: 8
CPU variant     : 0x1
CPU part        : 0xd07
CPU revision    : 1

processor       : 1
model name      : ARMv8 Processor rev 1 (v8l)
BogoMIPS        : 38.40
Features        : fp asimd evtstrm aes pmull sha1 sha2 crc32
CPU implementer : 0x41
CPU architecture: 8
CPU variant     : 0x1
CPU part        : 0xd07
CPU revision    : 1
```

Loaded modules:

```
lsmod
Module                  Size  Used by
bnep                   16562  2
zram                   26166  4
overlay                48691  0
fuse                  103841  5
nvgpu                1569917  38
bluedroid_pm           13912  0
ip_tables              19441  0
x_tables               28951  1 ip_tables
```

The GPIOs appear to be properly configured:

```
cat /sys/kernel/debug/gpio
cat: /sys/kernel/debug/gpio: Permission denied
cnxsoft@Jetson-Nano:~$ sudo cat /sys/kernel/debug/gpio
[sudo] password for cnxsoft:
gpiochip0: GPIOs 0-255, parent: platform/6000d000.gpio, tegra-gpio:
 gpio-0 ( )
 gpio-1 ( )
 gpio-2 ( |pcie_wake ) in hi
 gpio-3 ( )
 gpio-4 ( )
 gpio-5 ( )
 gpio-6 ( |vdd-usb-hub-en ) out hi
 gpio-7 ( )
 gpio-8 ( )
 gpio-9 ( )
 gpio-10 ( )
 gpio-11 ( )
 gpio-12 (SPI1_MOSI )
 gpio-13 (SPI1_MISO )
 gpio-14 (SPI1_SCK )
 gpio-15 (SPI1_CS0 )
 gpio-16 (SPI0_MOSI )
 gpio-17 (SPI0_MISO )
 gpio-18 (SPI0_SCK )
 gpio-19 (SPI0_CS0 )
 gpio-20 (SPI0_CS1 )
 gpio-21 ( )
 gpio-22 ( )
 gpio-23 ( )
 gpio-24 ( )
 gpio-25 ( )
 gpio-26 ( )
 gpio-27 ( )
 gpio-28 ( )
 gpio-29 ( )
 gpio-30 ( )
 gpio-31 ( )
 gpio-32 ( )
 gpio-33 ( )
 gpio-34 ( )
 gpio-35 ( )
 gpio-36 ( )
 gpio-37 ( )
 gpio-38 (GPIO13 )
 gpio-39 ( )
 gpio-40 ( )
 gpio-41 ( )
 gpio-42 ( )
 gpio-43 ( )
 gpio-44 ( )
 gpio-45 ( )
 gpio-46 ( )
 gpio-47 ( )
 gpio-48 ( )
 gpio-49 ( )
 gpio-50 (UART1_RTS )
 gpio-51 (UART1_CTS )
 gpio-52 ( )
 gpio-53 ( )
 gpio-54 ( )
 gpio-55 ( )
 gpio-56 ( )
 gpio-57 ( )
 gpio-58 ( )
 gpio-59 ( )
 gpio-60 ( )
 gpio-61 ( )
 gpio-62 ( )
 gpio-63 ( )
 gpio-64 ( )
 gpio-65 ( )
 gpio-66 ( )
 gpio-67 ( )
 gpio-68 ( )
 gpio-69 ( )
 gpio-70 ( )
 gpio-71 ( )
 gpio-72 ( )
 gpio-73 ( )
 gpio-74 ( )
 gpio-75 ( )
 gpio-76 (I2S0_FS )
 gpio-77 (I2S0_DIN )
 gpio-78 (I2S0_DOUT )
 gpio-79 (I2S0_SCLK )
 gpio-80 ( )
 gpio-81 ( )
 gpio-82 ( )
 gpio-83 ( )
 gpio-84 ( )
 gpio-85 ( )
 gpio-86 ( )
 gpio-87 ( )
 gpio-88 ( )
 gpio-89 ( )
 gpio-90 ( )
 gpio-91 ( )
 gpio-92 ( )
 gpio-93 ( )
 gpio-94 ( )
 gpio-95 ( )
 gpio-96 ( )
 gpio-97 ( )
 gpio-98 ( )
 gpio-99 ( )
 gpio-100 ( )
 gpio-101 ( )
 gpio-102 ( )
 gpio-103 ( )
 gpio-104 ( )
 gpio-105 ( )
 gpio-106 ( )
 gpio-107 ( )
 gpio-108 ( )
 gpio-109 ( )
 gpio-110 ( )
 gpio-111 ( )
 gpio-112 ( )
 gpio-113 ( )
 gpio-114 ( )
 gpio-115 ( )
 gpio-116 ( )
 gpio-117 ( )
 gpio-118 ( )
 gpio-119 ( )
 gpio-120 ( )
 gpio-121 ( )
 gpio-122 ( )
 gpio-123 ( )
 gpio-124 ( )
 gpio-125 ( )
 gpio-126 ( )
 gpio-127 ( )
 gpio-128 ( )
 gpio-129 ( )
 gpio-130 ( )
 gpio-131 ( )
 gpio-132 ( )
 gpio-133 ( )
 gpio-134 ( )
 gpio-135 ( )
 gpio-136 ( )
 gpio-137 ( )
 gpio-138 ( )
 gpio-139 ( )
 gpio-140 ( )
 gpio-141 ( )
 gpio-142 ( )
 gpio-143 ( )
 gpio-144 ( )
 gpio-145 ( )
 gpio-146 ( )
 gpio-147 ( )
 gpio-148 ( )
 gpio-149 (GPIO01 )
 gpio-150 ( )
 gpio-151 ( |cam_reset_gpio ) out lo
 gpio-152 ( |camera-control-outpu) out lo
 gpio-153 ( )
 gpio-154 ( )
 gpio-155 ( )
 gpio-156 ( )
 gpio-157 ( )
 gpio-158 ( )
 gpio-159 ( )
 gpio-160 ( )
 gpio-161 ( )
 gpio-162 ( )
 gpio-163 ( )
 gpio-164 ( )
 gpio-165 ( )
 gpio-166 ( )
 gpio-167 ( )
 gpio-168 (GPIO07 )
 gpio-169 ( )
 gpio-170 ( )
 gpio-171 ( )
 gpio-172 ( )
 gpio-173 ( )
 gpio-174 ( )
 gpio-175 ( )
 gpio-176 ( )
 gpio-177 ( )
 gpio-178 ( )
 gpio-179 ( )
 gpio-180 ( )
 gpio-181 ( )
 gpio-182 ( )
 gpio-183 ( )
 gpio-184 ( )
 gpio-185 ( )
 gpio-186 ( )
 gpio-187 ( |? ) out hi
 gpio-188 ( )
 gpio-189 ( |Power ) in hi IRQ
 gpio-190 ( |Forcerecovery ) in hi IRQ
 gpio-191 ( )
 gpio-192 ( )
 gpio-193 ( )
 gpio-194 (GPIO12 )
 gpio-195 ( )
 gpio-196 ( )
 gpio-197 ( )
 gpio-198 ( )
 gpio-199 ( )
 gpio-200 (GPIO11 )
 gpio-201 ( |cd ) in lo IRQ
 gpio-202 ( |pwm-fan-tach ) in hi IRQ
 gpio-203 ( |vdd-3v3-sd ) out hi
 gpio-204 ( )
 gpio-205 ( )
 gpio-206 ( )
 gpio-207 ( )
 gpio-208 ( )
 gpio-209 ( )
 gpio-210 ( )
 gpio-211 ( )
 gpio-212 ( )
 gpio-213 ( )
 gpio-214 ( )
 gpio-215 ( )
 gpio-216 (GPIO09 )
 gpio-217 ( )
 gpio-218 ( )
 gpio-219 ( )
 gpio-220 ( )
 gpio-221 ( )
 gpio-222 ( )
 gpio-223 ( )
 gpio-224 ( )
 gpio-225 ( |hdmi2.0_hpd ) in lo IRQ
 gpio-226 ( )
 gpio-227 ( )
 gpio-228 ( |extcon:extcon@1 ) in hi IRQ
 gpio-229 ( )
 gpio-230 ( )
 gpio-231 ( )
 gpio-232 (SPI1_CS1 )
 gpio-233 ( )
 gpio-234 ( )
 gpio-235 ( )
 gpio-236 ( )
 gpio-237 ( )
 gpio-238 ( )
 gpio-239 ( )

gpiochip1: GPIOs 504-511, parent: platform/max77620-gpio, max77620-gpio, can sleep:
 gpio-505 ( |spmic-default-output) out hi
 gpio-507 ( |vdd-3v3-sys ) out hi
 gpio-510 ( |enable ) out lo
 gpio-511 ( |avdd-io-edp-1v05 ) out lo
```

The NVIDIA Power Model tool (nvpmodel) allows us to check the power mode:

```
sudo nvpmodel -q
NVPM WARN: fan mode is not set!
NV Power Mode: MAXN
0
```

MAXN is the 10W power mode, and we can switch to the 5W mode and check it as follows:

```
sudo nvpmodel -m 1
sudo nvpmodel -q
NVPM WARN: fan mode is not set!
NV Power Mode: 5W
1
```
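When benchmarking, it can help to confirm the current mode from a script before starting a run. The following is a minimal bash sketch that assumes the `NV Power Mode: <name>` line format shown above; `power_mode_name` is a hypothetical helper, not part of NVIDIA’s tools.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the power mode name from `nvpmodel -q` output.
# Assumes the "NV Power Mode: <name>" line format seen on this L4T release.
power_mode_name() {
  # -n suppresses default output; the p flag prints only lines where the
  # "NV Power Mode: " prefix was found and stripped
  sed -n 's/^NV Power Mode: //p'
}

# Usage on the board (nvpmodel needs root). Mode 0 is MAXN per the output above:
#   if [ "$(sudo nvpmodel -q | power_mode_name)" != "MAXN" ]; then
#     sudo nvpmodel -m 0
#   fi
```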

NVIDIA also provides the tegrastats utility to report real-time utilization, temperature, and power consumption of various parts of the processor:

```
tegrastats
RAM 1802/3956MB (lfb 106x4MB) SWAP 0/1978MB (cached 0MB) CPU [12%@102,6%@102,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35C PMIC@100C GPU@36C AO@44.5C thermal@35.5C POM_5V_IN 1121/1121 POM_5V_GPU 0/0 POM_5V_CPU 124/124
RAM 1802/3956MB (lfb 106x4MB) SWAP 0/1978MB (cached 0MB) CPU [14%@102,4%@102,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35.5C PMIC@100C GPU@36C AO@45C thermal@35.5C POM_5V_IN 1079/1100 POM_5V_GPU 0/0 POM_5V_CPU 124/124
RAM 1802/3956MB (lfb 105x4MB) SWAP 0/1978MB (cached 0MB) CPU [17%@307,6%@307,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35C PMIC@100C GPU@36C AO@44.5C thermal@35.75C POM_5V_IN 1121/1107 POM_5V_GPU 0/0 POM_5V_CPU 165/137
RAM 1802/3956MB (lfb 105x4MB) SWAP 0/1978MB (cached 0MB) CPU [14%@102,5%@102,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35C PMIC@100C GPU@36C AO@45C thermal@35.5C POM_5V_IN 1121/1110 POM_5V_GPU 0/0 POM_5V_CPU 166/144
RAM 1802/3956MB (lfb 105x4MB) SWAP 0/1978MB (cached 0MB) CPU [14%@102,4%@102,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35C PMIC@100C GPU@36C AO@44.5C thermal@35.5C POM_5V_IN 1121/1112 POM_5V_GPU 0/0 POM_5V_CPU 165/148
RAM 1802/3956MB (lfb 105x4MB) SWAP 0/1978MB (cached 0MB) CPU [15%@204,5%@204,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35C PMIC@100C GPU@36C AO@45C thermal@35.5C POM_5V_IN 1121/1114 POM_5V_GPU 0/0 POM_5V_CPU 166/151
RAM 1802/3956MB (lfb 105x4MB) SWAP 0/1978MB (cached 0MB) CPU [14%@204,3%@204,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35C PMIC@100C GPU@36C AO@45C thermal@35.5C POM_5V_IN 1161/1120 POM_5V_GPU 0/0 POM_5V_CPU 165/153
RAM 1802/3956MB (lfb 105x4MB) SWAP 0/1978MB (cached 0MB) CPU [16%@102,6%@102,off,off] EMC_FREQ 0% GR3D_FREQ 0% PLL@34C CPU@35C PMIC@100C GPU@36C AO@45C thermal@35.5C POM_5V_IN 1163/1126 POM_5V_GPU 0/0 POM_5V_CPU 165/155
```

It’s rather cryptic, so you may want to check the documentation. For example, at idle as shown above, two cores are active at frequencies as low as 102 MHz while the other two are off, the CPU temperature is around 35°C, the GPU is basically unused (GR3D_FREQ 0%), and the board consumes about 1.1 watts (POM_5V_IN is reported in milliwatts as current/average).
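To make tegrastats output easier to follow during a stress test, a few fields can be pulled out with standard tools. This is a rough bash sketch that assumes the field names shown above (`CPU@<temp>C`, `GR3D_FREQ <load>%`, and `POM_5V_IN` in mW as current/average); other Jetson modules or L4T releases may label fields differently, and `summarize_tegrastats` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarize one tegrastats line as CPU temperature,
# GPU load and board input power. Field names are assumed from the output above.
summarize_tegrastats() {
  local line="$1" temp gpu mw
  temp=$(sed -nE 's/.*CPU@([0-9.]+)C.*/\1/p' <<<"$line")        # CPU temperature in °C
  gpu=$(sed -nE 's/.*GR3D_FREQ ([0-9]+)%.*/\1/p' <<<"$line")    # GPU (GR3D) load in %
  mw=$(sed -nE 's|.*POM_5V_IN ([0-9]+)/.*|\1|p' <<<"$line")     # current input power in mW
  awk -v t="$temp" -v g="$gpu" -v mw="$mw" \
    'BEGIN { printf "CPU %sC, GPU %s%%, board %.2f W\n", t, g, mw / 1000 }'
}

# Usage on the board, one summary per tegrastats sample:
#   tegrastats | while read -r line; do summarize_tegrastats "$line"; done
```

For the first idle sample above, this prints `CPU 35C, GPU 0%, board 1.12 W`.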

AI Hello World

The best way to get started with AI inference is to use the Hello AI World samples, which can be installed as follows:

```
sudo apt update
sudo apt install git cmake libpython3-dev python3-numpy dialog
git clone --recursive https://github.com/dusty-nv/jetson-inference
cd jetson-inference
mkdir build
cd build
cmake ../
```

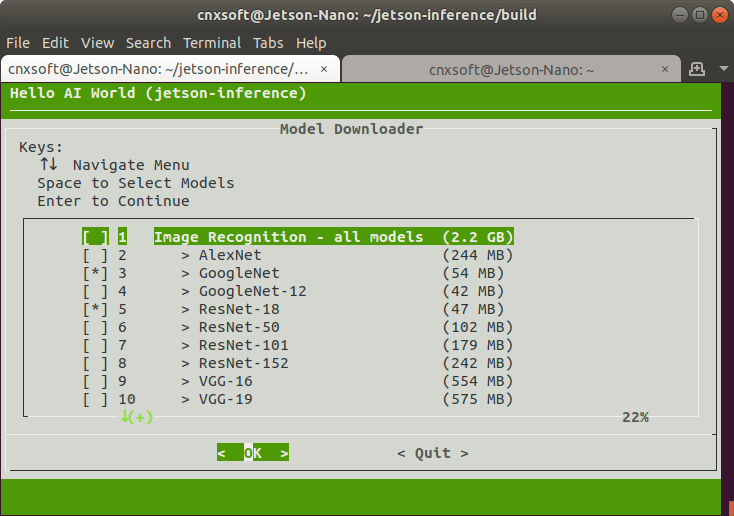

The last command brings up the Hello AI World dialog, where you select the models you’d like to use. I just went with the default ones.

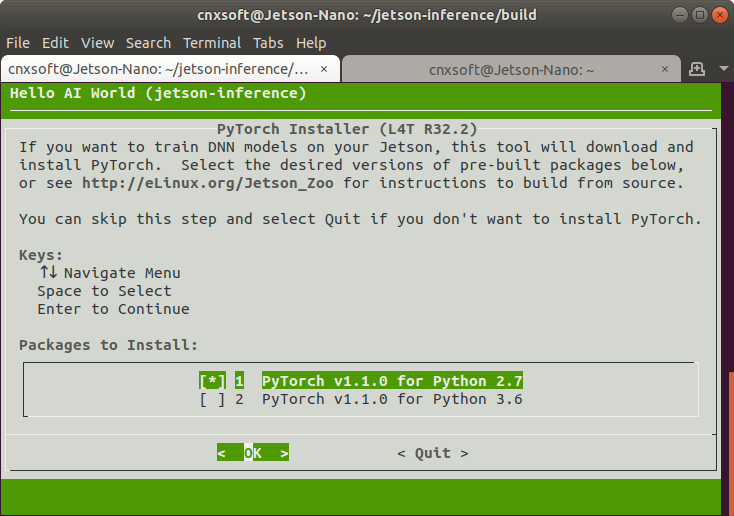

Once the selected models have been downloaded, you’ll be asked whether you want to install PyTorch:

If you’re only going to play with pre-trained models, there’s no need to install it, but I selected PyTorch v1.1.0 for Python 2.7 in case I have time to play with training later on.


We can now build the sample:

```
make
sudo make install
sudo ldconfig
```

Then test image classification on a sample image with imageNet and the GoogleNet model:

```
cd ~/jetson-inference/build/aarch64/bin
./imagenet-console --network=googlenet images/orange_0.jpg output_0.jpg
```

Here’s the output of the last command:

```
imageNet -- loading classification network model from:
         -- prototxt     networks/googlenet.prototxt
         -- model        networks/bvlc_googlenet.caffemodel
         -- class_labels networks/ilsvrc12_synset_words.txt
         -- input_blob   'data'
         -- output_blob  'prob'
         -- batch_size   1

[TRT] TensorRT version 5.1.6
[TRT] loading NVIDIA plugins...
[TRT] Plugin Creator registration succeeded - GridAnchor_TRT
[TRT] Plugin Creator registration succeeded - NMS_TRT
[TRT] Plugin Creator registration succeeded - Reorg_TRT
[TRT] Plugin Creator registration succeeded - Region_TRT
[TRT] Plugin Creator registration succeeded - Clip_TRT
[TRT] Plugin Creator registration succeeded - LReLU_TRT
[TRT] Plugin Creator registration succeeded - PriorBox_TRT
[TRT] Plugin Creator registration succeeded - Normalize_TRT
[TRT] Plugin Creator registration succeeded - RPROI_TRT
[TRT] Plugin Creator registration succeeded - BatchedNMS_TRT
[TRT] completed loading NVIDIA plugins.
[TRT] detected model format - caffe (extension '.caffemodel')
[TRT] desired precision specified for GPU: FASTEST
[TRT] requested fasted precision for device GPU without providing valid calibrator, disabling INT8
[TRT] native precisions detected for GPU: FP32, FP16
[TRT] selecting fastest native precision for GPU: FP16
[TRT] attempting to open engine cache file networks/bvlc_googlenet.caffemodel.1.1.GPU.FP16.engine
[TRT] loading network profile from engine cache... networks/bvlc_googlenet.caffemodel.1.1.GPU.FP16.engine
[TRT] device GPU, networks/bvlc_googlenet.caffemodel loaded
[TRT] device GPU, CUDA engine context initialized with 2 bindings
[TRT] binding -- index 0
               -- name 'data'
               -- type FP32
               -- in/out INPUT
               -- # dims 3
               -- dim #0 3 (CHANNEL)
               -- dim #1 224 (SPATIAL)
               -- dim #2 224 (SPATIAL)
[TRT] binding -- index 1
               -- name 'prob'
               -- type FP32
               -- in/out OUTPUT
               -- # dims 3
               -- dim #0 1000 (CHANNEL)
               -- dim #1 1 (SPATIAL)
               -- dim #2 1 (SPATIAL)
[TRT] binding to input 0 data binding index: 0
[TRT] binding to input 0 data dims (b=1 c=3 h=224 w=224) size=602112
[TRT] binding to output 0 prob binding index: 1
[TRT] binding to output 0 prob dims (b=1 c=1000 h=1 w=1) size=4000
device GPU, networks/bvlc_googlenet.caffemodel initialized.
[TRT] networks/bvlc_googlenet.caffemodel loaded
imageNet -- loaded 1000 class info entries
networks/bvlc_googlenet.caffemodel initialized.
[image] loaded 'images/orange_0.jpg' (1920 x 1920, 3 channels)
class 0950 - 0.978582 (orange)
class 0951 - 0.021285 (lemon)
imagenet-console: 'images/orange_0.jpg' -> 97.85821% class #950 (orange)

[TRT] ------------------------------------------------
[TRT] Timing Report networks/bvlc_googlenet.caffemodel
[TRT] ------------------------------------------------
[TRT] Pre-Process   CPU  0.08693ms  CUDA  0.87870ms
[TRT] Network       CPU 64.90576ms  CUDA 63.73557ms
[TRT] Post-Process  CPU  0.25339ms  CUDA  0.25224ms
[TRT] Total         CPU 65.24608ms  CUDA 64.86651ms
[TRT] ------------------------------------------------

[TRT] note -- when processing a single image, run 'sudo jetson_clocks' before to disable DVFS for more accurate profiling/timing measurements

imagenet-console: attempting to save output image to 'output_0.jpg'
imagenet-console: completed saving 'output_0.jpg'

imagenet-console: shutting down...
imagenet-console: shutdown complete
```

It also generated output_0.jpg with the inference info “97.858 orange” overlaid on top of the image.

Tiny YOLO-v3

The imageNet sample processes a single image and completes quickly, so I also tried the Tiny YOLOv3 sample, which runs inference on 500 images, following the instructions in a forum post:

```
sudo apt install libgstreamer-plugins-base1.0-dev libgstreamer1.0-dev libgflags-dev
cd ~
git clone -b restructure https://github.com/NVIDIA-AI-IOT/deepstream_reference_apps
cd ~/deepstream_reference_apps/yolo
sudo sh prebuild.sh
cd apps/trt-yolo
mkdir build && cd build
cmake -D CMAKE_BUILD_TYPE=Release ..
make
sudo make install
```

After building the sample, there’s some more work to do. First, I downloaded five images with typical objects from the web:

```
ls ~/Pictures/
bicycle.jpg  bus.jpg  car.jpg  laptop.jpg  raspberry-pi-4.jpg
```

Then I edited ~/deepstream_reference_apps/yolo/data/test_images.txt to repeat the five lines below 100 times, for a total of 500 entries:

```
/home/cnxsoft/Pictures/laptop.jpg
/home/cnxsoft/Pictures/raspberry-pi-4.jpg
/home/cnxsoft/Pictures/bicycle.jpg
/home/cnxsoft/Pictures/bus.jpg
/home/cnxsoft/Pictures/car.jpg
```

Finally, I modified ~/deepstream_reference_apps/yolo/config/yolov3-tiny.txt to use kHALF (FP16) precision:

```
--precision=kHALF
```
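The two manual edits above can also be scripted. Here is a minimal bash sketch; `make_test_list` and `set_precision` are hypothetical helper names, the image names come from the list above, and the default `$HOME/Pictures` directory is an assumption (pass `/home/cnxsoft/Pictures` to reproduce the exact list). The `sed -i` form used here is the GNU one shipped with Ubuntu.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the five image paths 100 times (500 lines).
make_test_list() {
  local out="$1" dir="${2:-$HOME/Pictures}" i img
  local images=(laptop.jpg raspberry-pi-4.jpg bicycle.jpg bus.jpg car.jpg)
  : > "$out"                          # start from an empty file
  for ((i = 0; i < 100; i++)); do
    for img in "${images[@]}"; do
      printf '%s/%s\n' "$dir" "$img"
    done
  done >> "$out"
}

# Hypothetical helper: replace the --precision flag in a trt-yolo-app flag
# file, or append it if the file does not set one yet.
set_precision() {
  local cfg="$1" prec="$2"
  if grep -q '^--precision=' "$cfg"; then
    sed -i "s/^--precision=.*/--precision=${prec}/" "$cfg"
  else
    printf -- '--precision=%s\n' "$prec" >> "$cfg"
  fi
}

# Usage:
#   cd ~/deepstream_reference_apps/yolo
#   make_test_list data/test_images.txt /home/cnxsoft/Pictures
#   set_precision config/yolov3-tiny.txt kHALF
```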

We can now run Tiny YOLO inference:

```
cd ~/deepstream_reference_apps/yolo
sudo trt-yolo-app --flagfile=config/yolov3-tiny.txt
```

Here’s the output (not from the first run, since the TensorRT engine had already been generated):

```
sudo trt-yolo-app --flagfile=config/yolov3-tiny.txt
[sudo] password for cnxsoft:
Loading pre-trained weights...
Loading complete!
Total Number of weights read : 8858734
      layer               inp_size            out_size       weightPtr
(1)   conv-bn-leaky     3 x 416 x 416    16 x 416 x 416    496
(2)   maxpool          16 x 416 x 416    16 x 208 x 208    496
(3)   conv-bn-leaky    16 x 208 x 208    32 x 208 x 208    5232
(4)   maxpool          32 x 208 x 208    32 x 104 x 104    5232
(5)   conv-bn-leaky    32 x 104 x 104    64 x 104 x 104    23920
(6)   maxpool          64 x 104 x 104    64 x  52 x  52    23920
(7)   conv-bn-leaky    64 x  52 x  52   128 x  52 x  52    98160
(8)   maxpool         128 x  52 x  52   128 x  26 x  26    98160
(9)   conv-bn-leaky   128 x  26 x  26   256 x  26 x  26    394096
(10)  maxpool         256 x  26 x  26   256 x  13 x  13    394096
(11)  conv-bn-leaky   256 x  13 x  13   512 x  13 x  13    1575792
(12)  maxpool         512 x  13 x  13   512 x  13 x  13    1575792
(13)  conv-bn-leaky   512 x  13 x  13  1024 x  13 x  13    6298480
(14)  conv-bn-leaky  1024 x  13 x  13   256 x  13 x  13    6561648
(15)  conv-bn-leaky   256 x  13 x  13   512 x  13 x  13    7743344
(16)  conv-linear     512 x  13 x  13   255 x  13 x  13    7874159
(17)  yolo            255 x  13 x  13   255 x  13 x  13    7874159
(18)  route                  -          256 x  13 x  13    7874159
(19)  conv-bn-leaky   256 x  13 x  13   128 x  13 x  13    7907439
(20)  upsample        128 x  13 x  13   128 x  26 x  26        -
(21)  route                  -          384 x  26 x  26    7907439
(22)  conv-bn-leaky   384 x  26 x  26   256 x  26 x  26    8793199
(23)  conv-linear     256 x  26 x  26   255 x  26 x  26    8858734
(24)  yolo            255 x  26 x  26   255 x  26 x  26    8858734
Output blob names :
yolo_17
yolo_24
Using previously generated plan file located at data/yolov3-tiny-kHALF-kGPU-batch1.engine
Loading TRT Engine...
Loading Complete!
Total number of images used for inference : 500
[======================================================================] 100 %
Network Type : yolov3-tiny
Precision : kHALF
Batch Size : 1
Inference time per image : 30.7016 ms
```

That sample is only meant for benchmarking, and it took 30.70 ms per inference, which corresponds to about 32.6 fps (1000 / 30.70). The sample neither reports the detection results nor shows anything on the display.
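The fps figure is simply 1000 divided by the per-image time in milliseconds. As a small bash sketch (`ms_to_fps` is a hypothetical name), it can be computed straight from the app’s summary line, assuming that line has the `Inference time per image : <value> ms` format shown above and is printed to stdout:

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert trt-yolo-app's per-image inference time into fps.
ms_to_fps() {
  # Split on ": ", strip the " ms" suffix from the value, then compute 1000 / ms
  awk -F': ' '/Inference time per image/ { sub(/ ms.*/, "", $2); printf "%.1f fps\n", 1000 / $2 }'
}

# Usage:
#   sudo trt-yolo-app --flagfile=config/yolov3-tiny.txt | ms_to_fps
```

For the 30.7016 ms result above, this prints `32.6 fps`.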

Detecting Objects from an RTSP Stream

In theory, I could loop the Tiny YOLOv3 sample for stress testing, but the best way to stress the GPU continuously is to perform inference on a video stream. The detectnet-camera sample, part of Jetson Inference (aka AI Hello World), can do the job as long as you have a compatible USB camera.

I have two older webcams that I bought 10 to 15 years ago. I never managed to make the Logitech QuickCam work in Linux or Android, but I could previously use the smaller one, called “Venus 2.0”, in both operating systems. This time, however, I could not make it work on either the Jetson Nano or my Ubuntu 18.04 laptop. If you want a MIPI or USB camera that is sure to work with the board, check out the list of compatible cameras.

But then I thought… hey, I have one camera that works: the webcam on my laptop! Of course, I can’t just connect it to the NVIDIA board, so instead I started an H.264 RTSP stream with VLC on the laptop:

```
sudo apt install vlc
cvlc v4l2:///dev/video0 --sout '#transcode{vcodec=h264,vb=800,acodec=none}:rtp{sdp=rtsp://:8554/}'
```

cvlc is VLC’s command-line interface. I could also play the stream on the laptop itself:

```
cvlc rtsp://192.168.1.4:8554/
```

I had a lag of about 4 seconds. I thought setting the network caching option to 200 ms might help, but the video became choppy instead, so the problem lies elsewhere, and I did not have time to investigate it for this review. The important part is that the stream worked.

When I tried to play the stream on the Jetson Nano with VLC, it would just segfault. But let’s not focus on that, and instead see if we can make the detectnet-camera sample work with our RTSP stream.


Somebody had already done that a few months earlier, which helped a lot. We need to edit the source file ~/jetson-inference/utils/camera/gstCamera.cpp in two places:

- Disable CSI camera detection in the gstCamera::ConvertRGBA function:

```cpp
#if 0
	if( csiCamera() )
#else
	if (1)	// force this branch for the RTSP stream as well
#endif
	{
		// MIPI CSI camera is NV12
		if( CUDA_FAILED(cudaNV12ToRGBA32((uint8_t*)input, (float4*)mRGBA[mLatestRGBA], mWidth, mHeight)) )
			return false;
```

- Hard-code the RTSP stream in the gstCamera::buildLaunchStr function:

```cpp
	else
	{
#if 0	// original V4L2 (USB camera) pipeline, disabled
		ss << "v4l2src device=" << mCameraStr << " ! ";
		ss << "video/x-raw, width=(int)" << mWidth << ", height=(int)" << mHeight << ", ";

#if NV_TENSORRT_MAJOR >= 5
		ss << "format=YUY2 ! videoconvert ! video/x-raw, format=RGB ! videoconvert !";
#else
		ss << "format=RGB ! videoconvert ! video/x-raw, format=RGB ! videoconvert !";
#endif

		ss << "appsink name=mysink";
#endif
		mSource = GST_SOURCE_V4L2;
	}

#if 1	// hard-coded RTSP pipeline: H.264 depayload, parse, OMX hardware decode
	ss << "rtspsrc location=rtsp://192.168.1.4:8554/ ! rtph264depay ! h264parse ! omxh264dec ! appsink name=mysink";
#endif

	mLaunchStr = ss.str();
```
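Before rebuilding, it may be worth checking that the stream decodes with the same GStreamer elements outside of detectnet-camera. This is a small bash sketch; `rtsp_pipeline` is a hypothetical helper that only rebuilds the hard-coded pipeline string with a configurable sink (defaulting to `fakesink`), and it assumes `gst-launch-1.0` is available on the board.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the RTSP/H.264 pipeline used in gstCamera.cpp,
# with the final sink made configurable for command-line testing.
rtsp_pipeline() {
  local url="$1" sink="${2:-fakesink}"
  printf 'rtspsrc location=%s ! rtph264depay ! h264parse ! omxh264dec ! %s\n' "$url" "$sink"
}

# Usage on the Jetson Nano (the unquoted substitution intentionally splits
# the pipeline into separate gst-launch-1.0 arguments):
#   gst-launch-1.0 $(rtsp_pipeline rtsp://192.168.1.4:8554/)
```

Passing `"appsink name=mysink"` as the second argument reproduces the exact string hard-coded above.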

Now we can rebuild the sample and run the program:

```
cd ~/jetson-inference/build
make
cd aarch64/bin
./detectnet-camera -width=640 -height=480
```

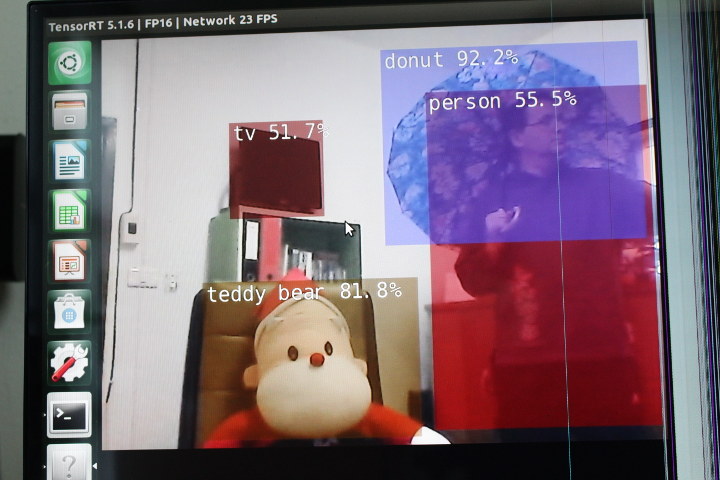

And success! Provided you accept that I’m holding a massive donut on top of my head, and that Santa Claus is a teddy bear…

The console is continuously updated with the detected objects and a timing report:

```
2 objects detected
detected obj 0 class #1 (person) confidence=0.729327
bounding box 0 (350.284058, 93.807137) (640.000000, 476.335693) w=289.715942 h=382.528564
detected obj 1 class #88 (teddy bear) confidence=0.854156
bounding box 1 (135.627579, 153.656708) (464.466187, 468.515198) w=328.838623 h=314.858490

[TRT] ------------------------------------------------
[TRT] Timing Report networks/SSD-Mobilenet-v2/ssd_mobilenet_v2_coco.uff
[TRT] ------------------------------------------------
[TRT] Pre-Process   CPU  0.36381ms  CUDA  0.40844ms
[TRT] Network       CPU 40.90813ms  CUDA 39.29630ms
[TRT] Post-Process  CPU  0.11625ms  CUDA  0.11641ms
[TRT] Visualize     CPU  0.71480ms  CUDA  0.74339ms
[TRT] Total         CPU 42.10300ms  CUDA 40.56453ms
[TRT] ------------------------------------------------
```
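For a surveillance-style use case, one could keep only confident detections from that console output. Here is a rough bash sketch, assuming the `detected obj N class #ID (name) confidence=X` line format shown above; `filter_detections` is a hypothetical helper, and the 0.8 default threshold is an arbitrary example value.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<class name> <confidence>" for each detection
# at or above a threshold (default 0.8), reading detectnet output on stdin.
filter_detections() {
  local min="${1:-0.8}"
  # Keep only "detected obj" lines, reduced to "<name> <confidence>"
  sed -nE 's/.*class #[0-9]+ +\((.*)\) +confidence=([0-9.]+).*/\1 \2/p' |
    awk -v min="$min" '($NF + 0) >= (min + 0)'   # last field is the confidence
}

# Usage on the board:
#   ./detectnet-camera -width=640 -height=480 | filter_detections 0.8
```

With the output above and a 0.8 threshold, only `teddy bear 0.854156` is kept. When piping, detectnet-camera’s output may be block-buffered, so lines can arrive in bursts; `stdbuf -oL` may help in that case.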

This sample would be a good starting point for connecting one or more IP cameras to the Jetson Nano and leveraging AI to reduce false alerts, instead of relying on the primitive PIR detection often used in surveillance cameras. We’ll talk more about CPU and GPU usage, as well as thermals, in the next post.

I’d like to thank Seeed Studio for sending the NVIDIA Jetson Nano developer kit for evaluation. They sell it for $99.00 plus shipping. Alternatively, you’ll also find the board on Amazon or directly from NVIDIA.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


Thanks for this. I have some RTSP cameras I will try this with.

It’ll be interesting to see if I get the lag that other streaming clients such as VLC have with the stream.

Would you mind sharing your entire modified gstCamera.cpp? I tried to emulate your edits, and make runs cleanly, but detectnet-camera fails with:

```
/detectnet-camera
[gstreamer] initialized gstreamer, version 1.14.5.0
[gstreamer] gstCamera attempting to initialize with GST_SOURCE_NVARGUS, camera 0
[gstreamer] gstCamera pipeline string:
nvarguscamerasrc sensor-id=0 ! video/x-raw(memory:NVMM), width=(int)1280, height=(int)720, framerate=30/1, format=(string)NV12 ! nvvidconv flip-method=0 ! video/x-raw ! appsink name=mysinkrtspsrc location=rtsp://wowzaec2demo.streamlock.net/vod/mp4:BigBuckBunny_115k.mov ! rtph264depay ! h264parse ! omxh264dec ! appsink name=mysink
[gstreamer] gstCamera failed to create pipeline
[gstreamer] (no property "location" in element "mysinkrtspsrc")
[gstreamer] failed to init gstCamera (GST_SOURCE_NVARGUS, camera 0)
```

Thank you.

Hi!

How can I change the SSD_mobilenet pretrained model with a customized one?