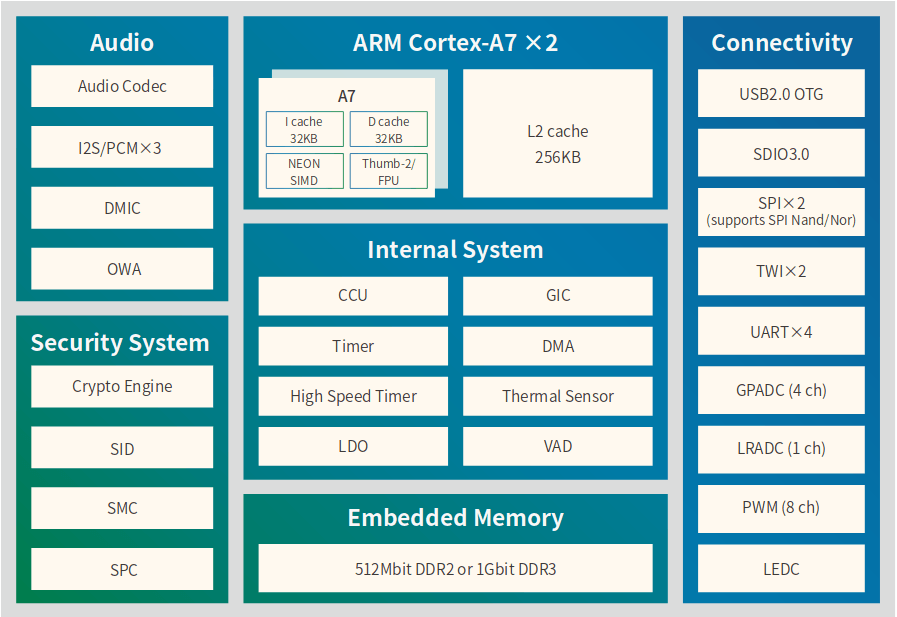

Earlier this year, Allwinner introduced some AIoT (AI + IoT) processors including Allwinner R328 dual-core Cortex-A7 processor for “low-cost voice interaction solutions” aka low-cost smart speakers. I did not pay too much attention at the processor at the time, but since then, the company has released a product brief with some more details about the processor.

We can see it integrates 64MB to 128MB DDR3 memory which should be enough to run Linux without external memory, and truly provide a low-cost solution for smart speakers, and I was told the chip may cost around $3. I was also asked whether Allwinner R328 smart speakers were already shipping.

A Google search in English did not help, so I had to switch to Chinese, and after visiting several sites, I could see some Allwinner A328 platforms including a smart speaker and a system-on-module were showcased at some event in China.

We’ve got a photo, but that much more info about the speaker itself. Processors are not typically mentioned in smart speakers specifications, so I went to Taobao and 1688.com websites to look for smart speakers that look like the one above.

“Tmall Elf Sugar R” (R) matches the design above, and sells for only 100 RMB ($14.2 US) on the website. There are several variants, so it’s a bit confusing since I can’t read Chinese. Based on the description the speaker comes with a 2-microphone array, support Bluetooth 4.2, and runs AliGenie Voice Assistant. According to Wikipedia, AliGenie Voice is an open-platform intelligent personal assistant launched and developed by Alibaba Group used in the Tmall Genie smart speaker. AliGenie is capable of smart home control, music playback, voice shopping with Taobao and Tmall, voice recognition, voiceprint recognition, as well as semantic understanding and speech synthesis.

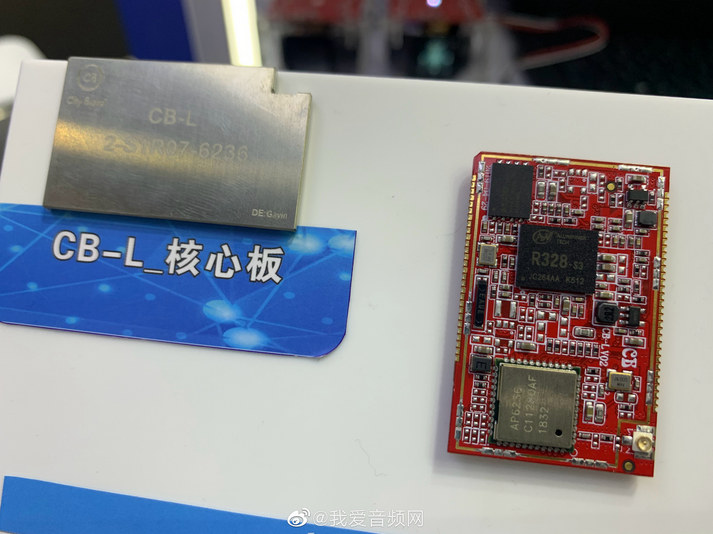

Beside the smart speaker, I also noticed an Allwinner R328 system-on-module named CB-L 2-S1R07-6236, but a web search did not yield any results. We can still the module comes with R328-S3 processor, and Ampak AP6236 802.11n WiFi 4 and Bluetooth 4.2 module, as well as a flash of unknown capacity. The company behind the design is apparently called CB which stands for… City Brand (Thanks Milkboy! See comments for details and link) well I’m not sure since the photo is blurry, but it looks like “City Biguo” or similar. Hopefully, Chinese readers may help on that one.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress

It’s expensive but there is one board with this chip on taobao:

https://item.taobao.com/item.htm?spm=a230r.1.14.112.1ce816e7zyh333&id=600269429132&ns=1&abbucket=8#detail

I can see that board is using R328-S2. I wonder what the differences are between R328-S3 and R328-S2. Maybe just the memory 64MB vs 128MB?

Oh. It’s in the product brief… R328-S2: 64MB DDR2; R328-S3: 128MB DDR3

The physical size and pinout of the two seems to differ as well. That probably means you can’t put an S3 on an S3 board if you find you need more memory after you’ve done your PCB layout.

The package size is different but the ball size and ball pitch are the same, and S3 only has one more pin, so maybe it’s possible to design a PCB that works for both?

>a PCB that works for both?

Looking at the board above again you can see two silkscreen boxes for the SoC. I guess the bigger outline is for the S3 so the layout guy didn’t put components in the way.

“There are several variants,”

There are 2 variant essentially

官方标配 = just the devices, regular and Model “R”. usually expensive ones are just new stock with new design, with minimal HW changes

anything with *套餐 means bundling, see link bellow

https://img.alicdn.com/imgextra/i4/1115488308/O1CN01AJ7frj2BF73jpufzX_!!1115488308.jpg

“The company behind the design is apparently called CB which stands for”

Its CITY BRAND, http://www.citybrandhk.com/news_view.aspx?TypeId=4&Id=452&Fid=t2:4:2

Thanks!

Thanks, Jean-Luc nice work!

As per Allwinner website: OS: Linux 4.9

R328-S2 is square 9×9 mm

R328-S3 is rectangular 9×11 mm

Looking for SKD/Datasheet/User Manual

Chinese speaking friends please chime in. If there is this speaker for 14$ with R328-S3 I’m getting one ASAP to Europe

to disassemble for all of us to see whats inside.

Would be nice if internally it uses a SoM like the one pictured. So you can take the SoM out and dump the rest of it.

That said RK3308 SoMs with wifi are $10 on taobao (https://item.taobao.com/item.htm?spm=a230r.1.14.89.7d592d11kcApkR&id=595669877515&ns=1&abbucket=8#detail).

Is PMIC integrated on RK3308? There is no PMIC on this module.

There are 5 dumb DC-DC supplies on the board so you likely won’t be able to scale any voltages along with clock frequencies.

FWIW I went for this SoM instead for my first RK3308 experience. It’s more expensive but I don’t like SPI NAND or RTL wifi -> https://item.taobao.com/item.htm?spm=a230r.1.14.191.66dc2d11tXHjWG&id=600801250471&ns=1&abbucket=8#detail

Haven’t gotten the board yet but it looks like dumb DC-DC supplies like the other one.

There is also this one:

https://www.cnx-software.com/2019/06/08/mcuzone-rk3308-som-mdk3308-ek-evaluation-kit-smart-voice-applications/

I considered that one too but the headers make it massive. It does seem to have a rockchip PMIC though so maybe the power consumption will be better.

You can certainly build and retail a smart speaker using the R328 for $14. I’ve heard that the R328 is under $3 in volume. So that SOM in the photo costs about $6 to mass produce. Throw in power supply, case, speakers – maybe $10 wholesale. That supports the $14 retail price.

The board on taobao is using an AC101 for the array mics. That is a more expensive solution which I’m not sure is worth the added costs. The analog condenser mics do have better sound reproduction, but is it needed? This solution also allows you to input the amp-out signal into the AC101. Now when you sample you can get the 4 mics and amp-out simultaneously for easier echo cancelation. AC101 is I2S based.

The cheap solution is to directly attach four PDM digital mic to the SOC. The SOC supports that. You then wire amp-out to line-in. Downside — the echo cancelling software is more complicated because the sampling is not synchronized. I wonder if there is a simple circuit you could make that turns amp-out into a PDM signal?

> I’ve heard that the R328 is under $3 in volume

That would make it just shy of the cheapest 32bit Cortex A with memory you can buy today (AFAIK the cheapest is this: https://item.taobao.com/item.htm?spm=a230r.1.14.14.31bb2e0eYacUFC&id=596354183127&ns=1&abbucket=8#detail at ~$2.6). If only it wasn’t in such a horrible to work with package and you didn’t need to order thousands of them.

Many of the Hisilicon chips with embedded memory are sub-$3. But they are all ARM9.

>But they are all ARM9.

That means the are probably on pre-DT 3.x kernels and makes getting them running with a mainline kernel a massive pain. Cortex A means if you have a working u-boot you can probably get from no kernel support to booting a buildroot initramfs in about 3 changes (a new arm/mach- dir and skeleton machine file, a device tree with the memory, gic and arch timer and some config options to enable a debug uart) to the latest upstream kernel.

They are all Linux 3.4 and all of the h.,264/265 support is closed source so you can’t switch kernels. On the other hand, a smart speaker doesn’t care that much about the kernel. You do have the source to the 3.4 kernel, just not all of their modules. So you can keep applying patches to it. 3.4 has received a lot of patches due to it being used in Android 4.2 (?)

If you don’t care about the camera and are a glutton for punishment, there is no reason you couldn’t bring these chips up to mainline.

>So you can keep applying patches to it. 3.4

I think 3.4 has been dead for a while as almost no one uses Android 4 anymore. If you don’t mind working with something that out of date and are never going to put in on a network I guess you can get away with it but I think you’d be setting yourself up for a lot of pain in the long run.

>If you don’t care about the camera and are a glutton for punishment,

>there is no reason you couldn’t bring these chips up to mainline.

I did that for the $2.5 chip above. Cortex A has enough standardised that it’s actually possible to do without destroying your sanity.

That $3 number is from single source. That ‘s not today’s spot price, more like commit to 1M chips spread over a year kind of price.

Hmm, the SoC have old AW logo, kinda strange

It is brand new chip. These may be sample units not produced on the high volume production lines.

This is a bit tangential, but what’s a good option for getting a microphone array to play around with on the computer? I’m struggling to find good info and I thought someone might know a thing or two here

I saw the ReSpeaker-Mic-Array-v2-0 which is sorta what I want, but it’s a bit pricey but probably mostly do to the on-board DSP stuff which I don’t need. I’m actually more interested in trying my hand at implementing the DOA-type algorithms on the computer side myself instead of having it all done for me on-chip.

But even if I get a ReSpeaker and use it as a 4 mic sound card, I’m unclear as to how to get the microphone lines in-sync. For instance the ReSpeaker wiki shows recording from all 4 speakers at once in Audacity – but in code, how would I make sure the sound I’m reading off is lined up timewise? From what little sound programming I’ve done it seems you’re usually just pulling bytes off of some sound buffer and there aren’t exactly time stamps to go with it… (On a DSP ofcourse this is a nonissue!)

I could have some reference tone or impulse for calibrating the lines time-wise, but it’d be nice to avoid that.

If anyone has some pointers, let me know 🙂

I’d expect all ADCs to run of the same clock as a bare minimum.

The absolute cheapest way is to buy a Playstation Eye. $7 and they are USB. 4 mic channels and ALSA driver already in Linux mainline.

https://blog.michaelamerz.com/wordpress/trying-respeaker-mic-array-v2-0/

Don’t worry about syncing. The syncing is done in hardware. When you read from the ALSA device you will get four samples at a time (one from each mic), those samples were simultaneously captured by the hardware and then serialized later for you to read them.

Thanks so much for the pointers Jon. The price isn’t a huge turn off, but it’s good to know my options. I think the Play station eye not being omnidirectional will needlessly complicate things for me at the moment. I will look into ALSA. I had done some stuff through Java’s sound API and it may has obfuscated the 4 samples-at-a-time feature b/c of it’s own API

You can plug the Playstation Eye into your desktop to allow for easy software development.

Also, PulseAudio supports microphone arrays.

https://arunraghavan.net/2016/06/beamforming-in-pulseaudio/

What’s funny is that I have the same exact webcam and I never realized it had two microphones 🙂

I just confirmed I get both mics in Audacity (though I needed a reboot after plugging it in). Looks like I’ve got enough to get started. Thanks again for the links and info. It’s been very helpful for getting started with this

Gone this route also beamforming doesn’t work and is already dropped from webrtc and will be the same on the next update of pulse audio.

I am not trolling you John but jusy went through all this and honestly I have read the same and they are bum steers. 🙂

Beamforming was dropped from google’s webrtc because no one has array mics in their web browsers. AFAIK libwebrtc-audio-processing is going to keep the beamforming code. Pulse audio uses libwebrtc-audio-processing.

https://freedesktop.org/software/pulseaudio/webrtc-audio-processing/

There are no updates to libwebrtc-audio-processing. from after when beamforming was removed from google webrtc.

It is not a huge amount of code.

There’s also a discussion about PS3 eye and Kinect (See comments) @ https://www.cnx-software.com/2017/08/31/those-charts-show-the-benefit-of-microphone-arrays-for-hot-word-detection/

Oh my. The rabbit hole keeps going deeper. I assume that that’s the Kinect V1.0. They’re a bit harder to get on Taobao but I did find this interesting PDF about beamforming in Java with the Kinect

https://fivedots.coe.psu.ac.th/~ad/kinect/ch15/kinectMike.pdf

Some details are a bit vague. He can only use two mics at a time (may be a Java issue) He spend a lot of time setting up the Java Sound API but then when he gets to beam-forming he drops it entirely and uses a library (that I think works with the Kinect SDK)

The mics are also rather weirdly spaced on the Kinect.. so there are some unknowns and room for complications

I think working directly with ALSA like Jon suggested is probably the more no-nonsense route. And his direct testimonial about the PS3 Eye leaves me with a bit more confidence 😛

And umm.. any pointers for deciphering what Drone said: ” mic polling can be surprisingly effective, sometimes easily outperforming complex synthetic mic arrays.” ?

From what I understand polling is about how you get the data from the system and synthetic mic arrays is a postprocessing thing – but I’m prolly misunderstanding something

I’d get something working first and then worry about the PhD research stuff. You will need hot word detection – here is a free open source version.

https://github.com/nyumaya/nyumaya_audio_recognition

Thanks for that but I’ve actually got a few small projects in mind that aren’t ML related. More in the art/visualization space and point to point data over audio stuff. Nothing smart-speaker related! 🙂

Example source for data over audio

https://github.com/voice-engine/hey-wifi

Another option is Chirp which partnered with Arduino recently: https://www.cnx-software.com/2019/08/13/arduino-partners-with-chirp-to-enable-data-over-sound-m2m-connectivity/

But it’s not open source.

The library they use is very interesting: https://github.com/quiet/quiet

As well as it’s dependencies liquid-dsp and libfec

I’ll need to dig into this

Have you had time to look into it? I’ve asked somebody to test hey-wifi on Raspberry Pi, and he’s struggling to make it work.

PS3eye often gets recommended but the drivers in linux don’t work 100%. You get alsactl errors simple uitils like alsamixer don’t work.

At one stage it did and then the changed the multuchannel IEC setups in linux and its not that great.

Even the sample rate it states is all over the place.

The best prob for the pi is the respeaker 2mic as then capture/playback are on the same clock and you might have a chance of software AEC.

Without AEC if you have any media playing you go into forrest mode and are unable to tell it to stop or at least it will not hear you as its full of echo.

PS3eye for a media playing AI is a piece of junk and where the last one somone advised me now resides.

PS3eye is only recommend because it is dirt cheap and has open source support. You could fix up the drivers and send a patch into the kernel if you want. It is not a complicated piece of hardware, shouldn’t be very hard to fix the drivers.

The respeaker stuff works, but all of the interesting bits (beamforming, aec, agc) are closed source. That’s fine if you want a black box solution.

Many ARM CPUs support PDM mics. Like this one:

https://www.adafruit.com/product/3492

Several times I have wired up four of these to the PDM inputs on RK1808, V5, H6, etc… It is very easy to do with common jumper wires. I solder the wires into the PDM boards and then plug the other ends into the dev board connectors.

Really cool gadget! (Like an upgraded Amazon Echo). BTW the official name seems to be “TMall Genie”, says so on the front of the gadget (in recessed text). I did a quick translation of the fun features… https://docs.google.com/presentation/d/1K1GtkdrRbwVOaXWCqo0TiFOKYjn1-CD3gy7PCjFdy64/edit?usp=sharing

Somebody tried Tmall Elf Sugar and shot a video. It’s in Chinese, but turning on caption translation turns out mostly OK, once the “cat sperm” is out of the way…

https://www.youtube.com/watch?v=8Dcrb_ODwA8