The Intel 8080 processor was released in April 1974, the Motorola 6802 in 1976, and people in their late 40’s, 50’s or older may have experimented with those more than 40 years ago.

People may still have those at home, but surely it’s not possible to purchase them in 2019 if that nostalgic feeling suddenly gets to you, right? Apparently it is, as Wichit Sirichote, an associate professor at the Department of Applied Physics at King Mongkut’s Institute of Technology in Bangkok, Thailand, has designed a few development kits based on those older processors.

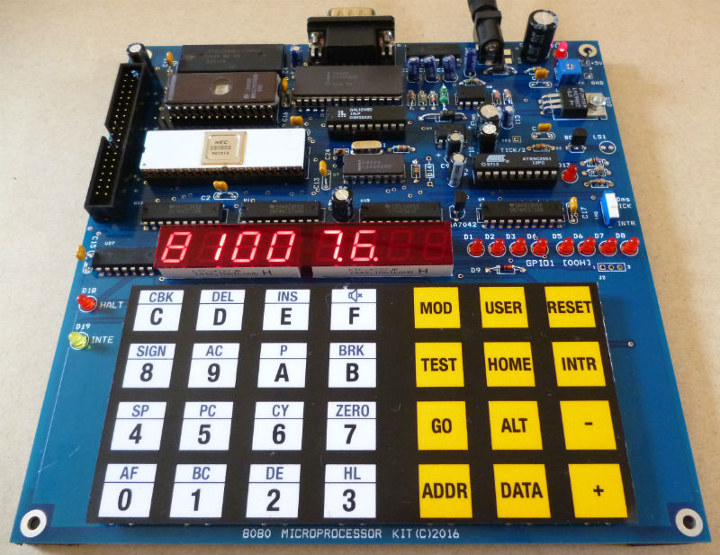

Let’s take the 8080 devkit as an example to check out the features of such kits:

- CPU – NEC 8080 CPU clocked at 2.048MHz

- Memory & Storage – 32KB RAM, 32KB EPROM

- Memory and I/O decoder chip – GAL16V8D PLD

- Oscillator – 8224 chip with Xtal frequency of 18.432MHz

- Bus controller – 8228 chip with RST 7 strobing for interrupt vector

- Display – 6-digit 7-segment LED display

- Keyboard – 28 keys

- RS232 serial port – Software-controlled UART 2400 bps 8n1

- Debugging LED – 8-bit GPIO1 LED at location 00H

- Tick – 10ms tick produced by 89C2051 available for time trigger experiments

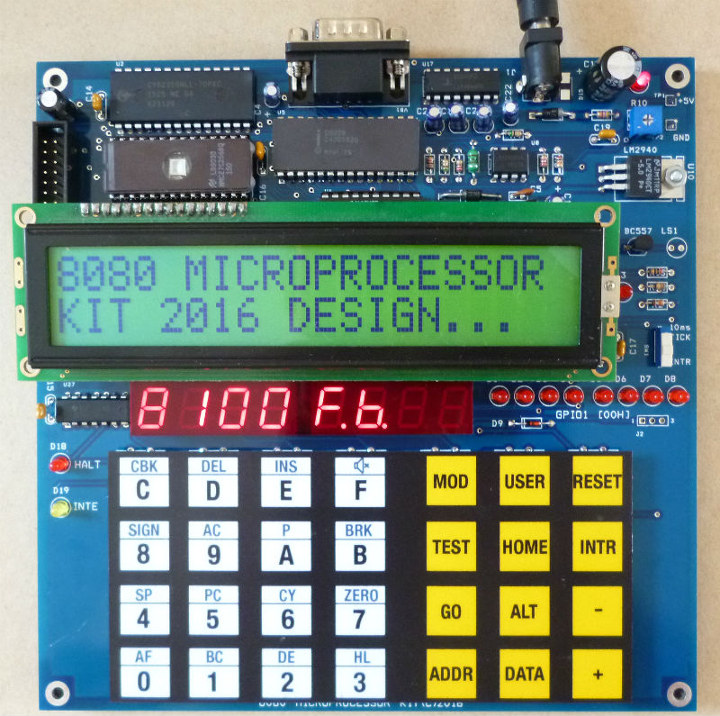

- Text LCD interface – direct CPU bus interfaced text LCD

- Expansion header – 2x 20-pin header

- Misc – KIA7042 reset chip for power brownout reset; HALT and INTE bit indicator LEDs

- Power conversion – +12V and -5V power supplied via on-board MC34063 DC/DC converter and Intersil ICL7660

- Power consumption – 500mA @7.5V via external AC adapter
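To give a feel for what a "software-controlled UART" at 2400 bps 8n1 means on a 2.048MHz CPU, here is a minimal Python sketch of the bit timing and the frame layout. It is an illustration only, not the kit's monitor code; the function names are made up for this example, and it assumes the usual 8N1 convention of one start bit, eight data bits sent least significant bit first, and one stop bit.

```python
# Sketch only: bit timing and 8N1 framing for a bit-banged serial port.
CPU_HZ = 2_048_000  # CPU clock from the spec list above
BAUD = 2400         # serial speed from the spec list above


def cycles_per_bit(cpu_hz=CPU_HZ, baud=BAUD):
    """CPU clock cycles that elapse during one serial bit."""
    return cpu_hz / baud


def frame_8n1(byte):
    """Line levels for one frame: start bit (0), 8 data bits LSB first, stop bit (1)."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in range 0..255")
    data_bits = [(byte >> i) & 1 for i in range(8)]
    return [0] + data_bits + [1]


if __name__ == "__main__":
    print(f"{cycles_per_bit():.1f} CPU cycles per bit")
    print(frame_8n1(ord("A")))
```

With these numbers the software has roughly 853 clock cycles to spend on each bit, which is why a slow rate like 2400 bps is comfortable for a timing loop on this class of CPU.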

More details about the kit, including some sample code in assembly, can be found on the project page.

If you got started many years ago with another platform, Wichit Sirichote has a choice of other development kits based on relics of the past:

- CDP1802 Microprocessor Kit – Build the Vintage COSMAC CDP1802 kit.

- Intel 8086 Microprocessor Kit – Build the popular x86 based microprocessor kit with the 8086 CPU.

- Motorola 6809 Microprocessor Kit – Build the 8-bit microprocessor kit with the 6809 CPU.

- Motorola 68008 Microprocessor Kit – Build the 32-bit microprocessor kit with the 68008 CPU.

- Intel 8088 Microprocessor Kit – Build the 16-bit microprocessor kit with a popular 80C88 CPU.

- 8051 Microcontroller Kit – New design 8051 single board with 7-segment and hex keypad.

- Motorola 6802 Microprocessor Kit – Back to 1976, 6802 Microprocessor.

- Z80 Microprocessor Kit – Easy use with MPF-1 commands compatible, Z80 kit.

- 6502 Microprocessor Kit – Back to classic CPU with MOS Technology 6502 CPU.

- 8085 Microprocessor Kit – Nice design with smaller size PCB of MTK-85 kit.

I personally got started with a ZX-81 computer and then an Intel 8086 PC in the early 90’s so maybe I’m not that old after all 😉

You’ll find all the information about the microprocessor and microcontroller training kits on Mr. Sirichote’s website. There’s no purchase link on the website, but I heard about the projects via Rick Lehrbaum, who wrote about his experience with the 8080 kit and explains that you can contact Mr. Sirichote by email (available on his website) if you’re interested in ordering one of his microprocessor kits.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


z80 is found to this day as ez80, a 24-bit 50MHz *pipelined* MCU with a z80 BC mode. There’s a very nice MakerLisp board based on ez80 + 2MB SRAM + SD + USB, hosting a bare-metal version of LISP.

ZX81, ZX Spectrum 48K, Atari ST FM and STE, 386 Intel, Cyrix, AMD etc

@Blu

You mean these?

https://www.tindie.com/products/lutherjohnson/makerlisp-ez80-lisp-cpm-machine/

https://www.digikey.com/catalog/en/partgroup/ez80-acclaim/15884

Yep. The first one is MakerLisp board.

This is great. That’s exactly how computer science should be taught to kids. The problem is that they’ve got so much used to playing games and using advanced applications nowadays that they can easily get bored with what they can achieve here. But when you touch such kits you quickly understand the cost of a byte or of an instruction. One benefit of such a kit compared to an arduino+PC is that it’s possible to be completely autonomous. I mean, with the 2-line LCD and a minimalistic monitor capable of assembling/disassembling instructions, it’s possible to write your own code directly from the keyboard and experiment with GPIOs. User interface is a critical aspect that many micro-controller kits have underestimated, suddenly making the device appear as the stupid low-performance stuff hanging off a cable attached to a powerful PC. But programming these on the living room’s table with no PC around has another dimension.

I personally used to experiment with an old XT-compatible motherboard that I repaired a long time ago. It was my first real PC, and when I replaced it with a 386sx I went deeper into experimenting with it. I noticed that the 8087 co-processor socket and the 8088 processor had almost the same pinout (for obvious ease of routing), and that they used a very simple RQ/GT bus access protocol. I managed to place two 8088s on this board with a few pins bent and a 7400 NAND soldered on top of the second one to adjust a few signals and flip the A19 pin. This way when I released the RST pin, the second processor would boot from 7FFF:0 (512kB-16) where I could place some dummy code using the “debug” utility running on the first one. I could make my floppy drive LED blink this way, and verified that it continued to blink while starting a game on the PC. It was my very first encounter with real multi-tasking and multi-processing! Under DOS and using standard PC/XT parts 🙂

Thus I can only encourage students to play with such kits and try to extend them to do fun things.
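The 7FFF:0 figure in the comment above follows from 8088 real-mode addressing: the CPU starts fetching at FFFF:0 after reset, and inverting address line A19 moves that fetch into the lower half of the 1MB space. The arithmetic can be checked with a small Python sketch; the helper name is made up for this illustration, and nothing here models the actual board wiring.

```python
# Sketch only: where an 8088 fetches its first instruction when A19 is inverted.

def physical_address(segment, offset):
    """8086/8088 real-mode address: segment * 16 + offset, wrapped to 20 bits."""
    return ((segment << 4) + offset) & 0xFFFFF


A19 = 1 << 19  # most significant of the 20 address lines

# After reset the 8088 starts fetching at FFFF:0000.
RESET_VECTOR = physical_address(0xFFFF, 0x0000)

# With A19 inverted (the NAND gate trick), memory sees this address instead.
REMAPPED_VECTOR = RESET_VECTOR ^ A19

if __name__ == "__main__":
    print(f"reset vector:     {RESET_VECTOR:05X}")
    print(f"with A19 flipped: {REMAPPED_VECTOR:05X}")
```

The remapped address is 16 bytes below the 512kB boundary, which is ordinary RAM on a PC/XT and therefore writable from the first processor.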

Computer science should be taught to kids with logo turtle IMHO. Learning how to print stuff on the terminal, flash LEDs is pretty boring. Having a little robot with a marker pen in it draw a massive picture you coded teaches the same concepts but appeals to far more kids.

Fun, because for me it was the exact opposite. I really didn’t like logo, finding it totally useless and feeling I was just wasting my time since I’d never meet it again in my life. On the contrary, when you start with a blinking LED, you then switch to a 7-seg LED display, then you connect 4 of them and have a clock, then add a buzzer and make your alarm clock, then connect it to a high power lamp and/or a radio etc… The possibilities are countless.

Likewise. Of all aspects of CS I’ve studied at high-school, logo has produced the least impact on my career and general formation of interests.

Logo was one of the “killer apps” on the RISC PC when it first came out. You would have thought you’d be all over it.

Anyway. Way to miss the point yet again. I wasn’t talking about teaching almost-adults in a CS class. The original post was about “kids”.. you know those little things that are more worried about what’s for dinner than the lifelong impact of something they learned at school.

> Logo was one of the “killer apps” on the RISC PC when it first came out. You would have thought you’d be all over it.

I was never into logo. Besides, I was in high school a tad before RISC PC.

Speaking of logo and little kids, Scratch is what some of them are taught in 3rd grade nowadays. Yes, Scratch is a distant logo descendant, but things are very, very far from turtle graphics today.

When it comes to CS fundamentals, games like Human Resource Machine and 7 Billion Humans are what I recommend to young kids (and adults).

Scratch is great and all but kids lose interest pretty quickly if it’s just doing something on the screen. The old turtles were good because it brought logo off of the screen and crashing around the room. At my primary school we had a RISC PC with the logo software and a turtle and a BBC micro with some sort of GPIO thing that ran a little traffic light setup and some motors to operate a crossing barrier.. The BBC micro barely got touched.

logo is lisp though, so depending on how you like it, you can go pretty “deep” with it

@Willy, absolutely. Young people’s interest in the fundamentals and what makes things tick in our industry is best lit via simple (by today’s standards), graspable hw. Whether that’d be hw architectures and machine languages, HL/machine interactions, maker projects and interfacing with the physical world — all these are best left to a simple MCU-class system, with as few things in the way as possible. I feel lucky for having grown up in the 8-bit era.

If you’re going to bore kids to death with that stuff at least use something that makes sense (i.e. doesn’t have weird artefacts of being 8 bit like bank switching) like the m68k. I can only speak for those of us that didn’t have to craft our own valves before even thinking about coding but a lot of people use something like http://www.easy68k.com/ for their low level/systems architecture modules.

I’m not speaking of using *exactly* these devices with their numerous design mistakes, but something *as simple*. The 8088 instruction set was very simple (yet not as simple as other CPUs of this era) and the way it worked was easy to understand. It didn’t yet impose word alignment on you and was powerful enough for many use cases. Hey, after all it ultimately ruled the world. But m68k could be excellent as well, or 8051 as well (for other reasons, even simpler).

These machines allow the developer to understand every single part they are coding and the associated impacts. Nowadays with MMUs and operating systems running in protected mode, a lot of invisible things have impacts out of your control: TLB misses, L1/L2/L3 cache misses, frequency scaling, etc. We’re all used to not getting the same performance out of the same program when run 3 times in a row; all we keep in mind is “it’s probably something like this”. There’s no “probably” on an 8088. You issue “POP CS” (0x0F, now reused as a prefix) and the next instruction is fetched from another memory location; it does what you imagine it does. Being able to verify guesses is an important part of learning.

The same could be done with PIC microcontrollers (but they lack useful instructions like multiply and divide, making them boring to use), or AVRs, but these often rely on a Harvard architecture and cannot execute directly from RAM. Under DOS you could run “DEBUG” (it was almost a shell for me), issue “A100” and type 2 instructions, press enter twice, run “P” to proceed step by step and see the effect on registers (or on the hardware if you change some LEDs or video settings, or hear it if you enable the buzzer or change the PIT frequency). It is extremely interactive and the possibilities are countless for the curious.

>The same could be done with PIC microcontrollers

*shudder*

>to proceed step by step and see the effect on registers

If you really want to do that you can do it with a $.30 STM32 via SWD.

Even older pros writing Java should play with such low end hardware to understand basic things that they have no clue about 🙂

How would that help them? “older pros” writing Java would have usually had experience with at least JNI (which requires knowing how these machines actually work) because it’s almost impossible to write anything complex with totally pure Java. Some of them might have experience manipulating byte code with asm.

That would help them be nice with memory rather than stupidly wasting it. That would help them understand what kind of code a CPU really runs rather than an abstracted VM (and I can guarantee you not many Java devs have a clue what the JVM is and even less what kind of code gets generated by JIT).

I strongly believe doing low level programming is a benefit for every programmer. Even though of course no one should only do low level prog, just practice it a little 🙂

>That would help them be nice with memory rather than stupidly wasting it.

“Waste” is subjective. Using a lot of memory to do something doesn’t mean that it’s a waste. Especially as it’s not a resource you consume and never get back. And almost all Java devs that have been working with it for years will know about native buffers and when it makes sense to use them.

>That would help them understand what kind of code a CPU really runs

Most Java developers know what byte code is and what JIT does. Again, many that have been using it for years have done stuff like generating byte code on the fly, e.g. I’ve seen a generic JSON deserialiser that created the byte code to deserialise an input on the fly and was as fast as a C implementation that could only deserialise a preset schema.

> rather than an abstracted VM (and I can guarantee you not many of

>Java devs have a clue what the JVM is and even less what kind of code

>gets generated by JIT).

If the code the JIT generates A: works, B: works fast enough, C: fits on the machine you want to run it on, do you care what it looks like? Do you care how the sausage gets made as long as it tastes good and doesn’t poison you?

>I strongly believe doing low level programming is a benefit for every programmer.

>Even though of course no one should only do low level prog, just practice it a little

Java, Python etc developers will all do some level of low level programming eventually by integrating C code to do something that isn’t possible otherwise.

This is simply not my experience. But OK.

You don’t get to meet many good devs while being neck deep in multi gigabyte code bases all day long?

I know people working in a company that has a huge C++/Java code base (multi million lines). And the consensus is that they have no clue what’s happening at lower level (even some of the C++ devs, though these tend to be more knowledgeable) and simply don’t care.

This matches my experience teaching microprocessor micro-architecture 15 years ago in a computer science master class at university. Most of the students didn’t care (and that was the same for all the other higher level courses such as functional programming) but they were happy designing GUI in their Java IDE.

Of course that doesn’t mean these people are bad at their job. They just could be better and definitely have the capacity to understand basic low level things.

The neglect toward hw architectures by both university curricula and the students themselves is alarming — it will bite the industry in the ass fairly soon. The notion that the industry provides opportunities for big money no matter what makes students seek the course of least effort through the academic programs and subsequent early years of employment in the industry as well. ‘Why do I need to bother with arcane things like hw architectures when I have my salary guaranteed?’ is yielding more and more prospective employees who don’t know the first thing about computing.

> is yielding more and more prospective employees

>who don’t know the first thing about computing.

Go for an interview in “the industry”. All of them in the last few years are data structures etc on a white board. It’s stuff that’s not relevant to most positions people are going for but they need to grasp on some level to get through the first round.

>And the consensus is that they have no clue what’s happening at lower level

Do you actually know that for sure or is it your automatic bias against them?

>Of course that doesn’t mean these people are bad at their job.

So why does it actually matter then?

>They just could be better and definitely have the capacity to

>understand basic low level things.

Better at what? Better at knowing some junk about old 8 bit crap with bank switching, weird zero page stuff etc for no reason?

> Better at what? Better at knowing some junk about old 8 bit crap with bank switching, weird zero page stuff etc for no reason?

Often it’s “better at doing stuff that is more friendly to the computer”. Like knowing the size of a struct you use in an array so that each reference only does a single bit shift and not a more painful multiply that, in addition to taking more cycles, clobbers two registers. Stuff like not using divisions in portable code supposed to be fast because many CPUs simply don’t have a divide instruction, or using them with constants instead of variables so that the compiler can replace the division with a multiply and a shift. Like having a clue what a cache line is to avoid false sharing in threaded code (this one will not be learned on 8088 though).

There is a *lot* to learn on minimalist machines, and actually that’s the best contribution I’m seeing from Arduino to our industry, even if a lot is hidden behind the C++ compiler. At least you get a sense about the type of program you can fit in 8kB and run in 0.5kB of RAM, and you quickly understand the impacts on RAM size of using recursive functions, and the impact on code size of not using native size integers.
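The two arithmetic tricks mentioned in the comment above (indexing an array of power-of-two-sized structs with a shift, and dividing by a constant with a multiply and a shift) can be sketched in a few lines. This is an illustration in Python, not the output of any particular compiler, and the function names are made up for the example. The constant 0xCCCCCCCD with a shift of 35 is the well-known reciprocal for unsigned 32-bit division by 10.

```python
# Sketch only: the arithmetic behind "shift instead of multiply" and
# "multiply and shift instead of divide", shown for unsigned 32-bit values.

def offset_by_multiply(index, struct_size):
    """Byte offset of element `index` using a general multiply."""
    return index * struct_size


def offset_by_shift(index, log2_size):
    """Same offset when the struct size is a power of two: one left shift."""
    return index << log2_size


MAGIC_DIV10 = 0xCCCCCCCD  # ceil(2**35 / 10)
SHIFT_DIV10 = 35


def div10_u32(n):
    """n // 10 for 0 <= n < 2**32, computed without a division."""
    if not 0 <= n <= 0xFFFFFFFF:
        raise ValueError("n must fit in an unsigned 32-bit integer")
    return (n * MAGIC_DIV10) >> SHIFT_DIV10


if __name__ == "__main__":
    print(offset_by_shift(5, 4), offset_by_multiply(5, 16))
    print(div10_u32(12345), 12345 // 10)
```

The shift version only works because 16 is a power of two; a 12-byte or 20-byte struct would need the multiply, which is the point about knowing your struct sizes.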

>Often it’s “better at doing stuff that is more friendly to the computer”.

If you’re using Python, Java etc then you already don’t really care about that even if you know about it. Even if you care what can you do about it? Are you going to rewrite everything in C and make your job considerably harder and time consuming just to feel good about not using as much memory on your machine with 64GB of RAM? Wasting memory is a horrible crime but wasting time worrying about inconsequential things isn’t? Isn’t “premature optimisation” one of the sins the good Knuth warned us about?

>actually that’s the best contribution I’m seeing from Arduino to our industry,

I don’t really think Arduino helps with teaching any of the concepts you guys think are super important. Yes there are things in Arduino that deal with the AVR being a tiny 8 bit MCU like hand crafted assembly routines but how many Arduino users actually know how that works or even exists? Most casual Arduino users don’t know what an interrupt is because they’ve been fine with using delay() all over their code to achieve the same result. Just like Java those that have been doing Arduino stuff for a while will sometimes move onto just using avr-gcc directly because it’s not possible to achieve what they need with the abstractions provided.

Knowing when the tool isn’t fit for purpose any more is much more important than constantly second guessing the tool because you’re worried it’s not doing the right thing.

Anyhow, the best thing Arduino contributes to our industry is removing the barriers of entry imposed by snobs. Seriously, we have a guy insinuating that Java guys don’t have all this magic knowledge when only a few days ago he was rattling on about how you must debug on the target machine and wanted to invalidate my opinion based on how much disk space his code takes up.

There was nothing to invalidate about your opinion — it was an argument from ignorance, to boot.

you would be surprised how much you can do without JNI. I’ve never used it myself and I doubt any of my colleagues did

One thing bothers me in the specs for the 8080 kit: its crystal frequency. Do they seriously run the 8080 at 18 MHz???

As I understood it the CPU runs at 2.048 MHz but the Xtal is 18 MHz and gets divided to the right frequency.

A 2.048 MHz crystal would likely be much more costly these days (since no one is using that speed), so yeah, a frequency divider is being used… (but I wouldn’t be that surprised if the 8080 could run at 18MHz these days…). There is likely a divide by 9 or something in the clocking, like you find on older 8051’s.
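The guess about a divide by 9 matches the two figures in the spec list, and the 8224 clock generator does divide its crystal input by 9 to produce the 8080 clock phases. A minimal Python sketch of the arithmetic follows; the function name is made up for this illustration.

```python
# Sketch only: deriving the 8080 clock from the crystal on the kit.
XTAL_HZ = 18_432_000  # crystal frequency from the spec list
DIVIDER_8224 = 9      # the 8224 clock generator divides the crystal by 9


def cpu_clock_hz(xtal_hz=XTAL_HZ, divider=DIVIDER_8224):
    """CPU clock frequency produced by dividing the crystal frequency."""
    return xtal_hz // divider


if __name__ == "__main__":
    hz = cpu_clock_hz()
    print(f"CPU clock: {hz / 1e6:.3f} MHz, period {1e9 / hz:.1f} ns")
```

So the 8080 itself runs at 2.048MHz, and only the oscillator stage ever sees 18.432MHz.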

I have fond memories of my first computer with a Z80 (well NSC800 in fact) back in ’83. I still remember the encoding of most instructions…

I then switched to 68k and SPARC. x86 marked the end of assembly programming for me, what an abomination it was and still is (but what great processors Intel and AMD are making!). I’m now happy doing assembly on ARM though I no longer know any hex opcodes 🙂

There is always going back to Basic or Forth for those liking a brain twister.

his ebay shop: https://www.ebay.com/sch/kswichit/m.html

Get your hands dirty

https://hackaday.io/project/19000-a-4-4ics-z80-homemade-computer-on-breadboard

One of my first computers was an S-100 with a Z80 based processor board from California Computer Systems. It ran CP/M 2.2. In those days if you added a peripheral like a disk controller or a printer you had to write your own driver routines in assembler in the BIOS. That’s when BIOS meant basic input output system. Yep, in those days you knew how stuff worked, or it didn’t work. BTW a 64K RAM board cost $600 in 1980 dollars, about $1800 today!!! The CCS board documentation was superb. It still is!!

Interesting discussion.

I really think that playing with one of these would be a waste of time, but kids do seem to learn from apparently wasting time.

I would at least like a Teletype with a paper-tape reader/punch.

Most of these processors have stupidly complex instruction sets. The PDP-8 has a much simpler instruction set if the goal is to introduce kids to “real” processors.

(I haven’t turned on my Altair 8800 in at least 20 years, so I don’t seem to be willing to put an effort into nostalgia. On the other hand, I did just watch a YouTube video of a VCF talk by one of the designers of the Atari 800.)