Code is continuously written and updated for new features, optimizations, and so on. Those extra lines of code sometimes come at a cost: a security bug gets inadvertently introduced into the code base. The bug is eventually discovered, a report is filed, and a software fix is committed to solve the issue before the new software or firmware is pushed to the end user. This cycle repeats over and over, which means virtually no software or device can be considered totally secure.

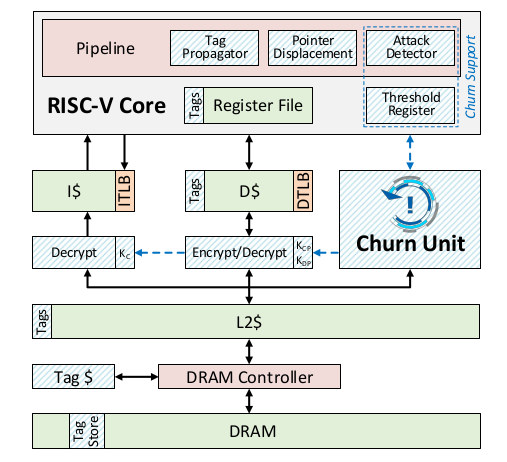

The University of Michigan has developed a new processor architecture called MORPHEUS that blocks potential attacks by encrypting and randomly reshuffling key bits of its own code and data several times per second through a “Churn Unit”.

The new RISC-V based processor architecture does not aim to solve all security issues, but focuses specifically on control-flow attacks made possible, for example, by buffer overflows:

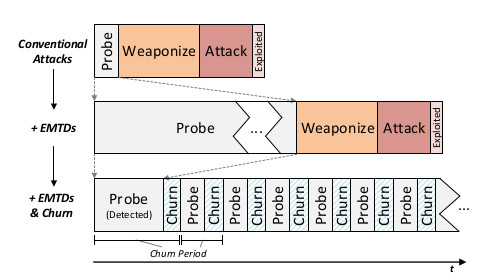

> Attacks often succeed by abusing the gap between program and machine-level semantics, for example, by locating a sensitive pointer, exploiting a bug to overwrite this sensitive data, and hijacking the victim program’s execution. In this work, we take secure system design on the offensive by continuously obfuscating information that attackers need but normal programs do not use, such as representation of code and pointers or the exact location of code and data. Our secure hardware architecture, Morpheus, combines two powerful protections: ensembles of moving target defenses and churn. Ensembles of moving target defenses randomize key program values (e.g., relocating pointers and encrypting code and pointers) which forces attackers to extensively probe the system prior to an attack. To ensure attack probes fail, the architecture incorporates churn to transparently re-randomize program values underneath the running system. With frequent churn, systems quickly become impractically difficult to penetrate.
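To make the "encrypt, then churn" idea more concrete, here is a toy Python model. It is only a sketch under loud assumptions: XOR with a random 64-bit key stands in for whatever cipher the hardware actually uses (XOR alone offers no real protection), "memory" is a Python dict, and the class and method names are hypothetical. It shows the key property described above: after a churn, the running program still sees the same decrypted pointer, but a pointer forged with a key the attacker probed earlier decrypts to garbage.

```python
import secrets

MASK64 = (1 << 64) - 1


class ToyChurnMachine:
    """Toy model of pointer encryption with periodic re-keying ("churn").

    Assumption: XOR with a 64-bit key is a stand-in for a real hardware
    cipher. It only illustrates re-randomization and is NOT secure.
    """

    def __init__(self):
        self.key = secrets.randbits(64)
        self.memory = {}  # slot name -> pointer value as stored (encrypted)

    def store_pointer(self, slot, ptr):
        self.memory[slot] = (ptr ^ self.key) & MASK64

    def load_pointer(self, slot):
        # Pointers are only decrypted at the moment they are used.
        return (self.memory[slot] ^ self.key) & MASK64

    def churn(self):
        # Pick a fresh key and re-encrypt every stored pointer under it,
        # so the decrypted values seen by the program do not change.
        new_key = secrets.randbits(64)
        for slot, enc in self.memory.items():
            self.memory[slot] = (enc ^ self.key ^ new_key) & MASK64
        self.key = new_key


m = ToyChurnMachine()
m.store_pointer("ret", 0x401000)
probed_key = m.key                 # attacker's probe reveals the current key
m.churn()                          # re-randomization happens before the attack lands
print(m.load_pointer("ret") == 0x401000)  # True: the program is unaffected
m.memory["ret"] = 0xDEADBEEF ^ probed_key  # forged with the now-stale key
print(hex(m.load_pointer("ret")))  # almost certainly not 0xdeadbeef
```

The relocation half of the "ensemble" (moving where code and data live) is left out to keep the sketch short; the same pattern of "re-randomize everything, keep the program's view consistent" would apply.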

The chip’s churn period is set to 50 ms by default, since at that rate it only slows performance by about 1%, and re-randomizing that often is said to be several thousand times faster than the fastest electronic hacking techniques. The rate can be adjusted, however, and the processor increases it if the built-in attack detector flags a suspected attack.
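A 50 ms period works out to 1000 / 50 = 20 re-randomizations per second. The adaptive behavior could be sketched as a small scheduler like the one below. This is a hypothetical policy, not the one from the paper: the faster 10 ms period under attack and the "relax after 5 quiet churns" rule are assumptions made for illustration, and the ~1% overhead figure is not modeled.

```python
from dataclasses import dataclass

DEFAULT_PERIOD_MS = 50.0  # default churn period quoted in the article


@dataclass
class ChurnScheduler:
    """Hypothetical churn-rate policy: 50 ms by default, faster under suspicion.

    attack_period_ms and quiet_churns_before_relax are illustrative
    assumptions, not parameters taken from the Morpheus paper.
    """
    period_ms: float = DEFAULT_PERIOD_MS
    attack_period_ms: float = 10.0
    quiet_churns_before_relax: int = 5
    _quiet: int = 0

    def on_churn(self, attack_suspected: bool) -> float:
        """Called at each churn; returns the delay (ms) until the next one."""
        if attack_suspected:
            # Detector fired: churn faster and reset the quiet counter.
            self.period_ms = self.attack_period_ms
            self._quiet = 0
        elif self.period_ms < DEFAULT_PERIOD_MS:
            # Count quiet churns before falling back to the default rate.
            self._quiet += 1
            if self._quiet >= self.quiet_churns_before_relax:
                self.period_ms = DEFAULT_PERIOD_MS
                self._quiet = 0
        return self.period_ms

    def churns_per_second(self) -> float:
        return 1000.0 / self.period_ms


s = ChurnScheduler()
print(s.churns_per_second())          # 20.0
s.on_churn(attack_suspected=True)
print(s.churns_per_second())          # 100.0
for _ in range(5):
    s.on_churn(attack_suspected=False)
print(s.period_ms)                    # 50.0
```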

The MORPHEUS architecture was tested against real-world control-flow attacks, including stack overflow, heap overflow, and return-oriented programming (ROP) attacks, and all of these attack classes were stopped in a simulated Morpheus architecture.

You can find more details in the paper entitled “Morpheus: A Vulnerability-Tolerant Secure Architecture Based on Ensembles of Moving Target Defenses with Churn”.

Thanks to TLS for the tip.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


The limit to such mechanisms is the time it takes to debug your code. In 1996 I did something along those lines with a friend. We wanted to protect one of our programs against reverse engineering, and we used two interleaved state machines for this, each using the other one’s outputs to make progress: one provided function pointers and the other their arguments. Their sequence was pseudo-random and allowed the program to make forward progress. Among the target functions and args were kill() and SIGTRAP, which served as one of the state machines’ clocks (the other one being the poll loop). This made using a debugger almost impossible, especially considering that the slightest desynchronization between the state machines caused args and functions to be mismatched, often generating kill(-1,SIGSTP) and kill(getppid(),SIGKILL). One day I introduced a stupid bug somewhere in the code. It took me a full day simply to locate it. While I was proud of the level of protection achieved this way, I definitely lost trust in my ability to keep coding in that direction and to maintain that code…
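The comment does not include code, so the following is only a loose Python reconstruction of the general idea, with hypothetical choices throughout. Two linear congruential state machines each feed on the other's last output: one selects a function and the other its argument. Harmless arithmetic functions stand in for kill() and the signal numbers, and all constants are arbitrary. A single spurious step in one machine (`desync_at`) is enough to mismatch functions and arguments from that point on, which is exactly what makes such code so painful to debug.

```python
# Hypothetical, harmless stand-ins for the real targets (kill(), signals, ...).
def add(x):
    return x + 1


def double(x):
    return x * 2


def negate(x):
    return -x


FUNCS = [add, double, negate]
ARGS = [3, 5, 7, 11]


class Machine:
    """A tiny pseudo-random state machine (arbitrary LCG constants)."""

    def __init__(self, seed, mul, inc):
        self.state, self.mul, self.inc = seed, mul, inc

    def step(self, other_output):
        # The next state depends on this machine's state AND the other's output.
        self.state = (self.state * self.mul + self.inc + other_output) % 65536
        return self.state


def run(steps, desync_at=None):
    """Run both machines in lockstep; optionally inject one extra step."""
    a, b = Machine(1, 75, 74), Machine(2, 77, 3)
    out_b = 0
    trace = []
    for i in range(steps):
        out_a = a.step(out_b)
        if i == desync_at:
            b.step(out_a)  # a spurious extra step: the machines drift apart
        out_b = b.step(out_a)
        func = FUNCS[out_a % len(FUNCS)]   # machine A picks the function
        arg = ARGS[out_b % len(ARGS)]      # machine B picks its argument
        trace.append((func.__name__, arg, func(arg)))
    return trace


print(run(8)[2])               # ('add', 7, 8)
print(run(8, desync_at=2)[2])  # ('add', 3, 4): same function, wrong argument
```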

In the implementation presented here I wouldn’t want to be the one having to bisect between commits to try to spot an off-by-one which produces random results!

Maybe it’s possible to disable the secure system during development, and only enable it for releases.

There used to be programs that ran while zipped or compressed. Write the program, debug the program, then try running it with encrypted compression.

Let the hackers chew on that.

Another method: tokenize your code, just as Sinclair tokenized Sinclair BASIC so that programs took less storage space. His BASIC was interpreted at run time.

Tokenization is just simple substitution encryption. Easy to attack.
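A minimal sketch of why a fixed token table behaves like a substitution cipher: one line of known plaintext reveals the token for every keyword it contains, and that mapping then decodes any other program. The token byte values below are made up for illustration (they are not Sinclair's real codes), and the function names are hypothetical.

```python
# Hypothetical keyword -> one-byte token table (made-up values, not Sinclair's).
TOKENS = {"PRINT": 0xF5, "GOTO": 0xEC, "IF": 0xFA, "THEN": 0xCB, "LET": 0xF1}


def tokenize(line: str) -> list:
    """Replace each keyword with its token byte; keep other words as text."""
    return [TOKENS.get(word, word) for word in line.split()]


def recover_table(known_plain: str, tokenized: list) -> dict:
    """Known-plaintext attack: align a plaintext line with its tokenized
    form and read the substitution table straight off."""
    table = {}
    for word, tok in zip(known_plain.split(), tokenized):
        if isinstance(tok, int):
            table[tok] = word
    return table


def detokenize(tokenized: list, table: dict) -> str:
    # Tokens missing from the recovered table are shown as raw numbers.
    return " ".join(table[t] if t in table else str(t) for t in tokenized)


known = "IF A THEN PRINT A"
table = recover_table(known, tokenize(known))
secret = tokenize("IF X THEN PRINT Y")
print(detokenize(secret, table))  # IF X THEN PRINT Y
```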

>There used to be programs that ran while zipped or compressed.

>Write the program, debug the program, then try running it with encrypted compression.

Unless your machine somehow runs encrypted bytecode, it has to extract that code before actually running it.

This means that (a) your plaintext code will be in memory at some point, and (b) you have to have plaintext code for the decompressor in memory somewhere. Personally, I would just attack the decompressor.
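A minimal sketch of point (b), assuming a hypothetical self-unpacking scheme where the program is compressed with zlib and then XORed with a key. Whatever the scheme, the key and the unpacking routine must ship with the loader so the program can run, so an attacker who reads the loader needs no cryptanalysis at all. The key value and function names are illustrative assumptions.

```python
import zlib

# Hypothetical key embedded in the loader; it must ship with the program.
LOADER_KEY = b"\x5a\xc3\x17\x88"


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR data with a repeating key (its own inverse)."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def pack(program: bytes) -> bytes:
    """Compress, then 'encrypt' the program body."""
    return xor_bytes(zlib.compress(program), LOADER_KEY)


def loader_unpack(blob: bytes) -> bytes:
    # Before anything can run, the loader has to produce plaintext code.
    return zlib.decompress(xor_bytes(blob, LOADER_KEY))


def attacker_recover(blob: bytes, stolen_key: bytes) -> bytes:
    # No cryptanalysis needed: the key was lifted from the loader itself.
    return zlib.decompress(xor_bytes(blob, stolen_key))


if __name__ == "__main__":
    program = b"print('secret algorithm')"
    blob = pack(program)
    print(attacker_recover(blob, LOADER_KEY) == program)  # True
```

Dumping memory after `loader_unpack` returns (point (a)) would work just as well; reusing the loader's own key is simply the shortest path.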

White Star Line claimed that the Titanic was “practically unsinkable”.

The University of Michigan News’ preposterous headline

“Unhackable: New chip stops attacks before they start”

should be given even less credence.

Disasters (other than those caused by poor planning or a failure to maintain integrity) almost always arise from weaknesses that were either not anticipated or considered too improbable.

What happens if crackers somehow gain access not to the program assets or data but to the randomizing process itself, and corrupt the encryption process so that the “program assets” are corrupted and cannot be decrypted?

Seems like a great way to stop some vital service where this approach might be thought to be the “perfect” solution.

Some entertaining thoughts there 🙂

I am reminded of the MCU running on Amlogic, Raspberry tart and others, that controls boot, CPU clock reported and more. How has hacking of them progressed?

I hear what you’re saying, and I suspect that if this ever went mainstream, researchers would eventually find situations where part of the churn state was leaked, making the whole thing useless. On the other hand, considering how useless people are at using even basic protections that have been available in their software for decades, moving these efforts right into the chip seems like a good idea to me.