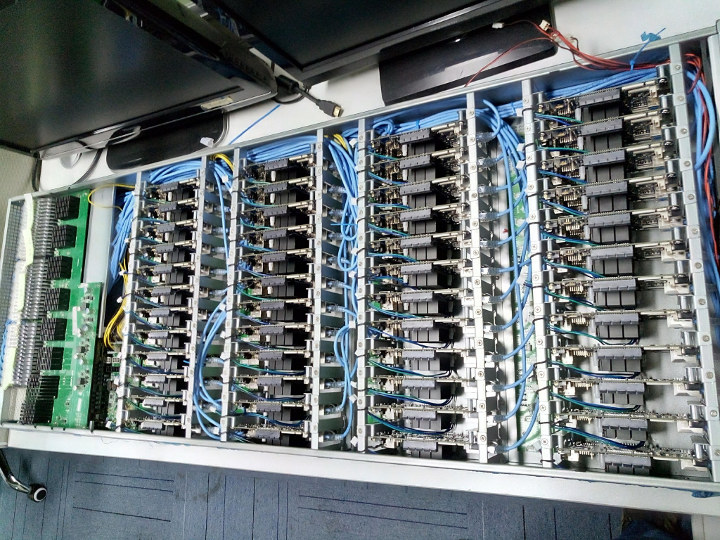

Board clusters are always fun to see, and PINE64 has shared pictures of two RockPro64 clusters with 48 and 24 boards respectively, neatly packed into partially custom enclosures. The 48-node cluster will feature a total of 288 cores, including 96 Arm Cortex-A72 cores and 192 Cortex-A53 cores, as well as 192GB of LPDDR4 RAM.
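As a quick sanity check of those totals, here is a minimal sketch of the arithmetic. The RK3399 on each RockPro64 has two Cortex-A72 and four Cortex-A53 cores; the 4GB of RAM per board is an assumption inferred from the 192GB total, and `cluster_totals` is just an illustrative helper name.

```python
# Per-board figures: the RK3399 has 2 Cortex-A72 + 4 Cortex-A53 cores.
A72_PER_BOARD = 2
A53_PER_BOARD = 4
RAM_GB_PER_BOARD = 4  # assumption: inferred from 192GB across 48 boards


def cluster_totals(boards):
    """Return (A72 cores, A53 cores, total cores, RAM in GB) for a cluster."""
    a72 = boards * A72_PER_BOARD
    a53 = boards * A53_PER_BOARD
    return a72, a53, a72 + a53, boards * RAM_GB_PER_BOARD


for boards in (48, 24):
    print(boards, "boards:", cluster_totals(boards))
```

Under the same assumptions, the 24-node cluster would come to 144 cores and 96GB of RAM.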

Low-cost development boards may be seen as toys by some, so it’s interesting to learn that PINE64 plans to move their complete website infrastructure, including the main website, a community website, forums, wiki, and possibly IRC, to the 24-node cluster, while it seems the 48-node cluster may be used for their build environment.

The company has just completed the assembly of the clusters, and has not disclosed the full technical details just yet. However, a progress report may be written in due time.

Once the migration is done, and everything works as it should, it will be a good showcase of the stability of RockPro64 boards and accompanying software.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager and starting to write daily news and reviews full time later in 2011.


At least they’re dogfooding their own products…

This is just too funny: https://twitter.com/whitequark/status/1111246078773465088

Pine64’s response is just too perfect.

That’s why eating your own dog food is important. It’s a win-win scenario : )

Where are the fans? Densely packed passive cooling in a closed enclosure? Never mind, they are in the front.

Yep, and the heatsinks are of the proper type for a front-back airflow. Good design, and as @TLS mentioned, a proper case of self dogfooding. More companies should be doing this.

On a tangent, Packet.com just announced their 32-core 3.3GHz Ampere servers, $1/h — c2.large.arm.

This twitter ‘dialogue’ made my day: https://twitter.com/whitequark/status/1111246078773465088

(since there is no ECC RAM possible with RK3399 I would be really surprised if someone starts some serious testing on DRAM data integrity)

ECC RAM is a severely overlooked subject in today’s desktop and (less so) server computing. RK3399 being a mobile chip, the situation is clearly left to sw, but in other environments where ECC *is* an option, people often blissfully neglect it. And they shouldn’t.

Now that we mention it, I don’t think I have a device which has 8GB or more that doesn’t have ECC, whether x86 or arm : ]

> RK3399 being a mobile chip, the situation is clearly left to sw

You can’t ‘leave this to sw’ since, if a bit flip manipulates a pointer, an application or even the kernel might crash. That’s the purpose of an ECC RAM memory controller: repairing single bit flips before they can cause harm, and at least reporting uncorrectable bit flips (2 or more bits with primitive/standard ECC implementations) so that measures can be taken once the number of bit flips increases, which probably indicates a faulty memory module.
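To illustrate the "correct single bit flips, report double bit flips" behaviour described above, here is a toy sketch of a SECDED code in Python: an extended Hamming(8,4) code protecting 4 data bits. Real ECC memory controllers do this in hardware, typically with 8 check bits per 64-bit word; the function names `encode` and `decode` are made up for this illustration.

```python
def encode(nibble):
    """Encode 4 data bits into an 8-bit SECDED codeword (list of 0/1)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    code = [0] * 8  # index 0: overall parity, indices 1..7: Hamming(7,4)
    code[3], code[5], code[6], code[7] = d
    code[1] = code[3] ^ code[5] ^ code[7]  # parity over positions 1,3,5,7
    code[2] = code[3] ^ code[6] ^ code[7]  # parity over positions 2,3,6,7
    code[4] = code[5] ^ code[6] ^ code[7]  # parity over positions 4,5,6,7
    code[0] = sum(code[1:]) % 2            # makes the total parity even
    return code


def decode(code):
    """Return (nibble, status); status is 'ok', 'corrected' or 'uncorrectable'."""
    code = list(code)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos      # XOR of set positions is 0 for a valid word
    odd_parity = sum(code) % 2   # 1 means an odd number of bits flipped
    if syndrome == 0 and not odd_parity:
        status = "ok"
    elif odd_parity:
        code[syndrome] ^= 1      # single flip: syndrome is its position
        status = "corrected"
    else:
        return None, "uncorrectable"  # even parity, bad syndrome: 2 flips
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return nibble, status


word = encode(0b1011)
word[6] ^= 1                     # one bit flip: repaired
print(decode(word))
word[2] ^= 1                     # a second flip: only reported
print(decode(word))
```

The second case is the one that matters for monitoring: the data cannot be repaired, but the error is at least visible instead of silently corrupting a pointer.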

You can surely do error detection in sw, particularly on pointers. All it takes is a couple of spare bits, or expanding the ptr type. Yes, sw has to be written with that in mind, which is why I say ‘left to sw’: whether the latter addresses the problem or not is up to its authors.

> You can surely do error detection in sw, particularly on pointers

How should a CPU ‘detect’ that it is jumping to the wrong address?

You read the pointer from non-ECC RAM, you verify it’s ok (perhaps it’s not: panic, or do costly corrections), and you keep it in ECC cache. Yes, for the case of direct jumps that would imply a self-modifying code technique. For the case of indirect jumps (dispatch tables, etc.), those are just more data.
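A minimal sketch of that read-then-verify idea, in Python for brevity: check bits are stored in the spare upper bits of a 64-bit word and verified before the address is used. The 48-bit address width, the XOR-fold checksum, and the names `tag_pointer` and `check_pointer` are all assumptions made for this illustration, not anything a real kernel or toolchain provides.

```python
ADDR_BITS = 48                       # assumed usable address width
ADDR_MASK = (1 << ADDR_BITS) - 1


class PointerCorruption(Exception):
    """Raised when the stored check bits do not match the address."""


def checksum(addr):
    """Fold a 48-bit address into 16 check bits (XOR of its 16-bit chunks)."""
    return (addr ^ (addr >> 16) ^ (addr >> 32)) & 0xFFFF


def tag_pointer(addr):
    """Pack the address and its check bits into one 64-bit word."""
    if addr & ~ADDR_MASK:
        raise ValueError("address does not fit in 48 bits")
    return (checksum(addr) << ADDR_BITS) | addr


def check_pointer(word):
    """Verify a word read back from memory and return the bare address."""
    addr = word & ADDR_MASK
    if (word >> ADDR_BITS) != checksum(addr):
        raise PointerCorruption(hex(word))  # panic, or attempt a recovery
    return addr


stored = tag_pointer(0x7FFF_DEAD_BEEF)
assert check_pointer(stored) == 0x7FFF_DEAD_BEEF

flipped = stored ^ (1 << 13)         # simulate a single bit flip in DRAM
try:
    check_pointer(flipped)
except PointerCorruption:
    print("bit flip detected before the pointer was used")
```

This scheme catches any single bit flip in the word but cannot repair it, and some double flips (two bits in the same 16-bit column) go unnoticed; it also costs a check on every load, which hardware ECC does not.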

“RK3399 being a mobile chip”

ROFL!!!! These things would need active cooling to be called a mobile chip, they run hot as hades and can under no circumstances be considered something you can passively cool if you have any kind of system load. Sure, if you compare with Intel CPUs, they’re mobile.

Mobile as in something that goes into passively-cooled notebooks & tablets. I happen to have an ASUS chromebook flip c101pa with RK3399. I haven’t seen it throttling yet, and it’s not like I haven’t been pushing its CPU and GPU (though separately so far — I might push them simultaneously soon, though, once google fix one major GPU stack issue). Yes, it does have a proper copper-based (i.e. expensive) thermal solution.

Maybe they have made a better PCB design than FriendlyARM, as we’ve burnt through a few of their boards for a project. Even with a custom, much larger cooler and a fan, they get scorching hot. It’s not a friendly chip at all when it comes to the heat it produces.

In general, SoCs hosting 28nm CA72s @ ~2GHz are prone to high temps (even before factoring in fancy GPUs), but 2x CA72s can be kept in check with the right thermal design, even when passively cooled. IMO it’s usually a matter of what burden on your BOM you’re willing to take for TDP.

Big, little SoC are not designed for always on at full throttle. They are designed for running mostly on the lower cores’ GHz.

Over clocking to always big GHz on, burns chips.

> Big, little SoC are not designed for always on at full throttle.

Yes, they are. Read below.

> Over clocking to always big GHz on, burns chips.

Nobody is discussing overclocking here. RK3399 OP1 (the kind that goes into chromebooks and tablets) are specced at 2GHz for the CA72 cores and 1.5GHz for the CA53 cores. Of course all cores run under ‘ondemand’ power governor, so that when there’s no load cores automatically throttle down, just like your normal desktop. But I can run a SIMD matmul load on all big cores (the kind that loads cores the most) sustaining nominal performance for ~ten minutes (as long as I’ve bothered to monitor) — no throttling whatsoever on the big cores.

TLS burning through boards

Is my reply. You may consider it normal, IMO it is not.

> TLS burning through boards

FriendlyELEC boards. The NanoPC-T4 is so far the RK3399 board with worst heat dissipation I encountered, FE’s heat dissipation attempt with ‘isolating’ thermal pad and miniature heatsink is just a joke (also the board shows a strange high idle consumption of 4W — but maybe that was just my early engineering sample).

This is reality with RK3399 (running at 2.0/1.5 GHz): my RockPi 4b was used in a pure CPU number crunching project for 17 days between 75°C and 80°C without any crash (chemistry simulation stuff in this case).

> Over clocking to always big GHz on, burns chips.

BS. Even those RK3399 that are not ‘specced’ for operation at 2.0/1.5GHz work flawlessly at these clockspeeds on full load but just waste more energy and produce more heat (the ‘better’ RK3399 working stably at lower vCore voltages are sold separately as RK3399 OP1 to Google for the Chromebooks).

You need a great performing heatsink (and Pine64 has one) directly attached to the SoC and you’re done: https://github.com/ThomasKaiser/Knowledge/blob/master/articles/Heatsink_Efficiency.md (unfortunately FriendlyELEC’s approaches in this area are inferior –> crappy thermal pad combined with either tiny heatsink or giant heatsink with huge own thermal mass backfeeding heat into the SoC/board)

Don’t you use a laptop?

I use ~3 notebooks, each of them hosting 4GB LPDDR. My old decommissioned amd notebook had 8GB of non-ECC DDR3. I haven’t hit a RAM bottleneck on the current notebooks yet, and they’re used mainly for development. Actually, any of the current notebooks runs circles around the old one.

Cannot tell if this is a joke or not. Run critical infrastructure without ECC? Combining that with off-brand Spectek memory? If they haven’t got a clue, now they get to find out how bit-unstable the LPDDR memory they use on those boards is, via random crashes and invisible corruptions. This is probably the absolute worst combination in application. “Amazing” engineering…*shakes head*

LPDDR optimizes the hell out of power by reducing refresh. This takes all of the margin designed into DDR and throws it out the window. It’s perfect for consumer and battery-powered devices but terrible for data retention and correctness. This is why your phone asks you to reboot it after a week. When LPDDRing, you should only use Samsung/Hynix/Micron if you remotely care about reliability.

Even distributed fault-tolerant applications need ECC, for God’s sake.

Speaking of Spectek, do they even produce their own LPDDR4, or are they just packaging samsung/micron/hynix dies?

They’re a Micron subsidiary so most of their stuff is Micron’s “value” stuff.

Web server just can ignore rare bitflips. As do all contemporary home and mobile appliances.

> Web server just can ignore rare bitflips

No, it cannot, depending on which bit flips, since the result can be an application or the kernel crashing.

It’s a little company website. Who cares if it crashes??

Just one power supply? Around 1000 watts of 12VDC there, wouldn’t it be a good idea to employ two or more power supplies?

since google also used cheap PC to run they huge system, it is not strange to run a (relatively) small web site on an ARM cluster. Having many more nodes provides a highly available service, if they use distributed computing and storage like Kubernetes (k3s is better for this kind of resource-limited device) and Ceph. So the hardware is cheaper, but it needs more software guys.

Google learned their lesson, though.

https://news.ycombinator.com/item?id=14206635

> since google also used cheap PC to run they huge system

They stopped this a long time ago for obvious reasons (see @megi’s link) so this is today just an urban myth or excuse for people not wanting to spend the additional bucks for DRAM integrity (that’s at least primitive ECC memory able to correct single bit errors and report multiple bit flips).

Now you just have no choice: buy costly specific “server hardware” — or have no ECC.

A long time ago I used ECC RAM in all my computers, until CPU and chipset makers dropped ECC support.

You can use ECC with pretty much any modern AMD processor. It’s only Intel who decided to make ECC a feature only worthy of servers and high end workstations!

I don’t see any serious storage, like an ssd or hdd.

Yep, that’s the 2nd problem besides DRAM integrity issues. If doing this with an RK3399, choosing boards with an M.2 key M slot to be used with NVMe SSDs would probably be a better idea. But the NanoPC-T4, for example, sucks when it comes to heat dissipation.