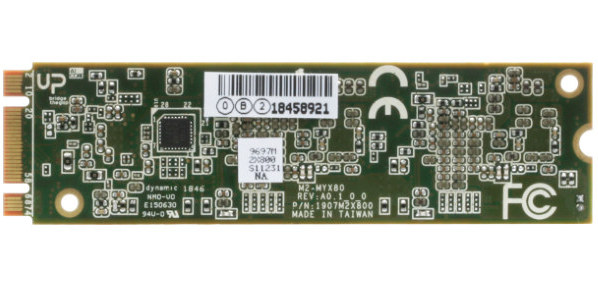

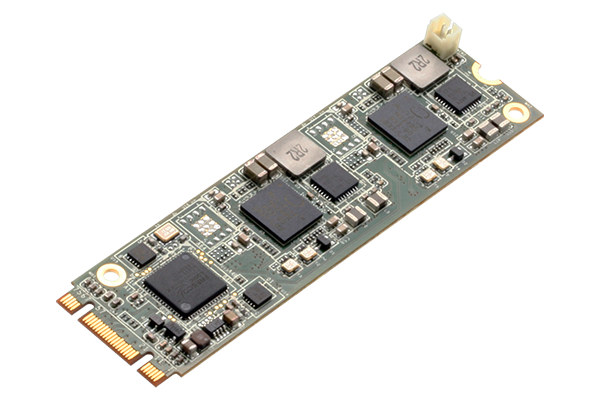

AAEON released UP AI Core mPCIe card with a Myriad 2 VPU (Vision Processing Unit) last year. But the company also has an AI Core X family powered by the more powerful Myriad X VPU with the latest member being AI Core XM2280 M.2 card featuring not one, but two Myriad X 2485 VPUs coupled with 1GB LPDDR4 RAM (512MB x2).

The card supports Intel OpenVINO toolkit v4 or greater, and is compatible with Tensorflow and Caffe AI frameworks.

AI Core XM2280 M.2 specifications:

- VPU – 2x Intel Movidius Myriad X VPU, MA2485

- System Memory – 2x 4Gbit LPDDR4

- Host Interface – M.2 connector

- Dimensions – 80 x 22 mm (M.2 M+B key form factor)

- Certification – CE/FCC Class A

- Operating Temperature – 0~50°C

- Operating Humidity – 10%~80%RH, non-condensing

The card works with Intel Vision Accelerator Design SW SDK available for Ubuntu 16.04, and Windows 10. Thanks to the two Myriad X VPU’s, the card is capable of up to 200 fps (160 fps typical) inferences, and delivers over 2 trillion floating point operations per second (TOPS).

The card works with Intel Vision Accelerator Design SW SDK available for Ubuntu 16.04, and Windows 10. Thanks to the two Myriad X VPU’s, the card is capable of up to 200 fps (160 fps typical) inferences, and delivers over 2 trillion floating point operations per second (TOPS).

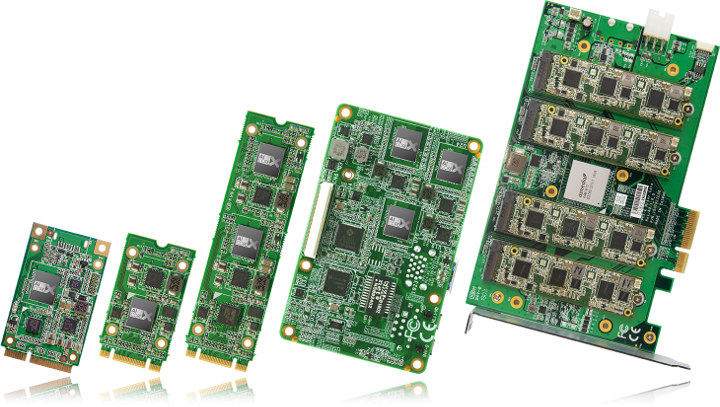

Pricing and availability are not known at the time of writing, but eventually it might be possible to purchase the card via the product page. The AI Core X product page reveals a total of 6 cards with Myriad X VPU are in the pipe from UP AI Core XM2242 M.2 card with one VPU, and up to UP AI Core XP 8MX PCIe x4 card with 8 Myriad X 2485 VPU’s and 4GB RAM.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Support CNX Software! Donate via cryptocurrencies, become a Patron on Patreon, or purchase goods on Amazon or Aliexpress

So bandwidth/latency between the host and the Myriad thing doesn’t seem to be that important, right? It’s always a single PCIe lane attached Fresco Logic USB3 host controller providing connectivity. On the XM2280 card using two USB3 connections instead of one on the others…

For this low-end devices usually a USB3 lane suffices — you wouldn’t pilot your tesla with that. In this case the M.2 is just a more robust USB port. Still it’s a notable upgrade over the original Movidius stick on a RPi over USB2 ; )

>So bandwidth/latency between the host and the Myriad thing doesn’t seem to be that important,

>you wouldn’t pilot your tesla with that.

USB3 latency is like 1ms right? Humans can pilot drones going at above 100km/h with only attitude hold (acro mode) via analogue video with between 10-30ms of latency and input with 10ms or more latency the other way.

> USB3 latency is like 1ms right? Humans can pilot drones going at above 100km/h with only attitude hold (acro mode) via analogue video with between 10-30ms of latency and input with 10ms or more latency the other way.

Humans do a lot of things solely off intuition, but most importantly, they don’t fly their drones in heavy traffic. Last but not least, humans don’t die if their drone crashed due to a split-second miss-judgement (unless they got hit by said drone). Here’s an article that has a few quotes by Musk just to give you a taste how tense things can be for the TPU in a car:

https://www.forbes.com/sites/jeanbaptiste/2018/08/15/why-tesla-dropped-nvidias-ai-platform-for-self-driving-cars-and-built-its-own/#425553806722

Mind you, the part that tesla replaced due to the former not quite meeting their requirements — nv’s PX2, is in a completely different league compared to the Movidius.

>Humans do a lot of things solely off intuition, but most importantly,

Which causes errors that need correction. If a human can work out a flight path and traverse a 3D path while constantly correcting themselves with 40ms or more of system latency on top of human reaction latency, video loss, lost input frames, human error etc then an automated fly by wire system shouldn’t have much of a problem with 1ms of latency from guidance updates over USB3. Actuators in a drone or a car are going to take longer than 1ms to do anything either way.

>they don’t fly their drones in heavy traffic.

If we want to be very literal. Yeah they do. People have done FPV runs through traffic in major cities in traffic.

If we want to be less literal people race multiple drones through wooded areas.

>Last but not least, humans don’t die if their drone crashed due to a split-second miss-judgement

The safety, regulatory etc stuff is all beside the point. Humans can drive with ~13ms of latency. They can pilot drones through 3D space with even more latency on top of that. 1ms of latency shouldn’t be a problem. You aren’t going to get a car to suddenly correct it’s course in less time eitherway.

> Actuators in a drone or a car are going to take longer than 1ms to do anything either way.

Latency accumulates. The fact you have a high-speed, high-inertia object to control (a car vs a drone — latter is high-speed, low-inertia) makes latency at decision-taking more important, not less important. It doesn’t matter if the autopilot correctly inferred to sharply change lanes if it was 1ms late to avoid impact.

> If we want to be less literal people race multiple drones through wooded areas.

Multiple drones =/= heavy traffic. The wooded areas is not much of a challenge — it’s stationary. Traffic is not. Also, obstacles in traffic are vulnerable too — in woods drone racing nobody is trying to save the trees from impact. That’s why drones often crash in those races, at virtually no cost compared to a car crash.

>It doesn’t matter if the autopilot correctly inferred to sharply change lanes

>if it was 1ms late to avoid impact.

As far as I can tell the frame rate of the cameras used for these applications is generally 60 FPS. Which gives you a frame every 16ms. So if 1ms is the difference between life and death it’s already a coin toss as you might not capture a frame of the hyper speed unicorn before it’s splattered all over your wind screen.

>The wooded areas is not much of a challenge

World class FPV pilot too eh? They should make you the next bond or something.

> As far as I can tell..

As far as I can tell you didn’t bother to read the article I linked.

For the record, a 2017 tesla autopilot used 8 cameras, 12 ultrasonic near-range sensors and a radar.

>As far as I can tell you didn’t bother to read the article I linked.

It didn’t say anything about latency of 1ms mattering. It didn’t even contain the word latency from what I remember of quickly doing a find in the page to find if it was relevant.

>For the record, a 2017 tesla autopilot used 8 cameras,

That’s what you should have called out in the article you linked. Anyhow the missing piece from that that I saw else where is that that number is total frames. So for the older system 200/8 = 25 fps or 40ms per frame. This article says the card can process 200fps peak so it seems like it could drive your 2017 Tesla.

The newer system can handle 2000 frames per second according to Elon*. It doesn’t actually say if it’s sampling that many frames but even if they can sample 250 frames per second from whatever camera sensors they are using that’s 4ms a frame. Which still isn’t sub-millisecond.

* note that Elon also thinks building a massive vacuum tube and ramming people down it is possible without killing everyone inside.

Erm

1. There’s only one PX2 in the autopilot. It needs to handle 8 cameras *plus* those sensors you conveniently omitted. Tesla determined PX2’s estimated 200fps was not sufficient in the ideal case (even though they produced road-legal autopilots with it). Has it crossed your mind that if the 12TOPs PX2 was deemed on the low side, another 2TOPs device would not even be considered? But please, do call tesla and let them know how they’ve been wasting their money on parts trivially replacable by a Movidius stick.

2. You contine to not comprehend elementary latency vs throughput characteristics of a channel, which explains your obsession with fps — you can have a 1000fps camera but accumulate 1h latency between capturing of frame N and reacting to frame N (hint: the fps is the information throughput of the channel, not its latency). A 1ms USB would only pile an unnecessary 1ms on a mission critical path.

Guys, you’re talking past each other, you’re both right but on different things.

Yes, latency does accumulate. No, 1ms latency added to an *image* is not perceptible as it corresponds to roughly 1/16 of an image sample, which means that if this ms would matter, then you have something wrong with the chosen camera since it would need to detect changes that can appear way faster than it sees. Hint: the event could appear and disappear 8 times between two frames without the camera even being aware of it.

However, what is also true is that these devices are *coprocessors*. So in practice you don’t just send the image once to this device and wait, you call one function running on the device to perform one operation on the image, then you call another one etc. The latency may become a problem once you’re calling the device 10-20 times to refine some processing, detect features, and pass some extra sensors info depending on the detected features. If you call it 20 times, you have 20 times the processing time plus 20 times 1 ms and this can become quite noticeable.

This is probably also why there is now a huge race to performance in such devices : it can be cheaper to ask them to perform tons of useless calculations then decide which one you keep instead of iterating. And a brain works more like this. You’re not actively looking for road signs when you drive, but you notice them as features present in the landscape until you care. Just like you notice advertisement.

@willy, that’s the point about latency accumulation, though — that 1ms is not your upper bound, it’s your lower bound. Your upper bound is whatever you latency was sans the USB trip, *plus* the USB trip, which of course is 2x 1ms, as the TPU is a coprocessor, as anybody would notice.

There’s a reason inference at the edge is the big thing that it is — if autopilots et al were only a matter of throughput — hey, we can watch 720p@60 youtube videos on a no-big-deal connection, and my ping to the closest youtube gateway is ~1ms. Those morons at tesla should be investing in datacenters, not in high-efficiency TPU R&D /dgp’s voice. Yet tesla, as everybody else in the business, do the opposite — they invest in high-efficiency TPUs. The only reason they do that is latency and latency alone. In this regard, putting your TPU behind an extra 1ms of latency is not a sought effect, regardless of the throughput.

That’s what I’m saying, it’s mainly a matter of number of calls per operation. Past a certain point it starts to matter.

It’s the same principle which led to the apparition of web services a long time ago : instead of requesting your database over the inter-DC link 100 times from the application, you send one “complex” web request to the other side, which asks these 100 questions to the database and returns the computed response. This way you pay the round trip only once.

There will likely be a new round of cloud marketing bullshit around NPUs, real time image processing and such. I’m curious to see what APIs vendors will propose to mitigate the awful latencies over the net! At least for the first time the vendors will not be able to redefine the references to suit their capabilities, they won’t be able to claim that you can’t drive over a pedestrian in 50ms! That might become interesting 😉

Indeed. I’ll be waching what google do with stadia, but given that so far it appears to be just a dumb streaming service, i.e. no smart processing at the edge, just your inputs going to the datacenter, and you getting back a framebuffer ala VNC, I expect some competitive genres to be outright unplayable on it, as anti-latency heuristics go only so far. Then again, google have the capacity to cover the world, so a datacentre per city should easilly solve that.. Just kidding ; )