Amazon has developed AWS Graviton processors, which are optimized for cloud applications and deliver power, performance, and cost improvements over their Intel counterparts. The processors feature 64-bit Arm Neoverse cores and custom silicon designed by AWS itself, and can be found today in Amazon EC2 A1 instances.

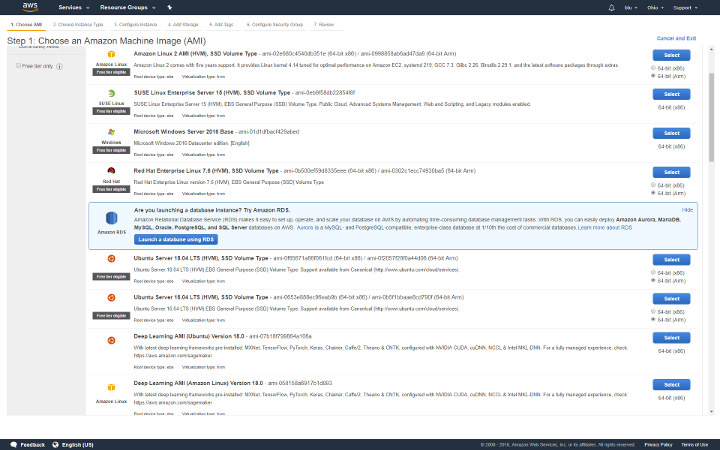

The screenshot above shows Amazon Linux 2, Red Hat Enterprise Linux 7.6, Ubuntu 18.04 Server, and Ubuntu 16.04 Server machine images having options for either 64-bit x86 or 64-bit Arm servers.

Amazon's Arm server instances are particularly suitable for applications such as web servers, containerized microservices, caching fleets, distributed data stores, as well as development environments. Amazon further explains:

A1 instances are built on the AWS Nitro System, a combination of dedicated hardware and lightweight hypervisor, which maximizes resource efficiency for customers while still supporting familiar AWS and Amazon EC2 instance capabilities such as EBS, Networking, and AMIs. Amazon Linux 2, Red Hat Enterprise Linux (RHEL), Ubuntu and ECS optimized AMIs are available today for A1 instances.

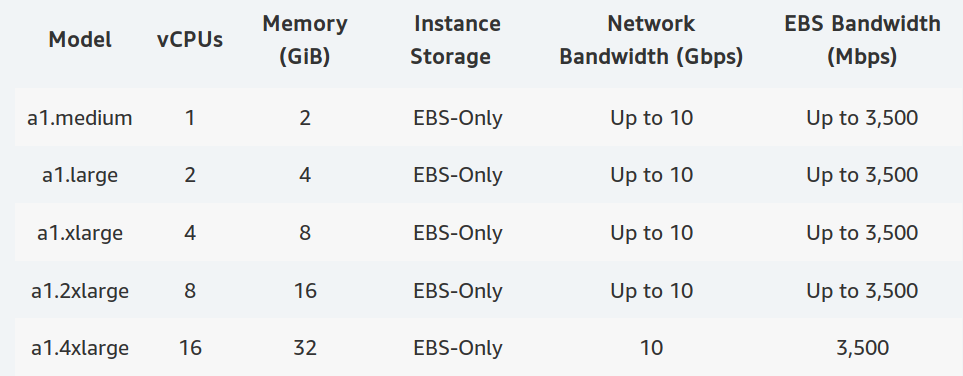

The table below shows available A1 instances, and you can start small with one virtual CPU and 2GB RAM, and scale up to 16 vCPUs and 32GB memory.

Amazon EC2 A1 instances are currently available in the US (Northern Virginia, Ohio, and Oregon), as well as in Europe via Amazon’s datacenter located in Ireland. You can get started via the A1 instances page on Amazon.

Thanks to blu for the tip.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

The big question with ARM servers is pricing. They could be cheaper to run and cheaper to buy but neither of those are of concern to a cloud customer so it’s all down to the hourly price. I don’t think AWS have priced it right since for instance a 16 core 32GB RAM ARM instance is coming in at $0.408 while an m5.2xlarge which has half the x86 cores but the same RAM is cheaper at $0.384.

It’s too late to edit my comment but I’m assuming that 1) ARM cores have at most the performance of half an x86 core and 2) that things like network, storage throughput can be ignored, which is definitely a false assumption.
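To make the comparison in the two comments above concrete, here is a rough Python sketch of the arithmetic. The prices and sizes are the ones quoted in the comment; the dictionary layout and function names are illustrative only, and the calculation ignores network and storage throughput, just as the commenter notes.

```python
# Hourly on-demand prices and sizes as quoted in the comment above.
# The structure and names below are illustrative, not an AWS API.
INSTANCES = {
    "a1_16vcpu": {"vcpus": 16, "ram_gb": 32, "usd_per_hour": 0.408},
    "m5.2xlarge": {"vcpus": 8, "ram_gb": 32, "usd_per_hour": 0.384},
}


def price_per_vcpu(spec):
    """Hourly price divided by the number of vCPUs."""
    return spec["usd_per_hour"] / spec["vcpus"]


def break_even_core_ratio(arm, x86):
    """Per-vCPU performance ratio (ARM / x86) at which both instances
    cost the same per unit of CPU work. Above this ratio the ARM
    instance is cheaper per unit of work; below it, the x86 one is."""
    return price_per_vcpu(arm) / price_per_vcpu(x86)


arm = INSTANCES["a1_16vcpu"]
x86 = INSTANCES["m5.2xlarge"]
ratio = break_even_core_ratio(arm, x86)
print(f"ARM price per vCPU: ${price_per_vcpu(arm):.4f}/h")
print(f"x86 price per vCPU: ${price_per_vcpu(x86):.4f}/h")
print(f"Break-even per-vCPU performance ratio: {ratio:.3f}")
```

Under these numbers the break-even point is a little above one half, so with the stated assumption that an ARM core delivers at most half the performance of an x86 core, the x86 instance comes out slightly ahead on CPU work per dollar.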

Overall just having a big provider hosting ARM servers is a good thing, I’m only saying they’re not appealing to architecture-agnostic people like website hosters as they’re not cheaper than x86.

> 1) ARM cores have at most the performance of half an x86 core

For some things — yes (e.g. avx vs neon), for others — nope. Keep in mind the crux of the performance issue for arm chips (CA72-75) as found in phones and SBC’s is their low clocks and small caches compared to desktop parts — that issue is largely gone in server parts.

> 2) that things like network, storage throughput can be ignored, which is definitely a false assumption.

A very false assumption indeed : )

>Overall just having a big provider hosting ARM servers is a good thing,

Why? Just because it’s ARM?

Yes. More competition – the better.

>Yes. More competition – the better.

But it’s not competition. It’s just another instruction set at around the same price with probably lower performance. Other than some weird hate boner for x86 I can’t imagine why you’d even care.

Price-performance is better as per Amazon engineers (not by much, though).

Other than some fetish for x86, people want better performance and instruction sets matter a lot in the work-per-clock and work-per-watt performance metrics (if they didn’t Intel wouldn’t be trying to patch x86 with a myriad of extensions in the first place). Hope that answers your question.

>Price-performance is better as per Amazon engineers (not by much, though).

It doesn’t seem that different when you look at the pricing. They seem to have intentionally made the ARM and x86 offerings slightly different so you can’t do a like for like comparison but if you look at the on-demand price for the smallest a1 instance it’s more expensive than a few of the x86 instances that have more memory and vcpus.

>Other than some fetish for x86,

Much like this weird fetish for ARM that seems to be directly linked to a weird hate boner for x86?

>people want better performance and instruction sets

>matter a lot in the work-per-clock and work-per-watt performance metrics

If you’re running stuff on AWS you probably don’t care all that much about details like that. The whole point of AWS is so that you can fire all of your admins before having to explain to your CEO that Amazon is bleeding you dry but you can’t migrate away from it because your stack is now riddled with Amazon’s SaaS.

>(if they didn’t Intel wouldn’t be trying to patch x86

>with a myriad of extensions in the first place).

Why is adding extensions a bad thing? With ARM you have a bunch of different incompatibilities between revisions of the cores, optional extensions, proprietary co-processors for everything the main CPU is too weak to handle.

> Much like this weird fetish for ARM that seems to be directly linked to a weird hate boner for x86?

I’m personally interested in the higher efficiency at scale that you can get from using lower power & low cost chips. Intel is specialised on the high end but its low-end offerings are still higher than what you can find in the ARM world, and don’t always compete well. Concrete example: on the very low end, you’ll find Atom processors, which are roughly 1.5 times slower than the RK3399, for roughly twice the price per board. Then you scale a bit higher and you’ll find the GeminiLake Celeron which will be 1.5 times faster than the RK3399 for 3 times the price per board. If you’re not interested in performance (like most people using clouds are), it becomes possible to achieve very dense capacity using such low-power, middle-end SoCs for a small part of the price it would cost on x86.

It definitely isn’t for everyone. But there is a market for this.

Conversely, ARM-based chips are not yet interesting for the middle to high end, because prices rise quickly and power usage as well. If you need a 3 GHz A76 for a certain workload, it probably isn’t the right solution at the moment, at least until you find server chips offering 16 of these cores etched at 10nm or less. I’m pretty sure that an underclocked low-end i5 could cost less and draw less power while achieving the same performance per core.

While I’m technically interested in seeing ARM succeed on the server (for the vast majority of low power workloads), I’m worried for them that once Intel feels threatened, they start to fight back and produce really low-end chips to compete in ARM’s garden. It would be nice for competition but not for the long-term survival of an alternative offering. I prefer to see a small overlap between their solutions, this way I can choose the one which fits my use case without my use case being imposed by the vendor.

I totally get using ARM in the embedded and mobile spaces. Even if you don’t like ARM for some reason there aren’t many alternatives. Want an MCU with a decent open toolchain, and debuggers that don’t cost $300 and only work with one chip? You want ARM. Want a chip for your mobile phone, STB etc.? It’s ARM or nothing basically.

But… I’m not going to pay the same money or more for a cloud server that performs the same or even worse and doesn’t have the universal support that x86 has just because it’s ARM. Once it’s running Linux and a bunch of frameworks written in Python, Java whatever it could be millions of Z80s wired together on a breadboard for all I care as long as it fits my performance/cost requirements.

I can see how this might be good for Amazon. Presumably with custom chips they can fit many many more instances in the same space/power/maintenance footprint than they can with off the shelf parts from Intel. Thinking that this is some sort of f.u to X86 or creates “competition” (How exactly does Amazon compete with itself?) is bizarre.

They compete with Intel, so Intel cannot keep them in a headlock. Otherwise Intel pulls your string and you have to dance.

>They compete with Intel, so Intel cannot keep them in a headlock.

Amazon competes with Intel? Amazon won’t be selling these processors to the general public or even if they did in the volumes that Intel would care about and the prices of their ARM instances aren’t massively different to the X86 ones so what are they competing on?

That is not a like for like comparison.

Amazon gains knowledge and experience, and the profit that would be Intel’s goes back into Amazon’s Arm development.

Just as Apple has replaced many suppliers with its own developed tech, after using the suppliers’ tech.

People just defend Intel because they fear for their Intel hardware based jobs. Worth remembering many laughed at the first iPad as just a large phone, but look at the market it now has. Arm claims next year’s (2019) Neoverse cores will be 30% better, and 30% better again the year after.

> Amazon won’t be selling these processors to the general public

Who knows? They acquired Annapurna Labs back in early 2015 but still continue to sell their ARM based router/storage/NAS SoCs to respective vendors. QNAP just recently showcased a new NAS based on also new Alpine AL-324.

> It doesn’t seem that different when you look at the pricing.

That was an AWS engineer’s estimate in a private conversation at the current AWS event, for some workload they had in mind. I haven’t done my own testing yet. Keep in mind a vCPU on EC2 is a logical core on Xeon, and a physical core on A1.

> Much like this weird fetish for ARM that seems to be directly linked to a weird hate boner for x86?

Funnily enough, it was you who brought the boners narrative to this thread. In reality there’s no ‘one ISA fits them all’ in this universe; depending on a variety of factors, some ISAs (read: their implementations) excel at tasks where others flounder. The elementary ‘Why not run it on an x86?’ has an equally elementary answer: ‘Because something else might run it better’. IFF it runs best on x86, sure, keep running it on x86. The insistence on running it on x86 no matter what would be the equivalent of a fetish.

>If you’re running stuff on AWS you probably don’t care all that much about details like that.

Everybody cares, be that indirectly, about ‘details like that’; that underlines the economies of the very infrastructure. Example: where are the x86 smartphones? Did customers care about the ISA in their smartphones? Nope, they didn’t. And yet, x86 in smartphones has gone the way of the dodo, even though Intel tried their best with the Atoms.

> Why is adding extensions a bad thing? With ARM you have a bunch of different incompatibilities between revisions of the cores, optional extensions, proprietary co-processors for everything the main CPU is too weak to handle.

I didn’t say extensions are a bad thing per se; I said _the_myriad_ of extensions on x86, which implies the fundamental ISA is not quite adequate in this day and age. Just like IA-32 was not adequate at the time when AMD had to redo it for 64-bit. As for why ISA fragmentation is a bad thing: that’s self-explanatory.

BTW, I’d love to hear about those different incompatibilities between revisions of armv8 cores.

>That was an AWS engineer’s estimate in a private conversation at the current AWS event

And everyone knows that AWS’ pricing is all over the place and often comes out well beyond what you calculate. Try paying thousands of dollars in unexpected per-message fees for MQTT keepalives. :p

>In reality there’s no ‘one ISA fits them all’ in this universe

Most people in this universe don’t care anymore. It could be a SISC or cluster of 68Ks running on a massive FPGA in a parallel universe. As long as it runs whatever high level language their stack uses they don’t notice or want to know.

> ISAs (read: their implementations) excel at tasks where other flounder.

No one cares if their whole stack is running on top of Python or Java.

>‘Because something else might run it better’.

What does better mean here? Faster? Cheaper? These ARM instances don’t seem cheaper. The lowest one is more expensive than many of their X86 instances with more vcpus/memory.

To prove faster you’d need to benchmark it for your specific application, but consider that for most of the languages etc. you’ll be using, X86 is the de facto standard.

>that underlines the economies of the very infrastructure.

And that’s why people run stuff on AWS instead of getting equipment from Dell, HP etc or getting a real server with a core i7 and 32GB of RAM from somewhere like Hetzner for less money.

I just want to run my application. I don’t want to know about processors, RAM, disk RPMs.

>Example: where are the x86 smartphones?

I thought we were getting excited about this Amazon thing? What do smartphones have to do with any of this?

>that implies the fundamental ISA is not quite adequate at this day and age.

Really? Don’t you think it implies that some problems (i.e. the type of problems SIMD instructions were added for) aren’t easy or fast to implement out of generic instructions, and thus having a dedicated extension or co-processor helps? If anything this is more of a concern for ARM where the generic processor isn’t running at 4GHz hence there are tons of weird video, crypto etc co-processors bolted on.

>Just like IA-32 was not adequate at the time when AMD had to redo it for 64-bit.

I sense we’re going off into the weeds here.

For the stuff you would run on the smaller ARM instances an IA-32 machine would probably be equally as capable.

>As per why ISA fragmentation is a bad thing — that’s self-explanatory.

Tell that to ARM.

>BTW, I’d love to hear about those different incompatibilities between revisions of armv8 cores.

The TRMs are up on ARM’s site if you really want to know. I was thinking of all of ARM’s stuff.

There are a few headaches in the 32-bit cores, like unaligned accesses causing a fault on some of them but not on others. Maybe it’s a lot better for their 64-bit stuff, but stuff like 32-bit support is optional and the crypto extensions are optional, so it’s still quite possible to have something that’ll run on one ARMv8 and not on another.
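As a rough illustration of guarding against optional extensions, the Python sketch below checks the feature flags that Linux reports in `/proc/cpuinfo` (a `Features` line on ARM, a `flags` line on x86). The function names and the sample text are hypothetical; the flag names `asimd`, `aes` and `sha2` are the ones Linux uses on AArch64, but which flags a given application actually needs is an assumption you would have to fill in yourself.

```python
def cpu_features(cpuinfo_text):
    """Return the feature flags from the first 'Features' (ARM) or
    'flags' (x86) line of /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() in ("features", "flags"):
            return set(value.split())
    return set()


def missing_features(cpuinfo_text, required):
    """List the required flags that the CPU does not advertise."""
    return sorted(set(required) - cpu_features(cpuinfo_text))


# Hypothetical AArch64 core that lacks the optional crypto extensions.
SAMPLE = "processor\t: 0\nFeatures\t: fp asimd evtstrm crc32 cpuid\n"

print(missing_features(SAMPLE, ["asimd", "aes", "sha2"]))
```

On a real Linux machine you would pass the contents of `/proc/cpuinfo` instead of `SAMPLE`, and fall back to a generic code path when the returned list is not empty.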

@dgp, I don’t see a point in explaining myself any further. enjoy your day.

AWS stopped charging for MQTT keepalives about a year ago. They admitted it was a problem and fixed it. We were bothered by it too and were sending our keep alive traffic to EC2 to avoid the charges. With the new pricing it is not an issue any more. In general their initial IOT pricing was way too expensive and was lowered across the board in the price change.

>AWS stopped charging for MQTT keepalives about a year ago.

yep. We started using it when it was in alpha/beta and hassled them about the pricing until they finally changed it.

> Just because it’s ARM?

Because some software optimizations that happened long ago on x86 might then happen sooner on ARM. Talking about NEON related stuff like the below:

* https://www.cnx-software.com/2018/04/14/optimizing-jpeg-transformations-on-qualcomm-centriq-arm-servers-with-neon-instructions/

* https://forum.armbian.com/topic/8161-swap-on-sbc/?do=findComment&comment=61682

I’m not sure why anyone would go for an ARM instance because someday you *might* get libraries that are optimised for ARM when you can go and get an x86 instance that already has optimised libraries or get an instance that has a GPU.

I was just talking about a slight hope that if some people, for whatever reason, choose those A1 instances, the still-missing optimizations might follow sooner, so that ARM systems other than such cloud instances can benefit from them too.

I think what will probably happen in this case is Amazon will deploy any optimisations that directly help them and the result will be in some hard to find tarball. Amazon’s embrace of open source seems to end as soon as the git clone has finished.

AWS’ open source commitment is all over the map and it varies hugely by group. For example AWS Amplify is totally open. https://aws-amplify.github.io/

Amazon claims up to 45% cost savings

https://www.cnx-software.com/2018/11/29/amazon-ec2-a1-arm-cost-savings/

A 45% saving really would be something, but without them saying how they managed it, it’s hard to tell whether the instances they are now using are actually cheaper like-for-like, or whether they were previously using instances that were bigger than they needed. Without a breakdown of where they actually saved money, they could have just pulled that value out of the air.

A bit of a non sequitur, but I remember using Scaleway’s ARM servers and discovering they didn’t support NEON instructions. That was an interesting thing to debug 🙂

IIRC, they were using a Marvell armv7 uarch which was stripped of neon.

Expect more from arm

“The chip designer on Oct. 16 unveiled its plans for a family of infrastructure-class compute platforms aimed at hyperscale cloud data centers to the network edge that are playing an increasingly important role in a rapidly growing distributed IT computing environment driven by the rise of the internet of things (IoT). Arm officials also introduced a roadmap for its Neoverse solutions that will begin this year with the 16-nanometer Cosmos platform, continuing next year with the 7nm Ares offerings.

That will be followed by another 7nm+ platform, dubbed Zeus, in 2020 and then the 5nm Poseidon platform a year later. Each generation will bring a 30 percent performance gain and help drive innovation from a broad range of technology partners for systems and software.”
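For a sense of scale, the quoted 30 percent per generation can be compounded across the roadmap. This is only a sketch under two assumptions that the quote does not spell out: that each gain is measured against the previous generation, and that Cosmos is the 1.0 baseline.

```python
# Platform names are from the quoted roadmap; treating Cosmos as the
# baseline and compounding the gains are assumptions for illustration.
GENERATIONS = ["Cosmos", "Ares", "Zeus", "Poseidon"]
GAIN_PER_GENERATION = 0.30


def cumulative_gain(steps, gain=GAIN_PER_GENERATION):
    """Relative performance after `steps` generations of compounding."""
    return (1 + gain) ** steps


for steps, name in enumerate(GENERATIONS):
    print(f"{name}: {cumulative_gain(steps):.2f}x")
```

If the claim holds and compounds this way, Poseidon would land at roughly 2.2 times the Cosmos baseline.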

All neoverse cores

and the classic nonsense benchmark vs Raspberry Pi of course

► C-Ray http://codepad.org/OQDCkVna

► SciMark http://codepad.org/wZe5SrjI

GCC 4.8 — wow.

Are you able to provide 7-zip and tinymembench numbers too?