Rockchip RK3399Pro was announced as an updated version of the RK3399 processor with an NPU (Neural Processing Unit) capable of delivering 2.4 TOPS for faster A.I. workloads such as face or object recognition.

There have been some delays because of a redesign of the processor that, for cost reasons, placed the NPU’s RAM on the PCB instead of on-chip. We eventually got more details about the RK3399Pro, and today I also received a 15-page presentation with more information about the software and the processor itself.

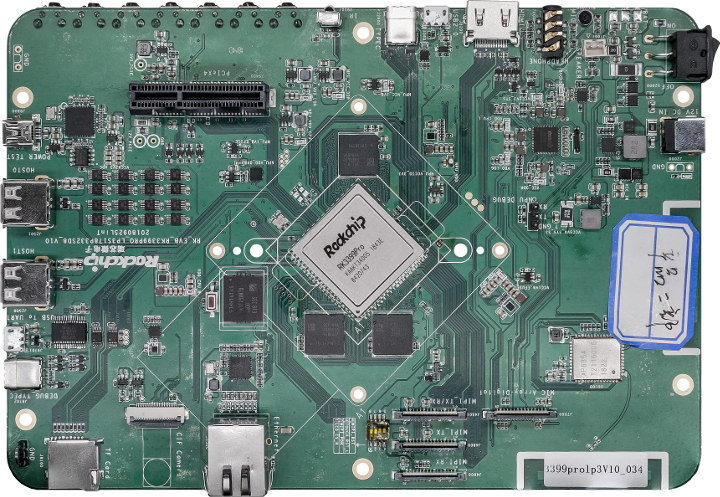

But even more interesting, it’s the first time I’ve seen Rockchip’s official RK3399Pro EVB (Evaluation Board). The guys at Khadas uploaded a video that explains a bit more about the board and showcases the NPU, measured at up to 3.0 TOPS, running an object recognition demo and a “body feature” detection demo (for lack of a better word) in Android 8.0.

Eventually, Shenzhen Wesion (Khadas) will launch their own RK3399Pro board, with the Khadas Edge-1S now “available” on Indiegogo.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


This demo is quite convincing (well done, Gouwa). I think there’s more than enough performance to detect moving pedestrians for car automation. Hardware seems to have made a lot of progress over the last few years; I’m even wondering whether the new NPUs will quickly make GPUs obsolete for general-purpose computing (they’d become graphics chips again).

NPUs are matrix multipliers. Any potent matrix multiplier (GPUs being a case in point) can be a successful inference machine, but for a matrix multiplier to go general-purpose, what matters is what _else_ it does well besides multiplying matrices. In this respect, GPUs are, and will likely remain for the foreseeable future, the much better massively parallel general-purpose machines.
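To illustrate why inference hardware can be built around matrix multiplication, here is a minimal sketch of a fully connected layer, y = W·x + b, written out as individual multiply-accumulate (MAC) operations. The function name and the example values are hypothetical; real NPUs run many such MACs in parallel rather than in a Python loop.

```python
def dense_forward(weights, x, bias):
    """Compute y = W·x + b one multiply-accumulate (MAC) at a time.

    Returns the output vector and the number of MACs performed,
    which is rows * cols for a (rows x cols) weight matrix.
    """
    rows = len(weights)
    cols = len(x)
    y = []
    macs = 0
    for r in range(rows):
        acc = bias[r]
        for c in range(cols):
            acc += weights[r][c] * x[c]  # one MAC: multiply, then add to the accumulator
            macs += 1
        y.append(acc)
    return y, macs


W = [[1, 2, 3],
     [4, 5, 6]]
x = [1, 0, -1]
b = [10, 20]
y, macs = dense_forward(W, x, b)
print(y, macs)  # [8, 18] 6
```

Every layer of a typical network boils down to loops like this one, which is why a chip that only multiplies and accumulates quickly can still be a good inference engine.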

I think the elephant in the room is that these NPUs don’t seem to support OpenCL or anything similar, and who wants to be stuck programming this stuff? You’re going to have to reinvent the wheel and rewrite your whole processing stack for this one custom chip. Doesn’t sound fun.

I keep seeing the same pattern: you just get little demos from the chip maker, and then they expect device companies to do the heavy lifting. I’m no expert, but to me it only seems to make sense in price-sensitive, mass-produced devices (something like Alexa or a smart security cam).

Absolutely. APIs and programming models are another side of all this.

NPUs typically do not have the precision required to be useful as general-purpose processors. The document referenced in the article for the NPU used in the RK3399Pro states “1920 INT8 MACs, 192 INT16 MACs, 64 FP16 MACs”. The focus is on integer (8/16-bit) calculations and “half” precision (16-bit) floating point.
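The MAC counts can be related to the headline TOPS figure with simple arithmetic. A minimal sketch, assuming the common convention that one MAC counts as two operations (a multiply and an add) and that each MAC unit completes one MAC per clock cycle. The NPU clock is not given here, so the code solves for the clock that 2.4 TOPS would imply; that clock is a derived assumption, not a Rockchip specification.

```python
def peak_tops(mac_units, clock_hz, ops_per_mac=2):
    """Peak throughput in TOPS, assuming every MAC unit finishes one MAC per cycle."""
    return mac_units * ops_per_mac * clock_hz / 1e12


def implied_clock_hz(mac_units, tops, ops_per_mac=2):
    """Clock frequency needed to reach `tops` with `mac_units` MACs per cycle."""
    return tops * 1e12 / (mac_units * ops_per_mac)


# 1920 INT8 MACs (from the NPU document) and 2.4 TOPS (the announced figure)
clock = implied_clock_hz(1920, 2.4)
print(f"{clock / 1e6:.0f} MHz")  # 625 MHz

# Same assumed clock, but only the 64 FP16 MACs: 30x fewer units, so 30x lower peak
print(f"{peak_tops(64, clock):.2f} TOPS")  # 0.08 TOPS
```

Under these assumptions, the FP16 path peaks at a small fraction of the INT8 path, which is the point about precision: the bulk of the silicon is dedicated to 8-bit integer math.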

With 1920 INT8 MACs you can do awesome stuff 🙂 I’m starting to think there may be a way to use this for pattern matching in new generations of compression algorithms. I hope (and expect) they support saturating arithmetic, by the way (I haven’t checked the doc yet).
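The difference saturation makes is easy to show. This is a minimal sketch contrasting plain two's-complement wraparound with saturating (clamping) addition on signed 8-bit values; whether the RK3399Pro NPU saturates is not stated here, and the helper names are hypothetical.

```python
INT8_MIN, INT8_MAX = -128, 127


def saturate_int8(value):
    """Clamp an integer to the signed 8-bit range instead of letting it wrap."""
    return max(INT8_MIN, min(INT8_MAX, value))


def wrap_int8(value):
    """Two's-complement wraparound, i.e. what a plain 8-bit adder would produce."""
    return (value + 128) % 256 - 128


a, b = 100, 50
print(wrap_int8(a + b))      # -106
print(saturate_int8(a + b))  # 127
```

With wraparound, an overflow flips the sign and produces a wildly wrong value; with saturation, the result sticks at the nearest representable extreme, which is usually far less harmful for signal processing or pattern-matching workloads.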

INT8 is used for inference. INT16 can be used for training if you know what you’re doing and the domain can tolerate the reduced precision. There’s an Alphabet presentation that goes into detail on this topic.
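To show how INT8 inference works in principle, here is a minimal sketch of symmetric per-tensor quantization: floats are mapped to integers in [-127, 127] with one scale per tensor, the dot product runs in integers with a wide accumulator, and the result is scaled back. This is one common scheme chosen for illustration, not necessarily what Rockchip’s toolchain uses, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: x ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa, qb):
    """Integer dot product; Python ints don't overflow, standing in for a wide (e.g. 32-bit) accumulator."""
    return sum(x * y for x, y in zip(qa, qb))


a = [0.5, -1.2, 0.8, 0.1]
b = [1.0, 0.3, -0.6, 2.0]
qa, sa = quantize_int8(a)
qb, sb = quantize_int8(b)

approx = int8_dot(qa, qb) * sa * sb   # dequantize with the product of both scales
exact = sum(x * y for x, y in zip(a, b))
print(f"exact={exact:.4f} int8={approx:.4f}")
```

The INT8 result lands close to, but not exactly on, the float result. That small rounding error is usually acceptable for inference, while training needs to accumulate many tiny gradient updates, which is where the extra precision of INT16 (or floating point) matters.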

24 fps detection… very nice. Thank you for posting this video.

There is a comparison between the RK3399Pro and other NPUs. In case the other NPU models are confusing, here is what each letter stands for:

– T: NVIDIA TX2

– H: HiSilicon Kirin 970

– A: Apple A11

– M: Movidius Myriad X

Have fun in the AI world!