We’ve previously seen several clusters made of Raspberry Pi boards, such as a 16 RPi Zero cluster prototype, or the BitScope Blade with 40 Raspberry Pi boards. The latter now even offers solutions for up to 1,000 nodes in a 42U rack.

Circumference offers another option with either 8 or 32 Raspberry Pi 3 (B+) boards managed by a UDOO x86 board acting as a dedicated front-end processor (FEP), and is designed as a “Datacenter-in-a-Box”.

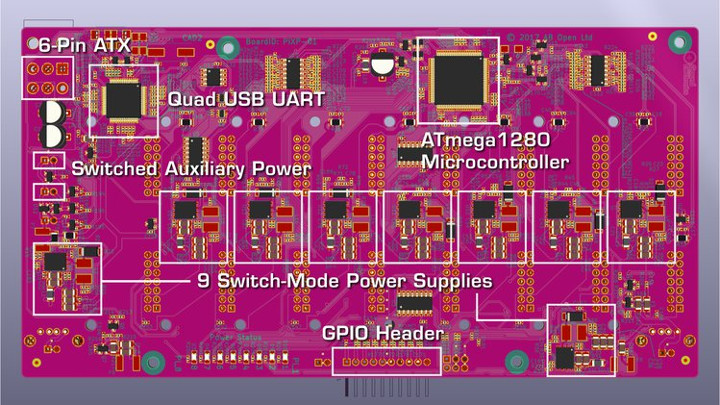

Key features and specifications:

- Compute nodes – 8x or 32x Raspberry Pi 3 B+ boards for a total of 128x 64-bit 1.4 GHz cores max

- Backplane

  - MCU – Microchip ATmega1280 8-bit AVR microcontroller

  - Serial Comms – FTDI FT4232 quad-USB UART

  - Switched Mode Power Supply Units (SMPSUs):

    - 8x / 32x software controlled (one per compute node)

    - 1x / 4x always-on (microcontroller)

  - HW monitoring:

    - 8x / 32x compute node energy

    - 2x / 8x supply voltage

    - 2x / 8x temperature

  - Remote console – 8x / 32x (1x / 4x UARTs multiplexed x8)

  - Ethernet switch power – 2x / 8x software controlled

  - Cooling – 1x / 4x software controlled fans

  - Auxiliary power – 2x / 8x software controlled 12 VDC ports

  - Expansion – 8x / 32x digital I/O pins (3x / 12x PWM capable)

- Networking

  - 2x or 8x 5-port Gigabit Ethernet switches (software power control)

  - 10x / 50x Gigabit Ethernet ports in total (C100 has additional 2x AON switches in base)

- FEP – UDOO x86 Ultra board with Quad-core Intel Pentium N3710 2.56 GHz with 3x Gigabit Ethernet

- C100 (32+1) model only

  - 32x LED compute node power indicators

  - 6x LED matrix displays for status

  - 1x additional always-on fan in the base

- Dimensions & Weight:

  - C100 – 37x30x40 cm; 12 kg

  - C25 – 17x17x30 cm; 2.2 kg
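As a quick sanity check, the headline totals in the list above follow from the per-backplane figures. The Python sketch below assumes four cores per Raspberry Pi 3 B+ and five ports per switch, and it also assumes the two extra always-on switches in the C100 base are 5-port units (the spec does not say so explicitly, but that is what makes 50 ports add up). The `ClusterModel` class and its field names are made up for illustration.

```python
from dataclasses import dataclass

CORES_PER_NODE = 4      # Raspberry Pi 3 B+ is a quad-core Cortex-A53 board
PORTS_PER_SWITCH = 5    # "5-port Gigabit Ethernet switches"
CONSOLES_PER_UART = 8   # "UARTs multiplexed x8"


@dataclass(frozen=True)
class ClusterModel:
    """Hypothetical container for the per-model counts listed in the spec."""
    name: str
    nodes: int
    backplane_switches: int
    base_switches: int   # assumed 5-port always-on switches in the base
    console_uarts: int

    @property
    def cores(self) -> int:
        return self.nodes * CORES_PER_NODE

    @property
    def ethernet_ports(self) -> int:
        switches = self.backplane_switches + self.base_switches
        return switches * PORTS_PER_SWITCH

    @property
    def remote_consoles(self) -> int:
        return self.console_uarts * CONSOLES_PER_UART


C25 = ClusterModel("C25", nodes=8, backplane_switches=2,
                   base_switches=0, console_uarts=1)
C100 = ClusterModel("C100", nodes=32, backplane_switches=8,
                    base_switches=2, console_uarts=4)

for model in (C25, C100):
    print(model.name, model.cores, model.ethernet_ports, model.remote_consoles)
```

Under those assumptions the C25 comes out at 32 cores, 10 ports and 8 consoles, and the C100 at 128 cores, 50 ports and 32 consoles, matching the figures quoted in the specifications.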

The firmware for the backplane has been developed with the Arduino IDE and Wiring C libraries, and as such will be “hackable” by the user once the source code is released. The same can be said for the front panel board used in the C100 model, which is equipped with a Microchip ATmega328 MCU.

The UDOO x86 Ultra board runs a Linux distribution – which appears to be Ubuntu – and supports cluster control out of the box thanks to a daemon and a command line utility (CLI), as well as Python and MQTT APIs.

Hardware design files, firmware, and software source code will be made available after the hardware for the main pledge levels has been shipped.

Circumference C25 and C100 are offered as kits with the following:

- Custom PCBAs

  - 1 or 4 backplane(s) depending on model

  - Front Panel (C100 only)

- 2x (C25) / 10x (C100) 5-port Gigabit Ethernet switches

- 1x UDOO x86 dual-gigabit Ethernet adapter

- All internal network and USB cabling

- Power switch and cabling

- Fans and guard

- Laser cut enclosure parts and fasteners. C100 also includes 2020-profile extruded aluminum frame

What you don’t get are the Raspberry Pi 3 (B+) and UDOO x86 (Ultra) boards, SSD and/or micro-SD cards, and power supply, which you need to purchase separately. That probably means you could also replace the RPi 3 boards with other mechanically and electrically compatible boards such as Rock64 or Renegade boards, or even mix them up (TBC).

The solution can be used for cloud, HPC, and distributed systems development, as well as testing, and education.

The project has just launched on Crowd Supply, aiming to raise $40,000 for mass production. Rewards start at $549 for the C25 (8+1) model, and $2,599 for the C100 (32+1) model, with an optional $80 120W power supply for the C25 model only, but as just mentioned without the Raspberry Pi and UDOO boards. Shipping is free worldwide and planned for the end of January 2019, except for the more expensive early access pledges, which are scheduled for the end of November.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

The design is so freaking cool, the 32x Pi model looks like a 1965 DEC PDP-8.

Really nice project.

What’s the purpose of the fans?

Just as a reference: for anyone interested in SBC clusters, a design that takes care of sufficient heat dissipation (unlike the above designs) is mandatory, since why would anyone want throttling?

Here is a nice example also making use of custom powering backplanes and allowing for efficiently cooling the individual boards due to proper orientation and position of the fans: http://forum.khadas.com/t/vim2-based-crypto-currency-miner-build/

And of course for a low count cluster, using something like Pine’s Clusterboard with a couple of SoPine modules is a way better approach than cramming normal SBCs with a bunch of Ethernet switches and cables in a box. Though it suffers from the same problem as RPi 3/3+: Cortex-A53 in 40nm is too slow and generates way too much heat at the same time.

Good point on the thermal.

I was also wondering how reliable such a setup would be.

> I was also wondering how reliable such a setup would be

The primary OS on those Raspberries (an RTOS called ThreadX) implements throttling on its own starting at 80°C. Of course the secondary OS (Linux for example) won’t know, and while the boards (especially those in the middle of such a stack) are in reality throttled down to e.g. 800 MHz due to overheating, the kernel will still happily report 1200 MHz or even 1400 MHz on the Pi 3+ (RPi cheating as usual).

The first line only returns bogus values while only the 2nd call provides the real clockspeed (talking directly to ThreadX that controls the hardware):

(reference: https://github.com/ThomasKaiser/OMV_for_Raspberries/blob/master/usr/sbin/raspimon)
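A rough Python sketch of that comparison, assuming the two sources are the kernel's cpufreq sysfs node (`scaling_cur_freq`, reported in kHz) and the firmware query `vcgencmd measure_clock arm` (reported in Hz as `frequency(NN)=...`), which is what the referenced raspimon script appears to rely on. The function names are made up for illustration, and `read_clocks()` only works on a Raspberry Pi with `vcgencmd` installed.

```python
import re
import subprocess
from pathlib import Path

# Kernel-side view of the CPU clock (value in kHz).
SYSFS_FREQ = Path("/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq")


def parse_sysfs_khz(text):
    """Convert the sysfs value, e.g. '1400000\n', to MHz."""
    return int(text.strip()) / 1000.0


def parse_vcgencmd_hz(text):
    """Convert firmware output, e.g. 'frequency(45)=600000000', to MHz."""
    match = re.search(r"=(\d+)", text)
    if match is None:
        raise ValueError("unexpected vcgencmd output: %r" % text)
    return int(match.group(1)) / 1_000_000.0


def read_clocks():
    """Return (kernel_mhz, firmware_mhz); requires a Pi with vcgencmd."""
    kernel_mhz = parse_sysfs_khz(SYSFS_FREQ.read_text())
    result = subprocess.run(
        ["vcgencmd", "measure_clock", "arm"],
        capture_output=True, text=True, check=True,
    )
    return kernel_mhz, parse_vcgencmd_hz(result.stdout)


def looks_throttled(kernel_mhz, firmware_mhz, tolerance_mhz=50.0):
    """True when the firmware clock is clearly below what the kernel claims."""
    return firmware_mhz < kernel_mhz - tolerance_mhz
```

On a board under sustained load, calling `read_clocks()` and passing the pair to `looks_throttled()` would flag the case described above, where the kernel still reports the nominal clock while the firmware has already lowered it. The 50 MHz tolerance is an arbitrary choice.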

So this is not that much a problem of reliability but lower performance after some time of sustained load when the boards in the middle start to overheat. It’s interesting to see a ‘cluster design’ in 2018 simply not caring about appropriate heat dissipation at all. But most probably the target audience doesn’t care anyway…

My concern was also wrt reliability of the nodes.

I’m not sure if the setup allows for netboot or local storage.

My experience with SD cards over time is not that good.

> I’m not sure if the setup allows for netboot or local storage.

Both. The RPi SoC allows for some sort of proprietary netbooting in the meantime. But even with local storage on SD card there’s nothing to worry about as long as you keep the ‘flash memory’ challenges in mind:

1) Always be aware that there’s fake/counterfeit crap around faking a higher capacity than the real one, so cards need to be tested prior to usage (since otherwise it’s game over once the amount of data written exceeds the real capacity; such counterfeit cards die over a thousand times faster than cards with real capacity)

2) ‘Write Amplification’. It’s really all about this, and distros *not* taking care of it (e.g. Raspbian) will lead to the SD card wearing out orders of magnitude faster compared to distros that address this problem (increasing the commit interval so stuff gets written to flash storage only every 10 minutes in a batch already greatly reduces Write Amplification; same with keeping logs in RAM and writing to the card hourly/daily).
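To make the batching argument concrete, here is a deliberately simplified Python model of write amplification. It assumes every flush programs whole flash pages, and it ignores filesystem journal/metadata overhead and erase-block garbage collection, both of which make real cards look worse. The 16 KiB page size, the 200-byte log record and the one-record-per-second rate are made-up illustration values, not measurements.

```python
import math


def flash_bytes_written(record_bytes, records, records_per_flush,
                        page_bytes=16 * 1024):
    """Bytes physically programmed if each flush writes whole pages."""
    def pages_for(n_records):
        return math.ceil(n_records * record_bytes / page_bytes)

    full_flushes, leftover = divmod(records, records_per_flush)
    total_pages = full_flushes * pages_for(records_per_flush)
    if leftover:
        total_pages += pages_for(leftover)
    return total_pages * page_bytes


def write_amplification(record_bytes, records, records_per_flush,
                        page_bytes=16 * 1024):
    """Physical bytes written divided by logical bytes written."""
    logical = record_bytes * records
    physical = flash_bytes_written(record_bytes, records,
                                   records_per_flush, page_bytes)
    return physical / logical


# 6000 records of 200 bytes, one per second (100 minutes of logging).
for label, per_flush in (("sync every record", 1),
                         ("flush every 5 s", 5),
                         ("flush every 10 min", 600)):
    wa = write_amplification(200, 6000, per_flush)
    print(f"{label}: write amplification ~{wa:.2f}")
```

With these assumed numbers the model gives roughly 82x when every record is synced, about 16x at a 5 second flush interval (the ext4 default commit interval), and close to 1.1x when writes are batched every 10 minutes.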

I tried to explain the importance of lowering the write amplification at length here: https://forum.armbian.com/topic/6444-varlog-file-fills-up-to-100-using-pihole/?do=findComment&comment=50833

Anyway… if it’s just about a ‘cluster to go’ for educational purposes I would still rather look at Pine’s Clusterboard: https://www.cnx-software.com/2018/02/01/pine64-clusterboard-is-now-available-for-100-with-one-sopine-a64-system-on-module/

For less than 400 bucks you get everything you need: the Clusterboard containing a GbE switch, 7 sockets for the SoPine modules (29 bucks each), the appropriate 75W PSU and any Mini ITX enclosure. The SoPine modules have some SPI NOR flash you can burn a bootloader into that makes all devices PXE boot compliant. So simply throw another SBC with an SSD as NFS server into the enclosure and you’re done getting an ‘SD cards free’ cluster booting in a standards compliant way (skipping the proprietary RPi VideoCore stuff)

While I find that it *looks* nice, I’m really shocked anyone can think about using an inefficient RPi to build a cluster. You build clusters because you need performance, not for community support. In this design, the x86 board controlling the cluster might have almost as much power as all the RPis together (OK, I’m exaggerating a bit, though not that much once they start to throttle, but you get it). There are a lot of alternative boards around for various purposes. The NanoPI-Fire3 provides 8*1.4 GHz A53. Stronger per-core performance can be obtained using the RK3288 as found in MiQi or Tinkerboard, as well as several of the Exynos 5422-based Odroid boards. A single MiQi, Fire3 or Odroid-MC1 board would roughly replace 3 RPi, reducing the number of switch ports, fans and the enclosure size. It’s sad that people don’t study what’s available before rushing to a well-known generic but inefficient device. Sometimes I wonder whether one day we’ll even see clusters of Arduinos…

They don’t sell the boards with the kit, so it should be easy enough to use Tinker boards instead of Raspberry Pi 3 boards.

Those RK3288 boards like Tinkerboard or MiQi suffer even more from the broken thermal design of these cluster boxes here. They need efficient heat dissipation to not throttle down to a minimum which is impossible with this box (only the board at the stack’s top receives appropriate cooling, the 7 below not)

Which is fascinating since the guys behind the project (Ground Electronics) know how to do it correctly (maximum airflow over the boards/heatsinks with a fan blowing laterally): https://groundelectronics.com/products/parallella-cluster-kit/

But maybe it’s really all about the target audience? If people want to build a cluster out of RPi they must be clueless like hell (or not giving a sh*t about anything that’s important) so better offer them something looking nice over functioning efficiently?

> only the board at the stack’s top receives appropriate cooling, the 7 below not

I didn’t notice when I first saw the photos, you’re absolutely right, what a horror! So it’s just a noise generator.

> You build clusters because you need performance, not for community support.

They claim such a physical cluster setup would be great for educational purposes. I do not agree that much since Datacentre-in-a-laptop is IMO the better idea if you want to learn clustering and be mobile at the same time. My latest laptop has 16 GB DRAM which allows for 20 virtual servers easily.

https://www.crowdsupply.com/ground-electronics/circumference/updates/a-gnu-parallel-clustering-demo

Unbelievable. They really only care about the most inefficient SBC possible for cluster use cases (Raspberries). A cluster job running on 8 nodes being just 3.72 times faster compared to a single node is really ‘impressive’. They even explain why using Raspberries for this is such a crappy idea:

‘This overhead eats into the potential performance gain – as does the 100 Mb/s network port of the Raspberry Pi 3 Model B, which can be improved by a rough factor of three by switching the nodes to use the newer and faster Raspberry Pi 3 Model B+ with its gigabit-compatible Ethernet port’

As if more powerful boards with real Gigabit Ethernet, 10 times faster, didn’t exist. But unfortunately, due to the broken thermal design of the box, more performant boards would also run throttled to the minimum.
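To put the quoted 3.72x on 8 nodes in perspective, here is a small Python sketch that derives the parallel efficiency and the implied serial/overhead fraction (the Karp-Flatt metric), then plugs that fraction into Amdahl's law for 32 nodes. Treating the overhead as a fixed fraction is an assumption made for illustration only; the demo's real overhead (job distribution, network transfers) may scale differently.

```python
def parallel_efficiency(speedup, nodes):
    """Fraction of ideal linear scaling actually achieved."""
    return speedup / nodes


def karp_flatt_serial_fraction(speedup, nodes):
    """Experimentally determined serial fraction e = (1/S - 1/N) / (1 - 1/N)."""
    return (1.0 / speedup - 1.0 / nodes) / (1.0 - 1.0 / nodes)


def amdahl_speedup(serial_fraction, nodes):
    """Amdahl's law: S = 1 / (f + (1 - f) / N)."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / nodes)


measured_speedup = 3.72   # figure quoted from the GNU Parallel demo
nodes = 8

efficiency = parallel_efficiency(measured_speedup, nodes)
serial = karp_flatt_serial_fraction(measured_speedup, nodes)

print(f"efficiency on {nodes} nodes: {efficiency:.1%}")
print(f"implied serial/overhead fraction: {serial:.1%}")
print(f"projected speedup on 32 nodes: {amdahl_speedup(serial, 32):.2f}x")
```

Under that fixed-fraction assumption, the efficiency is 46.5%, the implied overhead fraction is about 16%, and going from 8 to 32 nodes would only raise the speedup to roughly 5.25x.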

This is ridiculous for the price, considering you still have to purchase the boards and other components. Good luck.