Hardkernel has just launched their ODROID-HC1 stackable NAS system, based on a cost-down version of the ODROID-XU4 board powered by a Samsung Exynos 5422 octa-core Cortex-A15/A7 processor. As previously expected, you can purchase it for $49 on Hardkernel’s website or from distributors like Ameridroid.

We now have the complete specifications for ODROID-HC1 (Home Cloud One) platform:

- SoC – Samsung Exynos 5422 octa-core processor with 4x ARM Cortex-A15 @ 2.0 GHz, 4x ARM Cortex-A7 @ 1.4GHz, and Mali-T628 MP6 GPU supporting OpenGL ES 3.0 / 2.0 / 1.1 and OpenCL 1.1 Full profile

- System Memory – 2GB LPDDR3 RAM PoP @ 750 MHz

- Storage

- UHS-1 micro SD slot up to 128GB

- SATA interface via JMicron JMS578 USB 3.0 to SATA bridge chipset capable of achieving ~300 MB/s transfer rates

- The case supports 2.5″ drives between 7mm and 15mm thick

- Network Connectivity – 10/100/1000Mbps Ethernet (via USB 3.0)

- USB – 1x USB 2.0 port

- Debugging – Serial console header

- Misc – Power, status, and SATA LEDs

- Power Supply

- 5V via 5.5/2.1mm power barrel (5V/4A power supply recommended)

- 12V unpopulated header (currently unused)

- Backup header for RTC battery

- Dimensions – 147 x 85 x 29 mm (Aluminum case also serving as heatsink)

- Weight – 229 grams

The company provides Ubuntu 16.04.2 with Linux 4.9 and OpenCL support for the board (the same image as for ODROID-XU4), but there are also community-supported Linux distributions including Debian, DietPi, Arch Linux ARM, OMV, Armbian, and others, all of which can be found in the Wiki.

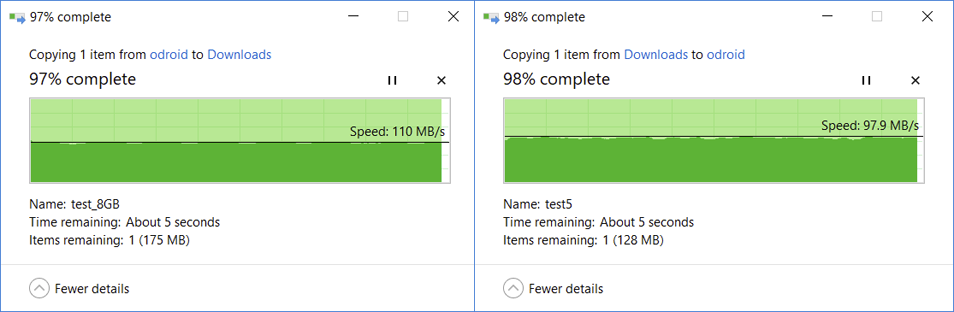

Based on Hardkernel’s own tests, you should be able to max out the Gigabit Ethernet bandwidth while transferring files over Samba in either direction. tkaiser, an active member of the Armbian community, also got a sample, and reported that heat dissipation worked well and that overall Hardkernel had done a very good job.

While power consumption of the system is usually 5 to 10 watts, it may jump to 20 watts under heavy load with USB devices attached. A 5V/4A power supply is therefore recommended with the SATA drive only, and 5V/6A if you are also going to connect power-hungry devices to the USB 2.0 port. The company plans to manufacture ODROID-HC1 for at least three years (until mid-2020), but expects to continue production long after that, as long as parts are available.
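As a rough illustration of why the larger supply is suggested, the sketch below compares each supply’s nominal output (P = V × I) with the load figures quoted above. It is a simplification that assumes the full rated current is usable and ignores conversion losses and voltage drop in the cable; `supply_watts` and `headroom` are hypothetical helper names.

```python
SUPPLY_VOLTAGE = 5.0  # volts, HC1 barrel jack

def supply_watts(amps, volts=SUPPLY_VOLTAGE):
    """Nominal output of a supply: P = V * I (ignores losses and cable drop)."""
    return volts * amps

def headroom(load_watts, amps):
    """Power left over at a given load; negative means the supply is overloaded."""
    return supply_watts(amps) - load_watts

# Load figures from the article: typically 5-10 W, up to ~20 W with USB devices.
for amps in (4, 6):
    print(f"5V/{amps}A: {supply_watts(amps):.0f} W nominal, "
          f"{headroom(20, amps):+.0f} W headroom at a 20 W peak")
```

At a 20 W peak the 5V/4A supply has no margin left, which is why 5V/6A is suggested once USB devices join the SATA drive.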

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Just a quick note: to benefit from HC1’s design (excellent heat dissipation, no more USB3 contact/cable/powering hassles), settings and kernel version are important. To make use of ‘USB Attached SCSI’ (UAS), Hardkernel’s 4.9 LTS or a mainline Linux kernel is necessary.

I did a quick comparison of two Debian Jessie variants that obviously follow different philosophies, using their respective defaults, with an older 2.5″ 7,200 rpm Hitachi HDD, testing on the fastest partition:

– 3.10.105 kernel: https://pastebin.com/VWicDntJ

– 4.9.37 kernel + optimizations: https://pastebin.com/0eWy00bY

The second image is my test image, with which I’m currently exploring whether limiting the four big cores on the Exynos to just 1.4 GHz is OK for NAS use cases (so far: 95% of the NAS performance at 2.0 GHz, but ~3W less consumption). Due to a network problem in my lab, Gigabit Ethernet performance is currently limited to half of what’s possible with XU4/HC1. I still have no idea what’s wrong here, but with a working network, a 4.x kernel, and appropriate settings (IRQ affinity and RPS), more than 900 Mbit/s in both directions is no problem.
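For readers who want to try the same 1.4 GHz cap, here is a minimal Python sketch using the standard Linux cpufreq sysfs interface, where `scaling_max_freq` is given in kHz. It assumes cpu4 to cpu7 are the big Cortex-A15 cores (as on XU4/HC1) and that writing requires root; the helper names are made up for illustration, and this is not necessarily how tkaiser’s test image configures it.

```python
from pathlib import Path

# Assumption: cpu4-cpu7 are the big Cortex-A15 cores on Exynos 5422 boards.
BIG_CORES = (4, 5, 6, 7)
SYSFS_CPU = Path("/sys/devices/system/cpu")

def max_freq_writes(cores, mhz, root=SYSFS_CPU):
    """(path, value) pairs that cap each core; scaling_max_freq is in kHz."""
    khz = str(mhz * 1000)
    return [(root / f"cpu{c}" / "cpufreq" / "scaling_max_freq", khz) for c in cores]

def apply_writes(writes):
    """Write the values (requires root on the target board)."""
    for path, value in writes:
        path.write_text(value)

if __name__ == "__main__":
    # Dry run: only print what would be written.
    for path, value in max_freq_writes(BIG_CORES, 1400):
        print(f"{path} <- {value}")
```

When the four big cores share one cpufreq policy, writing the value for one of them typically applies to the whole cluster. Values written to sysfs are lost on reboot unless some boot-time mechanism sets them again.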

TL;DR: Settings and versions matter. Don’t blindly trust beefy hardware; check whether the OS image you use is able to squeeze out the maximum (or choose a conservative approach to save 1/3 of the energy with a performance drop of just 5%, if your use case allows this 🙂)

@tkaiser

So tkaiser, would you suggest using this solution for a DIY NAS, to at least somewhat increase the safety of the data?

For example, a setup like this: one USB3 disk and one USB2 disk connected to the HC1. The USB3 disk is primary and also used for streaming, while the USB2 disk is only meant for regular daily backups of the USB3 disk. The data would then be on the personal computers and on both disks on the HC1, so it’s a bit safer if the disks in the personal computers die.

Could other software, such as Home Assistant, also run on it in addition to OMV, for example?

Since I realized that the OS image I chose for testing uses not only an outdated (and UAS-incapable) kernel but also cpufreq scaling settings I don’t really understand (interactive governor, allowing the CPU cores to clock down to 200 MHz), I re-tested with both OMV/Armbian (4.9.37 kernel, this time with the big cores allowed to clock up to 2 GHz since that’s the real default) and DietPi (3.10.105), this time with a rather fast SSD (Samsung EVO840).

Difference from before: in a second round I switched from distro defaults to the performance cpufreq governor (something I wouldn’t recommend with XU4 for day-to-day usage, since the Exynos on ODROID XU4/HC1 is a beast and needs a lot of juice when forced to run the big cores at 2.0 GHz): https://pastebin.com/i1SQGAji

Comparing only the ‘performance’ results is maybe a good idea, since this demonstrates how much the kernel version (and USB Attached SCSI) matters. Comparing the differences between defaults and performance for each kernel version then gives you an idea of how much further settings matter. Things like IRQ affinity and receive packet steering (RPS), which increase network performance, aren’t addressed by such a simple storage benchmark, though. With DietPi’s default settings, at least, all interrupts are processed on cpu0 (a little core), which creates a nice bottleneck for NAS use cases.
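Both IRQ affinity (`/proc/irq/<n>/smp_affinity`) and RPS (`/sys/class/net/<iface>/queues/rx-<n>/rps_cpus`) take a hexadecimal CPU bitmask, where bit i stands for CPU i. The sketch below only computes the masks and target paths for moving network processing off cpu0. The IRQ number `103` and the interface name `eth0` are placeholders (look up the real IRQ in `/proc/interrupts`; on the HC1 the Ethernet chip sits behind USB 3.0, so the relevant interrupt is presumably the USB host controller’s), and the choice of cores is an assumption rather than a recommended setting.

```python
def cpu_mask(cpus):
    """Hex CPU bitmask (bit i = CPU i) in the format used by
    /proc/irq/<n>/smp_affinity and .../queues/rx-<n>/rps_cpus."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return format(mask, "x")

def network_tuning_writes(iface, irq, irq_cpus, rps_cpus, rx_queue=0):
    """Map procfs/sysfs paths to the mask each should receive (nothing is written)."""
    return {
        f"/proc/irq/{irq}/smp_affinity": cpu_mask(irq_cpus),
        f"/sys/class/net/{iface}/queues/rx-{rx_queue}/rps_cpus": cpu_mask(rps_cpus),
    }

if __name__ == "__main__":
    # Hypothetical example: interrupt on big core cpu4, RPS spread over cpu5-cpu7.
    for path, mask in network_tuning_writes("eth0", 103, [4], [5, 6, 7]).items():
        print(f"echo {mask} > {path}")
```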

@Mark

ODROID-XU4 is a performance beast, and the HC1 here is just the same minus display output and GPIO headers (which hardly anyone uses anyway since they’re 1.8V), and fortunately also minus the USB3/underpowering hassles. In other words: you have reliable storage access here and can run plenty of other stuff in parallel to pure NAS/OMV use cases. A single client pushing/fetching NAS data through SMB/AFP/NFS keeps one big core busy and three little cores at around 50%, so you have three beefy big cores left for other tasks and can let the little cores do background stuff as well (you might want to look into cgroups/taskset when running Linux).
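On Linux, the `taskset` utility (for example `taskset -c 4-7 <command>`) or, from Python, `os.sched_setaffinity` can keep extra services on particular cores. A minimal sketch, assuming the usual Exynos 5422 numbering where cpu0 to cpu3 are the little Cortex-A7 cores and cpu4 to cpu7 the big Cortex-A15 cores; `pin_to_cores` is a hypothetical helper, and the calls are Linux-only.

```python
import os

# Assumption: on Exynos 5422 boards such as ODROID-XU4/HC1, cpu0-cpu3 are the
# little Cortex-A7 cores and cpu4-cpu7 the big Cortex-A15 cores.
LITTLE_CORES = {0, 1, 2, 3}
BIG_CORES = {4, 5, 6, 7}

def pin_to_cores(pid, wanted):
    """Restrict `pid` (0 = this process) to the wanted cores that exist.

    Returns the affinity mask in effect afterwards. If none of the wanted
    cores are available (e.g. not running on an HC1), the mask is unchanged.
    """
    available = os.sched_getaffinity(pid)
    target = set(wanted) & available
    if target:
        os.sched_setaffinity(pid, target)
    return os.sched_getaffinity(pid)
```

A service such as Home Assistant could call `pin_to_cores(0, BIG_CORES)` at startup, leaving the little cores for the NAS daemons’ background work; cgroups (cpusets) achieve the same at the service-manager level.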

Since I hate bit rot and love data integrity, my personal take on such stuff is to use filesystems that allow for data checksumming (ZFS or btrfs), take regular snapshots, and send them to another disk or even to another machine in the same building or in another city/country via VPN. Both ZFS and btrfs provide send/receive functionality, so you can simply transfer a snapshot you made before in a single step, without comparing source and destination as would be the case with anachronistic methods like rsync between two disks or nodes. But I fear we’re already off-topic 🙂
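The send/receive workflow described above can be sketched for btrfs as follows: take a read-only snapshot, then stream it (incrementally against the previous snapshot) into `btrfs receive` on the backup disk. The helper names and the paths in the example are hypothetical, and the code defaults to a dry run that only prints the pipeline; this illustrates the idea and is not tkaiser’s actual setup.

```python
import shlex
import subprocess

def snapshot_cmd(subvol, snap_path):
    """Read-only snapshot; btrfs send only accepts read-only snapshots."""
    return ["btrfs", "subvolume", "snapshot", "-r", subvol, snap_path]

def send_receive_cmds(snap_path, dest_dir, parent=None):
    """Build the two halves of 'btrfs send ... | btrfs receive ...'."""
    send = ["btrfs", "send"]
    if parent is not None:
        # Incremental mode: only changes relative to the parent snapshot are
        # streamed. The parent must already exist on the receiving side too.
        send += ["-p", parent]
    send.append(snap_path)
    return send, ["btrfs", "receive", dest_dir]

def run_pipeline(send, receive, dry_run=True):
    if dry_run:
        print(shlex.join(send), "|", shlex.join(receive))
        return
    sender = subprocess.Popen(send, stdout=subprocess.PIPE)
    receiver = subprocess.Popen(receive, stdin=sender.stdout)
    sender.stdout.close()  # sender sees a broken pipe if receiver exits early
    recv_rc = receiver.wait()
    send_rc = sender.wait()
    if recv_rc or send_rc:
        raise RuntimeError("btrfs send/receive failed")

if __name__ == "__main__":
    print(shlex.join(snapshot_cmd("/srv/data", "/srv/.snapshots/day2")))
    run_pipeline(*send_receive_cmds("/srv/.snapshots/day2", "/mnt/backup",
                                    parent="/srv/.snapshots/day1"))
```

For an off-site copy the receiving half could run through SSH instead (e.g. `["ssh", "backup-host", "btrfs", "receive", "/mnt/backup"]`, with a hypothetical host name). The ZFS counterpart is `zfs send -i pool/fs@old pool/fs@new | zfs receive backup/fs`.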

What configuration/filesystem would you use to have redundancy with two or three of these NAS?

@Mark

I forgot to mention that HC1 has the potential to run much heavier stuff on the CPU (or maybe on the GPU as well, using OpenCL), since the huge heatsink works really well and can be combined with a large but slow/silent fan if really needed. (I really hate XU4’s noisy fansink, but unfortunately the quiet XU4Q version was announced just a week after I purchased my XU4 some months ago.)

BTW: with really heavy stuff (e.g. cpuminer on all CPU cores, which uses NEON optimizations) the heatsink plus a huge fan is not enough, at least with summer ambient temperatures like the current ones in my area. After starting minerd --benchmark, the power meter jumps up to 23W and throttling occurs almost immediately (my dvfs/cpufreq settings already start downclocking at 80°C).

Though such a test is somewhat stupid (it’s not realistic unless you really want to do coin mining on these things), I’ve already scheduled a test run at a customer’s site, putting the HC1 in a rack near the cold air intakes where they measured 12°C. Only a bigger PSU is needed, since 5V/4A isn’t sufficient anymore with such a workload at 2 GHz. But no need to worry: everything normal will run fine with Hardkernel’s 5V/4A PSU, and with typical NAS workloads combined with optimized settings (the ‘limit big cores to 1.4GHz’ I mentioned before), consumption remains below 8W while maxing out Gigabit Ethernet 🙂

@DurandA

I’m gonna pre-empt tkaiser’s answer here: No ECC? Then it can’t be trusted with your data. ( 😉 )

If you want to ignore that, though, maybe Gluster would do? Just don’t expect it to be bulletproof. ZFS can do all the checksumming it likes, but if the data is being accessed through some dodgy RAM, there’s nothing you can do.

*edit* Or you just have one unit as a front man and rsync between it and the other two which act as backups. That’s a bare minimum but simple to set up.

Are all the drivers for this board in the upstream kernel, or is Hardkernel maintaining its own 4.9 fork? It sounds like drivers have been upstreamed, but Hardkernel only seems to talk about their 4.9 LTS kernel.

@CampGareth

For all those following naïve optimism like ‘if I put a one into my DRAM, then it’s safe to assume that I get back a one and not a zero’, reading through all three pages of this explanation of why (on-die) ECC will soon become a necessity for mobile DRAM might be enlightening or frightening, depending on perspective/experience 😉

@camh

Hardkernel maintains its own 4.9 branch, which receives regular updates from upstream 4.9 LTS. That way they can quickly fix intermediate issues on their own if needed (e.g. a while ago they discovered that the coherent-pool memory size was too small, affecting not only ‘USB Attached SCSI’ but also some wireless/DVB scenarios), and they can guarantee an always-working kernel thanks to internal testing.

Latest mainline also runs pretty well on XU4/HC1, but currently you’re more likely to run into issues (e.g. USB3 was reported as broken a few weeks ago with 4.13-rc).

Hello Tkaiser,

Could you please confirm the power consumption at idle, with the disk powered off, using your optimized image?

The article gives consumption values of 5-10 watts. If your optimized image lowers the maximum by 3 watts, does that mean 5-7 watts (at idle)?

Or are there other variables to take into account, so that the final consumption is different?

@tkaiser

Great news, I’m gonna order it soon then :) Yeah, the more complicated and better approaches are a bit over my head, but still nice to know. Thanks for the awesome reply, tkaiser!

CampGareth now frightened me a bit but I’m gonna try to ignore that :).

@tkaiser

Am I missing something? It seems to me that article doesn’t say anything about reliability. What it’s saying is that if you gain ECC, you can further abuse your RAM to save power: reduce its voltage, its refresh rate, and its node size. Make it less reliable, then correct for the newly introduced unreliability. A similar approach is used in mobile radios, since high-power broadcasting takes a lot of power, but a few million transistors dedicated to error correction take far less.

Still, the more I read, the more I tend to agree with you that this is a serious problem stopping SBCs from being useful NASes, at least for enterprise. For instance, here’s a 2015 paper analyzing 14 months of memory errors across Facebook’s servers:

http://repository.cmu.edu/cgi/viewcontent.cgi?article=1345&context=ece

The key stats from that: 2% of servers have at least one memory error each month, and 10% of servers have at least one error in 12 months (servers that have had errors are more likely to have errors again). That’s certainly not insignificant. I’m not sure it’s the biggest threat out there (my drives have had a 100% fatality rate by about 5 years, and power supplies tend to pop due to spikes), but it’s a problem for sure.
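Taking the commenter’s two figures at face value, a quick calculation shows why the remark in parentheses matters: if each month independently carried a 2% chance of an error, about a fifth of servers would see one within a year, roughly twice the reported 10%. The gap is consistent with errors clustering on the same machines. This is only arithmetic on the numbers quoted above, not a result taken from the paper.

```python
# Figures as quoted in the comment (not re-derived from the paper).
monthly_rate = 0.02     # share of servers with >= 1 memory error in a given month
reported_yearly = 0.10  # share with >= 1 error over 12 months

def at_least_once(p_per_period, periods):
    """P(at least one event) if periods are independent: 1 - (1 - p)^n."""
    return 1 - (1 - p_per_period) ** periods

independent_yearly = at_least_once(monthly_rate, 12)
print(f"independent months: {independent_yearly:.1%}, reported: {reported_yearly:.0%}")
# A reported figure well below the independent estimate suggests errors
# concentrate on the same servers month after month.
```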

Nope. My specific use case is NAS, and I’m investigating whether it’s worth letting the A15 CPU cores clock up to 2.0 GHz, which wastes a lot more energy (see below), or only allowing them to clock up to 1.4 GHz when performance is the same. My figure of 3W less is based on that (full NAS performance, comparing big cores at 2 / 1.4 GHz). Idle consumption is not affected here at all, and I’m focusing on ‘good enough’ performance, since for NAS use cases Gigabit Ethernet is the bottleneck anyway: it doesn’t matter whether the CPU could shove out 300 MB/s or 200 MB/s when ~115 MB/s is enough.
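Where the ~115 MB/s ceiling comes from can be estimated from Gigabit Ethernet framing overhead. A sketch assuming a standard 1500-byte MTU, IPv4, and TCP with the timestamp option. It gives the theoretical TCP payload rate; SMB/NFS protocol overhead brings real-world figures a bit lower. MB here means 10^6 bytes.

```python
LINE_RATE_BPS = 1_000_000_000  # Gigabit Ethernet

# Bytes on the wire per frame beyond the IP packet: Ethernet header (14),
# FCS (4), preamble + start-of-frame delimiter (8), inter-frame gap (12).
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12
# Bytes of each IP packet that are not payload: IPv4 (20), TCP (20),
# TCP timestamp option (12).
IP_TCP_OVERHEAD = 20 + 20 + 12

def tcp_goodput_mb_s(mtu=1500, line_rate_bps=LINE_RATE_BPS):
    """Theoretical TCP payload rate in MB/s (10**6 bytes) at full line rate."""
    payload = mtu - IP_TCP_OVERHEAD
    on_wire = mtu + ETHERNET_OVERHEAD
    return line_rate_bps / 8 * payload / on_wire / 1e6

print(f"{tcp_goodput_mb_s():.1f} MB/s")
```

The result (roughly 117.7 MB/s) is why a disk or CPU that can deliver 200 to 300 MB/s gains nothing extra behind a single Gigabit link.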

The ‘problem’ is that the higher the clock speed, the higher VDD_CPU has to be (the CPU cores need more voltage to still operate stably and reliably). And at the upper end of the scale, small increases in clock speed mean huge increases in voltage and consumption. Also, I don’t trust my power meter that much, so I can only provide relative numbers (11W vs. 7.8W → ‘~3W less’) and leave it up to Hardkernel to provide official numbers.
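Dynamic CMOS power grows roughly with V² × f, which is why the last few hundred MHz are so expensive once the voltage has to rise too. Plugging in the relative numbers quoted above (11 W vs. 7.8 W, and the ~95% NAS performance mentioned earlier) gives a feel for the trade-off; since the power meter readings are only relative, treat the output as indicative.

```python
# Relative readings quoted above (power meter not calibrated, so indicative only).
power_2000_w = 11.0        # big cores allowed up to 2.0 GHz, full NAS load
power_1400_w = 7.8         # big cores capped at 1.4 GHz, same workload
relative_perf_1400 = 0.95  # ~95% of the NAS performance at 2.0 GHz

saved_w = power_2000_w - power_1400_w
saved_fraction = saved_w / power_2000_w
# Performance per watt at 1.4 GHz relative to 2.0 GHz.
perf_per_watt_gain = relative_perf_1400 / (power_1400_w / power_2000_w)

print(f"{saved_w:.1f} W less ({saved_fraction:.0%}), "
      f"{perf_per_watt_gain:.2f}x performance per watt")
```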

A small addition to the above, just as a reference for anyone concerned about performance, especially in combination with heat dissipation, and another quick comparison between both available kernel variants (and related strategies, e.g. throttling):

Tinymembench results with the 3.10.105 kernel: https://pastebin.com/8LKsBJai and with 4.9.38: https://pastebin.com/31dundeJ (performance cpufreq governor in both cases, so running at 2000/1400 MHz big/little).

Then 7-zip, sysbench, and cpuminer numbers, run with the performance cpufreq governor to get an idea of how the throttling strategies differ. Sysbench numbers can only be compared when built with the same compiler for the same target platform (true here, since both times Debian Jessie packages built with GCC 4.9 were used):

– 3.10.105 numbers: https://pastebin.com/XdUGgHsZ

– 4.9.37 numbers: https://pastebin.com/cV2yPNZD

HC1 was lying flat on a table with the heatsink fins on top, at an ambient temperature of 25°C. When comparing the benchmark numbers, we don’t compare two kernels but two different throttling settings/strategies. The DietPi image running 3.10.105 seems to start throttling at a reported SoC temperature of 95°C, while the 4.9 settings already start at 80°C. I bet the 7-zip numbers would look a lot better with the 4.9 kernel after raising the thermal trip points to the same values DietPi uses.
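To see which trip points a given image uses, the Linux thermal sysfs interface exposes them as `trip_point_<n>_temp` (in millidegrees Celsius) and `trip_point_<n>_type` files under `/sys/class/thermal/thermal_zone<N>/`. A minimal read-only sketch; which zone corresponds to the CPU clusters, and how many trip points exist, depends on the kernel and device tree, so the default zone path is an assumption.

```python
from pathlib import Path

def parse_trip_points(files):
    """Turn {file name: contents} from a thermal zone into sorted
    (index, type, temperature in °C) tuples."""
    trips = []
    for name, content in files.items():
        parts = name.split("_")  # e.g. ['trip', 'point', '0', 'temp']
        if len(parts) == 4 and parts[:2] == ["trip", "point"] and parts[3] == "temp":
            idx = int(parts[2])
            kind = files.get(f"trip_point_{idx}_type", "unknown").strip()
            trips.append((idx, kind, int(content) / 1000))  # millidegrees -> °C
    return sorted(trips)

def read_trip_points(zone_dir="/sys/class/thermal/thermal_zone0"):
    """Read all trip_point_* files of one thermal zone (read-only)."""
    zone = Path(zone_dir)
    files = {p.name: p.read_text() for p in zone.glob("trip_point_*")}
    return parse_trip_points(files)

def current_temp_c(zone_dir="/sys/class/thermal/thermal_zone0"):
    """Current zone temperature in °C."""
    return int(Path(zone_dir, "temp").read_text()) / 1000
```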

The 120-second break between the 7-zip and sysbench runs can be used to monitor how fast the SoC cools down (that’s the downside of a huge heatsink: it also stores more heat that feeds back into the SoC), and the sole purpose of the cpuminer run was to check the efficiency of throttling.

With the 3.10 settings I saw DietPi’s cpu tool constantly reporting over 95°C, and after 10 minutes we were down to 7.7 khash/s. Checking the cpufreq statistics (time_in_state) clearly shows that the little cores ran at 1.4 GHz all the time, while the big ones were jumping directly between 900 and 2000 MHz with no steps in between.
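The cpufreq statistics mentioned here live in `/sys/devices/system/cpu/cpu<N>/cpufreq/stats/time_in_state`, one `<frequency in kHz> <time>` pair per line, with time counted in 10 ms units since the statistics were last reset. A small parser that turns this into seconds per frequency and an average clock speed; the helper names are hypothetical, and any sample numbers you feed it for testing are made up, not tkaiser’s measurements.

```python
def parse_time_in_state(text):
    """Parse cpufreq time_in_state: '<freq kHz> <time in 10 ms units>' per line.
    Returns {frequency in MHz: seconds}."""
    stats = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        freq_khz, ticks = line.split()
        stats[int(freq_khz) // 1000] = int(ticks) / 100  # 10 ms units -> seconds
    return stats

def read_time_in_state(cpu):
    path = f"/sys/devices/system/cpu/cpu{cpu}/cpufreq/stats/time_in_state"
    with open(path) as f:
        return parse_time_in_state(f.read())

def delta(before, after):
    """Counters are cumulative, so diff two snapshots taken around a benchmark."""
    return {freq: secs - before.get(freq, 0.0) for freq, secs in after.items()}

def average_mhz(stats):
    total = sum(stats.values())
    if total == 0:
        return 0.0
    return sum(freq * secs for freq, secs in stats.items()) / total

def share(stats):
    total = sum(stats.values()) or 1
    return {freq: secs / total for freq, secs in stats.items()}
```

A big cluster that only alternates between two states, as described for 3.10, shows up as nearly all of its time in two entries, while fine-grained throttling spreads time over many neighbouring frequencies.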

Throttling with 4.9 works totally differently (thermal/cpufreq numbers for the whole run: https://pastebin.com/2fxdjFLc ). Even with a much lower first trip point (80°C compared to 95°C), overall performance is better while temperatures are lower at the same time: 8.6 khash/s while not exceeding 91°C. This is just another nice example of the advantage of fine-grained throttling steps over huge jumps. The 4.9 settings use 100 MHz steps and slowly climb down to 1300/1200 MHz.

So if your workload is known to cause throttling, that’s another good reason to choose 4.9 over 3.10 (consider raising the thermal trip points and/or adding a fan blowing over the heatsink fins to further improve HC1’s number-crunching performance). But if you’re thinking about such workloads, better check the specifications of your SD card (or use one with an industrial temperature range in the first place 😉), and also keep in mind that the heatsink then also dissipates heat into a connected HDD/SSD. Here’s another 10-minute cpuminer run after some idling, with the internal SSD temperatures (SSD totally idle) recorded before and after the benchmark: https://pastebin.com/H9DPyYhB (in other words: adding a fan is the way to go in such situations).

@CampGareth

Oh, that was already two years ago on CNX: https://www.cnx-software.com/2015/12/14/qnap-tas-168-and-tas-268-are-nas-running-android-and-linux-qts-on-realtek-rtd1195-processor/#comment-520316 (check especially the links there).

The more processes and structures shrink, the further we move away from something truly ‘digital’ and the more such error detection and correction has to happen. On-die ECC, which is optional with LPDDR4, is one logical requirement for further process shrinks, but it should be pointed out why this is different from ECC DIMMs. The latter allow you to read out the bit flips that happen, so you can act on them accordingly. That’s what monitoring is for: in the beginning I configured SNMP traps and pager alerts for memory errors, but after two decades single bit flips were just handled as logged incidents, and (delayed) action is only required if occurrences per memory module increase.
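The practical difference is that an ECC code computes a syndrome that both locates and corrects a flipped bit, and it is that report which ECC DIMM platforms can pass on to monitoring. ECC DIMMs commonly use a SECDED code over 64-bit words; the toy Hamming(7,4) code below only illustrates the principle on four data bits and is not how any particular memory controller implements it.

```python
def hamming74_encode(d):
    """4 data bits [d1, d2, d3, d4] -> 7-bit codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(c):
    """Return (data bits, error position). Position is 1-based; 0 means no
    error detected. A nonzero position is what a monitoring system would log."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity check over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # parity check over positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # parity check over positions 4, 5, 6, 7
    pos = s1 + 2 * s2 + 4 * s3      # syndrome = position of the flipped bit
    if pos:
        c[pos - 1] ^= 1             # correct the single flipped bit
    return [c[2], c[4], c[5], c[6]], pos
```

On-die ECC performs the correction step internally; without a path that reports `pos` to the host, the system never learns that a flip happened.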

Anyway: we already did a lot of testing of DRAM reliability (focused on cheap SBCs with Allwinner A20 and H3) and had to realize that a lot of boards out there use default DRAM timings that are too high (or lack proper calibration, which would allow running at much higher clock speeds without affecting reliability). So if I have to use an SBC without ECC memory and care about data integrity, a first step might be to downclock the memory (e.g. HC1’s LPDDR3 to 750 MHz; AFAIK the default clock speed is 825 MHz on all available OS images).

HC2 arrived and works as expected: https://forum.armbian.com/topic/4983-odroid-hc1-hc2/?do=findComment&comment=45690

Fully software compatible, so all available OS images will work out of the box. ‘Performance’ tests are useless, since performance depends only on the disk used. With SSDs (the enclosure is also prepared for 2.5″ drives) and OS images that use good settings, ~400 MB/s sequential performance is possible, while random I/O performance is limited by USB3-to-SATA here. But with spinning rust, only the HDD in question will define performance; the JMS578 used here as USB-to-SATA bridge is no bottleneck at all.