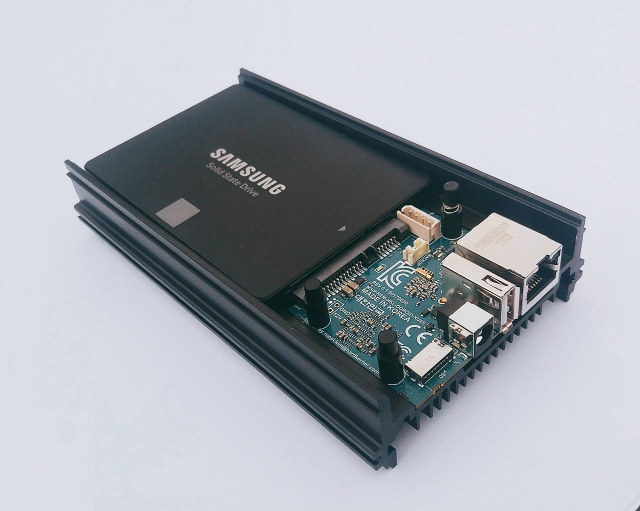

Hardkernel’s ODROID-XU4 is a powerful yet inexpensive ARM board based on the Exynos 5422 octa-core processor. It comes with 2GB RAM, Gigabit Ethernet, and a USB 3.0 interface, which makes it suitable for networked storage applications. But the company found out that many of its users had trouble because of bad USB cables and/or poorly designed and badly supported USB to SATA bridge chipsets. So they started to work on a new board called ODROID-HC1 (HC = Home Cloud), based on the ODROID-XU4 design, to provide a solution that’s both easier to use and cheaper, and that also includes a metal case with space for 2.5″ drives.

They basically removed all unneeded features from ODROID-XU4, such as HDMI, the eMMC connector, the USB 3.0 hub, the power button, the slide switch, etc. The specifications for the ODROID-HC1 kit with the ODROID-XU4S board should look like this:

- SoC – Samsung Exynos 5422 with quad core ARM Cortex-A15 @ 2.0GHz + quad core ARM Cortex-A7 @ 1.4GHz, and Mali-T628 MP6 GPU supporting OpenGL ES 3.0 / 2.0 / 1.1 and OpenCL 1.1 Full profile

- System Memory – 2GB LPDDR3 RAM PoP

- Storage – Micro SD slot up to 64GB + SATA interface via JMicron JMS578 USB 3.0 to SATA bridge chipset

- Network Connectivity – 10/100/1000Mbps Ethernet (via USB 3.0)

- USB – 1x USB 2.0 port

- Debugging – Serial console header

- Power Supply

  - 5V via power barrel

  - 12V unpopulated header for future 3.5″ designs [Update: ODROID-HC2 is in the works, to be released in November 2017]

- “Backup” header for battery for RTC

- Dimensions & weight – TBD

The Exynos 5422 SoC comes with two USB 3.0 interfaces and one USB 2.0 interface. Since both USB 3.0 interfaces are used internally for Ethernet and SATA, only a USB 2.0 port is exposed externally. The metal frame supports a 2.5″ SATA HDD or SSD up to 15 mm thick, and it is also used as a heatsink for the processor. The company tested various storage devices with UAS and S.M.A.R.T. functions, including Seagate Barracuda 2 TB/5 TB HDDs, a Samsung 500 GB HDD and 256 GB SSD, Western Digital 500 GB and 1 TB HDDs, and an HGST 1TB HDD.

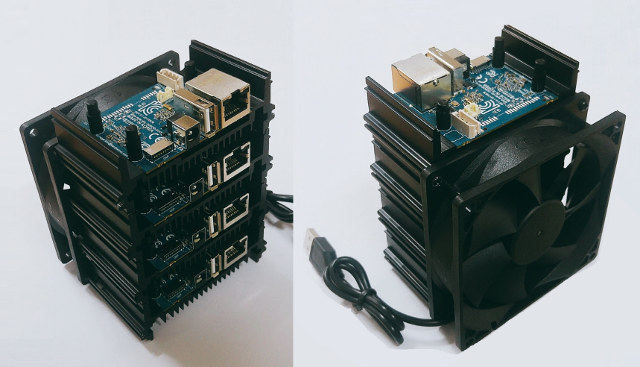

The fun part is that you can easily stack several ODROID-HC1 kits on top of each other, and you could use the Ceph filesystem (CephFS) if you want the stacked boards to show up as one logical volume [Update: This may not work well due to the lack of RAM and the 32-bit processor, see the comments section]. The price is not too bad either, as ODROID-HC1 is slated to launch on August 21st for $49 + shipping with the board and metal frame.

But the company did not stop there. They found it rather time-consuming to set up a cluster of 200 ODROID-XU4 boards in order to test Linux kernel 4.9 stability, so they also designed ODROID-MC1 (MC = My Cluster), a cluster with 4 boards, a metal frame and a large USB-powered heatsink.

The solution is based on the same ODROID-XU4S boards, minus the SATA parts. It’s also stackable, so building that 200 board cluster should be much easier and faster to do. The solution is expected to start selling for $200 around the middle of September, and on the software side some forum members are working on Docker-Swarm. Hardkernel is also interested in sending samples to people who have cluster computing experience.

Thanks to Nobe for the tip.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

Looks very nice! Nearly what i want. But a little more connections would be nice, e.g. a version with 2x SATA or a second USB port. But this is of course a chipset limitation.

They have something called the mycloud 2, which has 2 SATA controllers and allows for RAID. Although it’s more expensive than the HC1.

Win product right there.

Great ideas, glad they listened to the community and brought 2 awesome products from those ideas. I really like the metal case and heatsink. The HC1 is something I’ll definitely try out now that it can run 4.9.

From a hardware engineering perspective it does not make any sense to expose an additional USB2.0 port if you have two USB3 host controllers. You use one for storage with UAS, which I can see not tolerating a hub because of the increased number of endpoints and overhead. But why would you not pair the other USB3 host with a hub for GbE Ethernet and an external USB port?

Very well done! I’ll prepare another OMV image disabling the rather inefficient Cloudshell 2 scripts based on detection of the internal USB hub soon. If Hardkernel wants me to test OMV on this board they could contact me through OMV forum.

@cnxsoft: Board design and the availability of the unpopulated 12V header let me believe they also thought about the 3.5″ use case (just as suggested few weeks ago but with RK3328 instead of Exynos in mind — see bottom of this post).

Huh !

How arrogant can you get, tkaiser??

On what level do you think this is an arrogant request? Hardware manufacturers are used to put out their investment of merely a couple thousand of US$ for hardware development all the time, expecting to get long term software support for free, from open source projects that equal software engineering worth a couple 10k $ a month…

@tkaiser

Please check your PM box in odroid-forum. We already sent you a PM before cnx posted this news.

Xalius:

sorry, but did not get your point?

I wonder if that T628 MP6 could be used solely as an OpenCL device.

@blu

Yes, OpenCL is officially supported on it.

@blu

as far as i understand, it can.

I think both GigE and SATA are on USB3 so there’s little room for more bandwidth there. If the chip has native USB2, it can be quite useful for external backups compared to a possibly already overloaded USB3.

They thought about my build farm use case 😉 Actually it could be an inexpensive option for this. At $200 for 4 boards, it’s cheaper than RK3288 at ~$60 a piece for about the same performance level, and here the CPU should be properly cooled down.

@willy

There are two (2) USB3 ports provided by the SoC. One goes to GB Ethernet, and the other goes to SATA. They are not shared and there is no hub.

@crashoverride

Ah that’s pretty cool. This further explains the lack of any extra USB3 port then!

So into each unit for 50usd one can plug two hdd’s? since it has two usb3 interfaces? Is there place for two hdd’s in the metal case? Or should i buy two units for a two disk setup?

Why aren’t they using a 64-bit cpu? the Samsung Exynos5422 Cortex™-A15 2Ghz and Cortex™-A7 Octa core CPUs are documented as being 32-bit processor core licensed by ARM Holdings implementing the ARMv7-A architecture.

64-bit cpus have ARMv8 which is a better chip for this kind of stuff, isn’t it?

The other point I’m also unimpressed with is the RAM? just 2GB. real motherboards like Supermicro AMD-EPYC or Intel based all have 512GB+ RAM capacity we can throw onto the board. 2GB is SO LITTLE!!!

@Mark

You can only use one drive per $49 unit. The USB 3.0 interfaces are used internally in the board one for Gigabit Ethernet, one for SATA. So you’re right if you want two hard drives you’d need to get two units, unless you are happy with using the USB 2.0 port, but this will obviously lower performance.

@willy

is cpu cache relevant in your use case ?

i noticed exynos-5422 has 2MB for A15 cluster while rk3288 has 1MB.

then there’s also a bit more max frequency + the additional ‘little’ cluster in 5422, so i wonder if it might perform significantly better at compiling software

by the way, i advertised your work in odroid forums, you might be contacted by hardkernel in the next few weeks (no guarantee though :p)

@nobe

Yep, CPU cache is very important, just like memory bandwidth. In fact the build performance scales almost linearly with memory bandwidth. With twice the cache we could expect a noticeable boost. I’ve read on their forums that XU4 uses 750 MHz DDR3 frequency vs 933 on XU3 and 800 on MiQi. In all cases that’s dual-channel 32-bit so it’s more or less equivalent. The Cortex A15 is supposed to be slightly faster on certain workloads, with peaks around 3.5 DMIPS/MHz vs about 3.0. Also I’ve always suspected that the RK3288 with its A12 renamed to A17 doesn’t benefit from all the latest improvements that used to differentiate the inexpensive A12 from the powerful A17 at the beginning (that said it’s very fast). So while the A15 sucks a lot of power, it runs at an 11% higher frequency and may have a slightly higher IPC so it might be worth a try, especially at this price point.
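As a rough sanity check of the figures in this comment, the sketch below recomputes the clock advantage and a theoretical per-core peak. The 1.8 GHz value for the RK3288 is an assumption inferred from the "11% higher frequency" remark and the MiQi clock mentioned later in the thread. The DMIPS/MHz numbers are the approximate peaks quoted above, not measurements.

```python
# Figures quoted in the comment above; the DMIPS/MHz values are rough
# peak numbers from that comment, not benchmark results.
A15_MHZ, A15_DMIPS_PER_MHZ = 2000, 3.5  # Exynos 5422 big cluster
A17_MHZ, A17_DMIPS_PER_MHZ = 1800, 3.0  # RK3288 (assumed 1.8 GHz)


def clock_advantage_pct(a_mhz: float, b_mhz: float) -> float:
    """Percentage by which clock a exceeds clock b."""
    return (a_mhz / b_mhz - 1) * 100


def peak_dmips(mhz: float, dmips_per_mhz: float) -> float:
    """Theoretical single-core peak, ignoring cache and memory bandwidth."""
    return mhz * dmips_per_mhz


print(f"clock advantage: {clock_advantage_pct(A15_MHZ, A17_MHZ):.1f}%")
print(f"A15 peak: {peak_dmips(A15_MHZ, A15_DMIPS_PER_MHZ):.0f} DMIPS")
print(f"A17 peak: {peak_dmips(A17_MHZ, A17_DMIPS_PER_MHZ):.0f} DMIPS")
```

Since build performance is said to scale mostly with memory bandwidth, these peaks are best read as an upper bound rather than a prediction.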

Regarding the “little” cluster, I’m really not interested in using it. Cortex A7 is so slow at this task that if you’re unlucky and a large file ends up on such a core, it may simply last for the total build time on small projects. And the added benefit on a large project like the kernel is around 10-15% max (keep in mind that all cores still share the same memory bandwidth). I think instead that disabling these cores will save some heat and avoid some throttling.

BTW, thanks for your message on their forums.

Because that’s not needed for this type of workload and with this amount of memory.

Not necessarily. The only other CPU you’ll find in this price range is the A53, which is very rarely available above 1.5 GHz and which clock-for-clock is just half of the performance of the A15. The A15 is of the same generation as the A57, ie a very powerful one but power-hungry.

That’s a lot for many of us using embedded devices. If I could have half of it for less money, I definitely would. 512 MB is far more than enough for my build farms for example.

You seem to be comparing apples and oranges. Take a look at the cost of your motherboard fitted with 512GB, look at the cooling system, the power supply and all other costs and tell us if you manage to get all of this fanless in such a small box for only $50. I guess not. A lot of us are more interested in performance per dollar, performance per watt, performance per cubic meter, or performance per decibel. All these factors count.

@cnxsoft Ceph requires a 64-bit CPU, so no Ceph on here though you could run a CephFS *client* which would be functionally identical to say an NFS client, just backed by a Ceph cluster running on other 64-bit machines.

Now a stackable machine that is cheap and works with Ceph? That’s the Espressobin in a nutshell.

@CampGareth

as far as i understand, 64-bit is required in X86 world, not in ARM world

so i think ceph can work with armv7

http://docs.ceph.com/docs/master/start/hardware-recommendations/

@ODROID

[x] done. Thanks for the offer, I’m happy to test with the new board variants and report back. In the meantime I remembered that most probably no new OMV image will be needed since I implemented Cloudshell 2 detection already so the CS2 services won’t be installed on HC1 anyway.

BTW: If heat dissipation is sufficient and HC1 will be available via german resellers within the next 3 weeks you already sold ~20 boards. 🙂

@willy

Very interested in your findings on whether/how putting cpu0-cpu3 offline affects performance in your use case. I made some performance tests some time ago on XU4 comparing only either little or big cores but didn’t think about comparing ‘big cores only’ with all cores active. Unfortunately on my XU4 I already run into throttling issues with heavy loads only on the big cores even with spinning fan so I’ll wait for the HC1s arriving since I would assume heat dissipation being much more efficient there (when combined with a fan).

I am a big fan of ceph and have been thinking along these same lines. Glad to see the new hardware out. But Ceph requires a gig of RAM per terabyte of storage. After OS overhead etc. You can only put 1T disks in these. Has anyone even had ceph running on arm or odroid yet?
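The rule of thumb in this comment (about 1 GB of RAM per TB of storage per OSD daemon) can be turned into a quick estimate. This is only a sketch: the 0.5 GB OS overhead is an assumed placeholder, not a measured value for any ODROID image.

```python
def max_osd_storage_tb(total_ram_gb: float, os_overhead_gb: float,
                       ram_gb_per_tb: float = 1.0) -> float:
    """Storage one OSD can serve under the ~1 GB RAM per TB rule of thumb."""
    usable_ram = max(total_ram_gb - os_overhead_gb, 0.0)
    return usable_ram / ram_gb_per_tb


# ODROID-HC1 has 2 GB RAM; the 0.5 GB OS overhead is an assumption.
print(max_osd_storage_tb(total_ram_gb=2, os_overhead_gb=0.5))
```

With those inputs the estimate lands between 1 and 2 TB per board, which is consistent with the "1T disks" remark above.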

@nobe

Unfortunately Ceph hardware requirements do not mention that using Ceph (or any other cluster FS) without ECC DRAM is not the greatest idea. And on most ARM devices with Ceph we run in a nice mismatch between available DRAM and supported storage sizes anyway.

@nobe

If it’s possible they’re certainly not supplying packages. Have a look at the package repository under /debian-kraken/dists/xenial/main/binary-armhf/ and what you’ll find is a list of included packages 1.2KB long (CephFS client and ceph-deploy). If you look at the arm64 section you’ll find a 56KB long list of packages (the entire suite).

Practical upshot of this is you won’t have to compile your own if you’re on 64bit ARM.

Oh and tkaiser’s right in that ECC is recommended, as is a lot of RAM and compute power. Realistically you can tweak around the RAM requirements and get usage as low as 100MB idle and 500MB in rebuild. ECC… hmm, it’s classically required because normally you have a single big box that can Never Fail Ever(tm) but clustered storage should mean you don’t need it. If a node starts having small amounts of memory errors then the data on disk will be slightly off but checksums and 2 other good copies will let you detect and correct that automatically. If a node starts having many errors it’ll get kicked out of the cluster. I’m still not sure whether I’d trust it in production but that’s only because it hasn’t been tested thoroughly, which is why I want to test it for my low-priority home storage.

Ceph can run on inexpensive commodity hardware. Small production clusters and development clusters can run successfully with modest hardware.

| Process | Criteria | Minimum Recommended |
|---|---|---|
| ceph-osd | Processor | 1x 64-bit AMD-64; 1x 32-bit ARM dual-core or better |
| | RAM | ~1GB for 1TB of storage per daemon |
| | Volume Storage | 1x storage drive per daemon |
| | Journal | 1x SSD partition per daemon (optional) |
| | Network | 2x 1GB Ethernet NICs |
| ceph-mon | Processor | 1x 64-bit AMD-64; 1x 32-bit ARM dual-core or better |
| | RAM | 1 GB per daemon |
| | Disk Space | 10 GB per daemon |
| | Network | 2x 1GB Ethernet NICs |
| ceph-mds | Processor | 1x 64-bit AMD-64 quad-core; 1x 32-bit ARM quad-core |
| | RAM | 1 GB minimum per daemon |
| | Disk Space | 1 MB per daemon |
| | Network | 2x 1GB Ethernet NICs |

This looks almost exactly what I have been looking for.

Question though, are the cases all open, or do the pictures just not show a complete case?

@CampGareth

OK, so Ceph might be a challenge on those… Is there another way that would make multiple ODROID-HC1 kits appear like one?

@tkaiser

Regarding the use of CPU cores, if at least I can have all the performance I need out of the big cluster, I may leave the other ones running for “low-performance” tasks which do not heavily compete with gcc, such as ssh, network interrupts, or maybe even distccd if I patch it to perform sched_setaffinity() before executing gcc. That may allow it to decompress the incoming traffic on the little cores and distribute requests to the heavy ones. The benefit here is not much to gain a bit more CPU, rather to lower the accept latency by not having to wait a full time slice to prepare the next request. Then it might help to increase the scheduler’s latency to 10ms to reduce context switching. I’ll very likely buy one (board or cluster depending on what is possible) to test once available.
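The "set affinity, then exec the compiler" idea can be sketched in a few lines. This is not the distccd patch itself, just the same pattern in Python. It assumes cpu0-3 are the Cortex-A7 cores and cpu4-7 the Cortex-A15 cores, as suggested by the cpu0-cpu3 remark earlier in the thread. `pick_cpus` and `run_on` are hypothetical helper names.

```python
import os

LITTLE_CORES = {0, 1, 2, 3}  # assumption: Cortex-A7 cluster
BIG_CORES = {4, 5, 6, 7}     # assumption: Cortex-A15 cluster


def pick_cpus(wanted, available):
    """Return the wanted CPUs that exist, or all available ones as fallback."""
    chosen = set(wanted) & set(available)
    return chosen or set(available)


def run_on(wanted, argv):
    """Pin the current process to the chosen cores, then exec the command.

    Linux only: os.sched_getaffinity/os.sched_setaffinity are not
    available on other platforms. This call does not return on success.
    """
    available = os.sched_getaffinity(0)
    os.sched_setaffinity(0, pick_cpus(wanted, available))
    os.execvp(argv[0], argv)


# Example (not executed here): run_on(BIG_CORES, ["gcc", "-c", "foo.c"])
```

The affinity mask is inherited across `exec`, so the compiler and its children stay on the big cluster while the little cores remain free for network and housekeeping work.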

Only in a theoretical world where your Ceph cluster never gets feed with new data. 🙂

If data corruption happens when data is entering the cluster you checksum already corrupt data, then distribute it across several cluster nodes and in the end you have 3 bad copies that are now ‘protected’ by checksums. I would assume with data corruption it’s just like with data loss. Talking about it is useless; you must experience this personally to get the idea why it’s important to protect yourself from it. And for understanding how often bit flips happen a really great way is to have access to a monitoring system with a lot of servers where 1 and 2 bit errors are logged. You won’t believe how often single bit (correctable with ECC!) errors occur in the wild even on servers that survived 27 hour ‘burn in memtest86+’ without a single reported error.
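The failure mode described here is easy to reproduce in miniature. In the sketch below SHA-256 is only a stand-in for whatever checksum a cluster filesystem really uses, and `ingest` is a hypothetical helper that checksums the data as received and stores three copies.

```python
import hashlib


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with one bit inverted."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


def ingest(data: bytes, replicas: int = 3):
    """Checksum the data as received and store `replicas` copies."""
    digest = hashlib.sha256(data).hexdigest()
    return digest, [data] * replicas


original = b"important payload"
corrupted = flip_bit(original, 5)   # bit flip in RAM before checksumming
digest, copies = ingest(corrupted)

all_verify = all(hashlib.sha256(c).hexdigest() == digest for c in copies)
matches_original = copies[0] == original
print(all_verify, matches_original)
```

Every replica verifies against the stored checksum, yet none of them matches what the client originally sent.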

Summarizing this: to get reliable Ceph nodes based on ARM hardware you want SoCs like Marvell ARMADA 8040 combined with maximum amount of ECC DRAM since otherwise storage capacity per node is laughable. And then it gets interesting how price/performance ratio looks like compared to x64.

@willy

Yeah, I would assign those other tasks to the little cores with your compile farm setup. In my testings with appropriate settings (IRQ affinity and RPS) little cores can handle all IO and network stuff at interface speed even when only allowed to clock at ~1.1GHz without becoming a bottleneck. For NAS use cases I also use the little cores for IRQ processing and send the daemons that do the real work on the big cores (being lazy using taskset command from userspace 😉 )
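For readers who have not set IRQ affinity or RPS by hand: both take a hexadecimal CPU bitmask. The sketch below only computes and prints the values. The IRQ number and interface name are hypothetical placeholders, and the cpu0-3 = little / cpu4-7 = big mapping is an assumption based on earlier comments.

```python
def cpu_mask(cpus) -> str:
    """Hex bitmask with one bit set per CPU number, the format used by
    /proc/irq/<n>/smp_affinity and .../queues/rx-<q>/rps_cpus."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return format(mask, "x")


LITTLE = range(0, 4)  # assumption: cpu0-3 are the Cortex-A7 cores
BIG = range(4, 8)     # assumption: cpu4-7 are the Cortex-A15 cores

# Hypothetical IRQ number and interface name; look up the real ones in
# /proc/interrupts and with `ip link` on the actual board.
IRQ, IFACE = 123, "eth0"

settings = {
    f"/proc/irq/{IRQ}/smp_affinity": cpu_mask(LITTLE),
    f"/sys/class/net/{IFACE}/queues/rx-0/rps_cpus": cpu_mask(LITTLE),
}

for path, value in settings.items():
    # Printed rather than written, since applying them needs root.
    print(f"echo {value} > {path}")
```

The daemons doing the real work can then be started on the big cores with something like `taskset -c 4-7 <command>`.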

BTW: You might want to compare tinymembench results on your MiQi with XU4 numbers: discuss.96boards.org/t/odroid-xu4-cortex-a15-vs-hikey-960-a73-speed/2140/22

@Mike Schinkel

According to ‘ODROID’ they’re preparing a transparent plastic cover (where my last little remaining concern — position of the SD card slot — is addressed and also explained: ‘If user assembles the cover, the exposed length of the SD card is only 2~3mm.’).

A little bit delayed due to a molding tool problem but the best place to ask/look is forum.odroid.com of course 🙂

@Mike Schinkel

Mike,

I think this pretty much covers everything in your use case. The physical case is a minor technicality that can be resolved easily – 3D print over the alu extrusion.

that’s interesting.

also, keep in mind that this exynos-5422 uses PoP DRAM.

so, if i understand correctly, the DRAM chip is placed just on top of the SoC.

i’ve wondered for a long time how much the SoC temperature can interfere with DRAM performance.

i suspect it shouldn’t matter when solely using tinymembench, but this might matter when compiling a kernel for example.

Absolutely. Given that you dropped the idea to ignore the little cores you guys (ant-computing.com) are the perfect match to explore cluster capabilities with MC1 (or HC1 if the latter isn’t yet available). Having another 4 slow cores available on the same SoC makes up for some minor challenges as well as some great opportunities IMO with your use case.

And wrt @nobe‘s DRAM performance concerns testing through this quickly would also be easy with MC1/HC1 (just check tinymembench results with an external fan to cool everything down and then again with a hair dryer heating the large heatsink up transferring the heat into an idle SoC).

I’ll run tinymembench later on my XU4 to check results and if they differ from the above link I’ll drop you a note here (just busy now packaging some USB3-to-SATA bridges I bought on Aliexpress to send them to Linux UAS maintainer)

@cnxsoft

GlusterFS has packages available for a load of debian versions and would do something similar. It’s a bit more simplistic, from what I can gather a gluster client just treats all the servers as if they were individual NFS shares but presents them as one to the user. The user then configures striping across shares on the client side.

@tkaiser I forgot about incoming data, you’re right that’ll hit one node then get copied across the others. Still it must be possible to get around the ECC requirement, it just isn’t common in the ARM world and in the x86 one requires xeons with their markup. In fact here’s a quote from WD’s experiment with Ceph running on controllers attached to the drives themselves:

“The Converged Microserver He8 is a microcomputer built on the existing production Ultrastar® He8 platform. The host used in the Ceph cluster is a Dual-Core Cortex-A9 ARM Processor running at 1.3 GHz with 1 GB of Memory, soldered directly onto the drive’s PCB (pictured). Options include 2 GB of memory and ECC protection. ”

ECC optional! Why on earth would they make an enterprise product without it by default though? Ultimately I want to do some tests.

This is also from 2016 before the 32-bit ARM packages got dropped.

https://pastebin.com/sXn4fx6A (I can confirm by looking at monitoring clockspeeds that CPU affinity worked, also no throttling occurred when running on the big cores but results are somewhat surprising)

Huh? You realized that even JEDEC talks these days about optional on-die ECC for LPDDR4 since density increased that much that we risk today way more bit flips than in the past. It’s also not that a memory module becomes ‘bad’ over time. It’s just… bit flips happen from time to time for whatever reasons. When you have no ECC you either won’t take notice (silent data corruption) or if the bit flips affect pointers and stuff like that you see an app or the whole OS crashing (and blame software as usual). With ECC DRAM 1 bit errors are corrected (ECC –> error correction-code!) and 2 or more bit errors are at least monitored (if there’s an admin taking care). And if you really love your data you use even more redundancy to be able to recover even from multiple bit errors happening at the same time (see IBM’s Chipkill for example)
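To make "1 bit errors are corrected" concrete, here is a toy Hamming(7,4) code. It is an illustration of the principle only: real ECC DRAM uses wider SECDED codes implemented in hardware, and plain Hamming(7,4) as shown cannot detect double-bit errors reliably.

```python
def encode(d):
    """Encode 4 data bits as a 7-bit Hamming codeword (positions 1..7)."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def decode(c):
    """Return (data bits, error position); position 0 means no error seen."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    pos = s1 + 2 * s2 + 4 * s3  # syndrome = 1-based index of the flipped bit
    if pos:
        c[pos - 1] ^= 1         # correct the single-bit error
    return [c[2], c[4], c[5], c[6]], pos


word = [1, 0, 1, 1]
stored = encode(word)
stored[4] ^= 1                  # simulate a single bit flip in memory
recovered, position = decode(stored)
print(recovered == word, position)
```

The three parity bits are the "bit of redundancy" that lets the decoder locate and undo the flip, which is the same idea a storage cluster applies at a much coarser level.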

BTW: ECC is the same happening at the memory controller layer that your whole Ceph concept wants to do across a cluster adding whole computers for redundancy. Why do people accept the concept of redundancy/checksumming if it’s about adding expensive nodes to a cluster but refuse to understand the concept of redundancy/checksumming if it has to happen at a much lower layer? It’s the same concept and it addresses the same problem just at different layers. And computers simply start to fail if memory contents become corrupt so where’s the point in ‘getting around the ECC requirement’?

Wow, the results are indeed surprising and not quite good. And the lower cores definitely are not impressive either.

Here’s the one on the MiQi at 1.8 GHz (no throttling either) : https://pastebin.com/0WMHQN09

@MickMake

Well, based on Mike’s questions over the past few months I would assume he needs high random IO values with a connected mSATA SSD. So since recently combining the so called ‘Orange Pi Zero NAS Expansion Board’ with ‘Orange Pi Zero Plus’ (Allwinner H5, Gigabit Ethernet, connecting to the NAS Expansion board via USB2 on pin headers) might be an inexpensive option.

@willy

Yes, I did not trust in either so decided to re-test. Since I was testing with my own OMV/Armbian build I added the below stuff to /boot/boot.ini, tested, adjusted DRAM clockspeed to 933 MHz as per Hardkernel’s wiki, tested again (better scores) and then tried out also their stock official Ubuntu Xenial image (also using 4.9.x). Results: https://pastebin.com/iBCq6p9U

I did not test with their old Exynos Android kernel (3.10 or 3.14, don’t remember exactly) but obviously this is something for someone else to test through since the numbers in 96boards forum look way better than those that I generated with 4.9 kernel now.

@tkaiser

It’s a granularity of cost thing. I can’t justify spending £1000 on a new server (and even old servers are expensive if you want to fill them with RAM at £15 per 8GB) but I can justify spending £30 33 times. As an added bonus if that one £1000 server blows up it’d take months to replace, but with the SBCs I might not even care.

It’s possible that you will hardly go higher. Keep in mind that one of the nicest improvements from A15 to A17 was the redesign of the memory controller and the cache. It’s possible that a lot of bandwidth is simply lost on A15 and older designs. I remember noticing very low bandwidth as well on several A9 for example.

Does it have full uboot and mainline kernel support like rpi and sunxi?

@goblin

It runs Kernel 4.9 LTS with U-boot 2017.07

Kernel : https://github.com/hardkernel/linux/tree/odroidxu4-4.9.y

U-boot : https://github.com/hardkernel/u-boot/tree/odroidxu4-v2017.05

@goblin

The vendor maintains its own 4.9 LTS branch (which most people keen on NAS performance use, since ‘USB Attached SCSI’ works here) but developers have also been running the most recent mainline kernel on Exynos 5422 / XU4/HC1 for quite some time now.

@CampGareth

Well, kinda off-topic here but since Jean-Luc brought up the cluster idea (all HC1 appearing as single storage)… I’ve never seen any reliable storage cluster attempt for a few TB that didn’t suck compared to traditional NAS/SAN concepts. These setups get interesting in larger environments but for a TB I doubt it.

I also don’t understand why you’re talking about costs per server without adding storage to it (HDDs)? In the UK as well as in DE 4-bay storage servers with ECC DRAM and sufficient performance are below the 200 bucks barrier without disks, eg. a standard HP Microserver like this: https://www.amazon.co.uk/dp/B013UBCHVU/

Having added customs/VAT in mind most probably 3 HC1 cost the same as one HP Microserver. With a classical NAS/SAN approach I go with single or better double redundancy (4 HDD used in zraid2/raid6/raid10 mode) while a storage cluster dictates the redundancy setup. When talking about 4TB HDDs I end up with 8 TB usable with the NAS/SAN approach (when I vote for double redundancy, with single redundancy we would talk about 12TB already) while for the same ‘server cost’ with 3 HC1 I would end up with just 4TB available for user data since Ceph holds 3 copies.
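The capacity comparison in this comment is simple arithmetic, reproduced below as a sketch. It assumes 4 TB disks throughout, four disks in the NAS, three HC1 nodes with one disk each, and three-way replication for Ceph, exactly as stated above.

```python
def raid_usable_tb(disks: int, disk_tb: float, redundancy: int) -> float:
    """Usable capacity when `redundancy` disks' worth of space is parity."""
    return (disks - redundancy) * disk_tb


def replicated_usable_tb(nodes: int, disk_tb: float, copies: int) -> float:
    """Usable capacity when every object is stored `copies` times."""
    return nodes * disk_tb / copies


nas_double = raid_usable_tb(4, 4, redundancy=2)   # raid6 / zraid2 style
nas_single = raid_usable_tb(4, 4, redundancy=1)   # single redundancy
hc1_ceph = replicated_usable_tb(3, 4, copies=3)   # 3 HC1, 3 copies

print(nas_double, nas_single, hc1_ceph)
```

The numbers cover raw capacity only and ignore filesystem overhead on either side.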

When adding HDDs to the total costs I really do not see a single advantage for any of the storage cluster attempts if we’re talking about capacities below the PB level. And since for me ECC DRAM is a requirement having data integrity in mind I also don’t know of a single ARM based solution that could compete with x86 (Helios4 based on Armada 38x being the only exception I know of)

would be totally interesting to see this getting evolved in edge computing ecosystems :]

Today one HC1 together with some accessories arrived that Hardkernel sent out on Monday. First impression: Looks nice (not that important), heat dissipation works *very* well especially compared to XU4 fansink and to my surprise position of the heatsink fins doesn’t matter that much.

I made a few performance tests but as usual discovered a mistake I made so just another hour wasted and I’ve to redo the tests again.

Quick check whether UAS/SSD performance improved when no internal USB3 hub is in between Exynos SoC and USB-to-SATA bridge: https://pastebin.com/Ma7ejyYb (preliminary numbers since I have to retest with XU4 and an JMS578 bridge but have currently no such enclosure around since donated to Linux UAS maintainer).

Quick NAS performance tests look great but client –> HC1 performance sucks (~50 MB/s). Tested Gigabit Ethernet only with iperf3 and it’s obvious that there’s something wrong at the network layer in RX direction. Checked different 4.9 kernel versions, exchanged two times the switch between client and HC1, restarted everything… to no avail. But I’m sure that’s a problem not located at the HC1 since I did a quick check with XU4 and performance there was even worse. So I still assume there’s something wrong at my side but once this is resolved NAS performance numbers might look even better than with XU4 (though XU4 performance with UAS capable disk enclosures is hard to beat since maxing out Gigabit Ethernet already 😉 ).

Once tests are successfully finished I’ll post a link to a mini review in either Armbian or OMV forum. But to quote myself now after some hands on experiences: Very well done!

@tkaiser

There are advantages to the HC1 that you’re overlooking compared to the 4-bay NAS. Look at this from a more commercial point of view, and at what the focus group for the HC1/MC1 is:

* Encryption … per-client encryption, where each client’s files are encrypted separately with keys unique to that client.

While you can do this encryption on the “production nodes” before sending it to the storage nodes, it is simply more logical to have encryption done on the storage nodes. It separates the logic in a cleaner manner. Work nodes (MC1) do real work, storage nodes (HC1) do encryption/decryption.

If a NAS offers encryption, it’s in general disk based or the exact same encryption for all the files (or even worse, linked to a specific hardware NAS = troublesome in case of failure).

* Redundancy. Your 4-drive NAS has a single point of failure: its backplane. You also need to take into account that your 4-drive NAS will share a 1Gbit connection for all those drives. So if all the drives are accessed equally, you get at best 25MB/s per drive. That is less of an issue with the HC1, which can output 100MB/s per drive. The bottleneck then becomes your router, but any decent commercial router has no issue multiplexing several 100MB/s streams.

* ECC, while nice, is always a very disputed point as to how much it “really” helps. I ran commercial software on non-ECC servers for years, and it has never been an issue. Sometimes people are running websites etc. on non-ECC servers without even realizing it. Look at Hetzner (de) and their servers. Everything below 200 Euro is non-ECC normal hardware. KimiSurf etc ….

ECC on a NAS is even more silly. Why? Because you’re more likely to have a bad cluster or non-recoverable error on your hard disk than ever on your memory. It’s always been more of a state of mind for ECC, where the clients feel they are safer.

You’re also more likely to get data-corrupting errors from software bugs, or even, hell, design faults in your CPU. How many times did Intel/AMD ship with faults that actually resulted in data issues in rare situations? More than people realize. And those rare situations are a lot more common than the theoretical memory error issue. Especially with today’s hardware being better shielded.

ECC has been one of those things that people “expect” to help but nobody has ever been able to prove it actually works. If you want data reliability, have written checksums. Have duplicated data with checksums.

If we go back maybe 20 or 30 years, when a lot more manufacturing and product testing was more in its infancy, ECC might have been a better argument.

If you are going to use the HC1 for any production, you’re probably going to be running 2 HC1s with data duplication as a separate RAID solution. It’s not that hard to have a checksum file written next to your data, which actually is more reliable as it can detect more than memory issues: also storage medium, CPU, network stack etc 😉
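A "checksum file written next to your data" could look roughly like the following. This is a minimal sketch: the `.sha256` sidecar naming and the helper names are made up for illustration, and it runs against a temporary directory rather than a real share.

```python
import hashlib
import tempfile
from pathlib import Path


def sidecar(path: Path) -> Path:
    """Hypothetical naming scheme: data file name plus '.sha256'."""
    return path.with_name(path.name + ".sha256")


def write_with_checksum(path: Path, data: bytes) -> None:
    """Write the data and store its SHA-256 digest in a sidecar file."""
    path.write_bytes(data)
    sidecar(path).write_text(hashlib.sha256(data).hexdigest())


def verify(path: Path) -> bool:
    """Recompute the digest of the data file and compare with the sidecar."""
    expected = sidecar(path).read_text().strip()
    return hashlib.sha256(path.read_bytes()).hexdigest() == expected


with tempfile.TemporaryDirectory() as tmp:
    blob = Path(tmp) / "blob.bin"
    write_with_checksum(blob, b"backup data")
    ok_when_intact = verify(blob)
    blob.write_bytes(b"backup dat4")  # simulate on-disk corruption
    ok_when_damaged = verify(blob)

print(ok_when_intact, ok_when_damaged)
```

This catches damage that happens after the checksum was computed. It does not help if the data was already corrupted in memory before `write_with_checksum` ran.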

@Benny

I’m still not convinced about Ceph or other storage cluster use cases here, especially when it’s just about a few nodes. And it will be close to impossible to convince me giving up on ECC (regardless at which level, since ECC is just adding a bit redundancy to be able to identify/correct problems) since I’ve access to a rather large monitoring system collecting DRAM bit flips that do happen from time to time. With ECC DRAM and server grade hardware you get notified and single bit flips also get corrected (see mcelog in Linux) without ECC it just happens. Depending on where it happens you get either nothing, bit rot, applications or even the whole system crashing.

And yes, it’s 2017 now. Not caring about data integrity at every level is somewhat strange. Fortunately there is more than one choice we have today to deal with this at the filesystem level (ZFS, btrfs and also some solutions that maintain external checksums for whatever reasons). BTW: I’m not talking about replacing ECC DRAM with checksummed storage but of course combining them 🙂

Hmm, why is there only a partial case? Is there a lid I am not seeing?

Yes, maybe not the best for Ceph, but if you are just needing something small for redundancy, I would think two of these with gluster would be fine.

See comment #35 above.

[x] Done. Mini review online: https://forum.armbian.com/index.php?/topic/4983-odroid-hc1/

When following some suggestions — eg. https://forum.odroid.com/viewtopic.php?f=96&t=27982 — I came to different conclusions staying with ondemand cpufreq governor but moved the IRQ handler for the Gigabit Ethernet chip from a little core to a big core to guarantee full GbE network utilization in all situations.

Depending on use case (using the big cores for other demanding stuff) this is something that users might want to revert. But due to HC1 being a big.LITTLE design playing with IRQ/HMP affinity might be really worth the efforts with non standard or special use cases.

What about the NanoPi Duo available in the meantime? https://forum.armbian.com/index.php?/topic/5035-nanopi-duo-plus-mini-shield