SolidRun MACCHIATOBin is a mini-ITX board powered by a Marvell ARMADA 8040 quad-core Cortex-A72 processor clocked at up to 2.0 GHz. It is designed for networking and storage applications thanks to 10 Gbps, 2.5 Gbps, and 1 Gbps Ethernet interfaces, as well as three SATA ports. The company is now taking orders for the board (FCC waiver required), with prices starting at $349 with 4GB RAM.

MACCHIATOBin board specifications:

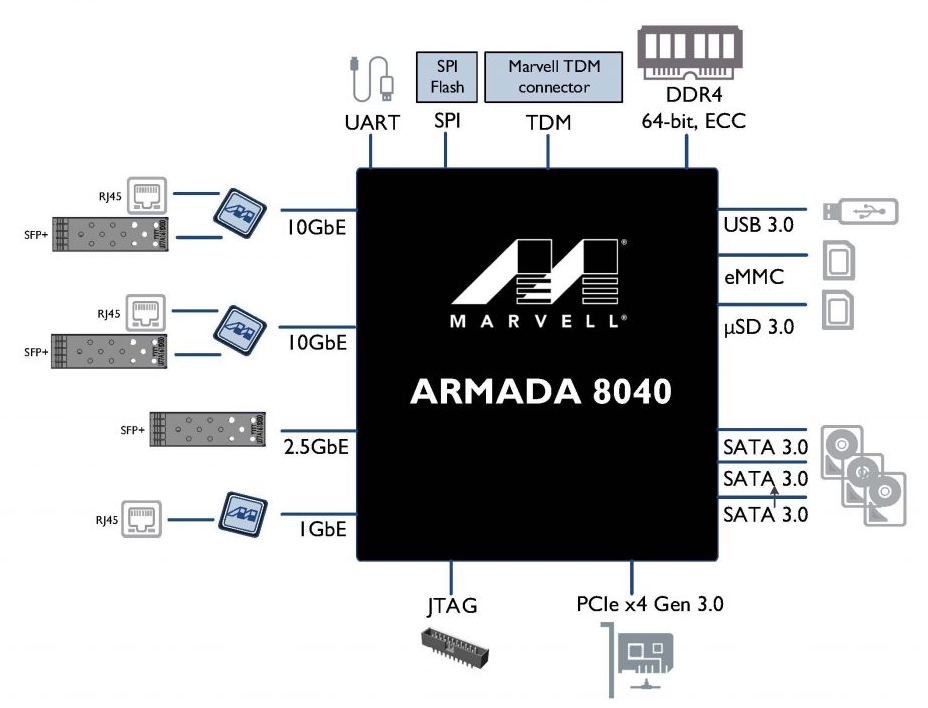

- SoC – ARMADA 8040 (88F8040) quad core Cortex A72 processor @ up to 2.0 GHz with accelerators (packet processor, security engine, DMA engines, XOR engines for RAID 5/6)

- System Memory – 1x DDR4 DIMM with optional ECC and single/dual chip select support; up to 16GB RAM

- Storage – 3x SATA 3.0 ports, micro SD slot, SPI flash, eMMC flash

- Connectivity – 2x 10Gbps Ethernet via copper or SFP, 2.5Gbps via SFP, 1x Gigabit Ethernet via copper

- Expansion – 1x PCIe-x4 3.0 slot, Marvell TDM module header

- USB – 1x USB 3.0 port, 2x USB 2.0 headers (internal), 1x USB-C port for Marvell Modular Chip (MoChi) interfaces (MCI)

- Debugging – 20-pin connector for CPU JTAG debugger, 1x micro USB port for serial console, 2x UART headers

- Misc – Battery for RTC, reset header, reset button, boot and frequency selection, fan header

- Power Supply – 12V DC via power jack or ATX power supply

- Dimensions – Mini-ITX form factor (170 mm x 170 mm)

The board ships with either 4GB or 16GB DDR4 memory, a micro USB cable for debugging, 3 heatsinks, an optional 12V DC/110 or 220V AC power adapter, and an optional 8GB micro SD card. The company also offers a standard mini-ITX case for the board. The board supports mainline Linux or Linux 4.4.x, mainline U-Boot or U-Boot 2015.11, UEFI (Linaro UEFI tree), Yocto 2.1, SUSE Linux, netmap, DPDK, OpenDataPlane (ODP) and OpenFastPath. You’ll find software and hardware documentation in the Wiki.

The Wiki actually shows the board for $299 without any memory, but if you go to the order page, you can only order a version with 4GB RAM for $349, or one with 16GB RAM for $498. Adding the optional micro SD card and power adapter to the 16GB version brings the price up to $518.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

so that’s both “mini-ITX” and “thin-mini-ITX”?

Which devices are supported on PCIe Gen 3.0 x4? I mean, can we install an M.2 NVMe SSD? An external sound card? An x4 external GPU? etc…

The picture above mentions ECC DRAM, but on the order page it’s not obvious which type of DRAM is part of the packages. Would be great to get some clarification here.

@hex

Do you know of a single PCIe GPU with ARM drivers? And good luck finding x4 GPUs anyway 😉

Ooooh yes please! An ARM board that supports ECC RAM, has good networking, processing power, is 64 bit, and has plenty of storage interfaces and PCI-E for yet more storage. Now if only it weren’t more expensive than an x86 server but I guess if size matters it still wins, at least until you think about a place to put the HDDs.

@tkaiser

Some GPUs work in PCIe-x4 mode via an extender (as their physical connectors are never x4). The driver matter is more complicated – open-source drivers for PCIe GPU are traditionally either (a) for older hw, or (b) notably sub-par in features and performance compared to the vendor’s drivers.

@blu

Sure but any decent GPU wants PCIe 3.0 x16 to perform well. And it should be obvious that any x86 mini-ITX board for $50 will perform better than this here if it’s about graphics output?

IMO if you’re not a developer and/or want to look into ODP and friends with 10GbE it’s not that easy to find use cases for this board.

@tkaiser I have a Radeon HD5450 in my AArch64 box (via an x8->x16 riser). Works fine with two FullHD monitors attached.

I also used a Matrox G550 (PCIe x1) in the past to check whether it works.

The problem with PCIe graphics cards in an AArch64 box is that they are not initialized by firmware, so you do not have output until the kernel initializes the card.

@tkaiser

It’s not a problem. Just use an x1 to x16 riser. For games, the performance difference between x1 and x16 is minimal, something like 15%. It would also solve any power delivery problems, as the risers typically take power only from an external 12V source, not from the slot.

The eGPU guys have a good performance comparison of graphics cards at different link speeds if you’re that concerned about harming performance. The tl;dr is that for gaming, the link speed really doesn’t matter much.

https://www.pugetsystems.com/labs/articles/Impact-of-PCI-E-Speed-on-Gaming-Performance-518/

Or buy an x16 to x16 riser and cut the x16 PCB to fit the x4 slot. If they hadn’t placed the JTAG connector next to the PCI-E slot you could even remove the plastic from the x4 to make it an open-ended slot.

Chiming in on 4x PCI-E 3.0: that’s about as much as you’ll get with Thunderbolt 3, and the external GPUs using that, while not common, are well-tested. As for whether they bottleneck GPUs, yes, undoubtedly, to the point where there’s no sense buying more than a mid- to high-range card: https://www.notebookcheck.net/Razer-Core-External-GPU-and-Razer-Blade-Laptop-Review.213526.0.html

I am amazed that you’ve found a driver without a single x86 binary blob buried within, that makes PCI-E on ARM a lot more interesting to me.

@Marcin Juszkiewicz

Nice! But the Radeon HD5450 is a PCIe 2.1 card, so in this combination you would end up with a rather lousy PCIe 2.x x4 connection (and the whole combination would still be somewhat strange)? IMO the best fit for the PCIe slot here is an M.2 or SFF-8639 adapter or a SAS HBA to add some fast spinning rust.
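For a rough sense of what "PCIe 2.x x4" versus "PCIe 3.0 x4" means in bandwidth terms, here is a minimal Python sketch. It relies only on the published per-lane signalling rates and line encodings (5 GT/s with 8b/10b for Gen 2, 8 GT/s with 128b/130b for Gen 3). The function name `pcie_bandwidth_gbytes` is made up for illustration, and the figures are theoretical one-direction maxima before packet and protocol overhead, so real-world throughput will be lower.

```python
# Per-lane raw signalling rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_gbytes(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, ignoring protocol overhead.

    Illustrative helper, not part of any library.
    """
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # Usable Gbit/s per lane, times lane count, divided by 8 bits per byte.
    return gt_per_s * efficiency * lanes / 8


if __name__ == "__main__":
    for gen, lanes in [(2, 4), (3, 4), (3, 16)]:
        print(f"PCIe {gen}.x x{lanes}: {pcie_bandwidth_gbytes(gen, lanes):.2f} GB/s")
```

Under these assumptions a Gen 2 card in the x4 slot gets about 2 GB/s, roughly half of what a Gen 3 x4 device could use.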

+1

I think the board is for OpenDataPlane (ODP) and OpenFastPath. That is its main advantage, rather than the PCI-E x4 slot.

I know the Linaro guys try to find a way to use an ARM development machine, instead of x86 computer. So that board could be a candidate.

It’s not *that* much more expensive when you consider that for the price it features onboard dual-10G, both RJ45 and SFP. That’s around $100-$150 extra cost on top of your x86 board just for this.

@Mum

I’m really not concerned about ‘gaming performance’ but that people think about degrading this networking gem to a boring PC by connecting a GPU (though I can understand that Linaro folks want to do something like this 😉 ).

@Willy

Do you know of an x86 server board with CPU, 2 x 10 GbE and 1 x 2.5 GbE (and the ability to push data around at these rates) for less than the 8040’s price?

@Mum

Thanks for mentioning the position of the JTAG connector. Just did a search for PCIe 3.0 x4 cards that physically fit. Found nothing except a passive adapter card for 2 x M.2 SSDs or TB3 controllers with Intel’s Alpine Ridge controller. It seems there exists not a single PCIe 3 x4 HBA (they’re all x8) so with this PCIe slot you’ll either use M.2 SSDs, external Thunderbolt equipment or an outdated PCIe 2 HBA to add some storage? Now really curious why the slot is not x8 instead. Most probably I’m missing something (eg. Solid-Run providing an enclosure with 90 degree x4 to x8 riser card)?

@CampGareth

Most of the performance loss in those benchmarks was because the card was rendering to the laptop’s internal display, requiring a lot of bandwidth to move the rendered frames back to the laptop for display. If you read further into the article:

“There is still a roughly 15% disparity between the desktop and eGPU systems, however. The reason for this is that as the GPU works harder and delivers fewer frames during more taxing benchmarks, the limited bandwidth (in comparison to a direct PCI Express x16 connection) becomes less of a problem.”

The MACCHIATObin doesn’t have an internal display, so the performance hit should only be around 15%, as the eGPU guys have observed on many cards, and as I said earlier 🙂

@tkaiser

Yes, the positioning of the JTAG connector is really unfortunate. It’s a bad choice, IMHO they should have left the PCI-e slot open ended so you can install an x8 or x16 card. But maybe they’re concerned about power delivery. It’s not mentioned in the wiki if the PCI-e slot can deliver 75W as desktop PCI-e slots are rated to. Perhaps they limited the slot to x4 because those cards typically have a lower power budget than x8 and x16?

Revision 1.3 of the board has an open PCIe slot and the JTAG connector has moved, so you can plug in x16 cards without risers or anything.

I don’t know about Linaro, but this board will definitely be a dev machine for me. So I’ve been on the lookout for riser boards/extender ribbons for a while now. Which would have been unnecessary if, as tkaiser mentioned, SolidRun used a PCIe x8 connector. I guess mine will be sitting headless for a while.

They didn’t even need to put an x8 connector, they just needed to leave the x4 connector open and not put the JTAG connector right behind it…

That too. I can still try shaping the connector of an off-the-shelf PCIe x8 GPU, but I’d rather do that as a last resort.

@blu

I wouldn’t ever attempt to cut the PCB of a GPU. Why not just cut the plastic of the x4 connector and desolder the JTAG header?

@tkaiser

The closest x86 board I can think of is the X10SDV-2C-TLN2F. The D-1541 based 4C version is probably a closer match performance-wise but is more expensive. The Armada’s packet processor really puts it in a league of its own though. QoS and SPI introduce a lot of overhead and can bog down routers using general-purpose CPUs, even faster ones. A good example is the Ubiquiti Edge Routers. They’re very fast in their default configuration but the instant you turn on QoS the performance tanks.

@sandbender

Yeah, the D-1521 based X10SDV-4C-TLN2F would be closer but is also a lot more expensive. BTW: Talking about multiple 10GbE lines and Thunderbolt 3 reminded me to check ‘IP over Thunderbolt’ status with Linux kernel 🙂

@tkaiser

Now that’s a good thought! Currently TB3 to 10GbE adapters are expensive and this’d be roughly half the price. Are there any TB3 cards that aren’t vendor-specific? I can only find Asus stuff that looks like it is.

@CampGareth

Well, a ‘normal’ TB [10]GbE adapter is just a PCIe device inside an enclosure, behind the usual cable conditioner and TB controller, that is still accessed as a normal PCIe device (so even if there’s TB involved the host still talks to a PCIe attached Intel 82599 or x540 most of the time).

‘IP over Thunderbolt’ is something different but also works reasonably well even with small bandwidth hungry workgroups (we use it in some installations with a crude mixture of star and daisy-chain topology with Mac users — even the slowest MacBook Air from 2011 is able to access a NAS with +700 MB/s — MB/s not Mbits/sec). But it’s somewhat stupid to sacrifice a MacPro with its 6 TB2 ports to be the heart of such a topology so I thought let’s evaluate it, currently starting to read from lkml.org/lkml/2016/11/9/341 on.

But that’s more or less unrelated to the 2.5/10 GbE capabilities of the ARMADA 8040 and its ODP/OFP support. Would be a nice feature mix though.

Armada is 3-issue OoO while Intel is 6-issue OoO. Anyway, would also like to see some benchmarking when available since issue width is just one metric.

This is a *very* nice little board, but just for Linux users. The BSD camp seems to be out of luck, at least until someone gets proper SoC developer reference manuals, which are currently under NDA and available only if you are a Marvell customer. What a pity!

OoO issue width has a diminishing-return aspect, and as such is not as strong an indicator of performance as one might think. For instance, if you take a Huawei Honor 8 (A72@2.3GHz) and a Xeon-D1540 (@2.6GHz TurboBoost) in Geekbench (https://browser.primatelabs.com/v4/cpu/compare/1557107?baseline=2231296), and then normalize the single-core results for clock, you’ll notice that the Xeon does ~1.6 times the normalized performance of the A72, excluding the direct mem tests but including such outliers as the SGEMM test (where Xeon does 8-way AVX vs 4-way ASIMD in the A72). Not taking Geekbench as the ground truth, just giving a rudimentary example here.
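To make the "normalize for clock" step concrete, here is a small Python sketch of the arithmetic. The scores below are invented placeholders, chosen only so that the ratio comes out near the ~1.6 figure quoted above; they are not the actual Geekbench results from the linked comparison, and the helper names `per_ghz` and `normalized_ratio` are hypothetical.

```python
def per_ghz(score: float, clock_ghz: float) -> float:
    """Score divided by clock: a crude per-clock performance figure."""
    return score / clock_ghz


def normalized_ratio(score_a: float, clock_a: float,
                     score_b: float, clock_b: float) -> float:
    """How many times faster A is than B per clock cycle."""
    return per_ghz(score_a, clock_a) / per_ghz(score_b, clock_b)


if __name__ == "__main__":
    # PLACEHOLDER scores, not real benchmark data. Only the clocks
    # (2.6 GHz Xeon-D turbo, 2.3 GHz A72) come from the comment above.
    xeon_score, xeon_clock = 4160.0, 2.6
    a72_score, a72_clock = 2300.0, 2.3
    ratio = normalized_ratio(xeon_score, xeon_clock, a72_score, a72_clock)
    print(f"Per-clock ratio (Xeon / A72): {ratio:.2f}")
```

Dividing by clock assumes performance scales linearly with frequency, which memory-bound tests do not, so this is a first-order comparison at best.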

No, I don’t, and that was my point. You can actually try to build a cheap machine out of a cheap x86 mini-ITX board and a cheap dual-10G board, but it will not be that compact and might not even be that cheap in the end. And it’ll hardly have 3 SATA ports for the price or let you choose the network connectivity.

Some boards already have such PCIe connectors with the rear plastic part open. I do have one, I don’t remember where, I think it’s on the Marvell XP-GP because I seem to remember it uses a PCIe x4 and I managed to plug my myricom 10G NICs.

But on this board unfortunately you have the JTAG connector at the rear, so I think tkaiser guessed right, they’re going to sell the enclosure with the 90 degree riser.

As my macchiatobin has arrived – a small update on the board:

* the JTAG is not populated – the PCIe can be used in full, as long as the connector is changed to an open-ended one.

* the SoC contains 2 clusters of 2 cores (so the docs were right ; )

* the default cpufreq governor is on-demand
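For anyone wanting to check the governor on their own board, here is a minimal Python sketch that reads it from the standard Linux cpufreq sysfs files. It assumes the kernel exposes cpufreq under `/sys/devices/system/cpu` (it will return nothing if the driver is not loaded); the function name `read_governors` is made up for illustration. Note that the kernel spells the governor `ondemand`.

```python
from pathlib import Path
from typing import Dict

# Standard sysfs location for per-CPU cpufreq data on Linux.
CPUFREQ_ROOT = Path("/sys/devices/system/cpu")


def read_governors(root: Path = CPUFREQ_ROOT) -> Dict[str, str]:
    """Map each CPU name (e.g. 'cpu0') to its current scaling governor.

    Illustrative helper; returns an empty dict when cpufreq is unavailable.
    """
    governors = {}
    # 'cpu[0-9]*' skips sibling directories such as 'cpufreq' and 'cpuidle'.
    for gov_file in sorted(root.glob("cpu[0-9]*/cpufreq/scaling_governor")):
        cpu_name = gov_file.parent.parent.name
        governors[cpu_name] = gov_file.read_text().strip()
    return governors


if __name__ == "__main__":
    for cpu, governor in read_governors().items():
        print(f"{cpu}: {governor}")
```

The `root` parameter exists only so the function can be pointed at a test directory instead of the real sysfs tree.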

Canonical currently have issues with their arm64 deb repos, so a good portion of the packages (I use) are unavailable. Talk about sharp timing.

A good article on why active cooling is a must for the MacchiatoBin (scroll down to the bottom):

https://www.ibm.com/developerworks/community/blogs/hpcgoulash/entry/Turning_up_the_heat_on_my_ARMV8?lang=en