WiFi is a great way to provide connectivity to a large group of people, but once everybody tries to connect at the same time, the network often becomes unusable due to very high latency. The problem, which can also occur on servers on the ISP side, is usually caused by excessive buffering, known as Bufferbloat. The Bufferbloat project aims to resolve this issue both on routers, using the CoDel and fq_codel algorithms, and on WiFi, via the Make-WiFi-Fast project.
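
To get a feel for why excessive buffering translates into seconds of latency, here is a rough back-of-the-envelope sketch in Python. The buffer size, packet size, and link rates are made-up numbers for illustration, not figures from the presentation, and the function name is hypothetical.

```python
def queueing_delay_s(queued_packets: int, packet_bytes: int, link_rate_bps: float) -> float:
    """Time a newly arrived packet waits behind a full FIFO buffer, in seconds."""
    return queued_packets * packet_bytes * 8 / link_rate_bps


# Assumed example: a buffer holding 1000 packets of 1500 bytes each,
# drained at different effective link rates.
for rate_mbps in (100, 10, 1):
    delay = queueing_delay_s(1000, 1500, rate_mbps * 1e6)
    print(f"{rate_mbps:>3} Mbit/s -> {delay * 1000:.0f} ms of queueing delay")
```

The same buffer that is harmless on a fast link adds many seconds of delay once the effective rate drops, which is typically what happens on a crowded WiFi network.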

Dave Täht gave a presentation of his work on the Make-WiFi-Fast project entitled “Fixing WiFi Latency… Finally”, showing how latency was reduced from seconds to milliseconds. It’s quite technical, but two slides of the presentation clearly show the progress made.

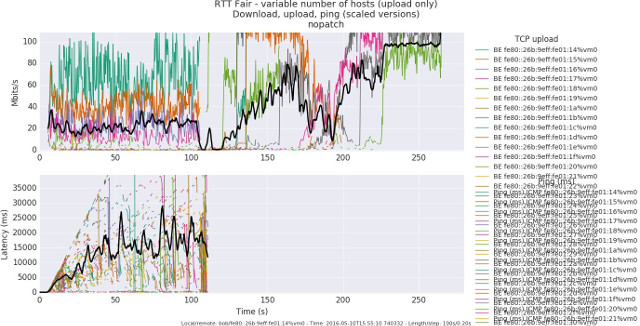

The first chart shows 100 stations connecting to a website using unpatched code, with the top of the chart showing the bandwidth per node in Mbit/s and the lower part showing latency in ms. We can see that about 5 stations can download data at up to 100 Mbit/s, but 95 stations need to wait, many give up, and after two minutes some other stations start to download again. Average bandwidth is 20 Mbit/s, and it is not evenly distributed among stations. Latency is about 15 seconds based on that chart.

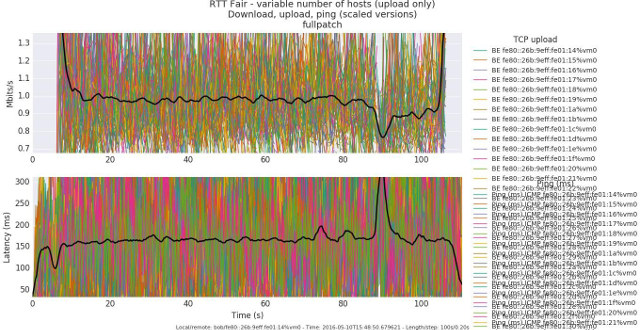

The second chart shows the same test with the make-wifi-fast patches applied to the Linux kernel, mainly improving queue handling. The chart shows many more stations being served, with an average of 1 Mbit/s, and latency is slashed to about 150 ms, meaning the vast majority of users get a much better experience with that “airtime fairness” solution.
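
The idea behind airtime fairness can be illustrated with a toy model in Python. This is only a sketch under simplifying assumptions (fixed packet size, no MAC overhead, aggregation, or retries), not the kernel's actual scheduler, and the station rates are invented for the example.

```python
PACKET_BITS = 1500 * 8  # assumed fixed packet size


def packet_fair(rates_mbps):
    """Each station sends one packet per round, however long that takes."""
    # bits divided by Mbit/s gives microseconds
    round_time_us = sum(PACKET_BITS / r for r in rates_mbps)
    per_station_mbps = PACKET_BITS / round_time_us
    return [per_station_mbps] * len(rates_mbps)


def airtime_fair(rates_mbps):
    """Each station gets an equal share of transmission time."""
    n = len(rates_mbps)
    return [r / n for r in rates_mbps]


# Three fast stations and one slow station far from the access point.
rates = [100, 100, 100, 1]
print("packet-fair :", [round(x, 2) for x in packet_fair(rates)])
print("airtime-fair:", [round(x, 2) for x in airtime_fair(rates)])
```

In this model, handing out equal packet counts lets the single slow station drag everybody down to roughly its own speed, while handing out equal airtime lets the fast stations keep most of their throughput.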

I understand the tool used to test network connectivity and generate data for the charts above is flent, the FLExible Network Tester. The video below discusses benchmarks, make-wifi-fast, and TCP BBR using the presentation slides shared above.

There’s also an article on LWN.net discussing this very topic. Make-wifi-fast project patchsets are queued for Linux 4.9 and 4.10 already, and yet-to-be-submitted patchsets for LEDE (OpenWrt fork) can be found here.

Thanks to Zoobab for the tip.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

“showing how latency was reduced from milliseconds to seconds” pretty sure that should read the other way around?

Make WiFi Fast Again? 😉

Also the video of Openwrt summit in Berlin:

https://www.youtube.com/watch?v=fFFpo_2xlfU

See also this post on the Wireless Battle Mesh mailing list:

http://ml.ninux.org/pipermail/battlemesh/2016-November/005240.html

And Toke gave a link to the LWN article:

https://lwn.net/SubscriberLink/705884/1bdb9c4aa048b0d5/

Actually, as Dave explained during WBMv8, adding a second client station will already decrease the performance of your network; you don’t need to add 100 to see a performance degradation.

Simply adding a dedicated software queue per station already improved the situation a lot:

https://www.youtube.com/watch?v=Rb-UnHDw02o
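
A toy Python sketch of why a per-station queue helps: it compares the position at which a single packet from a lightly loaded station leaves a shared FIFO versus a set of per-station queues served round-robin. The station names and packet counts are made up, and this assumes all packets are already queued when transmission starts; it is not how the kernel code is actually structured.

```python
from collections import deque


def fifo_order(arrivals):
    """Single shared queue: packets leave in arrival order."""
    return list(arrivals)


def per_station_order(arrivals):
    """One queue per station, served round-robin, one packet per turn."""
    queues = {}
    for station, seq in arrivals:
        queues.setdefault(station, deque()).append((station, seq))
    order = []
    while queues:
        for station in list(queues):  # copy keys so we can delete while looping
            order.append(queues[station].popleft())
            if not queues[station]:
                del queues[station]
    return order


# A bulk download has 100 packets queued, then one packet arrives for "voip".
arrivals = [("bulk", i) for i in range(100)] + [("voip", 0)]
print("shared FIFO position:", fifo_order(arrivals).index(("voip", 0)))
print("per-station position:", per_station_order(arrivals).index(("voip", 0)))
```

With the shared queue, the lone packet waits behind the entire bulk transfer; with per-station queues, it goes out on the first round.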

I love the packet test with humans:

https://www.youtube.com/watch?v=Rb-UnHDw02o&t=1525s

Excellent news. Thanks a ton.

The LWN article is not behind paywall anymore:

http://lwn.net/Articles/705884/