So I’ve just received a Roseapple Pi board, and I finally managed to download the Debian and Android images from the Roseapple Pi download page. It took me nearly 24 hours, as the Debian 8.1 image is nearly 2GB and neither the Google Drive nor the Baidu download links were reliable, so I had to try several times before it finally worked (mornings are usually better).

One fix is to use better servers such as Mega, at least in my experience. Another way to reduce download time, and possibly bandwidth costs, is to provide a smaller image: not a minimal image, but one with exactly the same files and functionality, optimized for compression.

I followed three main steps to reduce the firmware size from 2GB to 1.5GB on a computer running Ubuntu 14.04, but other Linux distributions should work too:

- Fill unused space with zeros using sfill (or fstrim)

- Remove unallocated and unused space in the SD card image

- Use the best compression algorithm possible. The Roseapple Pi image was compressed with bzip2, but LZMA tools like 7z usually offer a better compression ratio

This can be applied to any firmware, and sfill is usually the most important part.
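To illustrate why zero-filling matters so much, here is a minimal sketch that compresses 1 MiB of random bytes (standing in for leftover data from deleted files) and 1 MiB of zeros (what sfill -z leaves behind). The `gz_size` helper is a name made up for this example, and gzip is used only because it is installed almost everywhere; the same effect applies to bzip2 and LZMA.

```shell
# Hypothetical helper: print the gzip-compressed size, in bytes, of stdin.
gz_size() {
    gzip -9 -c | wc -c | tr -d ' '
}

# 1 MiB of random bytes simulates free space still holding old file data.
random_size=$(head -c 1048576 /dev/urandom | gz_size)

# 1 MiB of zeros simulates the same free space after zero-filling.
zero_size=$(head -c 1048576 /dev/zero | gz_size)

echo "random data: $random_size bytes after gzip"
echo "zeros:       $zero_size bytes after gzip"
```

The random block should barely shrink at all, while the zeroed block should collapse to a tiny fraction of its size, which is why unused sectors full of stale data inflate a compressed image.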

Let’s install the required tools first:

```
sudo apt-get install secure-delete p7zip-full util-linux gdisk
```

We’ll now check the current firmware file size, and uncompress it:

```
ls -lh debian-s500-20151008.img.bz2
-rw------- 1 jaufranc jaufranc 2.0G Oct 13 11:45 debian-s500-20151008.img.bz2
bzip2 -d debian-s500-20151008.img.bz2
ls -lh debian-s500-20151008.img
-rw------- 1 jaufranc jaufranc 7.4G Oct 13 11:49 debian-s500-20151008.img
```

Good, so the firmware image is 7.4GB. Since it’s an SD card image, you can check the partitions with fdisk:

```
fdisk -l debian-s500-20151008.img

WARNING: GPT (GUID Partition Table) detected on 'debian-s500-20151008.img'! The util fdisk doesn't support GPT. Use GNU Parted.

Disk debian-s500-20151008.img: 7860 MB, 7860125696 bytes
202 heads, 56 sectors/track, 1357 cylinders, total 15351808 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

                   Device Boot      Start         End      Blocks   Id  System
debian-s500-20151008.img1               1    14680063     7340031+  ee  GPT
```

Normally fdisk will show the different partitions, each with a start offset that you can use to mount a loop device and run sfill. But this image is a little different, as it uses GPT. fdisk recommends using GNU Parted (gparted is its graphical front end), but I’ve found out gdisk is also an option.

```
sudo gdisk debian-s500-20151008.img
GPT fdisk (gdisk) version 0.8.8

Partition table scan:
  MBR: protective
  BSD: not present
  APM: not present
  GPT: present

Found valid GPT with protective MBR; using GPT.

Command (? for help): p
Disk debian-s500-20151008.img: 15351808 sectors, 7.3 GiB
Logical sector size: 512 bytes
Disk identifier (GUID): 4DE7340C-7BA3-4508-B556-E774FF755B2B
Partition table holds up to 128 entries
First usable sector is 34, last usable sector is 14680030
Partitions will be aligned on 2048-sector boundaries
Total free space is 34814 sectors (17.0 MiB)

Number  Start (sector)    End (sector)  Size       Code  Name
   1            2048           32734   15.0 MiB    8300
   2           32768          106496   36.0 MiB    EF00
   3          139264        14680030   6.9 GiB     8300

Command (? for help):
```

That’s great. There are two small partitions in the image, and a larger 6.9 GiB partition starting at sector 139264. I mounted it, and filled the unused space with zeros once as follows:

```
mkdir mnt
sudo mount -o loop,offset=$((512*139264)) debian-s500-20151008.img mnt
sudo sfill -z -l -l -f mnt
sudo umount mnt
```
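The offset passed to mount is simply the partition's start sector multiplied by the 512-byte logical sector size. As a rough sketch, the helper below (the name `partition_offset` is hypothetical, not part of gdisk) reads a gdisk-style partition listing on stdin and prints that byte offset, assuming 512-byte sectors unless told otherwise.

```shell
# Hypothetical helper: print the byte offset of partition number $1,
# given gdisk's partition listing on stdin.
# $2 is the logical sector size in bytes (assumed to be 512 if omitted).
partition_offset() {
    part="$1"
    sector_size="${2:-512}"
    # Pick the table row whose first column is the partition number and
    # whose next two columns are numeric (start and end sector).
    start=$(awk -v n="$part" \
        '$1 == n && $2 ~ /^[0-9]+$/ && $3 ~ /^[0-9]+$/ { print $2; exit }')
    # Fail if no matching row was found.
    [ -n "$start" ] || return 1
    echo $((start * sector_size))
}

# Possible usage, assuming "gdisk -l" prints the same table as the
# interactive "p" command:
#   sudo gdisk -l debian-s500-20151008.img | partition_offset 3
```

For the table shown earlier, partition 3 starts at sector 139264, so the helper should print the same value as `$((512*139264))` in the mount command.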

The same procedure could be repeated on the other partitions, but since they are small, the gains would be minimal. Time to compress the firmware with 7z with the same options I used to compress a Raspberry Pi minimal image:

```
7z a -t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on debian-s500-20151008.img.7z debian-s500-20151008.img
```

After about 20 minutes, the result is a saving of about 500 MB:

```
ls -lh debian-s500-20151008.img.7z
-rw-rw-r-- 1 jaufranc jaufranc 1.5G Oct 13 14:20 debian-s500-20151008.img.7z
```
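For anyone who wants to compare compressors on their own image, a small sketch like the following may help. The `compare_compressors` function is a made-up name, it only tries tools that are actually installed, and it uses xz (LZMA2) rather than the exact 7z LZMA invocation above, so its numbers will not match the 7z result byte for byte.

```shell
# Hypothetical helper: print "<tool> <compressed size in bytes>" for each
# of gzip, bzip2 and xz that is installed, at maximum compression level.
compare_compressors() {
    file="$1"
    for tool in gzip bzip2 xz; do
        # Skip compressors that are not available on this machine.
        if command -v "$tool" >/dev/null 2>&1; then
            # -c writes to stdout, so the input file is left untouched.
            size=$("$tool" -9 -c "$file" | wc -c | tr -d ' ')
            echo "$tool $size"
        fi
    done
}

# Possible usage with the image from this post (expect a long run time):
#   compare_compressors debian-s500-20151008.img
```

Because everything is piped through wc, no compressed copies are written to disk, which is convenient when the image is several gigabytes.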

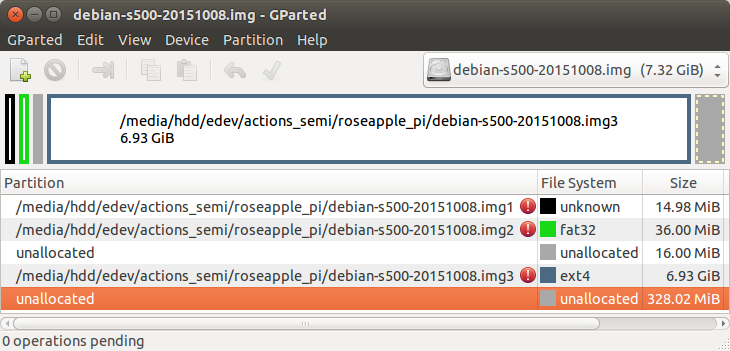

Now if we run gparted, we’ll find 328.02 MB unallocated space at the end of the SD card image.

Some more simple maths… The end sector of the ext4 partition is 14680030, which means the actual useful size is 14680030 * 512 = 7516175360 bytes, but the SD card image is 7860125696 bytes long. Let’s cut the fat further, and compress the image again.

```
truncate -s 7516175360 debian-s500-20151008.img
7z a -t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on debian-s500-20151008-sfill-remove_unallocated.img.7z debian-s500-20151008.img
```

and now let’s see the difference:

```
ls -l *.7z
-rw-rw-r-- 1 jaufranc jaufranc 1570931970 Oct 13 14:20 debian-s500-20151008.img.7z
-rw-rw-r-- 1 jaufranc jaufranc 1570849097 Oct 13 14:50 debian-s500-20151008-sfill-remove_unallocated.img.7z
```

Right… the file is indeed smaller, but it only saved a whopping 82,873 bytes, which is hardly worth it, and means the unallocated space in that SD card image must have been filled with zeros or other identical bytes.
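As a sanity check on the truncation size used above, the numbers can be recomputed with shell arithmetic. This is only a sketch based on the fdisk and gdisk output shown earlier, and the variable names are mine. One caveat: sectors are numbered from 0, so multiplying the end sector by 512 stops one sector short of the end of partition 3. In addition, the protective MBR entry ending at sector 14680063 suggests (this is my reading, not something the tools state) that the backup GPT occupies the 33 sectors after the last usable one. If so, cutting at 14680064 * 512 bytes would be the safer choice, at a cost of a few more kilobytes.

```shell
sector=512
image_bytes=7860125696   # image size reported by fdisk
last_usable=14680030     # end sector of partition 3 (last usable sector) in gdisk
mbr_end=14680063         # end sector of the protective MBR entry in fdisk

# Size used in the post: end sector multiplied by the sector size.
article_size=$((last_usable * sector))

# Sectors start at 0, so keeping sector N requires N + 1 sectors.
partition_size=$(((last_usable + 1) * sector))

# Assumption: the backup GPT ends where the protective MBR entry ends.
with_backup_gpt=$(((mbr_end + 1) * sector))

echo "truncate size used in the post: $article_size"
echo "through the end of partition 3: $partition_size"
echo "through the protective MBR end: $with_backup_gpt"
echo "unallocated tail in MiB:        $(( (image_bytes - with_backup_gpt) / 1048576 ))"
```

The last line should agree with the roughly 328 MB of unallocated space that gparted reports at the end of the image.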

There are also other tricks to decrease the size such as clearing the cache, running apt-get autoremove, and so on, but this is system specific, and does remove some existing files.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


Those Chinese manufacturers should get a VPS somewhere and host those files with a simple lighttpd (or even better, FTP and rsync). I had the same problem with BananaPi and other Chinese guys, they just don’t get it. Pan and Google Drive are free hosting, but they are not really friendly when it comes to command line downloads.

Does the efficiency of the compression algo matter much once the empty space is all zeros? Surely it’s just a matter of representing one million zeros as “zero a million times” rather than 00000….

So it seems like sfilling empty space with zeros ought to offer similar space savings, whatever the algo.

(People may want to offer images using common but less efficient compression like zip; it would be a shame if they failed to use CNX’s method because they thought they’d HAVE to use 7zip…)

@onebir

The better compression algorithm does not help with the zeros, but with the existing files.

So you could still use zip, rar, or whatever compression format you want. LZMA should decrease the size further.

I have tried to compress the firmware (after running sfill) with gzip.

So it’s still smaller than the original firmware (bzip2), but still ~400 MB larger than with lzma.

with bzip2:

A bit smaller, but still ~300MB larger. Maybe I did not use optimal settings, but it looks like it might still be worth using 7z or xz.

Can you try squashfs?

I’ve been recommended to use fstrim instead of sfill @ https://plus.google.com/u/0/110719562692786994119/posts/ceb8FeSVPAF

It is a little bit faster, and the resulting file is a tiny bit smaller (~2.4MB smaller).

@zoobab

That’s a bit different right? I’d need to change the image file system…

Not sure how to proceed easily.

@zoobab

You are right, the Google Drive download gets stuck at 880MB here. Any idea how to use wget -c and with which URL?

@zoobab

I don’t think Google Drive works with wget. There are some tools like: https://github.com/prasmussen/gdrive

For Baidu, I sometimes use aria2c (http://www.cnx-software.com/2015/01/14/downloading-files-on-baidu-or-via-http-bittorrent-or-metalink-in-linux-with-baiduexporter-aria2-and-yaaw/), as it’s a little more reliable than from the web browser.

@cnxsoft

It seems to request a captcha, which is ugly. I even have trouble reading the captcha properly.

You should check lrzip. It can compress some types of files very well, at the cost of very high RAM usage during compression.

They should distribute by torrent. This is what torrent is really excellent for.

I’m also surprised that the install is 7GB … that must be with every friggin feature enabled!

Once again, before no-check-certificate must be 2*hyphen –

something is wrong with this comment system because it always removes one

bittorrent would solve the bandwidth / reliability problem somewhat.

One solution is also to use LZ4

It can compress at very high speed (even 400MB/s on an SSD) and the receiving user can decompress the archive even faster (the only bottleneck will be disk speed)

The compression ratio is much worse than LZMA, but you can compress an 8GB file in less than 30 secs with LZ4 on a machine with a SATA2 SSD (faster with SATA3), where LZMA needs about 20 minutes.

The bottleneck for LZ4 is disk speed and for LZMA Cpu/Ram speed.

And if you need higher compress ratio you can recompress LZ4 archive with LZMA.

This gives you a compression ratio closer to native LZMA, but with a compression time 1.5-2.0 times lower (even faster, like 3-4x, with highly compressible data such as many zeroed data blocks).

An example:

8GB Odroid C1 OpenElec 6.0 image from a recycled SD card and without a zero-fill operation, so many unused blocks are not zeroed, and this decreases the compression ratio since the compression algorithm must compress and keep useless data.

Original image: 7.948.206.080 bytes

LZMA (7-Zip defaults) compressed: 2.586.505.436 bytes in 1088 secs (about 18 min)

LZ4 (level-1) compressed: 3.762.908.774 bytes in 17-18 secs

LZ4 (level-9) compressed: 3.488.373.848 bytes in 138 secs

LZ4-1+LZMA compressed: 2.993.996.128 bytes in 784 secs (about 13 min, lz4 time inclusive)

LZ4-9+LZMA compressed: 2.833.559.090 bytes in 794 secs (about 13 min, lz4 time inclusive)

@wget

It’s not ideal, but to add code / shell in comments or post, use preformatted style “pre” for example:

without \.

@Andrew

It’s probably 7.4GB because they must have installed Debian on a 8GB SD card, and simply dumped the full SD card data to the file.

Instead of using sfill or fstrim or whatever, a file of zeros written with dd does the same thing.

@Peter

How can you distinguish between actual data and unused free space with dd?

@cnxsoft

Maybe I missed something, but I assume the actual data is needed and stays in place after sfill or fstrim.

My use of dd is to mount the partition and then write a big file filled with zeros. After dd dies because there is no free space left, this big file is deleted.

But like I wrote maybe we are talking about two different things?

@Peter

Yes, we must be talking about different things.

What I needed to do is to set a zero value to unused sectors in a mounted file system, so there’s both useful data (files and directory), and unused sector (aka free space). And I need to set all that free space to zero in order to improve the compression ratio, while still keeping the files and folders in the file system.

@cnxsoft

But then we ARE talking about the same thing.

Let me give a practical example. I make an SD card and run it, and make a few customizations on it. Because of this, all the space gets used for some temporary files. After I’m done, I delete all those files. The files are gone, but the sectors on the card are still occupied by old data. If I make an image from the card with the dd command and compress it, all those unused bytes are still in the image.

But if I create a big file with zeros inside after I have removed all the unneeded files, and then delete this big file, there will not be any trace of the temporary files in unused sectors anymore. They will only contain zeros, and the compression ratio of the final image is better.

Some time back I also read an article (maybe here) about writing such big SD card images with some clever techniques. First, a program creates the image file and actually removes all the zeros. Of course it also creates a metadata file with information about what was removed. So the final image file is actually only useful data, meaning a 32 GB card image can be only a few GB in size. The writing program then writes only useful data to the SD card, skipping the zeros.

@Peter

OK. I guess I now understand what you mean. So you’d type something like

dd if=/dev/zero of=big_file

To fill all free space with zeros, and then delete that big file. That would work too.

I agree with @notzed: use bittorrent as it’s resilient against lossy connections.

Furthermore: I really wonder why Raspi / Raspbian does not provide a 300-500MB base image

Combining lz4 with lzma, this is a *great* idea (although lz4 -9 is enough for my needs)

All Armbian images have had a minimal SD card image size by default for some time. If someone is interested in the method used: https://github.com/igorpecovnik/lib/blob/second/common.sh#L254