Native Client (NaCl) lets developers compile native C and C++ code and run it in the browser for maximum performance. Applications such as photo editing, audio mixing, 3D gaming, and CAD modeling already use it. The problem is that you have to build the code separately for each architecture, such as ARM, MIPS, or x86. To provide a portable binary, Google announced Portable Native Client (PNaCl, pronounced “pinnacle”), which “lets developers compile their code once to run on any hardware platform and embed their PNaCl application in any website”.

Instead of compiling C and C++ code directly to machine code, PNaCl generates a portable bitcode executable (pexe), which can be hosted on a web server. Chrome then loads this executable, and converts it into an architecture-specific machine executable (native executable – nexe) optimized for the device where the code runs.
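To make the per-architecture problem concrete: in a native build, the target CPU is fixed at compile time, and code can even branch on it through predefined compiler macros. The sketch below is hypothetical (`target_arch` and `arch_is_known` are illustrative names) and assumes GCC/Clang-style macros such as `__x86_64__`, `__arm__`, and `__mips__`; the `__pnacl__` check assumes the PNaCl toolchain defines that macro when it emits portable bitcode.

```c
#include <assert.h>
#include <string.h>

/* Returns the architecture this translation unit was compiled for.
 * The choice is made entirely by the preprocessor, so every native
 * binary built from this file has exactly one answer baked in. */
const char *target_arch(void)
{
#if defined(__pnacl__)
    return "pnacl";   /* assumption: set by PNaCl; no concrete CPU chosen yet */
#elif defined(__x86_64__) || defined(_M_X64)
    return "x86-64";
#elif defined(__i386__) || defined(_M_IX86)
    return "x86-32";
#elif defined(__aarch64__)
    return "arm64";
#elif defined(__arm__)
    return "arm";
#elif defined(__mips__)
    return "mips";
#else
    return "unknown";
#endif
}

/* Nonzero if the compiler targeted one of the CPUs listed above. */
int arch_is_known(void)
{
    const char *arch = target_arch();
    assert(arch != NULL); /* target_arch() always returns a string literal */
    return strcmp(arch, "unknown") != 0;
}
```

Each nexe built from this file reports one fixed architecture, which is why plain NaCl needs a separate build per platform. In a pexe the CPU is not known until Chrome translates the bitcode on the device, so portable code is better off not depending on these macros at all.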

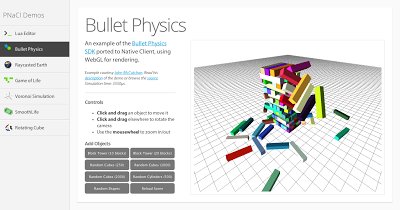

A Bullet physics simulator and a Lua interpreter are two applications that already leverage PNaCl.

If you click on either of the two links above, you’ll notice they only work in Chrome 31 or later; other browsers require pepper.js, a “JavaScript library that enables the compilation of native Pepper applications into JavaScript using Emscripten”. It is supposed to work with Firefox, Internet Explorer, Safari, and other browsers.

Google hosts a page comparing NaCl and PNaCl that explains the differences and helps you make the right choice. PNaCl is now the preferred toolchain, but it comes with limitations that may force you to use NaCl instead:

- PNaCl does not support architecture-specific instructions such as inline assembly. Future versions of PNaCl will attempt to mitigate this problem by introducing portable intrinsics for vector operations.

- Only static linking against the newlib C standard library is supported; dynamic linking and glibc are not. This will be fixed in future releases.

- C++ exception handling is currently not supported.

- Vector types and SIMD are currently not supported.

- Some GNU extensions are unsupported such as taking the address of a label for computed gotos, or nested functions.
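Bytecode interpreters are a typical case where several of these limitations meet: many use the GNU computed-goto extension (`&&label` with `goto *ptr`) for fast opcode dispatch, and C++ interpreters may rely on exceptions for error handling. A common portable alternative is a plain `switch` loop that reports errors through return codes. The following is a minimal sketch for a hypothetical toy stack machine (`run` and the `OP_*` opcodes are illustrative names, not part of the NaCl SDK or of Lua).

```c
#include <assert.h>
#include <stddef.h>

#define STACK_MAX 64

/* Hypothetical opcodes for a toy stack machine. */
enum opcode { OP_PUSH, OP_ADD, OP_MUL, OP_HALT };

/* Runs `code` and stores the top of the stack in *result at OP_HALT.
 * Returns 0 on success and -1 on any error (no exceptions needed).
 *
 * With GNU C this loop is often written as
 *   static void *labels[] = { &&op_push, &&op_add, ... };
 *   goto *labels[code[pc++]];
 * PNaCl does not support taking label addresses, so a switch is used. */
int run(const int *code, size_t len, int *result)
{
    int stack[STACK_MAX];
    size_t sp = 0; /* number of values currently on the stack */
    size_t pc = 0; /* index of the next instruction word */

    assert(code != NULL && result != NULL);

    while (pc < len) {
        switch (code[pc++]) {
        case OP_PUSH: /* operand is the next word in the program */
            if (pc >= len || sp >= STACK_MAX) return -1;
            stack[sp++] = code[pc++];
            break;
        case OP_ADD:
            if (sp < 2) return -1;
            stack[sp - 2] += stack[sp - 1];
            sp--;
            break;
        case OP_MUL:
            if (sp < 2) return -1;
            stack[sp - 2] *= stack[sp - 1];
            sp--;
            break;
        case OP_HALT:
            if (sp < 1) return -1;
            *result = stack[sp - 1];
            return 0;
        default:
            return -1; /* unknown opcode */
        }
    }
    return -1; /* reached the end of the program without OP_HALT */
}
```

For example, the program `{OP_PUSH, 2, OP_PUSH, 3, OP_ADD, OP_PUSH, 4, OP_MUL, OP_HALT}` computes (2 + 3) * 4, so `run` returns 0 and stores 20 in `*result`.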

If your application runs on the open web, NaCl is not an option, since Chrome only enables NaCl for applications distributed through the Chrome Web Store, and you’ll have to use PNaCl instead.

You can find more information, including how to get started with the Native Client SDK, on the NaCl and PNaCl developers’ page.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.

So the first step is “interpreting shit is too slow, we need native code”.

But then someone notes that native code only works where it’s native.

So now the big approach is to precompile it to something, hand it to Google, and have them compile it for 3-4 native platforms, where you still have to test every platform to see if it really works (something you had to do with interpreted code too, just a bit less so).

So what is better now? That we no longer need to run the compiler 3-4 times ourselves?

Why doesn’t Google just pack those compilers into a nice package you could install without effort, so we can continue to work like we did 40 years ago?

…without needing to rely on Google servers and sending everything we create to them.

Actually, Google doesn’t compile anything. My understanding is that PNaCl binaries contain LLVM intermediate code, which gets translated into native code in the browser itself. It’s a pretty smart concept if you think about it, but it’s still not suitable for system work, as the environment you code for is highly sandboxed (you can’t access hardware directly).

I’d like to know how this differs from Java. Is it just the APIs/interfaces (to the web browser, OpenGL ES, etc.), or has Java always had performance issues?

@Ian Tester

The implementation is very similar to Java. A portable intermediate representation from LLVM is compiled into native code on the fly, kind of like Java bytecode with its HotSpot JIT. In PNaCl’s case, though, that translation happens in one pass, whereas Java’s JIT probably does it as it goes, possibly using runtime information to optimize further. The concept is very different, however, as you get to write (almost) regular C/C++ code to feed LLVM with. You can thus optimize your code much better than Java would do for you (or maybe not; it all depends on the coder, after all).