I’m always very confused when it comes to comparing GPUs in different SoCs, and I could not really find comparisons on the web, so I’m going to give it a try even though, as you’re going to find out, it’s actually quite a challenge.

There are mainly 4 companies that provide GPUs: ARM, Imagination Technologies, Vivante and Nvidia. [Update: Two comments mentioned Qualcomm Adreno and Broadcom VideoCore are missing from the list. Maybe I’ll do an update later]. Each company offers many different versions and flavors of their GPU as summarized below.

| ARM | Imagination Technologies | Vivante | Nvidia |
| --- | --- | --- | --- |
| | | | |

For each company, the GPUs are sorted by increasing processing power (e.g. Mali-T604 is faster than Mali-400 MP, SGX535 is faster than SGX531, etc.), and I did not include some older generations. As you can see, there are already many choices, but you also need to take into account the number of cores (e.g. Mali-400 can have 1, 2 or 4 cores), the process technology, and the operating frequency chosen by the SoC manufacturer for a given GPU, which makes things even more complicated. That’s why I’m going to focus on one GPU from each company, as found in a commonly used SoC: Rockchip RK3066 (Mali-400 MP4), Freescale i.MX6 (GC2000), AllWinner A31 / OMAP 5430 (SGX544 MP2), and Tegra 3 T30 (ULP GeForce).

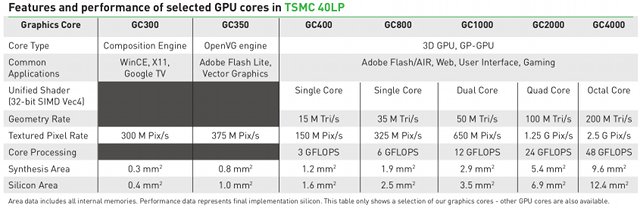

Vivante has a product brief with a nice comparison table for their GPUs.

I’m going to use part of this table as the basis for the performance comparison. For the other GPUs, I’ll need to dig into the companies’ websites:

- ARM Mali-400 MP page, performance tab

- PowerVR SGX Series 5XT Factsheet (PowerVR_SGX_Series5XT_IP_Core_Family_[3.2].pdf), available from the Imagination Technologies download section (requires free registration)

- Tegra 3 page on Nvidia website

| | Mali-400 MP4 | PowerVR SGX544MP2 | GC2000 | Tegra 3 GPU |
| --- | --- | --- | --- | --- |
| Frequency | 240 MHz to 533 MHz | 532 MHz | 528 MHz (600 MHz shader) | 520 MHz |
| Shader Cores | 4 | 8 | 4 | 12 |
| Geometry Rate | 44 M Tri/s for 1 core @ 400 MHz | 70 M Tri/s per core @ 400 MHz | 100 M Tri/s (Freescale claims 200 M Tri/s in i.MX6; i.MX6 Reference Manual: 88 M Tri/s… go figure) | – |
| Textured Pixel Rate | 1.6 G Pix/s for 1 core @ 400 MHz | 1 G Pix/s per core @ 200 MHz | 1.25 G Pix/s (i.MX6 RM: 1.066 G Pix/s) | – |
| Core Processing | 7.2 GFLOPS @ 200 MHz | 12.8 GFLOPS @ 200 MHz (34 GFLOPS @ 532 MHz) | 24 GFLOPS (21.6 GFLOPS in i.MX6) | 7.2 GFLOPS @ 300 MHz |
| Antutu 3.x | 2D: 1338; 3D: 2338; Resolution: 1280×672; Device: MK808 (Android 4.1.1) | 2D: 1058; 3D: 4733; Resolution: 1024×768; Device: Onda V812 (Android 4.1.1) | 2D: 733; 3D: 1272; Resolution: 1280×672; Device: Hi802 (Android 4.0.4) | 2D: 814; 3D: 2943; Resolution: 800×1205; Device: Nexus 7 (Android 4.2.1) |
| Silicon Area | 4×4.7 mm² ? | – | 6.9 mm² | – |
| Process | 65nm LP or GP | 40nm | TSMC 40nm LP | 40nm |
| API support | OpenGL ES 1.1 & 2.0; OpenVG 1.1 | OpenGL ES 2.0 and OpenGL ES 1.1 + Extension Pack; OpenVG 1.1 enabling Flash and SVG; PVR2D for legacy 2D support (BLTs, ROP2/3/4); OpenWF enabling advanced compositing; OpenCL Embedded for GP-GPU | OpenGL ES 1.1/2.0/Halti; OpenCL 1.1 EP; OpenVG 1.1; DirectFB 1.4; GDI/Direct2D; X11/EXA; DirectX 11 9.3 | OpenGL ES 1.1/2.0; OpenVG 1.1; EGL 1.4 |
| Operating System support | Android; Linux | Linux, Symbian and Android; Microsoft WinCE; RTOS on request | Android; Linux; Windows; QNX | Android; Windows 8 |

There are all sorts of numbers on the Internet, and it’s quite difficult to make sure the reported numbers are accurate, so if you can provide corrections, leave them in the comments section. For API and OS support, I mainly copied and pasted what I found on the companies’ websites. I failed to get much information on the Tegra 3 GPU, most probably because it’s only used in-house by Nvidia, so they don’t need to release that much information [Update: I have since got Antutu 3.0.3 results: weak 2D performance, and pretty good 3D performance on the Nexus 7 tablet]. So I’ll leave it out for the rest of the comparison.

When we look at geometry rates, GC2000 appears to be the slowest (if 88 Mtri/s is the right number), followed by Mali-400 MP4. I’m a bit confused by the textured pixel rate, because I don’t know whether it scales with the number of cores. Mali-400 MP4 appears to be the slowest GPU when it comes to GFLOPS, most probably because both GC2000 and SGX544MP2 support OpenCL 1.1, but this is currently not that important since few applications support GPGPU.

Antutu results show Mali-400 has the best 2D performance, followed by SGX544MP2 and Vivante GC355/GC320 (2D is not handled by GC2000 in i.MX6), but for 3D the PowerVR GPU is clearly in the lead, with Mali-400 MP4 getting about half its performance, and GC2000 about half the performance of the ARM Mali GPU, according to Antutu 3.0.3.
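To make the Antutu comparison easier to check, here is a minimal Python sketch that expresses each score as a fraction of the best score in its category. The scores are copied from the table above; the `relative_scores` helper is a hypothetical name introduced only for this illustration. The ratios do not correct for the different screen resolutions and Android versions of the test devices.

```python
# Antutu 3.x scores copied from the comparison table in this post.
antutu = {
    "Mali-400 MP4": {"2d": 1338, "3d": 2338},
    "PowerVR SGX544MP2": {"2d": 1058, "3d": 4733},
    "GC2000": {"2d": 733, "3d": 1272},
    "Tegra 3 GPU": {"2d": 814, "3d": 2943},
}


def relative_scores(scores, test):
    """Return each GPU's score for `test` as a fraction of the best score."""
    best = max(entry[test] for entry in scores.values())
    return {gpu: entry[test] / best for gpu, entry in scores.items()}


if __name__ == "__main__":
    for test in ("2d", "3d"):
        print(test.upper())
        ranked = sorted(
            relative_scores(antutu, test).items(),
            key=lambda item: item[1],
            reverse=True,
        )
        for gpu, fraction in ranked:
            print(f"  {gpu}: {fraction:.2f}")
```

With these inputs, Mali-400 MP4 lands at roughly half of the SGX544MP2 3D score, which is where the "half the performance" remark comes from.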

So when it comes to 2D/3D graphics performance, we should not expect Freescale i.MX6 quad core Cortex A9 processor to outperform Rockchip RK3066 dual core Cortex A9 processor, and AllWinner A31 provides excellent graphics performance even if it features slower Cortex A7 cores (4 of them).

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.


Maybe you could explain a bit about the Linux compatibility issues? If Mali supports the OpenGL and OpenVG APIs, why can’t picUntu use it for graphics acceleration? & why is there a need for someone to develop open source drivers (Lima). Which (if any) GPUs are *really* compatible with Linux (eg allowing Linux to use them for HD video & 3D graphics)?

@onebir

I wish I could explain it, but I don’t clearly understand why. For Mali-400 I can see some higher levels libs/drivers are open source (http://malideveloper.arm.com/develop-for-mali/drivers/open-source-mali-gpus-linux-exadri2-and-x11-display-drivers/) , but there are still binary blobs that appear to be SoC specific, not only GPU specific, AFAIK due to legal reasons. That’s why, for example, you may have Mali-400 support on the Snowball board, but the same Mali driver cannot be used in the Cubieboard.

New Linux-based mobile OSes (Ubuntu for Phones, Tizen) are getting smart about it: they are compatible with the Android kernel so that they can use the same GPU drivers. At least, that’s what I understand.

Granted, they don’t often appear in low-end stuff, but no Qualcomm Adreno? They are a pretty big one to omit, and their GPUs are top of the line. Not surprising given they are ex-ATI: AMD sold off the ATI embedded division to Qualcomm years ago. What a mistake that was.

The upcoming Adreno 330 is 50% faster than the Adreno 320 which easily beats the Tegra 3.

http://www.anandtech.com/show/6112/qualcomms-quadcore-snapdragon-s4-apq8064adreno-320-performance-preview

@Dan

Oops, I’ll try to add it a bit later (like early next week). It takes a bit of time to find information.

@cnxsoft

Well, if you don’t completely understand it, my chances are… limited. Thanks! 🙂

@cnxsoft

First, I would like to point out that your list of ARM GPU manufacturers is missing Broadcom, who design the VideoCore line.

http://en.wikipedia.org/wiki/VideoCore

One source of driver incompatibility between operating systems is hidden inside libEGL.

The libEGL.so (the library that you use to initialize OpenGL ES) is implemented differently on Android and X11 GNU/Linux machines and on the Dispmanx GNU/Linux RaspberryPi.

EGL API background:

It is common, and allowed by the EGL spec, for the libEGL EGLNativeWindowType to differ between OpenGL ES implementations across operating systems, for example:

On Windows, EGLNativeWindowType is a “HWND” data structure.

On Linux/Android, EGLNativeWindowType is an “ANativeWindow*” data structure pointer.

On Linux/X11, EGLNativeWindowType is a “Window” data structure.

and so on…

On the Raspberry Pi, using Linux/Broadcom VideoCore IV, EGLNativeWindowType is an “EGL_DISPMANX_WINDOW_T” data structure.

http://www.khronos.org/egl

http://elinux.org/RPi_VideoCore_APIs

This means that if you try to use a libEGL and OpenGL ES built for Android you will get an exception on X11 that libEGL is unable to connect OpenGL ES render output to the X11 native window surface.
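As a rough illustration of the point above, the following Python sketch records the EGLNativeWindowType listed for each platform and checks whether a libEGL built for one platform expects the same native window type as another. This is only a simplified model: `NATIVE_WINDOW_TYPES` and `libegl_is_compatible` are hypothetical names, and a real driver stack has more reasons to be incompatible than this single type.

```python
# EGLNativeWindowType per platform, as listed in the comment above.
NATIVE_WINDOW_TYPES = {
    "Windows": "HWND",
    "Linux/Android": "ANativeWindow*",
    "Linux/X11": "Window",
    "Linux/Broadcom VideoCore IV": "EGL_DISPMANX_WINDOW_T",
}


def libegl_is_compatible(built_for, running_on):
    """Hypothetical check: does a libEGL built for one platform expect the
    same native window type as the platform it is being run on?"""
    return NATIVE_WINDOW_TYPES[built_for] == NATIVE_WINDOW_TYPES[running_on]


if __name__ == "__main__":
    # An Android libEGL expects ANativeWindow*, while X11 hands it a Window.
    print(libegl_is_compatible("Linux/Android", "Linux/X11"))
    print(libegl_is_compatible("Linux/X11", "Linux/X11"))
```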

@cnxsoft

Somebody on the ODROID forum tried to get the A10 Mali drivers working on the Exynos Mali:

http://odroid.foros-phpbb.com/t2051-working-mali-400-opengl-es-acceleration

and it looks like it works.

@misko

Nice. Do you know what “it cant use all PP blocks inside Mali-400” means? Is it just because the Mali-400 in the A10 only has one core, so the driver can’t use all the cores inside the Mali-400 MP4 GPU present in Exynos 4412?

this is similar to what I have been doing, here http://blog.thinkteletronics.com/all-mobile-socsolutions/

Also, for the SGX544MP2, I don’t think it hits 136 GFLOPS; most likely 30-30 GFLOPS.

@Dan

I don’t think selling off the mobile division was a bad move. Look at GCN: it scales well [power and perf], even down to ULV parts like the AMD Temash APU.

@onebir

lima is about developing a free software driver for the gpu. The gpu is a programmable processor just like a cpu, so graphics drivers are just as much software as a compiler is. A binary blob has all the problems associated with a binary blob: bugs can’t be fixed, long-term support is questionable, etc.

The reason opencl isn’t used on android is google have their own crappy alternative and don’t like using standards in general – a typical attitude from the over-qualified technicians who work there.

@EARL CAMERON

Did you see @200MHz and 136Gflops @532MHz

PowerVR SGX544MP2

136 GFLOPS

744.8M Tri/s

@532MHz

woohaa 😉

Really bad typo, huh? 😉

(It’s around 34 GFLOPS and less than 200 MTri/s; I can’t remember the exact number there.)

@cnxsoft

That page at the Malidevelopers website says:

“Note that these components are not a complete driver stack. To build a functional OpenGL ES or OpenVG driver you need access to the full source code of the Mali GPU DDK, which is provided under the standard ARM commercial licence to all Mali GPU customers. For a complete integration of the Mali GPU DDK with the X11 environment refer to the Integration Guide supplied with the Mali GPU DDK.”

It looks like this is ARM’s fault: they do not help create the complete driver stack, and the Mali GPU DDK license gets in the way. This is not something that every SoC manufacturer should have to solve. ARM should solve it for all their SoC licensees.

@EARL CAMERON

@kamejoko

@1.21 jigga watts

It was not a typo, but a mistake from my part.

I read it’s 6.4 GFLOPS per core @ 200 MHz (http://en.wikipedia.org/wiki/PowerVR#Series_5XT), so I multiplied that by 8 (shader cores) and adjusted for the frequency. The mistake is that I should have multiplied by 2 (GPU cores) instead of 8 (shader cores), so the values were all 4 times larger than in reality.

This link confirms it’s 12.8 GFLOPS @ 200 MHz – http://www.anandtech.com/show/4413/ti-announces-omap-4470-and-specs-powervr-sgx544-18-ghz-dual-core-cortexa9

Thanks for correcting my mistake!
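The arithmetic behind the correction can be sketched in a few lines of Python. It assumes, as the original calculation did, that throughput scales linearly with clock frequency; the 6.4 GFLOPS per core @ 200 MHz figure comes from the comment above, the 70 M Tri/s per core @ 400 MHz figure from the table in the post, and the function names are hypothetical.

```python
def scale_to_clock(value_at_ref, ref_mhz, target_mhz):
    """Linearly scale a throughput figure from a reference clock to a target clock.
    Linear scaling is an assumption, not a measured property of the GPU."""
    return value_at_ref * target_mhz / ref_mhz


def sgx544mp2_gflops(multiplier, target_mhz=532):
    """GFLOPS at target_mhz, starting from 6.4 GFLOPS per core @ 200 MHz."""
    return scale_to_clock(6.4 * multiplier, 200, target_mhz)


def sgx544mp2_mtris(multiplier, target_mhz=532):
    """M Tri/s at target_mhz, starting from 70 M Tri/s per core @ 400 MHz."""
    return scale_to_clock(70 * multiplier, 400, target_mhz)


if __name__ == "__main__":
    # Mistaken calculation: multiplied by the 8 shader cores.
    print(f"x8: {sgx544mp2_gflops(8):.1f} GFLOPS, {sgx544mp2_mtris(8):.1f} M Tri/s")
    # Corrected calculation: multiplied by the 2 GPU cores.
    print(f"x2: {sgx544mp2_gflops(2):.1f} GFLOPS, {sgx544mp2_mtris(2):.1f} M Tri/s")
```

Multiplying by 8 reproduces the 136 GFLOPS and 744.8 M Tri/s figures quoted earlier in the thread, while multiplying by 2 gives about 34 GFLOPS and a triangle rate below 200 M Tri/s.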

from dmesg

Mali PP: Creating Mali PP core: Mali-400 PP

it really looks like GPU core

As the actual main developer of the lima driver, I have some data to add here.

The mali-400 is a split shader design. 1 vertex shader (GP, geometry processor), and 1-4 fragment shaders (PP, pixel processor).

On the odroid-x2, they manage to clock the mali to 533MHz.

The GP manages 30MTris at 275MHz, so at 400MHz this translates to 43.6MTris, at 533 (which kind of matches the others) you hit 58.1MTris. The PP manages one pixel per core per clock. 1.6GPix at 4 cores at 400Mhz (so 400MPix per core), or 2.133GPix at 533Mhz. A rather amazing number compared to the competition, and exactly the reason why the exynos4 was such a great performer in the mobile space where the number of triangles tends to be low.
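These Mali-400 figures can be reproduced with a short Python sketch. It assumes the geometry processor rate scales linearly with clock from the quoted 30 MTris at 275 MHz, and that each pixel processor outputs one pixel per clock, as stated above; the function names are hypothetical.

```python
def gp_rate_mtris(clock_mhz, ref_rate=30.0, ref_clock_mhz=275.0):
    """Geometry processor rate in MTris, scaled linearly from 30 MTris @ 275 MHz."""
    return ref_rate * clock_mhz / ref_clock_mhz


def pp_fill_rate_gpix(clock_mhz, pp_cores):
    """Fill rate in GPix, at one pixel per pixel processor per clock."""
    return pp_cores * clock_mhz / 1000.0


if __name__ == "__main__":
    for clock in (400, 533):
        print(
            f"{clock} MHz: {gp_rate_mtris(clock):.1f} MTris, "
            f"{pp_fill_rate_gpix(clock, 4):.3f} GPix with 4 PPs"
        )
```

At exactly 533 MHz the fill rate comes out at 2.132 GPix; the 2.133 figure presumably corresponds to a 533.33 MHz clock.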

On the A10, there is only a Mali-400 MP1, so 1 GP and 1 PP. The binary driver which we got for it has apparently been built to support only 1 PP, and apparently ignores the other 3 PPs reported by the Exynos kernel. Now this single PP has to work the whole display on its own. Quadrupling the number of PPs, in a real-world test I have here, will give 4x the performance if the CPU can feed the triangles fast enough (the Rockchip dual A9s will happily do that, in comparison to the single A8 of my current favourite SoC, the AllWinner A10).

Yes, SGX MPx rules atm. But throwing more silicon at the problem is not the best solution for the mobile space, especially not with a GPU which is a management and synchronization nightmare even on a single core.

As for the lima driver, keep your eyes peeled at FOSDEM.

@Luc Verhaegen

Thanks for working on Lima – having non-crippled Linux on (at least some) Arm processors will help a lot of people 🙂

For people like me who’ve never heard of FOSDEM, it’s on 2 & 3rd Feb this year:

https://fosdem.org/2013/

So not even a very long wait 🙂

@Luc Verhaegen

+1 Great info!

Thanks.. 🙂

which graphics is better 16 core or PowerVR SGX544?

@Hardik P

Which 16-core GPU?

“So when it comes to 2D/3D graphics performance, we should not expect Freescale i.MX6 quad core Cortex A9 processor to outperform Rockchip RK3066 dual core Cortex A9 processor, and AllWinner A31 provides excellent graphics performance even if it features slower Cortex A7 cores (4 of them).”

Sorry, but I do not get this one. Does that mean SGX544MP2 > Mali-400 MP4 > Tegra 3 > GC2000?

The reason I ask is that I want to know which chip would be better for gaming/emulation:

RockChip 3188, Quad Core, 1.8GHz (cortex A9 CPU, ARM mali400 mp4 GPU)

or

All Winner A31s, Quad Core, 1GHz (CPU:cortex A7, GPU:Power VR SGX 544 mp2)

Greetz!

@Alex

SGX is your friend

look at iPad/iPhone GPU perf. and anybody else

@m][sko

I see… but a difference of 0.8 GHz in CPU clock is massive. And the GPU in the Apple devices is not exactly this one: http://www.android-hilfe.de/attachments/onda-v972-forum/161044d1358373694-9-onda-v972-quadcore-cpu-2gb-ram-vr-sgx544mp2-gpu-powervr_gpu_compare.jpg

I’m really confused: the more I read about this topic, the less I understand^^

Can you describe the advantages and disadvantages of both options? Thanks.

PowerVR SGX544 vs Nvidia Tegra 4

Which is better?

I want to buy a cheap tablet that I can play games on. So far the choices of GPU within my budget are PowerVR SGX544MP2 and mali 400mp2. Which GPU is better? All these numbers are confusing. I need an answer in plain English.

@Dave

PowerVR SGX544MP2 has 8 cores, and Mali-400MP2 has 2 cores, so the PowerVR GPU will provide much better performance, about 4 times faster.

@cnxsoft

You are awesome! Thanks.

GC1000 Plus vs Mali-450 MP4? Can someone help me with an opinion?

@tttubi

Mali-450MP4 should be much faster than GC1000 Plus.

Would the Mali-450 mp4 outperform the PowerVR SGX544 MPS? Thanks

@Tom

Only the single-core (cluster) version of the PowerVR SGX544 MP, as used in the MT6589 chipset, would be outperformed by the Mali-450 MP4. The dual-core version (PowerVR SGX544 MP2), however, will offer similar performance to the named Mali GPU.

@Dave

To clarify, PowerVR SGX544MP2 is made up of 8 pixel shaders and 4 vertex shaders, while Mali-400MP2 is made up of 2 pixel shaders and 1 vertex shader. So the PowerVR GPU would definitely perform better because it has more shaders. Having more shaders means a GPU can render a frame better than one with fewer shaders.

To make things easy for non-technical customers and buyers, the Android market needs a real-world game test, like the Doom and Quake series provided on the PC: a high-end game with features that push the graphics, available as a free demo or shareware version.

The low end of the Android TV box market has the potential to be the real micro console. We need a test that goes beyond Riptide GP2, for HD gaming on boxes priced below £40.

Here @cnxsoft: http://kyokojap.myweb.hinet.net/gpu_gflops/

@beerendlauwers

Nice table. GFLOPS numbers are interesting but for some reasons not always relevant to graphics performance.

Funny to read threads like this and find questions that were never answered.


I have a Vivante GC7000 GPU. Is it faster than an Adreno 305 GPU? I need an answer fast.