Raspberry Pi recently received a strategic investment from Sony Semiconductor Solutions Corporation to provide a development platform for the company’s edge AI devices leveraging the AITRIOS platform. We don’t have many details about the upcoming Raspberry Pi / Sony device, so I decided to look into the AITRIOS platform instead. Currently, there’s a single hardware platform: the LUCID Vision Labs SENSAiZ SZP123S-001 smart camera based on the Sony IMX500 intelligent vision sensor and designed to work with Sony AITRIOS software.

LUCID SENSAiZ smart camera

SENSAiZ SZP123S-001 specifications:

- Imaging sensor – 12.33MP Sony IMX500 progressive scan CMOS sensor with rolling shutter, built-in DSP, and dedicated on-chip SRAM to enable high-speed edge AI processing
- Focal length – 4.35 mm
- Camera sensor format – 1/2.3″
- Pixels (H x V) – 4,056 x 3,040
- Pixel size (H x V) – 1.55 x 1.55 μm
- Networking – 10/100M RJ45 port
- Power supply – PoE+ via […]
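As a quick sanity check on the specifications, the pixel count and pixel size are enough to derive the sensor's resolution and active area, and together with the 4.35 mm focal length, an approximate field of view. The Python sketch below uses a simple pinhole model that ignores lens distortion and assumes the lens covers the full active area. No field of view is listed in the specifications above, so treat the FOV values as rough estimates rather than official LUCID figures.

```python
import math

# Figures quoted in the SENSAiZ SZP123S-001 specifications
H_PIXELS, V_PIXELS = 4056, 3040
PIXEL_PITCH_UM = 1.55      # square pixels, in micrometers
FOCAL_LENGTH_MM = 4.35

def sensor_geometry(h_px, v_px, pitch_um, focal_mm):
    """Return megapixels, active-area width/height (mm), diagonal (mm),
    and horizontal/vertical field of view (degrees) under a pinhole model."""
    megapixels = h_px * v_px / 1e6
    width_mm = h_px * pitch_um / 1000
    height_mm = v_px * pitch_um / 1000
    diagonal_mm = math.hypot(width_mm, height_mm)
    # Pinhole approximation: FOV = 2 * atan(size / (2 * f)); lens distortion ignored
    hfov = math.degrees(2 * math.atan(width_mm / (2 * focal_mm)))
    vfov = math.degrees(2 * math.atan(height_mm / (2 * focal_mm)))
    return megapixels, width_mm, height_mm, diagonal_mm, hfov, vfov

mp, w, h, d, hfov, vfov = sensor_geometry(H_PIXELS, V_PIXELS, PIXEL_PITCH_UM, FOCAL_LENGTH_MM)
print(f"{mp:.2f} MP, {w:.2f} x {h:.2f} mm active area, {d:.2f} mm diagonal")
print(f"approx. HFOV {hfov:.1f} deg, VFOV {vfov:.1f} deg")
```

The 4,056 x 3,040 pixel count works out to the 12.33MP quoted in the specifications.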

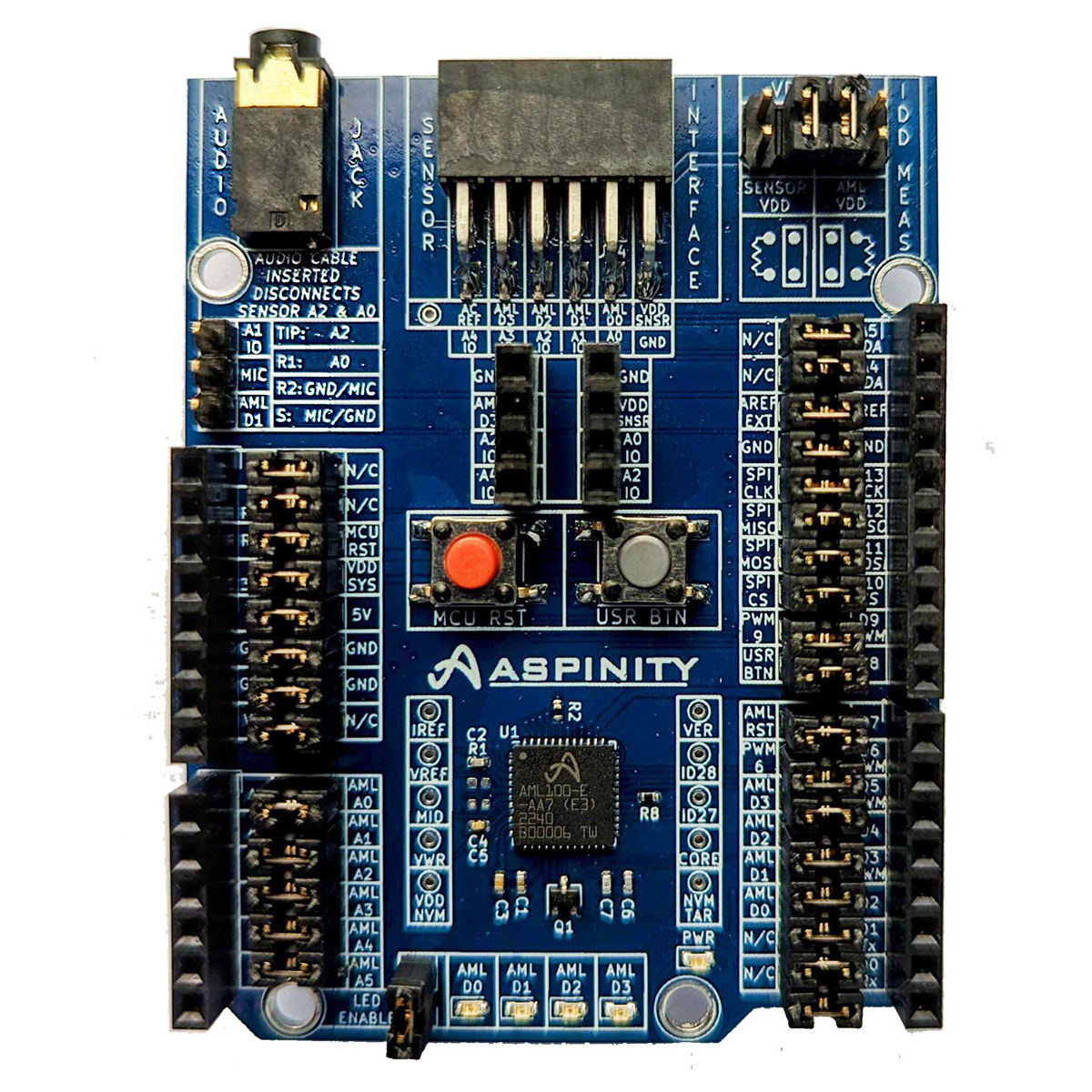

Aspinity AB2 AML100 Arduino Shield supports ultra-low-power analog machine learning

Aspinity AB2 AML100 is an Arduino shield based on the company’s AML100 analog machine learning processor, which Aspinity says reduces power consumption by 95 percent compared to equivalent digital ML processors. The shield works with the Renesas Quick-Connect IoT platform or other development platforms with Arduino Uno Rev3 headers. The AML100 analog machine learning processor is said to consume just 15µA for sensor interfacing, signal processing, and decision-making. It operates entirely within the analog domain, offloading most of the work from the microcontroller, which can stay in its lowest power state until an event or anomaly is detected.

Aspinity AB2 AML100 Arduino Shield specifications:

- ML chip – Aspinity AML100 analog machine learning chip
  - Software-programmable analogML core with an array of configurable analog blocks (CABs) with non-volatile memory and analog signal processing
  - Processes natively analog data
  - Near-zero power for inference and event detection
  - Consumes <20µA when always-sensing
  - Reduces analog data by 100x
  - Supports up […]
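The power argument is essentially a duty-cycle calculation: an always-on analog front end draws a small constant current, while the microcontroller only pays its active current when an event wakes it up. The Python sketch below models this. Only the ~20µA always-sensing figure comes from the AML100 specifications; the MCU currents, event rate, wake duration, and 1000 mAh battery are hypothetical values chosen for illustration, and the model is not a reproduction of Aspinity's 95 percent figure.

```python
# Hypothetical duty-cycle model of "MCU sleeps until the analog front end flags an event".
# Only the ~20 uA always-sensing figure comes from the AML100 specs; every other
# number below is an illustrative assumption, not a measured value.

def average_current_ua(frontend_ua, mcu_sleep_ua, mcu_active_ua,
                       events_per_hour, active_s_per_event):
    """Time-weighted average current (uA) over one hour."""
    active_fraction = min(1.0, events_per_hour * active_s_per_event / 3600)
    mcu_ua = active_fraction * mcu_active_ua + (1 - active_fraction) * mcu_sleep_ua
    return frontend_ua + mcu_ua

def battery_life_days(capacity_mah, avg_ua):
    """Idealized battery life, ignoring self-discharge and regulator losses."""
    return capacity_mah * 1000 / avg_ua / 24

# Assumed MCU: 5 mA awake, 2 uA asleep; 10 events per hour, 2 s awake per event
event_driven = average_current_ua(20, 2, 5000, 10, 2)
# Baseline: MCU awake all the time running a digital sensing pipeline, no analog front end
always_on = average_current_ua(0, 2, 5000, 3600, 1)

for name, ua in [("event-driven", event_driven), ("always-on MCU", always_on)]:
    days = battery_life_days(1000, ua)
    print(f"{name}: {ua:.1f} uA average, ~{days:.0f} days on a hypothetical 1000 mAh cell")
```

Under these made-up numbers, the sleeping MCU's contribution is comparable to the front end's own draw, which is why the always-sensing current of the analog chip matters so much.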

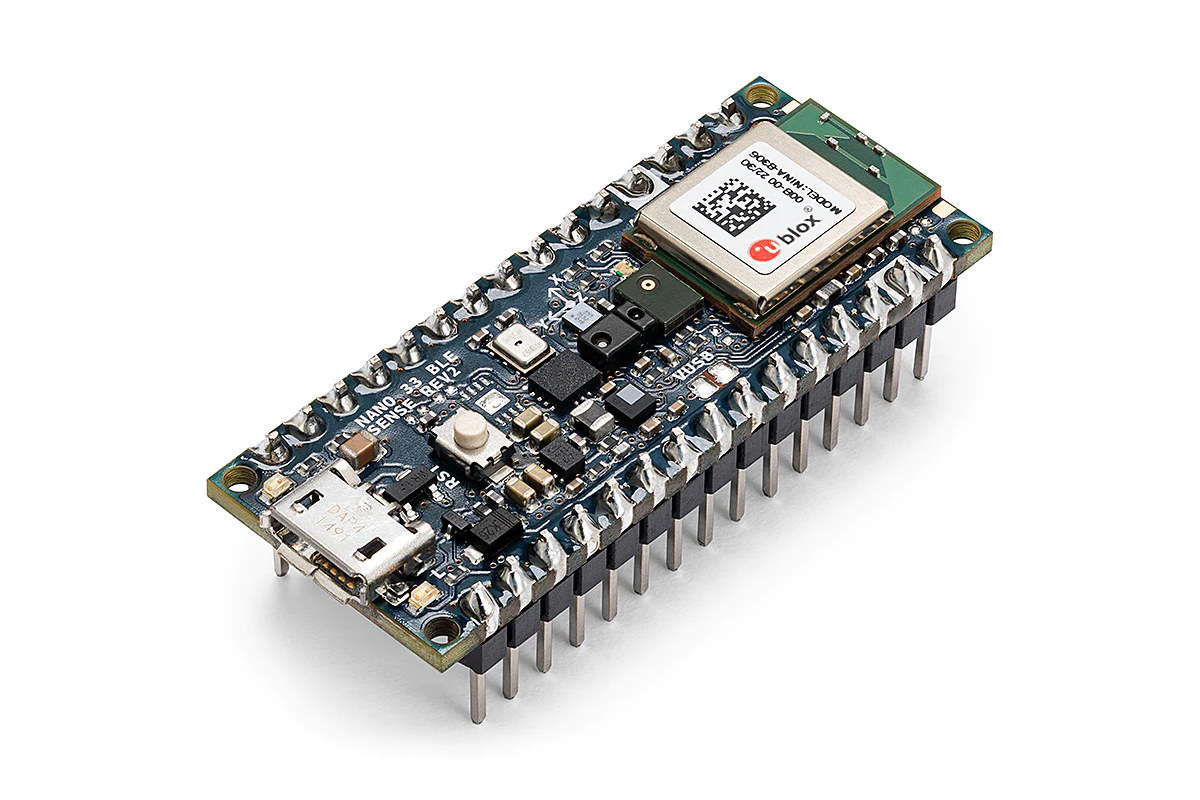

Arduino Nano 33 BLE Sense Rev2 switches to BMI270 & BMM150 IMUs, HS3003 temperature & humidity sensor

Arduino Nano 33 BLE Sense Rev2 is a new revision of the Nano 33 BLE Sense machine learning board with basically the same functionality, but some sensors have changed along with some other modifications “to improve the experience of the users”. The main changes are:

- The STMicro LSM9DS1 9-axis IMU has been replaced by two Bosch Sensortec sensors, namely the BMI270 6-axis accelerometer and gyroscope and the BMM150 3-axis magnetometer.
- A Renesas HS3003 temperature & humidity sensor has taken the place of the STMicro HTS221.
- The microphone is now an STMicro MP34DT06JTR instead of an MP34DT05.

All of the replaced parts are from STMicro, so it’s quite possible the second revision of the board was mostly done to address supply issues.

Arduino Nano 33 BLE Sense Rev2 (ABX00069) specifications:

- Wireless module – u-blox NINA-B306 module powered by a Nordic Semi nRF52840 Arm Cortex-M4F microcontroller @ 64MHz with 1MB […]

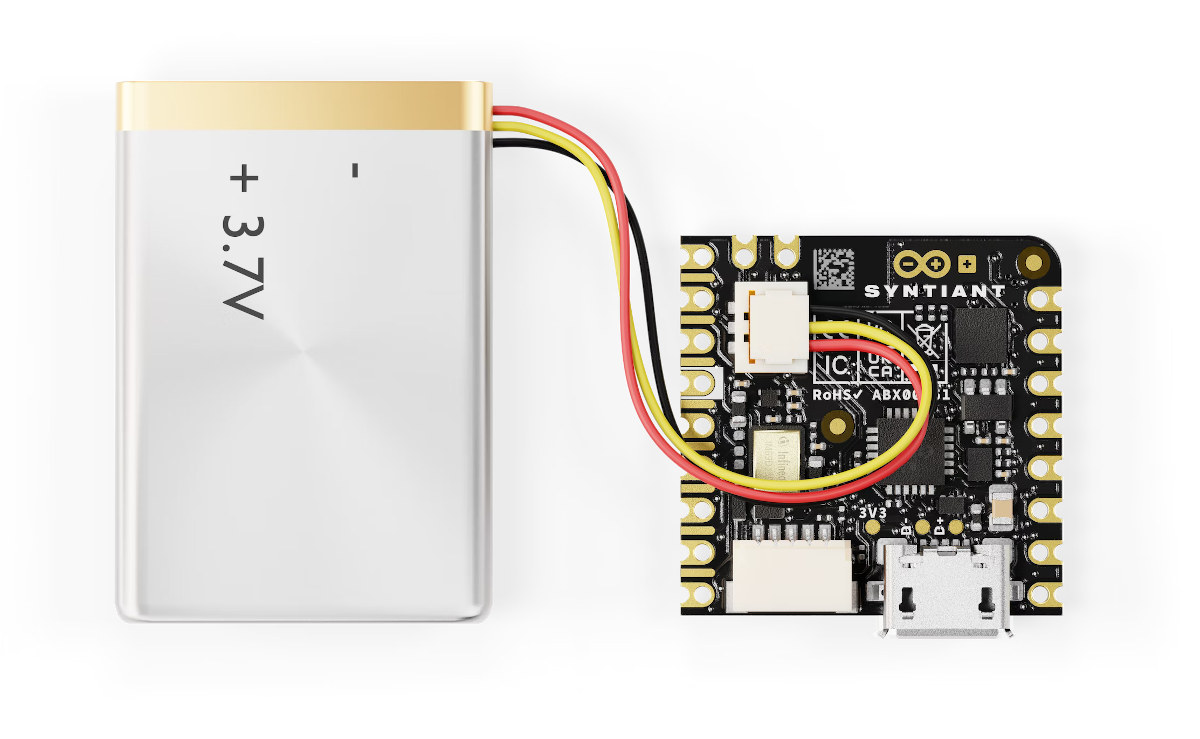

Arduino Nicla Voice enables always-on speech recognition with Syntiant NDP120 “Neural Decision Processor”

Nicla Voice is the latest board from the Arduino PRO family, with support for always-on speech recognition thanks to the Syntiant NDP120 “Neural Decision Processor” with a neural network accelerator, a HiFi 3 audio DSP, and a Cortex-M0+ microcontroller core. The board also includes a Nordic Semi nRF52832 MCU for Bluetooth LE connectivity. Arduino previously launched the Nicla Sense with Bosch Sensortec’s motion and environmental sensors, followed by the Nicla Vision for machine vision applications, and now the company is adding audio and voice support for TinyML and IoT applications with the Nicla Voice.

Nicla Voice specifications:

- Microprocessor – Syntiant NDP120 Neural Decision Processor (NDP) with one Syntiant Core 2 ultra-low-power deep neural network inference engine, 1x HiFi 3 audio DSP, 1x Arm Cortex-M0 core up to 48 MHz, 48KB SRAM
- Wireless MCU – Nordic Semiconductor nRF52832 Arm Cortex-M4 microcontroller @ 64 MHz with 512KB flash, 64KB RAM, Bluetooth […]

ArduCam Mega – A 3MP or 5MP SPI camera for microcontrollers (Crowdfunding)

ArduCam Mega is a 3MP or 5MP camera specifically designed for microcontrollers with an SPI interface. The SDK currently supports Arduino UNO and Mega2560 boards, ESP32/ESP8266 boards, Raspberry Pi Pico and other boards based on the RP2040 MCU, BBC Micro:bit V2, as well as STM32 and MSP430 platforms. Both cameras share many of the same specifications, including their size, but the 3MP model is a fixed-focus camera, while the 5MP variant supports autofocus. Potential applications include asset monitoring, wildfire monitoring, remote meter reading, TinyML applications, and so on.

ArduCam Mega specifications:

- Camera type
  - 3MP with fixed focus
  - 5MP with autofocus from 8cm to infinity
- Optical size – 1/4-inch
- Shutter type – Rolling
- Focal ratio
  - 3MP – F2.8
  - 5MP – F2.0
- Still resolutions – 320×240, 640×480, 1280×720, 1600×1200, 1920×1080
  - 3MP – 2048×1536
  - 5MP – 2592×1944
- Output formats – RGB, YUV, or JPEG
- Wake-up time
  - 3MP – […]
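Since the ArduCam Mega pushes images over SPI, bus bandwidth quickly becomes the limiting factor at higher resolutions. The following rough Python estimate computes uncompressed frame sizes for the listed resolutions and how long each would take to transfer. The 2 bytes per pixel, 8 MHz SPI clock, and zero protocol overhead are assumptions rather than ArduCam figures, and JPEG output would shrink the transfers considerably.

```python
# Rough estimate of how long an uncompressed frame takes to cross an SPI bus.
# Assumptions (not from ArduCam's specs): 2 bytes per pixel for RGB565/YUV422
# output, an 8 MHz SPI clock, 1 bit per clock, and no protocol overhead.
RESOLUTIONS = {
    "QVGA": (320, 240),
    "VGA": (640, 480),
    "HD": (1280, 720),
    "UXGA": (1600, 1200),
    "FHD": (1920, 1080),
    "3MP": (2048, 1536),
    "5MP": (2592, 1944),
}

def raw_frame_bytes(width, height, bytes_per_pixel=2):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel

def spi_transfer_seconds(num_bytes, spi_clock_hz=8_000_000):
    """Single-bit SPI moves one bit per clock cycle."""
    return num_bytes * 8 / spi_clock_hz

for name, (w, h) in RESOLUTIONS.items():
    size = raw_frame_bytes(w, h)
    t = spi_transfer_seconds(size)
    print(f"{name:>4} {w}x{h}: {size / 1024:8.0f} KiB raw, ~{t:6.2f} s at 8 MHz")
```

Under these assumptions, a 320×240 frame takes roughly 0.15 s, while a raw 5MP frame needs about 10 MB and around 10 s, which is also far more than the RAM of the microcontrollers listed above.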

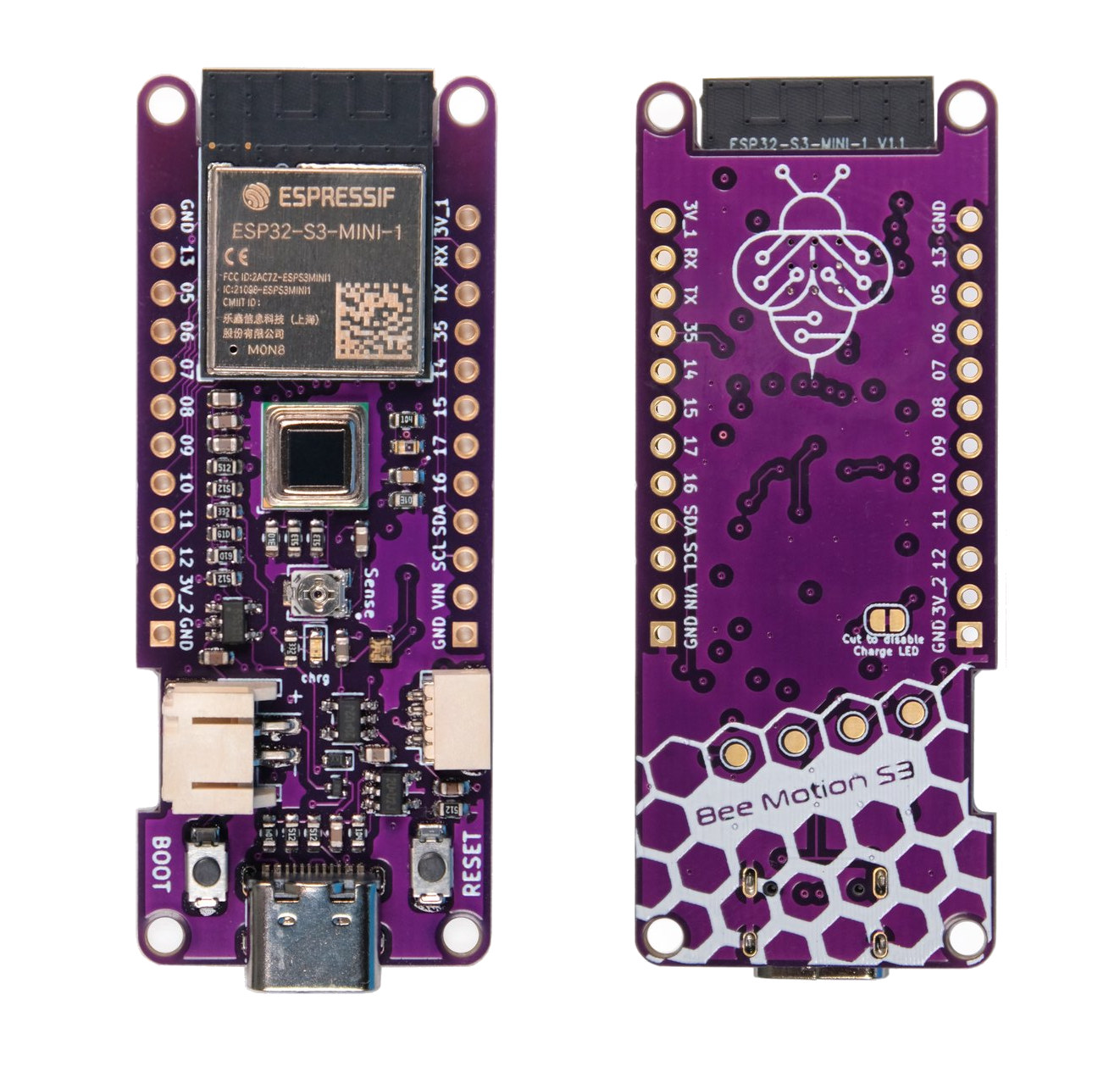

Bee Motion S3 – An ESP32-S3 board with a PIR motion sensor (Crowdfunding)

The Bee Motion S3 is an ESP32-S3 WiFi and Bluetooth IoT board with a PIR motion sensor in addition to the more usual I/Os, a Qwiic connector, a USB-C port, and LiPo battery support. It is at least the third wireless PIR motion board from Smart Bee Designs, as the company previously introduced the ESP32-S2-powered Bee Motion board and the ultra-small Bee Motion Mini with an ESP32-C3 SoC. The new Bee Motion S3 adds a few more I/Os and a light sensor, and the ESP32-S3’s AI vector extensions could potentially be used for faster and/or lower-power TinyML processing.

Bee Motion S3 specifications:

- Wireless module – Espressif Systems ESP32-S3-MINI-1 module (PDF datasheet) based on the ESP32-S3 dual-core Xtensa LX7 microcontroller with 512KB SRAM, 384KB ROM, WiFi 4 and Bluetooth 5.0 connectivity, and equipped with 8MB of QSPI flash and a PCB antenna
- USB – 1x USB Type-C port for power and programming
- Sensors
  - PIR sensor S16-L221D […]

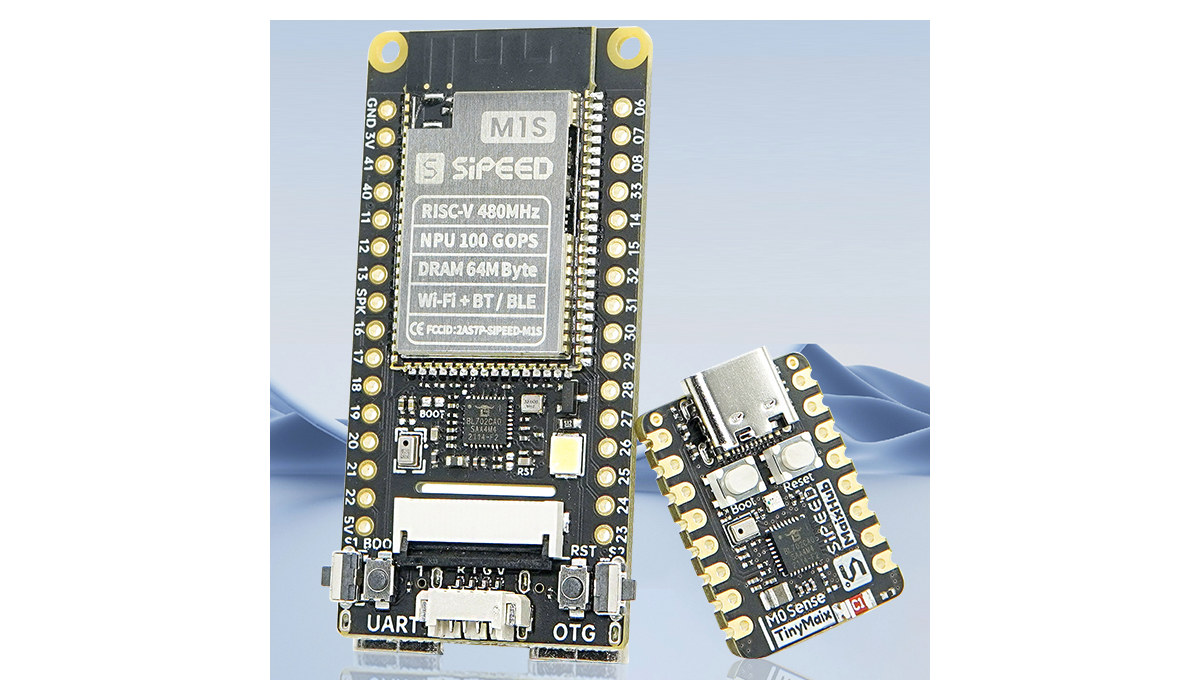

Sipeed M1s & M0sense – Low-cost BL808 & BL702 based AI modules (Crowdfunding)

Sipeed has launched the M1s and M0sense AI modules. Designed for AIoT applications, the Sipeed M1s is based on the Bouffalo Lab BL808 32-bit/64-bit RISC-V wireless SoC with WiFi, Bluetooth, and an 802.15.4 radio for Zigbee support, as well as the BLAI-100 (Bouffalo Lab AI engine) NPU for video/audio detection and/or recognition. The Sipeed M0sense targets TinyML applications with the Bouffalo Lab BL702 32-bit microcontroller, also offering BLE and Zigbee connectivity.

Sipeed M1s AIoT module

The Sipeed M1s is an update to the Kendryte K210-powered Sipeed M1 introduced several years ago.

Sipeed M1s module specifications:

- SoC – Bouffalo Lab BL808 with
  - CPU
    - Alibaba T-Head C906 64-bit RISC-V (RV64GCV+) core @ 480MHz
    - Alibaba T-Head E907 32-bit RISC-V (RV32GCP+) core @ 320MHz
    - 32-bit RISC-V (RV32EMC) core @ 160 MHz
  - Memory – 768KB SRAM and 64MB embedded PSRAM
  - AI accelerator – NPU BLAI-100 (Bouffalo Lab AI engine) for video/audio detection/recognition delivering up […]

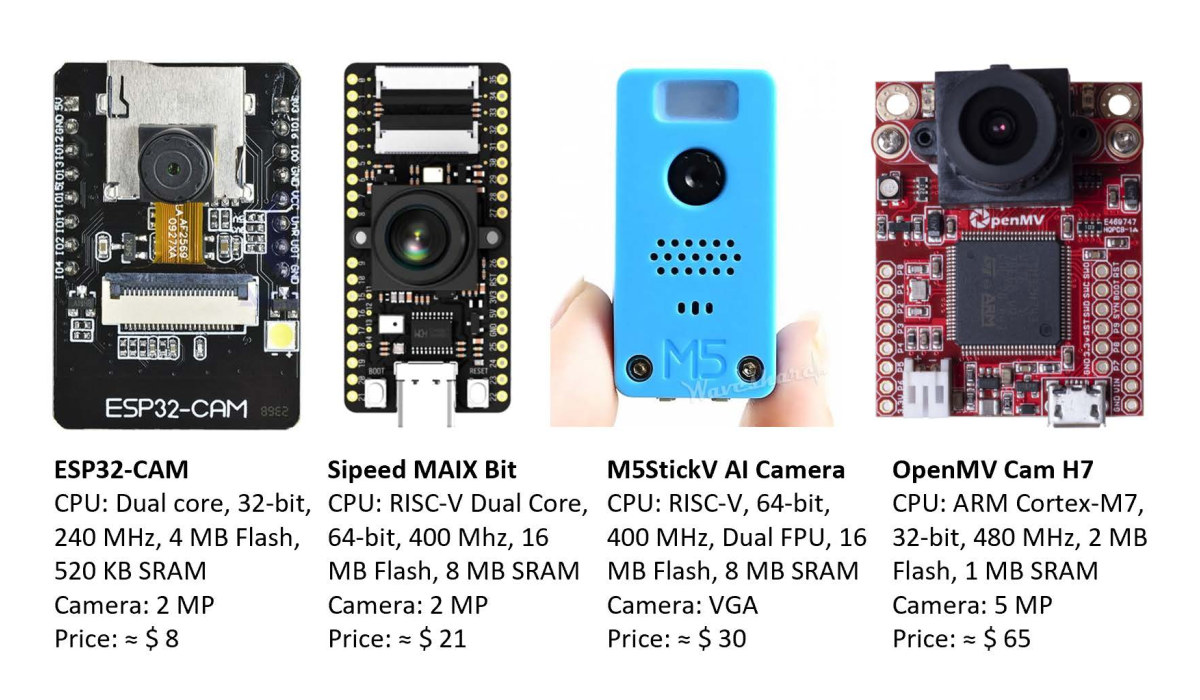

TinyML-CAM pipeline enables 80 FPS image recognition on ESP32 using just 1 KB RAM

The challenge with TinyML is to extract the maximum performance and efficiency from AI workloads at the lowest footprint on microcontroller-class hardware. The TinyML-CAM pipeline, developed by a team of machine learning researchers in Europe, demonstrates what’s possible on relatively low-end hardware with a camera. More specifically, they managed to reach over 80 FPS image recognition on the sub-$10 ESP32-CAM board with the open-source TinyML-CAM pipeline taking just about 1KB of RAM. It should work on other MCU boards with a camera, and training does not seem complex since we are told it takes around 30 minutes to implement a customized task. The researchers note that solutions like TensorFlow Lite for Microcontrollers and Edge Impulse already enable the execution of ML workloads on MCU boards using neural networks (NNs). However, those usually take quite a lot of memory, between 50 and 500 kB of RAM, and take 100 to 600 ms […]
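To put the 80 FPS figure in perspective, the frame rate can be converted into a per-frame time budget and compared with the 100 to 600 ms range quoted for neural-network approaches. This Python sketch assumes that range is per-inference latency (the excerpt is truncated) and ignores camera capture time.

```python
# Per-frame time budget implied by the figures quoted above.
# 80 FPS is the reported TinyML-CAM rate; the 100-600 ms range is assumed
# to be per-inference latency for NN-based approaches. Capture time is ignored.

def frame_budget_ms(fps):
    """Milliseconds available per frame at a given frame rate."""
    return 1000 / fps

def max_fps(latency_ms):
    """Upper bound on frame rate if each frame needs latency_ms of processing."""
    return 1000 / latency_ms

budget = frame_budget_ms(80)
print(f"80 FPS leaves {budget:.1f} ms per frame")
for latency in (100, 600):
    ratio = latency / budget
    print(f"{latency} ms inference caps throughput at {max_fps(latency):.2f} FPS "
          f"({ratio:.0f}x the 80 FPS budget)")
```

In other words, hitting 80 FPS leaves 12.5 ms per frame, so a classifier in the quoted 100 to 600 ms range would be roughly 8 to 48 times too slow for that frame rate.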