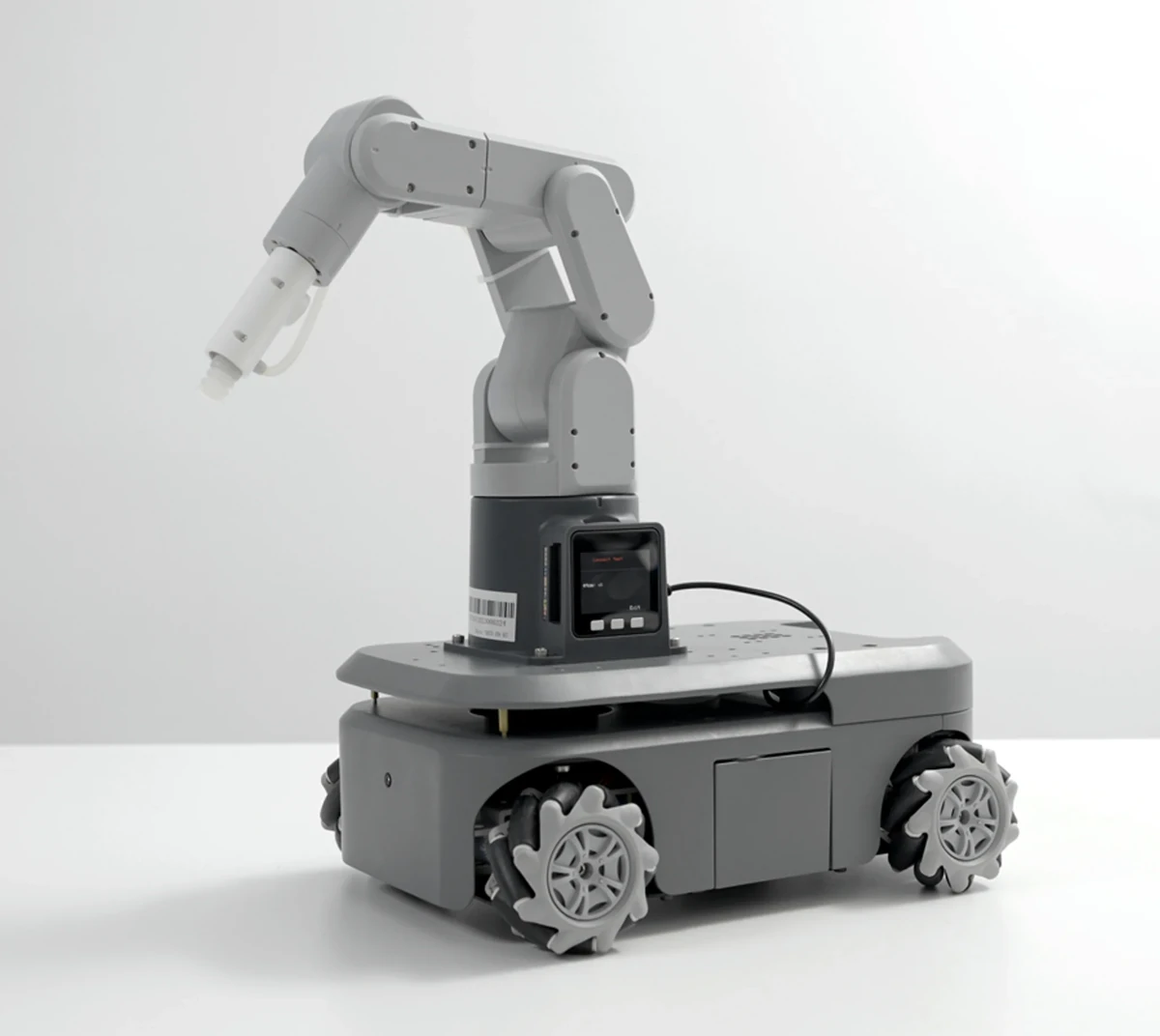

Elephant Robotics myAGV 2023 is a 4-wheel mobile robot available with either a Raspberry Pi 4 Model B SBC or an NVIDIA Jetson Nano B01 developer kit, and it supports five different types of robotic arms to cater to various use cases. Compared to the previous-generation myAGV robot, the 2023 model is fitted with high-performance planetary brushless DC motors, supports vacuum placement control, can take a backup battery, handles payloads of up to 5 kg, and integrates customizable LED lighting at the rear. myAGV 2023 specifications: Control board: Pi model – Raspberry Pi 4B with 2GB RAM; Jetson Nano model – NVIDIA Jetson Nano B01 with 4GB RAM. Wheels – 4x Mecanum wheels. Motor – Planetary brushless DC motor. Maximum linear speed – 0.9 m/s. Maximum payload – 5 kg. Video output: Pi model – 2x micro HDMI ports; Jetson Nano model – HDMI and DisplayPort video outputs. Camera: Pi model – […]
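
The four Mecanum wheels are what let a platform like the myAGV move sideways and diagonally without turning first. As a rough illustration, here is a minimal sketch of the textbook inverse kinematics for an X-configuration Mecanum base, converting a body velocity into per-wheel rim speeds. The half-wheelbase and half-track values are hypothetical placeholders, not published myAGV dimensions, and the 0.9 m/s cap simply reuses the quoted maximum linear speed; this is not Elephant Robotics' firmware.

```python
# Hypothetical geometry in metres (NOT official myAGV dimensions).
HALF_WHEELBASE = 0.10  # distance from centre to front/rear axle
HALF_TRACK = 0.10      # distance from centre to left/right wheel
MAX_WHEEL_SPEED = 0.9  # m/s, borrowed from the quoted maximum linear speed


def mecanum_wheel_speeds(vx, vy, omega, lx=HALF_WHEELBASE, ly=HALF_TRACK):
    """Return (front_left, front_right, rear_left, rear_right) rim speeds in m/s.

    vx: forward velocity (m/s), vy: leftward velocity (m/s),
    omega: counter-clockwise yaw rate (rad/s).
    Assumes the common X-configuration of rollers.
    """
    k = lx + ly
    fl = vx - vy - k * omega
    fr = vx + vy + k * omega
    rl = vx + vy - k * omega
    rr = vx - vy + k * omega
    speeds = [fl, fr, rl, rr]
    # Scale all wheels by the same factor if any exceeds the limit,
    # so the commanded direction of travel is preserved.
    peak = max(abs(s) for s in speeds)
    if peak > MAX_WHEEL_SPEED:
        speeds = [s * MAX_WHEEL_SPEED / peak for s in speeds]
    return tuple(speeds)


if __name__ == "__main__":
    print(mecanum_wheel_speeds(0.5, 0.0, 0.0))  # straight ahead
    print(mecanum_wheel_speeds(0.0, 0.3, 0.0))  # strafe left
    print(mecanum_wheel_speeds(0.0, 0.0, 1.0))  # spin in place
```

Driving straight spins all four wheels equally, while strafing runs each diagonal pair in opposite directions. Dividing the rim speeds by the wheel radius would give the angular speeds a motor controller typically expects.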

PALMSHELL NeXT H2 SBC and micro server offers 10GbE for $199 and up

PALMSHELL NeXT H2 is an affordable AMD Ryzen Embedded R1505G-powered micro server, also available as an SBC for developers, that features two 10GbE SFP+ cages and one 2.5GbE RJ45 port, as well as expansion sockets for wireless connectivity. The system supports up to 32GB RAM plus an M.2 NVMe SSD and two SATA drives for storage, offers several wireless connectivity options with WiFi 6, Bluetooth 5.2, and 4G LTE/5G, and is equipped with two 4Kp60-capable HDMI ports and four USB ports. PALMSHELL NeXT H2 specifications: SoC – AMD Ryzen Embedded R1505G dual-core/quad-thread processor @ 2.4 GHz (up to 3.3 GHz) with AMD Radeon Vega 3 graphics; TDP set to 25W. System Memory – Optional 8GB or 16GB DDR4 @ 2400 MT/s via 2x SO-DIMM slots. Storage – Optional 256GB or 512GB NVMe (PCIe 3.0) SSD via M.2 Key-M 2280 socket; 2x SATA III connectors; MicroSD card socket. Video Output – 2x HDMI […]

Rockchip RK3588 AIoT gateway supports WiFi 6, 5G, RS232, RS485, LoRaWAN, BLE, and Ethernet

Dusun DSGW-380, also called Dusun Pi 5, is an industrial AIoT gateway powered by the Rockchip RK3588 octa-core processor with a 6 TOPS AI accelerator, and it supports a wide range of connectivity options. The gateway comes with 8GB LPDDR4 memory and up to 128GB eMMC flash, and operates in a wide -25 to +75°C temperature range. It supports dual gigabit Ethernet, RS232, and RS485 wired connectivity, and various wireless protocols including WiFi 6, Bluetooth LE, 5G, and LoRaWAN. Dusun DSGW-380 specifications: SoC – Rockchip RK3588 octa-core processor with: CPU – 4x Cortex‑A76 cores @ up to 2.4 GHz, 4x Cortex‑A55 cores @ 1.8 GHz; GPU – Arm Mali-G610 MP4 “Odin” GPU @ 1.0 GHz; Video decoder – 8Kp60 H.265, VP9, AVS2, 8Kp30 H.264 AVC/MVC, 4Kp60 AV1, 1080p60 MPEG-2/-1, VC-1, VP8; Video encoder – 8Kp30 H.265/H.264; AI accelerator – 6 TOPS NPU. System Memory – 8GB RAM. Storage – […]

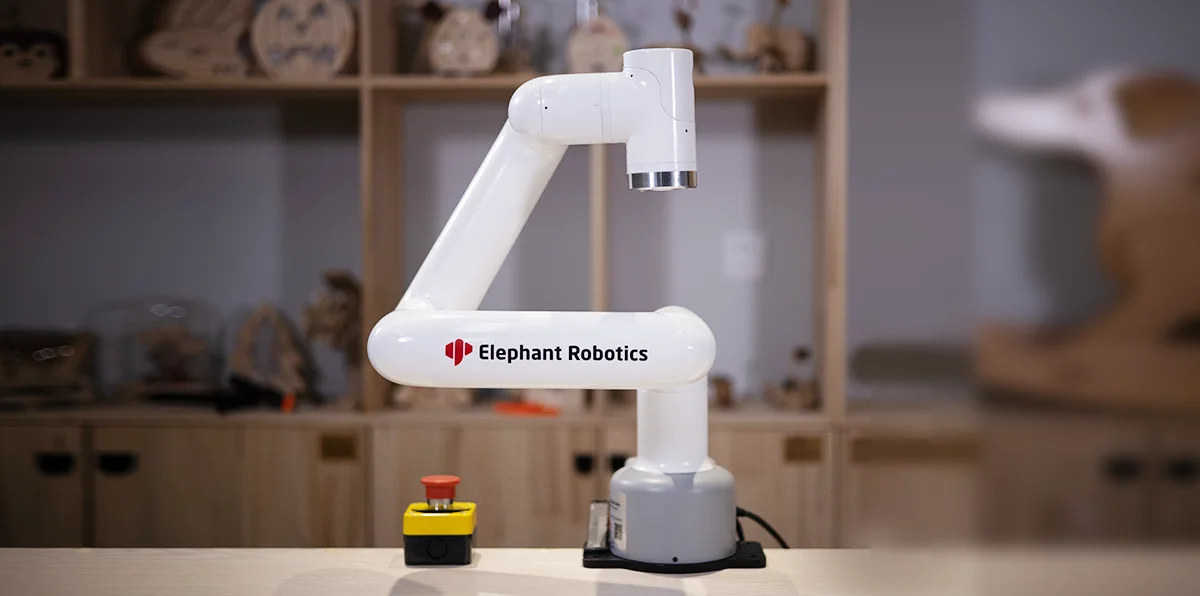

myCobot Pro 600 Raspberry Pi 4-based robot arm supports 600mm working range, up to 2kg payload

Elephant Robotics has launched its most advanced 6-DoF robot arm so far, the myCobot Pro 600, which is equipped with a Raspberry Pi 4 SBC, offers a maximum 600mm working range, and supports payloads of up to 2kg. We’ve previously covered Elephant Robotics’ myCobot robotic arms based on Raspberry Pi 4, ESP32, Jetson Nano, or Arduino, and even reviewed the myCobot 280 Pi using both Python and visual programming. The new Raspberry Pi 4-based myCobot Pro 600 provides about the same features, but its much larger design lets it cover larger areas and handle heavier objects. myCobot Pro 600 specifications: SBC – Raspberry Pi 4 single board computer. MCU – 240 MHz ESP32 dual-core microcontroller (600 DMIPS) with 520KB SRAM, Wi-Fi & dual-mode Bluetooth. Video Output – 2x micro HDMI 2.0 ports. Audio – 3.5mm audio jack, digital audio via HDMI. Networking – Gigabit Ethernet, dual-band WiFi […]

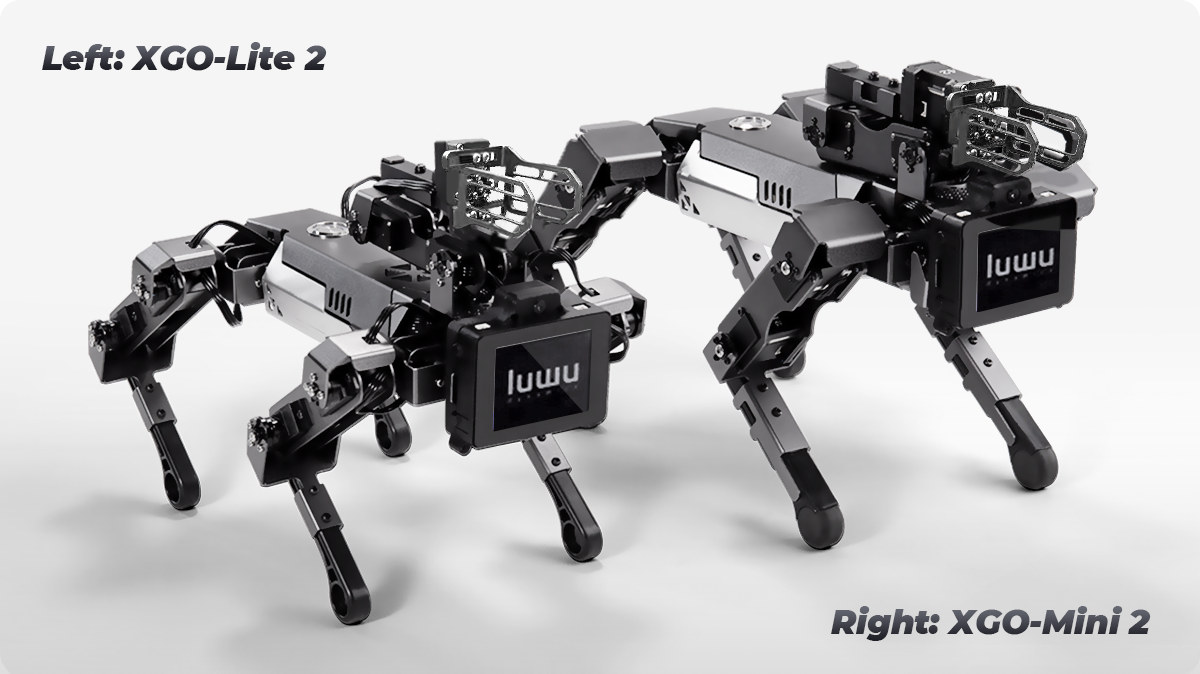

XGO 2 – A Raspberry Pi CM4 based robot dog with an arm (Crowdfunding)

XGO 2 is a desktop robot dog that uses the Raspberry Pi CM4 as its brain and an ESP32 as the motor controller for its four legs and an additional robotic arm that lets the quadruped grab objects. An evolution of the XGO mini robot dog, which was based on a Kendryte K210 RISC-V AI processor, the XGO 2 offers 12 degrees of freedom, and the more powerful Raspberry Pi CM4 module enables faster AI edge computing applications, as well as features such as omnidirectional movement, six-dimensional posture control, posture stability, and multiple motion gaits. The XGO 2 robot dog is offered in two variants, the XGO-Lite 2 and the XGO-Mini 2, with the following key features and specifications: The company also says the new robot can provide feedback on its own posture thanks to its 6-axis IMU and joint sensors that report position and electric current. A display […]
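
Posture feedback from a 6-axis IMU (3-axis accelerometer plus 3-axis gyroscope) usually involves fusing the two sensors, since the gyro drifts over time and the accelerometer is noisy during motion. We don't know what XGO 2's firmware actually does, so the snippet below is only a minimal sketch of one common technique, a complementary filter for a single pitch angle. The `alpha` value of 0.98 and the axis sign convention are assumptions for illustration.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle (rad) implied by gravity, from accelerometer readings in g.

    The sign convention depends on how the IMU is mounted; this one is assumed.
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


class ComplementaryFilter:
    """Fuse gyro rate and accelerometer tilt into one pitch estimate.

    An alpha close to 1 trusts the integrated gyro for fast motion, while the
    accelerometer slowly corrects the gyro's drift. 0.98 is an illustrative
    assumption, not a value from the XGO 2 firmware.
    """

    def __init__(self, alpha=0.98):
        self.alpha = alpha
        self.pitch = 0.0  # current estimate in radians

    def update(self, gyro_rate, ax, ay, az, dt):
        # gyro_rate: pitch rate in rad/s; dt: sample period in seconds
        gyro_estimate = self.pitch + gyro_rate * dt
        self.pitch = (self.alpha * gyro_estimate
                      + (1 - self.alpha) * accel_pitch(ax, ay, az))
        return self.pitch


if __name__ == "__main__":
    # Robot held still at a 10-degree tilt, sampled at 100 Hz for 5 seconds.
    tilt = math.radians(10)
    f = ComplementaryFilter()
    for _ in range(500):
        pitch = f.update(0.0, -math.sin(tilt), 0.0, math.cos(tilt), 0.01)
    print(math.degrees(pitch))
```

With the robot at rest, the estimate converges geometrically toward the accelerometer's tilt. During fast motion, the gyro term dominates and keeps the estimate responsive.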

ultraArm P340 Arduino-based robotic arm draws, engraves, and grabs

Elephant Robotics ultraArm P340 is a robot arm with an Arduino-compatible ATmega2560 control board and a 340mm working radius, and it can be fitted with different accessories for drawing, laser engraving, and grabbing objects. We’ve previously written about and reviewed the myCobot 280 Pi robotic arm with a built-in Raspberry Pi 4 SBC, but the lower-cost ultraArm P340 works a little differently: it only contains the electronics for controlling the motors and attachments, and it needs to be connected over USB to a host computer running Windows or to a Raspberry Pi. ultraArm P340 specifications: Control board – Based on Microchip ATmega2560 8-bit AVR microcontroller @ 16MHz with 256KB flash, 4KB EEPROM, 8KB SRAM. DOF – 3 to 4 axes depending on accessories. Working radius – 340mm. Positioning accuracy – ±0.1 mm. Payload – Up to 650 grams. High-performance stepper motor. Maximum speed – 100mm/s. Communication interfaces – RS485 and USB serial. Attachment […]

Sipeed MetaSense RGB ToF 3D depth cameras are made for MCUs & ROS robots (Crowdfunding)

We’ve just written about the Arducam ToF camera that adds depth sensing to the Raspberry Pi, but there are now more choices: Sipeed has just introduced its MetaSense ToF (Time-of-Flight) camera family for microcontrollers and robots running ROS, with two models offering different sets of features and capabilities. The MetaSense A075V USB camera offers 320×240 depth resolution plus an extra RGB sensor, an IMU, and a CPU with a built-in NPU, which make it well suited to ROS 1/2 robots, while the lower-end MetaSense A010 ToF camera offers up to 100×100 resolution, integrates a 1.14-inch LCD to visualize depth data in real time, and can be connected to a microcontroller host, or even a Raspberry Pi, through UART or USB. MetaSense A075V specifications: SoC – Unnamed quad-core Arm Cortex-A7 processor @ 1.5 GHz with 0.4 TOPS NPU. System Memory – 128 MB RAM. Storage – 128MB flash. Cameras – 800×600 @ 30 […]
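
A ToF camera like the MetaSense A010 produces a 2D grid of distances. A robot typically turns that grid into 3D points before mapping or obstacle avoidance. The sketch below shows the standard pinhole back-projection under two stated assumptions: each pixel holds depth along the optical axis (some ToF sensors report radial distance instead, which would need converting first), and the intrinsics `fx`, `fy`, `cx`, `cy` come from your own calibration. No values here are taken from Sipeed's documentation, and `depth_to_points` is a hypothetical helper, not part of any Sipeed SDK.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into 3D points in the camera frame.

    depth: list of rows, each a list of distances in metres along the optical
    axis (0 or negative means no valid return).
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Returns a list of (x, y, z) tuples in metres.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid ToF measurement
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # A flat wall 1 m away filling a 100x100 frame; the intrinsics are made up.
    frame = [[1.0] * 100 for _ in range(100)]
    cloud = depth_to_points(frame, fx=80.0, fy=80.0, cx=49.5, cy=49.5)
    print(len(cloud), cloud[0])
```

In a ROS setup, the same arithmetic is what depth-to-point-cloud nodes perform using the camera's published intrinsics. On a microcontroller host, you would likely process one row at a time rather than build the whole list in memory.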

AAEON launches UP Xtreme i11 & UP Squared 6000 robotic development kits

AAEON’s UP Bridge the Gap community has partnered with Intel to release two robotic development kits, based on either the UP Xtreme i11 or the UP Squared 6000 single board computer, to simplify robotics evaluation and development. Both kits come with a four-wheeled robot prototype that can move omnidirectionally, sense and map its environment, avoid obstacles, and detect people and objects. Since those capabilities are already implemented, users can save time and money and focus on customization for their specific use cases. Key features and specifications: SBC: UP Squared 6000 SBC – Recommended for power efficiency and extended battery life; SoC – Intel Atom x6425RE Elkhart Lake processor clocked at up to 1.90 GHz with Intel UHD Graphics; System Memory – 8GB LPDDR4; Storage – 64GB eMMC flash, SATA port. UP Xtreme i11 SBC – Recommended for higher performance; SoC – Intel Core i7-1185GRE clocked at up to 4.40 GHz and […]