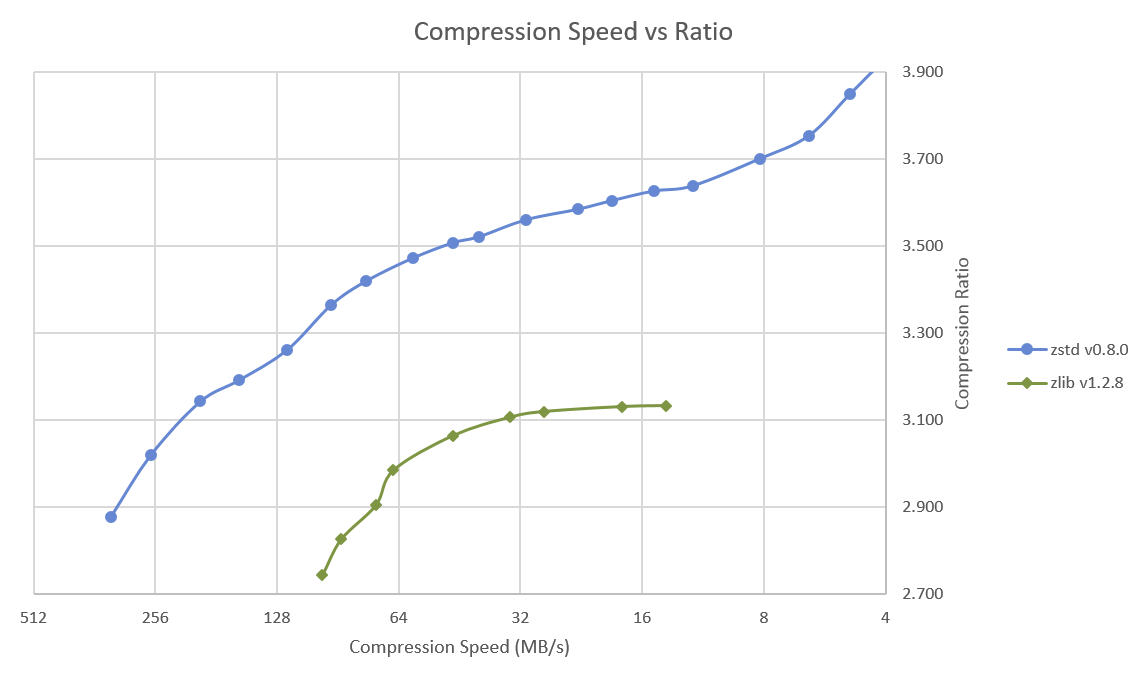

Ubuntu 16.04 and, I assume, other recent operating systems still use single-threaded versions of file and data compression utilities such as bzip2 or gzip by default. I’ve recently learned that compatible multi-threaded compression tools such as lbzip2, pigz or pixz have been around for a while, and that you can replace the default tools with them for much faster compression and decompression on multi-core systems. This post led to further discussion about Facebook’s Zstandard 1.0, which promises both smaller compressed files and faster compression. The implementation is open source, released under a BSD license, and offers both zstd, a single-threaded tool, and pzstd, a multi-threaded tool. So we all started to run our own little tests and were impressed by the results. Some concerns were raised about patents, and development is still a work in progress with a few bugs here and there, including pzstd segfaulting on ARM. Zlib has 9 levels of […]

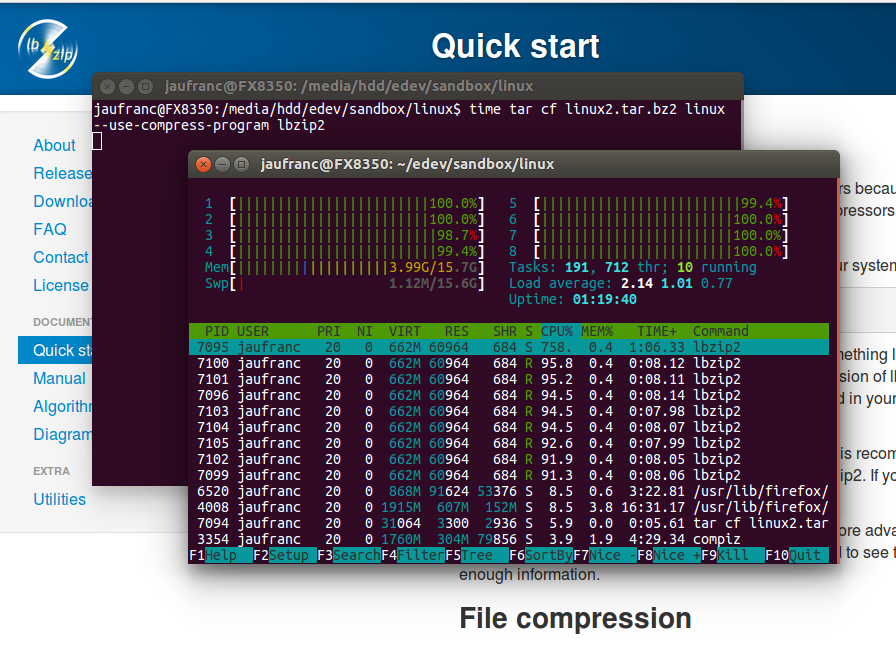

Compress & Decompress Files Faster with lbzip2 multi-threaded version of bzip2

Bzip2 is still one of the most commonly used compression tools in Linux, but it only works with a single thread. I’ve been made aware that lbzip2 allows multi-threaded bzip2 compression, which should lead to much better performance on multi-core systems. lbzip2 was not installed by default on my Ubuntu 16.04 machine, but it’s easy enough to install:

    sudo apt install lbzip2

I have cloned the mainline Linux repository on my machine, so let’s see how long it takes to compress the directory with bzip2 (single-core compression):

    time tar cjf linux.tar.bz2 linux

    real 9m22.131s
    user 7m42.712s
    sys 0m19.280s

9 minutes and 22 seconds. Now let’s repeat the test with lbzip2 using all 8 cores from my AMD FX8350 processor:

    time tar cf linux2.tar.bz2 linux --use-compress-program=lbzip2

    real 2m32.660s
    user 7m4.072s
    sys 0m17.824s

2 minutes 32 seconds. Almost 4 times faster, not bad at all. It’s not 8 times faster because you have to take I/O into account, and at the beginning the system is scanning the drive, using all 8 cores but not at full throttle. […]
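As a rough check on the "almost 4 times" figure, the two `time` outputs above can be turned into a speedup ratio and an average number of busy cores. This is only a sketch: the helper names `to_seconds` and `ratio` are made up for illustration, and the only inputs are the timings reported above.

```shell
#!/bin/sh
# Hypothetical helpers (not part of tar, bzip2 or lbzip2).

# Convert a `time`-style duration such as 9m22.131s into seconds.
to_seconds() {
    echo "$1" | awk -F'm' '{ sub(/s$/, "", $2); printf "%.3f\n", $1 * 60 + $2 }'
}

# Print a / b rounded to two decimals.
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Wall-clock ("real") times of the bzip2 and lbzip2 runs.
single=$(to_seconds 9m22.131s)
multi=$(to_seconds 2m32.660s)
echo "speedup: $(ratio "$single" "$multi")x"

# CPU time (user + sys) of the lbzip2 run divided by its wall-clock time
# approximates how many cores were busy on average.
cpu=$(awk -v u="$(to_seconds 7m4.072s)" -v s="$(to_seconds 0m17.824s)" \
    'BEGIN { printf "%.3f\n", u + s }')
echo "average busy cores: $(ratio "$cpu" "$multi")"
```

With these timings the script reports a speedup of about 3.68x and roughly 2.89 busy cores on average, which fits the point that the 8 cores were not running at full throttle for the whole job.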

Use GNU Parallel to Speed Up Script Execution on Multiple Cores and/or Machines

I attended BarCamp Chiang Mai 5 last weekend. A lot of sessions were related to project management, business apps and web development, but there were also a few embedded systems sessions dealing with subjects such as Arduino (showing how to blink an LED…) and the IOIO board for Android, as well as some Linux-related sessions. The most useful talk I attended was about “GNU Parallel”, a command-line tool that can dramatically speed up time-consuming tasks that can be executed in parallel, by spreading them across multiple cores and/or machines on a LAN. The session was presented by the developer himself (Ole Tange). The tool is used for intensive data processing tasks such as DNA sequencing analysis (bioinformatics), but it might be possible to find a way to use GNU Parallel to shorten the time it takes to build binaries. Make is already doing a good job at […]