SmartCow, an AI engineering company specializing in video analytics, AIoT devices, and smart city solutions, and an NVIDIA Metropolis partner, has released a new version of the Ultron controller. SmartCow Ultron is an AI-enabled controller with sensor fusion capabilities that empowers industrial applications of vision AI at the edge. Powered by the NVIDIA Jetson Xavier NX system-on-module and compatible with Jetson Orin NX, Ultron is suitable for smart traffic and manufacturing applications that can leverage its high computing power and low latency for vision AI in various configurations. It is also applicable in smart factories, smart cities, smart buildings, and smart agriculture. Ultron takes automation a step further than traditional PLC solutions with vision analysis. Based on powerful NVIDIA Jetson modules, Ultron can handle demanding visual AI processing workloads, with model architectures for tasks such as image classification, object detection, and segmentation. The new version of Ultron enhances the flexibility of its […]

reComputer J4012 mini PC features NVIDIA Jetson Orin NX for AI Edge applications

reComputer J4012 is a mini PC or “Edge AI computer” based on the new NVIDIA Jetson Orin NX, a cost-down version of the Jetson AGX Orin, delivering up to 100 TOPS of AI performance. The mini PC is based on the Jetson Orin NX 16GB, comes with a 128GB M.2 SSD preloaded with the NVIDIA JetPack SDK, and offers Gigabit Ethernet, four USB 3.2 ports, and HDMI 2.1 output. Wireless connectivity can be added through the system’s M.2 Key E socket. reComputer J4012 / J401 specifications: SoM – NVIDIA Jetson Orin NX 16GB with CPU – 8x Arm Cortex-A78AE cores @ up to 2.0 GHz with 2MB L2 + 4MB L3 cache; GPU/AI – 1024-core NVIDIA Ampere GPU with 32 Tensor Cores @ up to 918 MHz, 2x NVDLA v2.0 @ 614 MHz, PVA v2 vision accelerator, 100 TOPS AI performance (sparse); Video Encoder (H.265) – 1x 4Kp60 | 3x 4Kp30 6x […]

Orbbec Femto Mega 3D depth and 4K RGB camera features NVIDIA Jetson Nano, Microsoft ToF technology

Orbbec Femto Mega is a programmable multi-mode 3D depth and RGB camera based on the NVIDIA Jetson Nano system-on-module and the Microsoft ToF technology found in HoloLens and Azure Kinect DevKit. As an upgrade of the earlier Orbbec Femto, the camera is equipped with a 1MP depth camera with a 120-degree field of view and a range of 0.25m to 5.5m as well as a 4K RGB camera, and enables real-time streaming of processed images over Ethernet or USB. Orbbec Femto Mega specifications: SoM – NVIDIA Jetson Nano system-on-module; Cameras – 1MP depth camera: Precision: ≤17mm; Accuracy: < 11 mm + 0.1% distance; NFoV unbinned & binned: H 75°, V 65°; WFoV unbinned & binned: H 120°, V 120°; Resolutions & framerates: NFoV unbinned: 640×576 @ 5/15/25/30fps, NFoV binned: 320×288 @ 5/15/25/30fps, WFoV unbinned: 1024×1024 @ 5/15fps, WFoV binned: 512×512 @ 5/15/25/30fps; 4K RGB camera: FOV – H: 80°, V: 51°, D: 89° ±2°; Resolutions […]

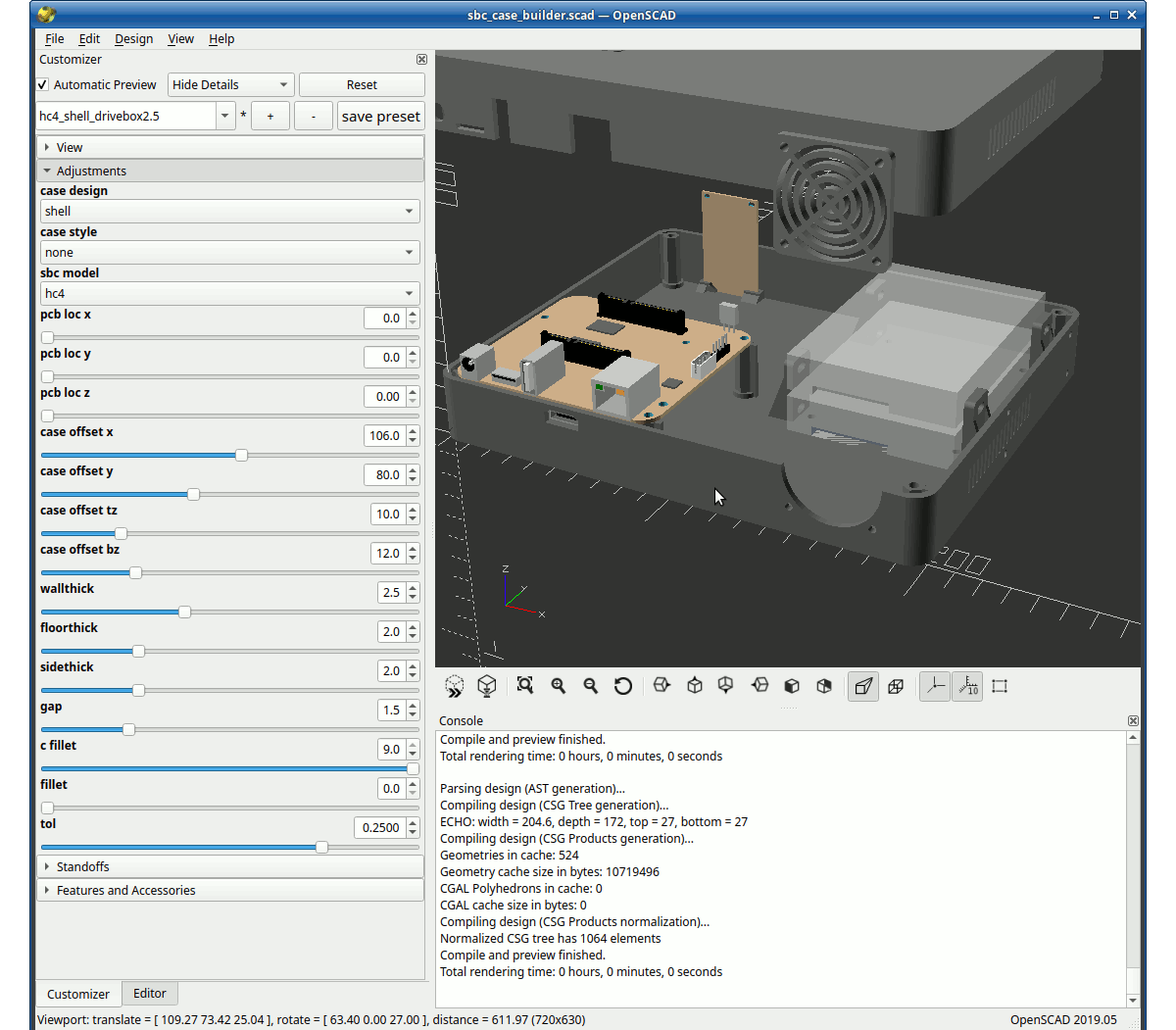

SBC Case Builder 2.0 released with GUI

SBC Case Builder 2.0 tool to create enclosures for single board computers has been released with a customizer graphical user interface, additional cases & SBCs, support for variable height standoffs, and more. We wrote about the SBC Case Builder tool to easily generate various types of 3D printable enclosures using OpenSCAD earlier this year. The SBC Model Framework used in the solution was focused on ODROID boards, and you had to type the parameters in a configuration file. SBC Case Builder 2.0 software changes that with a convenient-to-use graphical interface allowing for the dynamic adjustment of any of the case attributes. The new version of the software also supports variable height standoffs, multi-associative parametric accessory positioning, and offers 8 “base cases”, namely shell, panel, stacked, tray, round, hex, snap, and fitted. The solution works with 47 SBCs defined in the latest version of the SBC Model Framework. The following SBCs […]

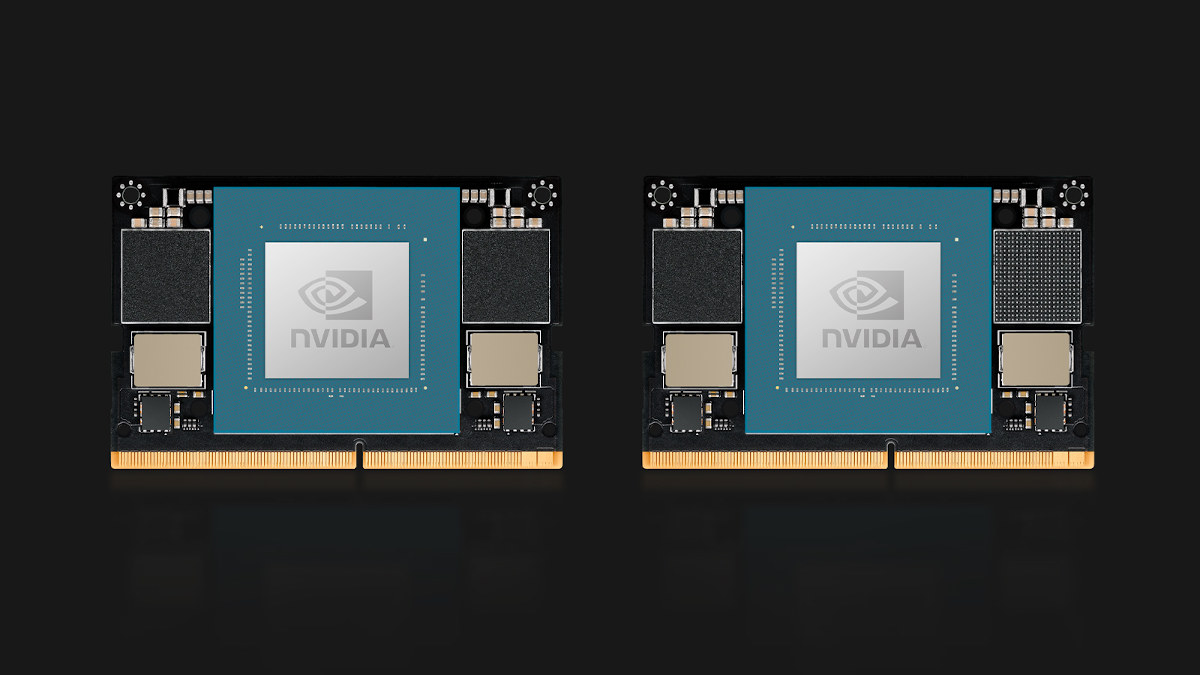

$199+ NVIDIA Jetson Orin Nano system-on-module delivers up to 40 TOPS

NVIDIA Jetson Orin Nano system-on-module (SoM) is an update to the Jetson Nano entry-level Edge AI and robotics module that delivers up to 40 TOPS of AI performance, meaning it’s up to 80 times faster than the original module. The new SoM features a hexa-core Arm Cortex-A78AE processor, an up to 1024-core NVIDIA Ampere architecture GPU with 32 Tensor cores, up to 8GB RAM, and the same 260-pin SO-DIMM connector found in the Jetson Orin NX modules. Two versions are offered with the following specifications: That means the Jetson Orin family now has six modules ranging from 20 TOPS to 275 TOPS. There’s no specific development kit for the Jetson Orin Nano SoM since it can be emulated on the NVIDIA Jetson AGX Orin developer kit, and it is supported by the JetPack 5.0.2 SDK based on Ubuntu 20.04. NVIDIA has tested some dense INT8 and FP16 pre-trained models from NGC and […]

NVIDIA JetPack 5.0.2 release supports Ubuntu 20.04, Jetson AGX Orin

The NVIDIA JetPack 5.0.2 production release is out with Ubuntu 20.04, the Jetson Linux 35.1 BSP 1 with Linux Kernel 5.10, a UEFI-based bootloader, support for the Jetson AGX Orin module and developer kit, as well as updated packages such as CUDA 11.4, TensorRT 8.4.1, and cuDNN 8.4.1. NVIDIA Jetson modules and developer kits are nice little pieces of hardware for AI workloads, but the associated NVIDIA JetPack SDK was based on the older Ubuntu 18.04, which was not suitable for some projects. But the good news is that Ubuntu 20.04 was being worked on and was initially available through the JetPack 5.0.0/5.0.1 developer previews, and the NVIDIA JetPack 5.0.2 SDK is the first production release with support for Ubuntu 20.04. Besides the upgrade to Ubuntu 20.04, the JetPack 5.0.2 SDK also adds support for both the Jetson AGX Orin Developer Kit and the newly-available Jetson AGX Orin 32 GB production module. It still […]

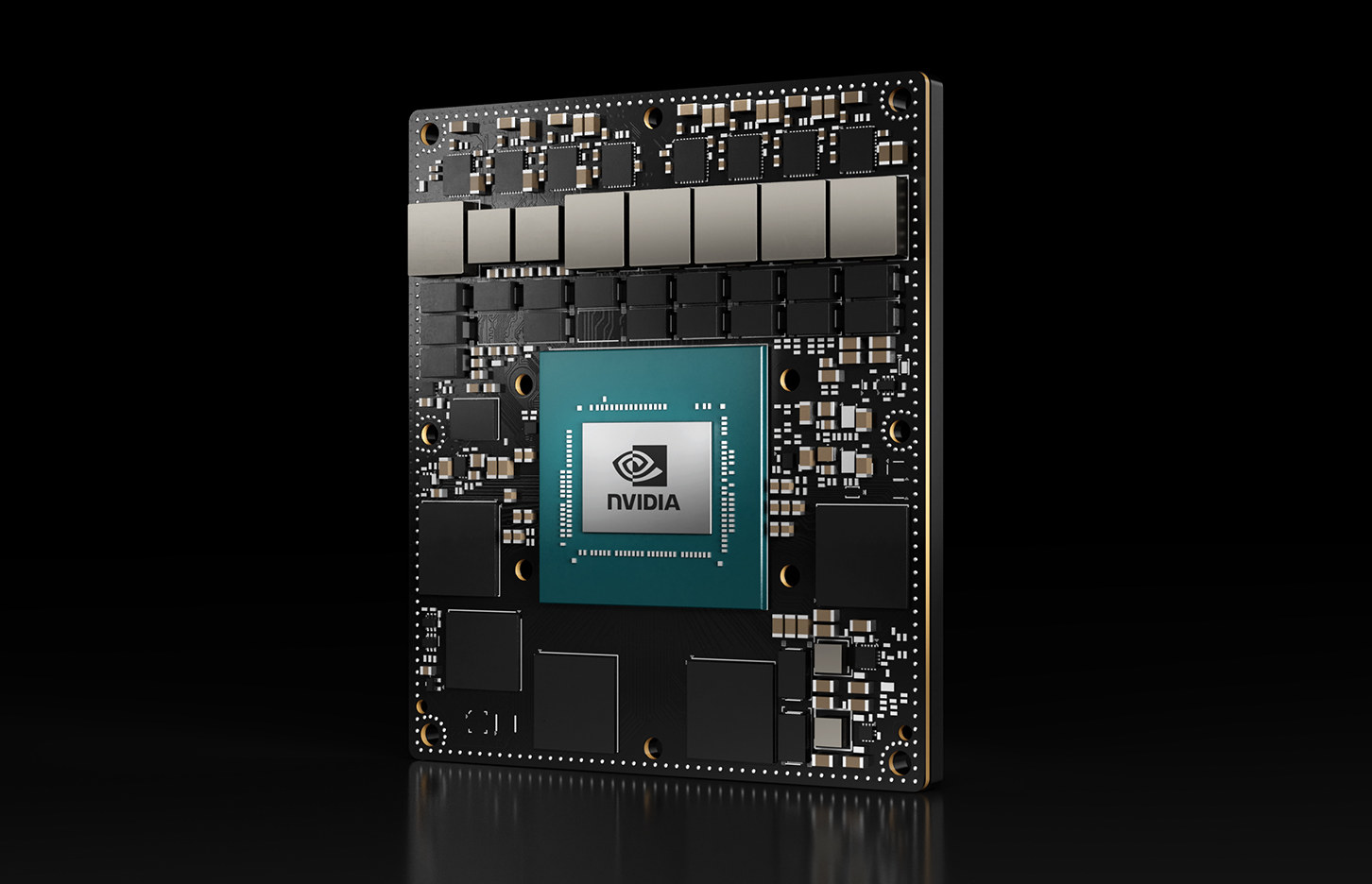

NVIDIA Jetson AGX Orin 32GB production module is now available

NVIDIA Jetson AGX Orin 32GB production module is now in mass production and available after the 12-core Cortex-A78AE system-on-module was first announced in November 2021, and the Jetson AGX Orin developer kit was launched last March for close to $2,000. Capable of up to 200 TOPS of AI inference performance, or up to 6 times faster than the Jetson AGX Xavier, the NVIDIA Jetson AGX Orin 32GB can be used for AI, IoT, embedded, and robotics deployments, and NVIDIA says nearly three dozen partners are offering commercially available products based on the new module. Here’s a reminder of NVIDIA Jetson AGX Orin 32GB specifications: CPU – 8-core Arm Cortex-A78AE v8.2 64-bit processor with 2MB L2 + 4MB L3 cache GPU / AI accelerators NVIDIA Ampere architecture with 1792 NVIDIA CUDA cores and 56 Tensor Cores @ 1 GHz DL Accelerator – 2x NVDLA v2.0 Vision Accelerator – PVA v2.0 (Programmable Vision […]

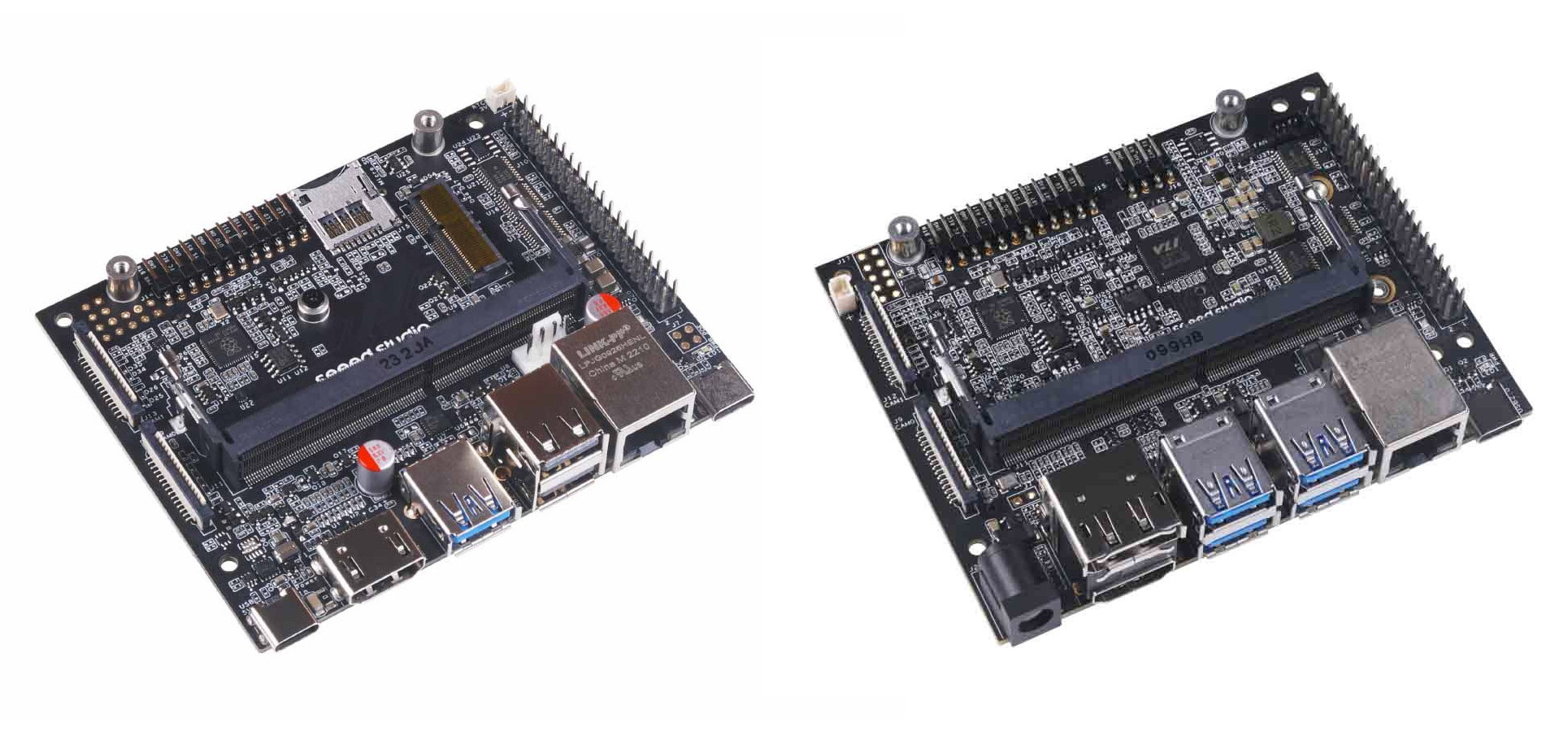

reComputer J101/J202 carrier boards are designed for Jetson Nano/NX/TX2 NX SoM

Seeed Studio’s reComputer J101 & J202 are carrier boards with a form factor similar to the ones found in the NVIDIA Jetson Nano and Jetson Xavier NX developer kits, but with a slightly different feature set. The reComputer J101 notably features different USB Type-A/Type-C ports and a microSD card slot, takes power from a USB Type-C port, and drops the DisplayPort connector, while the reComputer J202 board replaces the micro USB device port with a USB Type-C port, adds a CAN Bus interface, and switches to 12V power input instead of 19V. The table below summarizes the features and differences between the Jetson Nano devkit (B1), reComputer J101, Jetson Xavier NX devkit, and reComputer J202. Note that the official Jetson boards should also support production SoMs with eMMC flash, but they ship with a non-production SoM with a built-in microSD card socket instead. The carrier boards are so similar that if NVIDIA would […]