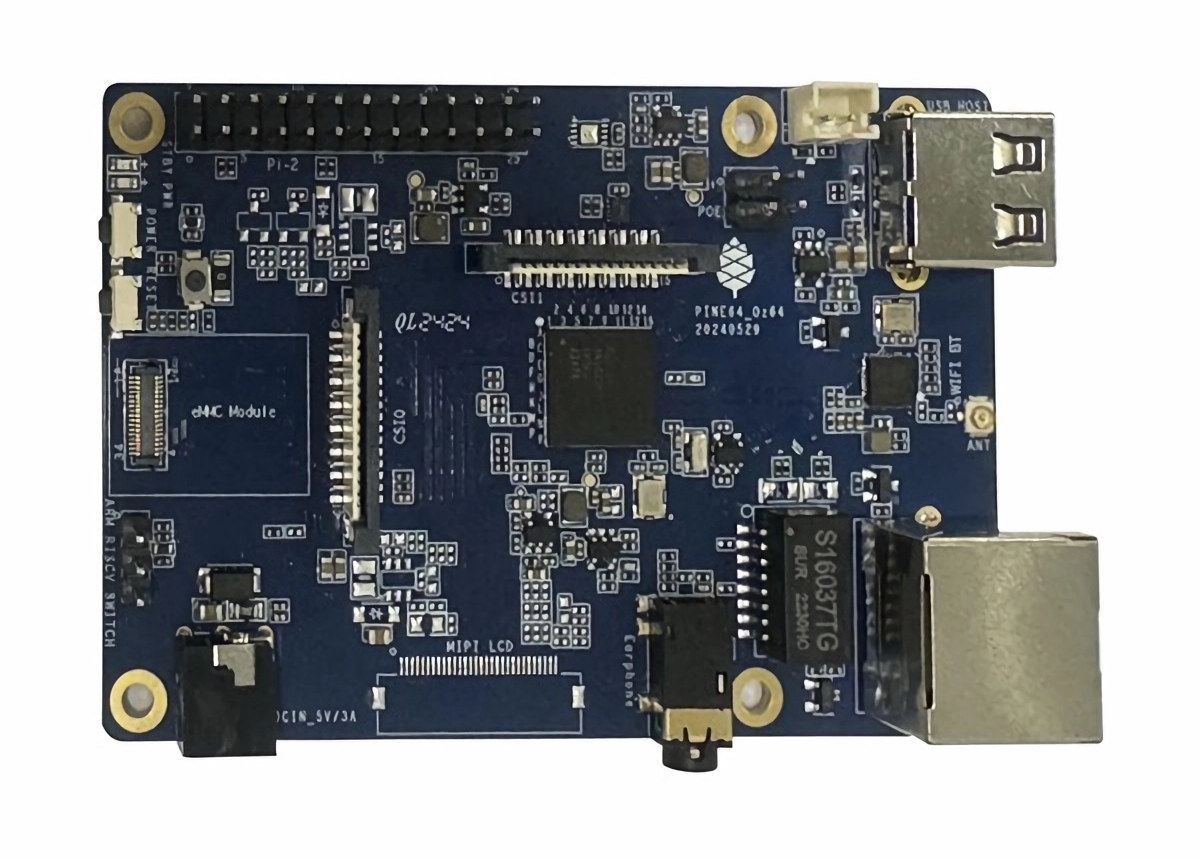

Pine64 Oz64 is an upcoming credit card-sized SBC based on the SOPHGO SG2000 RISC-V+Arm(+8051) processor that currently runs NuttX RTOS, with a Debian Linux image also in the works. With a name likely inspired by the earlier Pine64 Ox64 RISC-V SBC, the Oz64 is a more powerful embedded board with 512 MB of DRAM integrated into the SG2000, a microSD card slot, an eMMC flash module connector, an Ethernet port, WiFi 6 and Bluetooth 5.2, a USB 2.0 Type-A host port, and a 26-pin GPIO header. Pine64 Oz64 specifications: SoC – SOPHGO SG2000 Main core – 1 GHz 64-bit RISC-V C906 or Arm Cortex-A53 core (selectable) Minor core – 700 MHz 64-bit RISC-V C906 core Low-power core – 25 to 300 MHz 8051 MCU core with 8KB SRAM NPU – 0.5 TOPS INT8, supports BF16 Integrated 512MB DDR3 (SiP) Storage MicroSD card slot eMMC flash module connector Display – Optional 2-lane […]

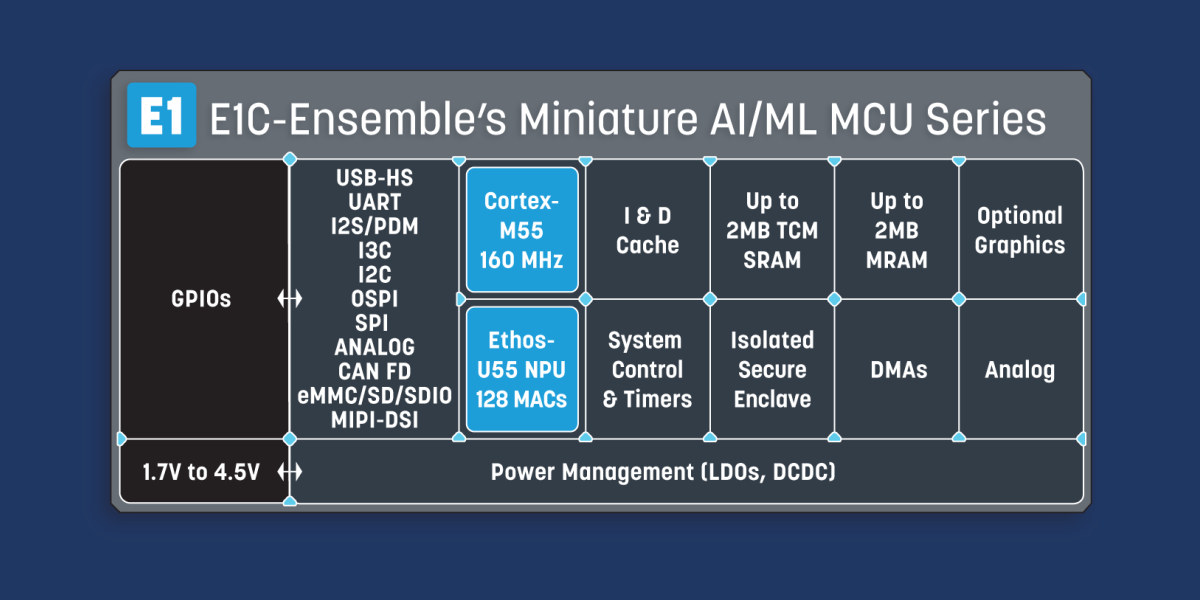

Alif Semi Ensemble E1C is an entry-level Cortex-M55 MCU with a 46 GOPS Ethos-U55 AI/ML accelerator

Alif Semi Ensemble E1C is an entry-level addition to the company's Ensemble family of Cortex-A32/M55 processors and microcontrollers with Ethos-U55 microNPUs. It targets the very edge with a 160 MHz Cortex-M55 microcontroller and a 46 GOPS Ethos-U55 NPU. The Ensemble E1C is virtually the same as the E1 microcontroller but with less memory (2MB SRAM) and storage (up to 1.9MB of non-volatile MRAM), and it is offered in more compact 64-, 90-, or 120-pin packages as small as 3.9 x 3.9mm. Alif Semi Ensemble E1C specifications: CPU – Arm Cortex-M55 core up to 160 MHz with Helium Vector Processing Extension, 16KB Instruction and Data caches, Armv8.1-M ISA with Arm TrustZone; 4.37 CoreMark/MHz GPU – Optional D/AVE 2D Graphics Processing Unit MicroNPU – 1x Arm Ethos-U55 Neural Processing Unit with 128 MAC; up to 46 GOPS On-chip application memory Up to 1.9 MB MRAM Non-Volatile Memory Up to 2MB Zero Wait-State SRAM with optional […]

Orange Pi 3B V2.1 SBC has been revamped with better WiFi 5 connectivity, M.2 2280 NVMe/SATA SSD socket

Shenzhen Xunlong Software has introduced the Orange Pi 3B V2.1 SBC with an M.2 socket that supports 2280 NVMe or SATA SSDs, and a new Ampak AP6256 WiFi 5 and Bluetooth 5 wireless module replacing the Allwinner AW859A-based CDTech 20U5622 module in the first revision of the board. The Orange Pi 3B SBC was first introduced in August 2023 as a Rockchip RK3566 SBC with Raspberry Pi 3B form factor and support for M.2 2230 and 2242 NVMe or SATA storage. The new Orange Pi 3B V2.1 supports longer M.2 2280 SSDs at the cost of being slightly bigger than a credit card (89×56 mm) and offers better WiFi 5 connectivity. Orange Pi 3B V2.1 specifications: SoC – Rockchip RK3566 CPU – Quad-core Cortex-A55 processor @ up to 1.8 GHz GPU – Arm Mali-G52 2EE GPU with support for OpenGL ES 1.1/2.0/3.2, OpenCL 2.0, Vulkan 1.1 NPU – 0.8 TOPS […]

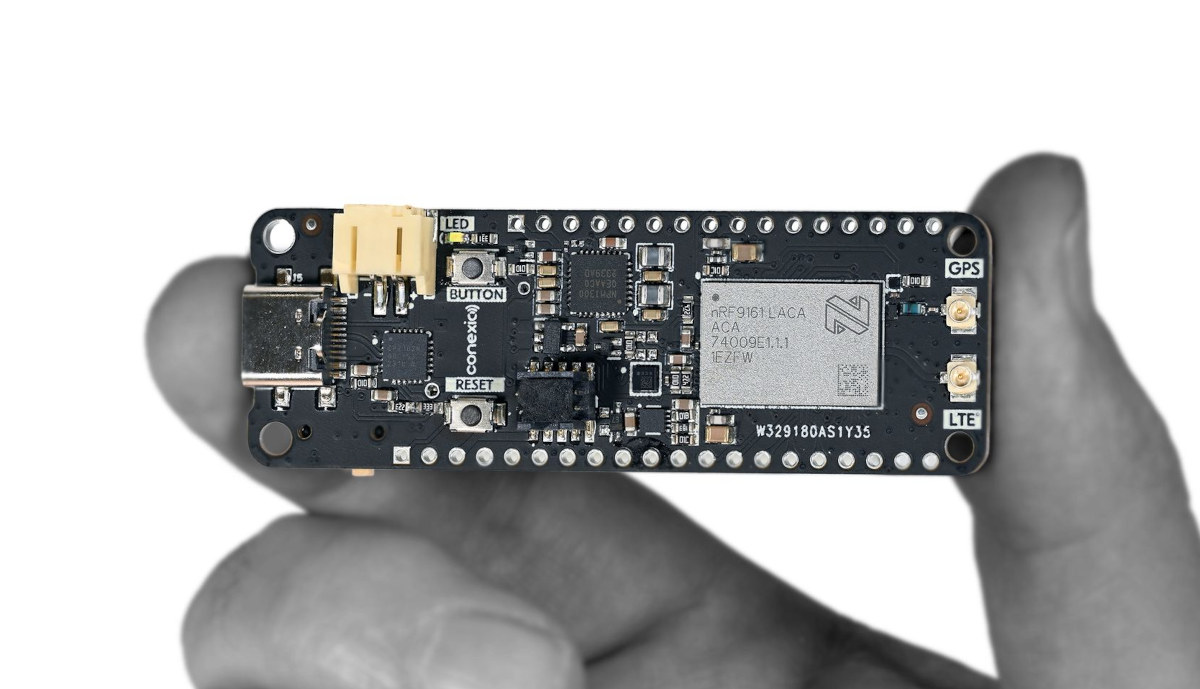

Conexio Stratus Pro – A battery-powered nRF9161 development kit with LTE IoT, DECT NR+, GNSS connectivity (Crowdfunding)

Conexio Stratus Pro is a tiny IoT development kit based on the Nordic Semi nRF9161 system-in-package (SiP) with LTE-M/NB-IoT, DECT NR+, and GNSS connectivity, designed to create battery-powered, cellular-connected electronic projects and products such as asset trackers, environmental monitors, smart meters, and industrial automation devices. Just like the previous-generation Conexio Stratus board based on the Nordic Semi nRF9160 cellular IoT SiP, the new Conexio Stratus Pro board supports solar energy harvesting and comes in a Feather form factor with a Qwiic connector for easy expansion. Conexio Stratus Pro specifications: System-in-package – Nordic Semi nRF9161 SiP MCU – Arm Cortex-M33 clocked at 64 MHz with 1 MB flash pre-programmed with the MCUboot bootloader, 256 KB RAM Modem Transceiver and baseband 3GPP LTE release 14 LTE-M/NB-IoT support DECT NR+ ready GPS/GNSS receiver RF Transceiver for global coverage supporting bands: B1, B2, B3, B4, B5, B8, B12, B13, B17, B18, B19, B20, B25, B26, B28, […]

Leveraging GPT-4o and NVIDIA TAO to train TinyML models for microcontrollers using Edge Impulse

We previously tested the Edge Impulse machine learning platform, showing how to train and deploy a model with IMU data from the XIAO BLE Sense board relatively easily. Since then, the company has announced support for the NVIDIA TAO toolkit in Edge Impulse, and it has now added the latest GPT-4o LLM to the ML platform to help users quickly train TinyML models that can run on microcontroller boards. What's interesting is how AI tools from different companies, namely NVIDIA (TAO toolkit) and OpenAI (GPT-4o LLM), are leveraged in Edge Impulse to quickly create a low-end ML model simply by filming a video. Jan Jongboom, CTO and co-founder at Edge Impulse, demonstrated the solution by shooting a video of his kids' toys and loading it into Edge Impulse to create an "is there a toy?" model that runs on the Arduino Nicla Vision at about 10 FPS. Another way to look at it […]

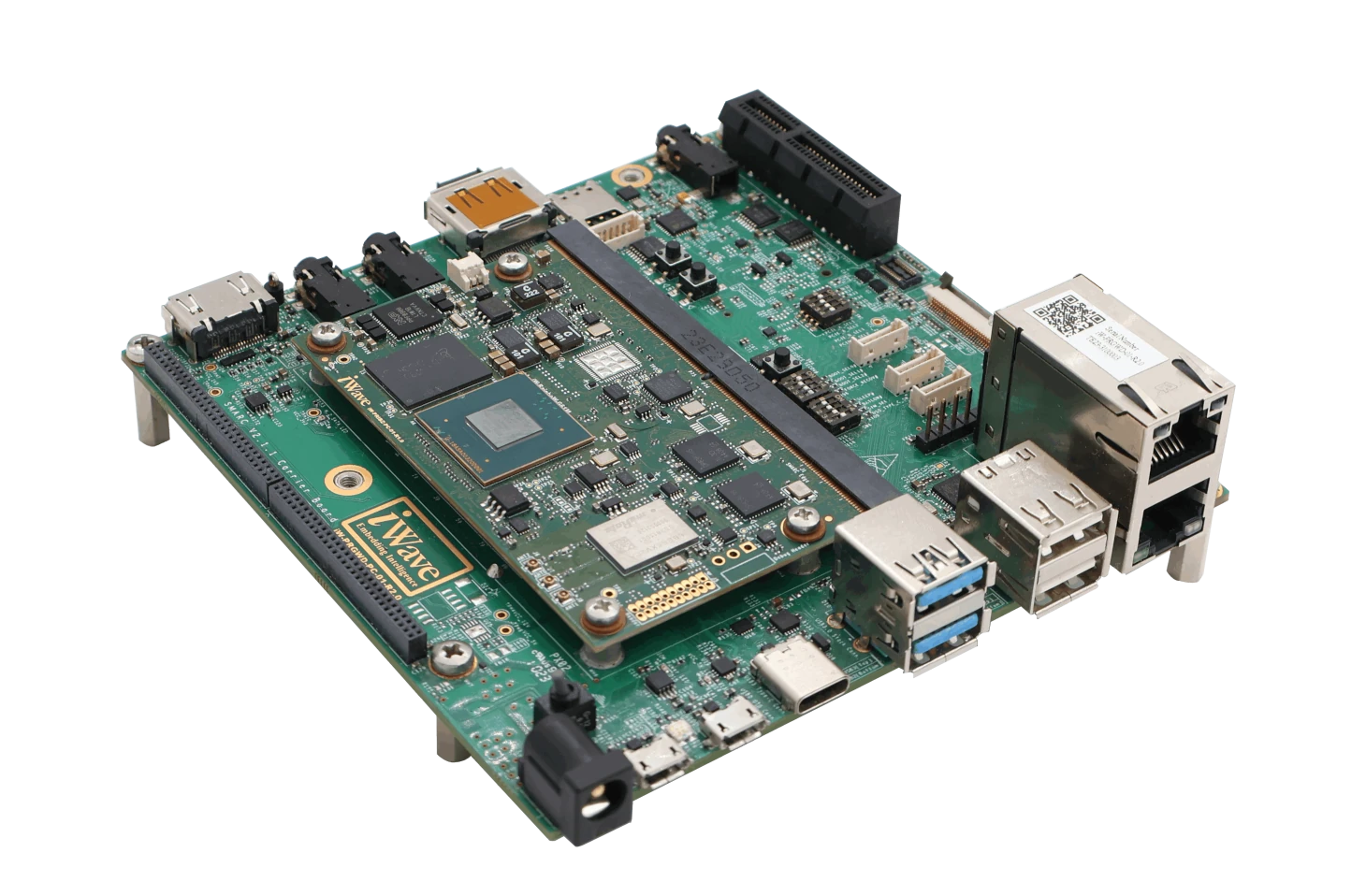

NXP i.MX 95 SMARC 2.1 system-on-modules – ADLINK LEC-IMX95 and iWave iW-RainboW-G61M

Several companies have unveiled SMARC 2.1-compliant system-on-modules powered by the NXP i.MX 95 AI SoC, and today we'll look at the ADLINK LEC-IMX95 and iWave Systems iW-RainboW-G61M and their related development/evaluation kits. The NXP i.MX 95 SoC was first unveiled at CES 2023 with up to six Cortex-A55 application cores, a Cortex-M33 real-time core, and a low-power Cortex-M7 core, as well as an eIQ Neutron NPU for machine learning applications. Since then, a few companies have unveiled evaluation kits and system-on-modules such as the Toradex Titan evaluation kit or the Variscite DART-MX95 SoM, but none of those were compliant with a SoM standard. Now at least two SMARC 2.1 system-on-modules equipped with the NXP i.MX 95 processor have been introduced. ADLINK LEC-IMX95 Specifications: SoC – NXP i.MX 95 CPU Up to 6x Arm Cortex-A55 application cores clocked at 2.0 GHz with 32K I-cache and D-cache, 64KB L2 cache, and 512KB […]

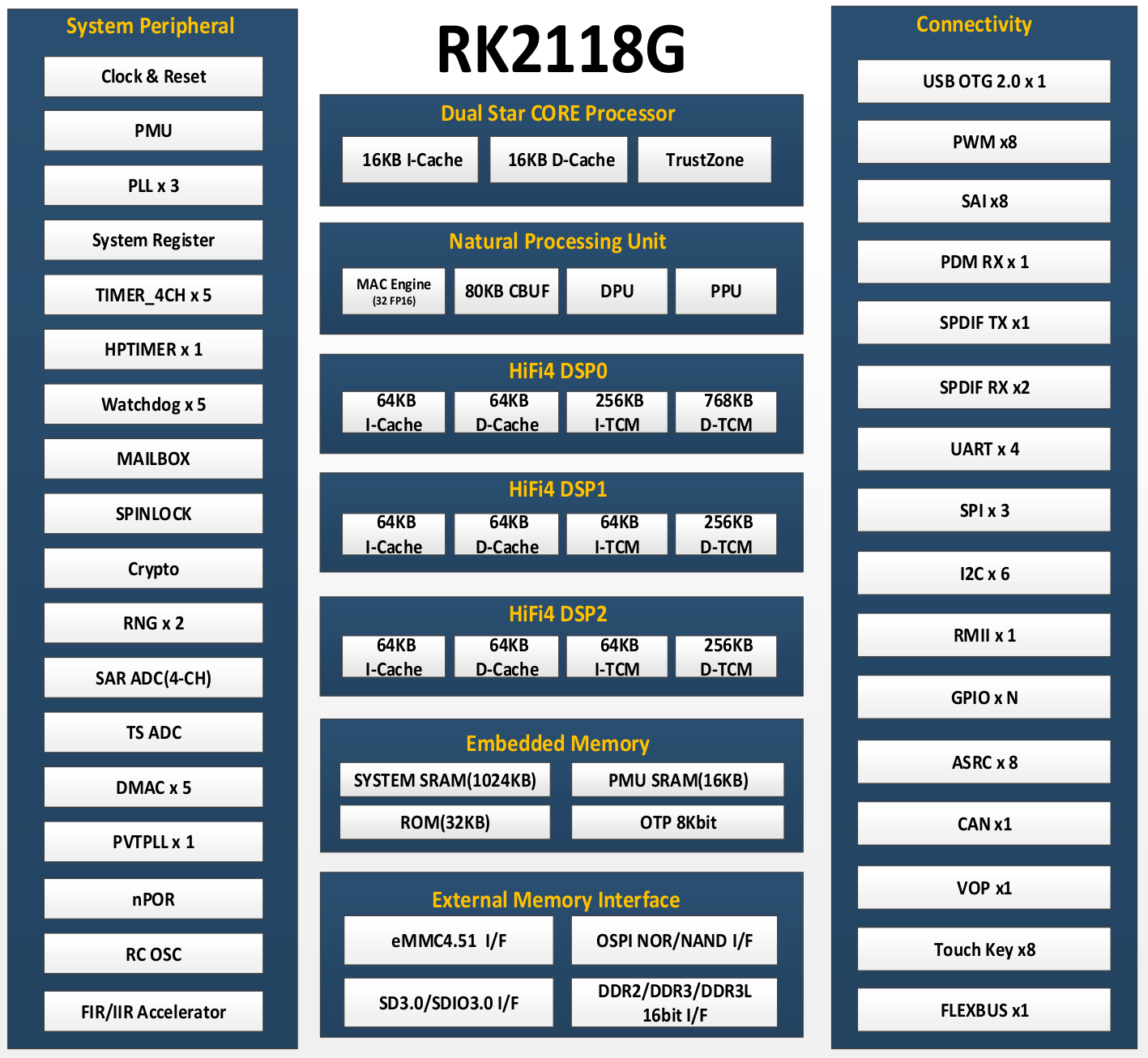

Rockchip RK2118G/RK2118M dual-core Star-SE Armv8-M microcontrollers target smart audio applications

Rockchip RK2118G and RK2118M are smart audio microcontrollers based on a dual-core Star-SE Armv8-M processor, with an NPU for smart AI audio processing, three DSPs, 1024KB SRAM, optional DDR memory in package, and a range of peripherals. I first noticed the RK2118M in slides from the Rockchip Developer Conference 2024 last March, but I did not have enough information for an article at the time. Things have now changed since I've just received a bunch of datasheets, including the one for the RK2118G and RK2118M microcontrollers, which look identical except for the DDR interface and optional built-in 64MB RAM on the RK2118G. The datasheets have only one reference to Arm, with the string "Arm-V8M" and nothing else, and Cortex is not mentioned at all. But the slide above reveals that the STAR-SE core appears to be an Arm Cortex-M33 core. We also learn the top frequencies for the "STAR-M33"/"STAR-SE" core (300MHz) and the […]

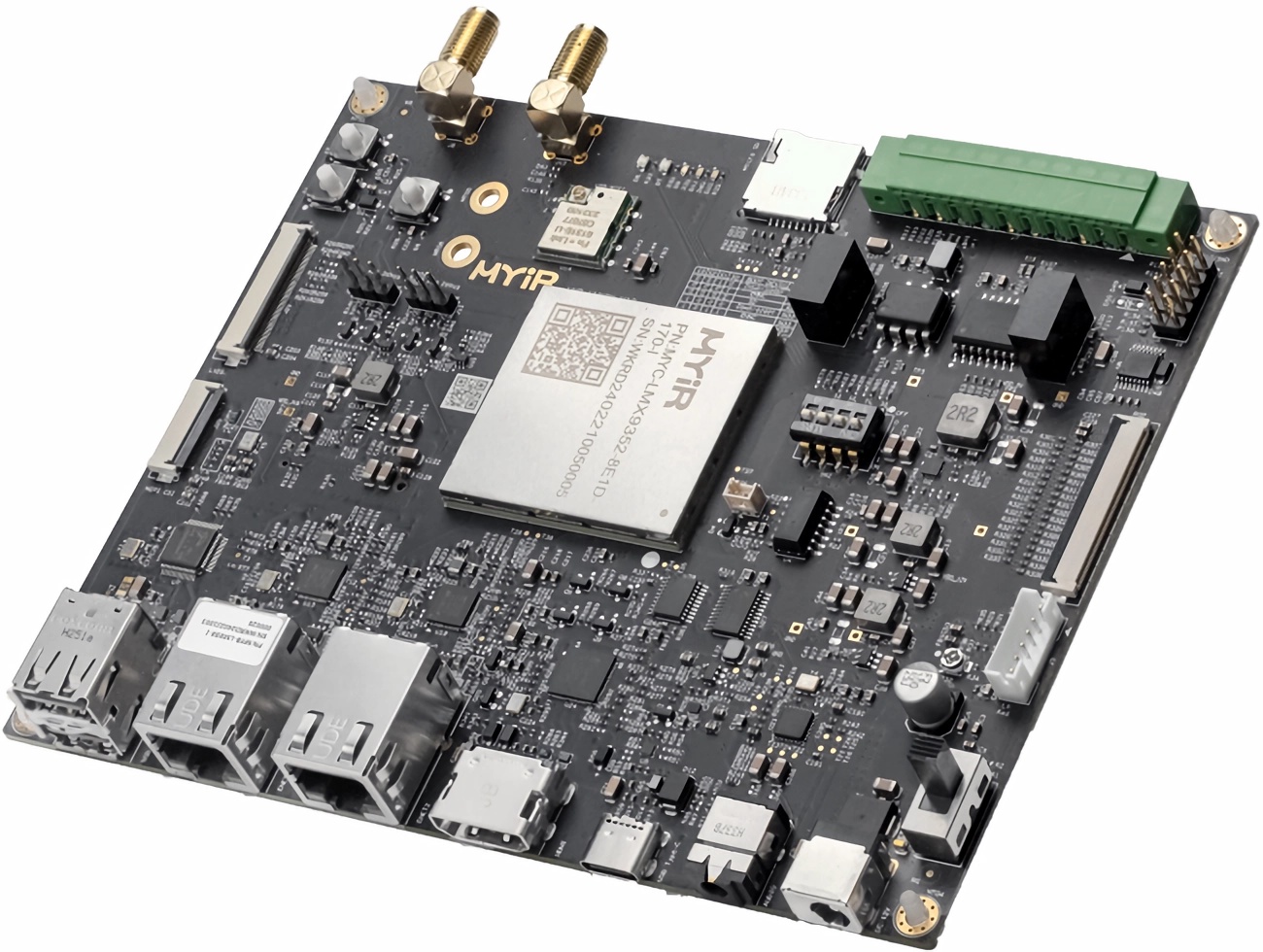

New NXP i.MX 93-based system-on-modules launched by MYiR, Variscite, and Compulab

We have covered announcements about early NXP i.MX 93-based system-on-modules such as the ADLINK OSM-IMX93 and Ka-Ro Electronics' QS93, as well as products integrating the higher-end NXP i.MX 95 processor such as the Toradex Titan Evaluation kit. Three additional NXP i.MX 93 SoMs from MYiR, Variscite, and Compulab are now available. Targeted at industrial, IoT, and automotive applications, the NXP i.MX 93 features a 64-bit dual-core Arm Cortex-A55 application processor running at up to 1.7GHz and a Cortex-M33 co-processor running at up to 250MHz. It integrates an Arm Ethos-U65 microNPU providing up to 0.5 TOPS of computing power, and supports EdgeLock secure enclave, NXP's hardware-based security subsystem. The heterogeneous multicore architecture allows the device to run Linux on the main cores and a real-time operating system on the Cortex-M33 core. The processor is designed for cost-effective and energy-efficient machine learning applications. It supports LVDS, MIPI-DSI, and parallel RGB display interfaces […]