Arm has just unveiled the Armv8.1-M architecture, which adds Arm Helium technology, the M-Profile Vector Extension (MVE) for Arm Cortex-M cores, to improve the compute performance of Cortex-M based microcontrollers. Helium will deliver up to 15 times more machine learning (ML) performance and up to a 5 times uplift in signal processing, allowing local decision-making on low-power embedded devices. Helium instructions will enable new applications for Arm Cortex-M microcontrollers in audio devices, sensor hubs, keyword spotting, voice command control, power electronics, communications and still image processing. Helium and Neon (the Advanced SIMD technology for Arm Cortex-A processors) are similar, but Helium has been designed for efficient signal processing performance in small processors. One difference illustrated below is that while Neon loads 128-bit wide instructions (e.g. VLDR, VMLA), Helium will split each 128‑bit wide instruction into four equally sized chunks, called “beats” (labelled A to D), due to differences between Cortex-M and […]
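To make the "beats" idea more concrete, here is a minimal Python sketch that splits a 128-bit vector into four equally sized 32-bit chunks labelled A to D. It only models the data split described above, not how the hardware schedules or overlaps beats. The function name `split_into_beats` and the assumption that beat A covers the lowest-addressed bytes are hypothetical choices made for illustration.

```python
BEAT_LABELS = "ABCD"                             # four beats, labelled A to D
VECTOR_BYTES = 16                                # one 128-bit vector
BEAT_BYTES = VECTOR_BYTES // len(BEAT_LABELS)    # 4 bytes (32 bits) per beat


def split_into_beats(vector: bytes) -> dict:
    """Split a 128-bit vector into four equally sized chunks.

    Assumption (illustration only): beat A holds the first four bytes,
    beat B the next four, and so on.
    """
    if len(vector) != VECTOR_BYTES:
        raise ValueError("expected exactly 16 bytes (128 bits)")
    return {
        label: vector[i * BEAT_BYTES:(i + 1) * BEAT_BYTES]
        for i, label in enumerate(BEAT_LABELS)
    }


if __name__ == "__main__":
    # Show which bytes of a sample vector land in each beat.
    for label, chunk in split_into_beats(bytes(range(16))).items():
        print(label, chunk.hex())
```
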

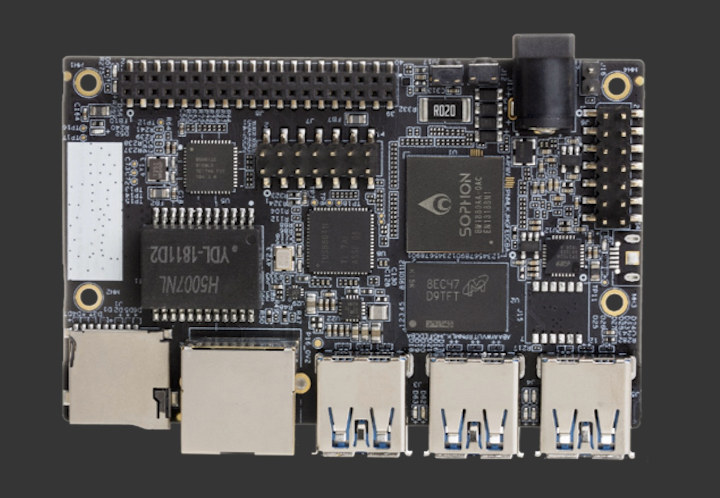

96Boards AI Sophon Edge Developer Board Features Bitmain BM1880 ASIC SoC

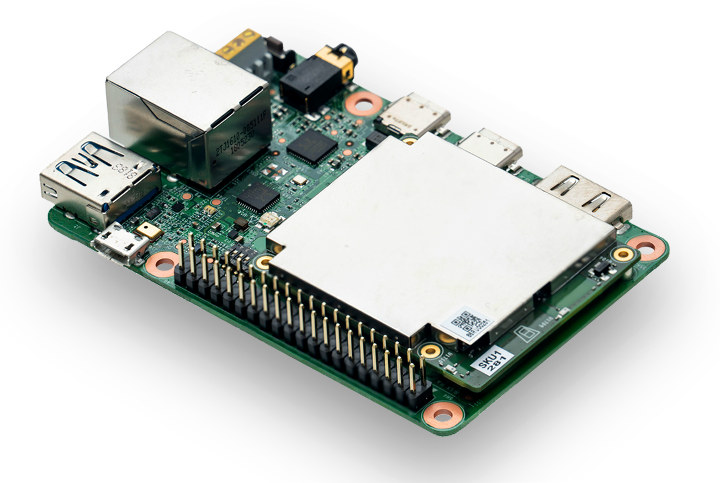

Bitmain, a company specializing in cryptocurrency, blockchain, and artificial intelligence (AI) applications, has just joined Linaro, and announced the first 96Boards AI platform featuring an ASIC: the Sophon BM1880 Edge Development Board, often just referred to as “Sophon Edge”. The board conforms to the 96Boards CE specification, and includes two Arm Cortex-A53 cores, a Bitmain Sophon Edge TPU delivering 1 TOPS performance on 8-bit integer operations, USB 3.0 and Gigabit Ethernet. Sophon Edge specifications: SoC ASIC – Sophon BM1880 with a dual core Cortex-A53 processor @ 1.5 GHz, a single core RISC-V processor @ 1 GHz, 2MB on-chip RAM, and a TPU (Tensor Processing Unit) that can provide 1 TOPS for INT8, and up to 2 TOPS by enabling Winograd convolution acceleration; System Memory – 1GB LPDDR4 @ 3200 MHz; Storage – 8GB eMMC flash + micro SD card slot; Video Processing – H.264 decoder, MJPEG encoder/decoder, 1x 1080p @ 60fps or 2x 1080p @ 30fps H.264 decoder, […]

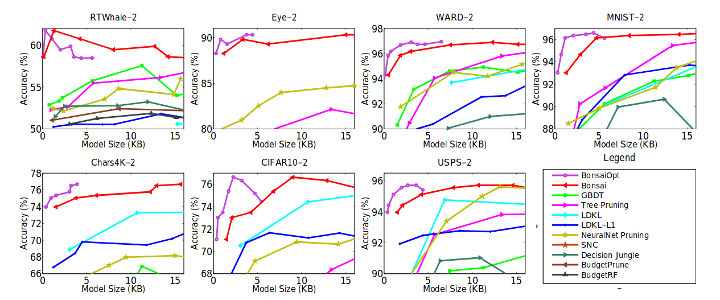

Bonsai Algorithm Enables Machine Learning on Arduino with a 2KB RAM Footprint

Machine learning used to be executed in the cloud, then the inference part moved to the edge, and we’ve even seen microcontrollers able to do image recognition, such as the GAP8 RISC-V microcontroller. But I’ve recently come across a white paper entitled “Resource-efficient Machine Learning in 2 KB RAM for the Internet of Things” that shows how it’s possible to perform such tasks with very few resources. Here’s the abstract: This paper develops a novel tree-based algorithm, called Bonsai, for efficient prediction on IoT devices – such as those based on the Arduino Uno board having an 8 bit ATmega328P microcontroller operating at 16 MHz with no native floating point support, 2 KB RAM and 32 KB read-only flash. Bonsai maintains prediction accuracy while minimizing model size and prediction costs by: (a) developing a tree model which learns a single, shallow, sparse tree with powerful nodes; (b) sparsely projecting all data into […]
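The two ideas visible in the abstract excerpt, a projection of the input followed by a single shallow tree whose nodes all contribute to the prediction, can be sketched roughly in Python as below. This is not the authors' code: the function names, the dictionary layout of a node, the heap-ordered tree, and the `W·z * tanh(sigma * V·z)` form of the per-node predictor are assumptions made for illustration, and the sketch uses floating point even though the target microcontroller has no native floating point support.

```python
import math


def matvec(matrix, vector):
    """Plain-Python matrix-vector product (rows of `matrix` times `vector`)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def bonsai_like_predict(x, Z, nodes, sigma=1.0):
    """Score `x` with a shallow tree stored in heap order.

    Assumptions (illustration only):
      - Z projects x into a small space; in a real model Z would be sparse.
      - nodes[k] has matrices "W" and "V", and internal nodes also have a
        branching vector "theta"; the children of node k are 2k+1 and 2k+2.
      - every node on the root-to-leaf path adds W.z * tanh(sigma * V.z)
        to the class scores.
    """
    z = matvec(Z, x)                          # low-dimensional projection
    scores = [0.0] * len(nodes[0]["W"])
    k = 0
    while k < len(nodes):
        node = nodes[k]
        w = matvec(node["W"], z)
        v = matvec(node["V"], z)
        for c in range(len(scores)):
            scores[c] += w[c] * math.tanh(sigma * v[c])
        theta = node.get("theta")
        if theta is None:                     # leaf node: stop descending
            break
        go_left = sum(t * zi for t, zi in zip(theta, z)) > 0
        k = 2 * k + 1 if go_left else 2 * k + 2
    return scores


if __name__ == "__main__":
    Z = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # 3 features -> 2 dimensions
    nodes = [
        {"W": [[1.0, 0.0]], "V": [[0.0, 1.0]], "theta": [1.0, 0.0]},
        {"W": [[0.0, 1.0]], "V": [[1.0, 0.0]]},
        {"W": [[1.0, 1.0]], "V": [[1.0, 1.0]]},
    ]
    print(bonsai_like_predict([1.0, -2.0, 5.0], Z, nodes))
```

With a depth-1 tree as in the usage example, only three small node models and one projection matrix need to be stored, which hints at why such a model can fit in a few kilobytes.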

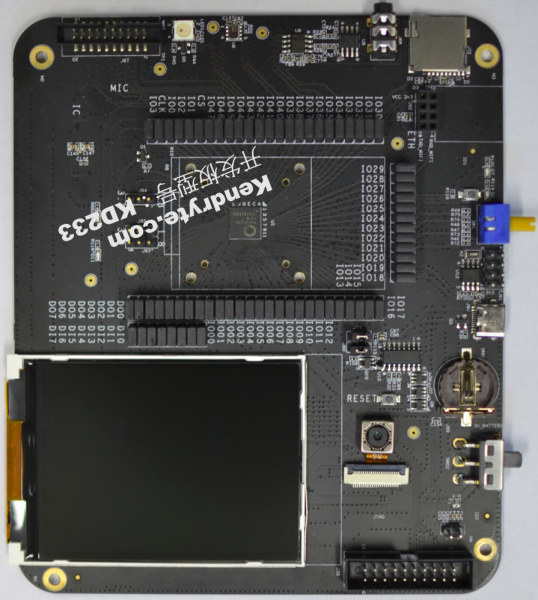

$50 Kendryte KD233 Board Features K210 Dual Core RISC-V SoC

RISC-V is talked about a lot, and we’re starting to see a few development boards coming to market, or at least being announced, with some based on SiFive processors such as HiFive Unleashed or Arduino Cinque, as well as others like GAPUINO GAP8 for low power A.I. applications. The Arduino board is not for sale yet, and HiFive Unleashed and GAPUINO GAP8 are fairly expensive at $999 and $229 respectively. Kendryte KD233 is another RISC-V development board, based on the Kendryte K210 dual core 64-bit RISC-V processor designed for machine vision and “machine hearing”. The board goes for $49.99 on AnalogLamb. Kendryte KD233 board specifications: SoC – Kendryte K210 dual core 64-bit RISC-V processor, KPU Convolutional Neural Network (CNN) hardware accelerator, APU audio hardware accelerator, 6 MiB of on-chip general-purpose SRAM memory and 2 MiB of on-chip AI SRAM memory, AXI ROM to load user program from SPI flash; Storage – 128 Mbit […]

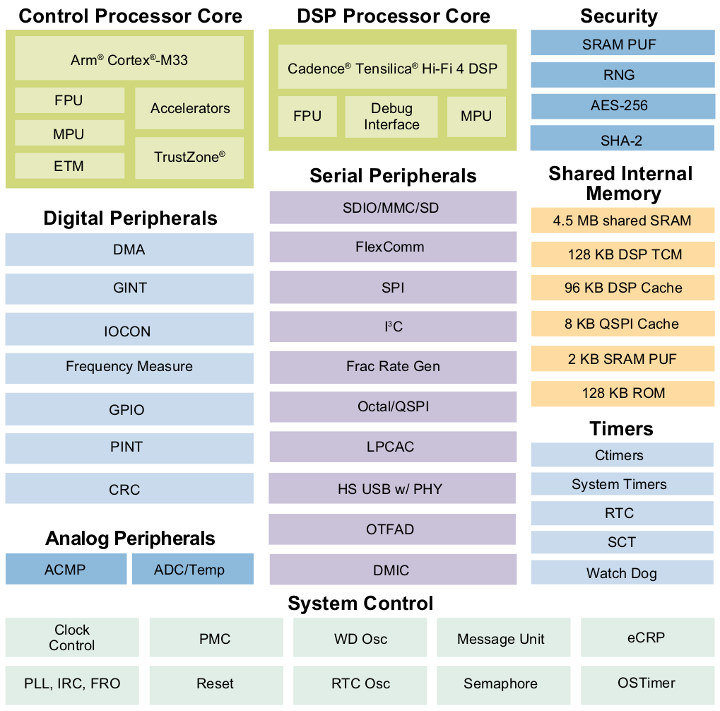

NXP Unveils i.MX RT600 Series Arm Cortex-M33 + Audio DSP Crossover Processor

A little over a year ago, NXP introduced their first crossover processor that blurs the line between the real-time capabilities of microcontrollers and the higher performance of application processors, the NXP i.MX RT1050 processor equipped with a Cortex-M7 core clocked at up to 700 MHz. The company has now announced another model with lower power consumption. The NXP i.MX RT600 series comes with a Cortex-M33 core clocked at up to 300 MHz, a Cadence Tensilica HiFi 4 audio DSP, and up to 4.5MB shared SRAM. Main features of NXP i.MX RT685 crossover processor: CPU Core – Arm Cortex-M33 up to 300 MHz; DSP – Tensilica HiFi 4 up to 600 MHz; Memory: up to 4.5 MB on-chip RAM, 128KB DSP TCM, 128 KB DSP Cache; Storage: 96KB ROM on-chip, 2x SDIO with 1x supporting eMMC5.0 w/ HS400, 1x Octal/Quad SPI up to 100MB/s; Peripherals: 2x DMA Engines with 35 channels each, 1x USB […]

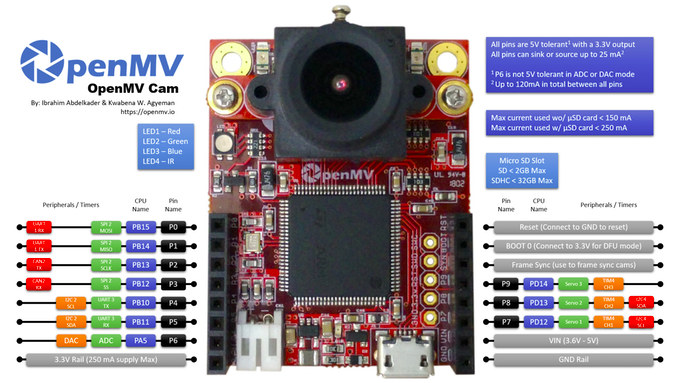

OpenMV Cam H7 MicroPython Machine Vision Camera Launched on Kickstarter

The OpenMV team has launched an upgrade to their popular OpenMV Cam M7 machine vision camera, with OpenMV Cam H7 replacing the STMicro STM32F7 microcontroller with a more powerful STM32H7 MCU clocked at up to 400 MHz. Besides having twice the processing power, the new camera board also features removable camera modules for thermal vision and global shutter support. OpenMV Cam H7 camera board specifications: MCU – STMicro STM32H743VI Arm Cortex-M7 microcontroller @ up to 400 MHz with 1MB RAM, 2MB flash; External Storage – micro SD card socket supporting up to 100 Mbps read/write to record videos and store machine vision assets; Camera modules: Omnivision OV7725 image sensor (default) capable of taking 640×480 8-bit Grayscale / 16-bit RGB565 images at 60 FPS when the resolution is above 320×240 and 120 FPS when it is below, with a 2.8mm lens on a standard M12 lens mount; optional Global Shutter camera module to capture […]

Google Unveils Edge TPU Low Power Machine Learning Chip, AIY Edge TPU Development Board and Accelerator

Google introduced artificial intelligence and machine learning concepts to hundreds of thousands of people with their AIY Projects kits, such as the AIY Voice Kit with voice recognition and the AIY Vision Kit for computer vision applications. The company has now gone further by unveiling Edge TPU, its own purpose-built ASIC chip designed to run TensorFlow Lite ML models at the edge, as well as a corresponding AIY Edge TPU development board, and an AIY Edge TPU accelerator USB stick to add to any USB compatible hardware. Google Edge TPU (Tensor Processing Unit) & Cloud IoT Edge Software: Edge TPU is a tiny chip for machine learning (ML) optimized for performance-per-watt and performance-per-dollar. It can either accelerate ML inferencing on device, or can pair with Google Cloud to create a full cloud-to-edge ML stack. In either case, local processing reduces latency, removes the need for a persistent network connection, increases privacy, and […]

FOSSASIA Summit 2018 Schedule – March 22-25

FOSDEM, the “Free & Open Source Software Developers’ European Meeting”, takes place on the first weekend of February every year in Brussels, Belgium. It turns out there’s a similar event in Asia called FOSSASIA Summit that’s about to take place in Singapore on March 22-25. There are some differences however: while FOSDEM is entirely free to attend, FOSSASIA requires paying an entry fee to attend talks, although there are free tickets to access the exhibition hall and career fair. There are also fewer sessions than at FOSDEM, but still twelve different tracks: Artificial Intelligence; Blockchain; Cloud, Container, DevOps; Cybersecurity; Database; Kernel & Platform; Open Data, Internet Society, Community; Open Design, IoT, Hardware, Imaging; Open Event Solutions; Open Source in Business; Science Tech; Web and Mobile. Since the event is spread out over four days, it should be easier to attend the specific sessions you are interested in. I’ve […]