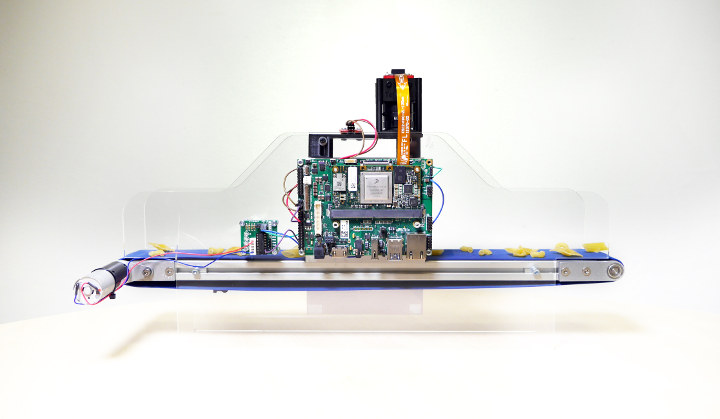

Toradex, Amazon Web Services (AWS), and NXP Semiconductors collaborated to create the AI Embedded Vision Starter Kit, aiming to ease the development of cloud-connected computer vision and machine learning applications in industries such as industrial automation, agriculture, medical equipment, and many more.

The AI Embedded Vision Starter Kit includes the following items:

- Toradex Apalis iMX8 System on Module (SoM) powered by NXP i.MX 8QuadMax applications processor
- Toradex Ixora Carrier Board
- Allied Vision Alvium 1500 industrial-grade MIPI CSI-2 camera
- All required cables and a 12VDC (30W) power supply
- Full software stack, including source code for running the device as well as for cloud deployment
- Extensive documentation
- 50 USD AWS credit

The kit will help developers meet the must-have requirements of smart connected devices including secure connectivity, remote monitoring, OTA updates, maximum uptime & reliability, compact form factor, cost-optimized hardware, computer vision and machine learning algorithms optimized for low-power hardware, and more. […]

Arm Introduces Ethos NPU Family, Mali-G57 GPU, and Mali-D37 Display Processor

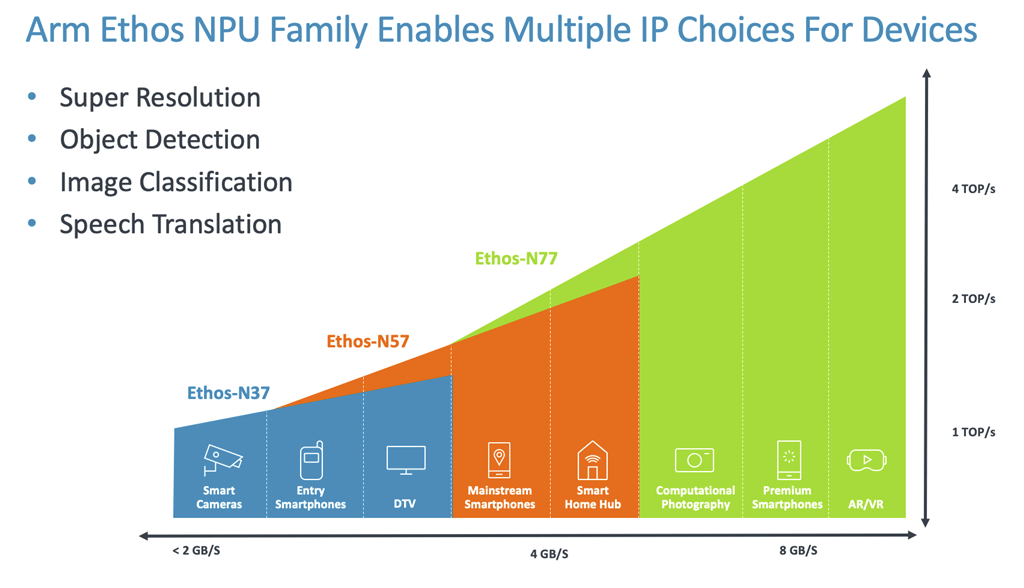

Arm has just announced four new IP solutions, the most interesting being the Ethos NPU (Neural Processing Unit) family with both Ethos-N57 and Ethos-N37 NPUs for mainstream devices. The company also announced the new Arm Mali-G57, the first mainstream Valhall GPU, as well as the Arm Mali-D37 DPU (Display Processing Unit) for full HD and 2K resolution.

Arm Ethos NPU Family

There are three members of the new Ethos family, and if you’ve never heard about Ethos-N77 previously, that’s because it was known as Arm ML processor. The three NPUs cater to different AI workloads and price points from 1 TOP/s to 4 TOP/s:

- Ethos-N37
  - Optimized for 1 TOP/s ML performance range
  - 512 8×8 MAC/cycle
  - 512KB internal memory
  - Small footprint (<1 mm²)
  - For smart cameras, entry smartphones, DTV
- Ethos-N57
  - Optimized for 2 TOP/s ML performance range
  - 1024 8×8 MAC/cycle
  - 512KB internal memory
  - For mainstream smartphones, smart home hubs
- Ethos-N77
  - Up to 4 […]
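The MAC/cycle figures above can be related to the TOP/s ratings with a quick calculation. The sketch below is only illustrative: it counts each multiply-accumulate as two operations and assumes a 1 GHz NPU clock, which the excerpt does not state. The function name `peak_tops` and the constant `ASSUMED_CLOCK_HZ` are hypothetical.

```python
def peak_tops(macs_per_cycle: int, clock_hz: float) -> float:
    """Peak tera-operations per second, counting one MAC as 2 ops (multiply + add)."""
    return macs_per_cycle * 2 * clock_hz / 1e12


# Assumption: 1 GHz clock. The actual frequency is not given in the text.
ASSUMED_CLOCK_HZ = 1.0e9

# MAC/cycle values taken from the list above.
for name, macs in (("Ethos-N37", 512), ("Ethos-N57", 1024)):
    print(f"{name}: {peak_tops(macs, ASSUMED_CLOCK_HZ):.3f} TOP/s")
```

Under that clock assumption, 512 and 1024 MAC/cycle land close to the 1 TOP/s and 2 TOP/s ranges quoted for the two NPUs.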

Intrinsyc Unveils Open-Q 845 µSOM and Snapdragon 845 Mini-ITX Development Kit

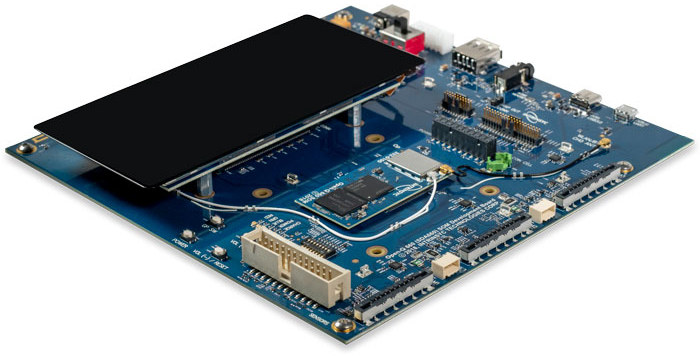

Intrinsyc introduced the first Qualcomm Snapdragon 845 hardware development platform last year with its Open-Q 845 HDK designed for OEMs and device makers. The company has now announced a solution for embedded systems and Internet of Things (IoT) products with the Open-Q 845 micro system-on-module (µSOM) powered by the Snapdragon 845 octa-core processor, as well as a complete development kit featuring the module and a Mini-ITX baseboard.

Open-Q 845 µSOM Specifications:

- SoC – Qualcomm Snapdragon SDA845 octa-core processor with 4x Kryo 385 Gold cores @ 2.649GHz + 4x Kryo 385 Silver low-power cores @ 1.766GHz, Hexagon 685 DSP, Adreno 630 GPU with OpenGL ES 3.2 + AEP (Android Extension Pack), DX next, Vulkan 2, OpenCL 2.0 full profile
- System Memory – 4GB or 6GB dual-channel high-speed LPDDR4X SDRAM at 1866MHz
- Storage – 32GB or 64GB UFS Flash Storage
- Connectivity
  - Wi-Fi 5 802.11a/b/g/n/ac 2.4/5GHz 2×2 MU-MIMO (WCN3990) with 5 GHz […]

Embedded Linux Conference (ELC) Europe 2019 Schedule – October 28-30

I may have just written about the Linaro Connect San Diego 2019 schedule, but there’s another interesting event that will also take place this fall: the Embedded Linux Conference Europe on October 28-30, 2019 in Lyon, France. The full schedule was also published by the Linux Foundation a few days ago, so I’ll create a virtual schedule to see what interesting topics will be addressed during the 3-day event.

Monday, October 28

- 11:30 – 12:05 – Debian and Yocto Project-Based Long-Term Maintenance Approaches for Embedded Products by Kazuhiro Hayashi, Toshiba & Jan Kiszka, Siemens AG

  In industrial products, 10+ years of maintenance is required, including security fixes, reproducible builds, and continuous system updates. Selecting appropriate base systems and tools is necessary for efficient product development. Debian has been applied to industrial products because of its stability, long-term support, and powerful tools for package development. The CIP Project, which provides scalable and […]

Arm TechCon 2019 Schedule – Machine Learning, Security, Containers, and More

Arm TechCon will take place on October 8-10, 2019 at the San Jose Convention Center to showcase new solutions from Arm and third parties, and the company has now published the agenda/schedule for the event. There are many sessions, and even if you’re not going to attend, it’s always useful to check out what will be discussed to learn more about what’s going on currently and what will be the focus in the near future for Arm development. Several sessions normally occur at the same time, so as usual I’ll make my own virtual schedule with the ones I find most relevant.

Tuesday, October 8

- 09:00 – 09:50 – Open Source ML is rapidly advancing. How can you benefit? by Markus Levy, Director of AI and Machine Learning Technologies, NXP

  Over the last two years and still continuing, machine learning applications have benefited tremendously from the growing number of open source frameworks, tools, […]

FOSSASIA 2019 Schedule – March 14-17

As its name implies, FOSSASIA is a Free and Open Source Software event taking place every year in Asia, more specifically in Singapore. I first discovered it last year, and published a virtual FOSSASIA 2018 schedule to give an idea about the subjects discussed at the event. It turns out FOSSASIA 2019 is coming really soon, as in tomorrow, so I’m a bit late, but I still had a look at the schedule and made my own for the 4-day event.

Thursday – March 14, 2019

- 10:05 – 10:25 – For Your Eyes Only: Betrusted & the Case for Trusted I/O by Bunnie Huang, CTO Chibitronics

  Security vulnerabilities are almost a fact of life. This is why system vendors are increasingly relying on physically separate chips to handle sensitive data. Unfortunately, private keys are not the same as your private matters. Exploits on your local device still have […]

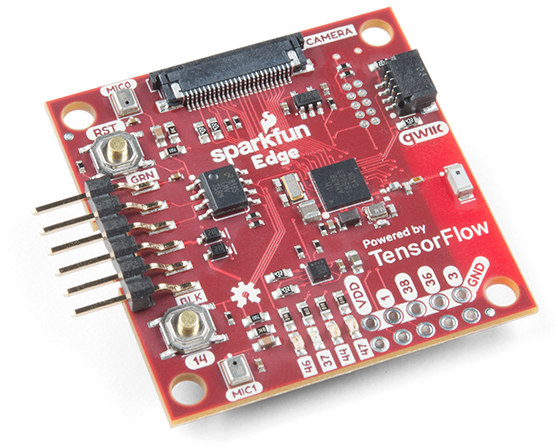

$15 SparkFun Edge Board Supports TensorFlow Lite for Microcontrollers

The 2019 TensorFlow Dev Summit is now taking place, and we’ve already covered the launch of Google’s Coral Edge TPU dev board and USB accelerator supporting TensorFlow Lite, but there has been another interesting new development during the event: TensorFlow Lite now also supports microcontrollers (MCUs), not just the more powerful application processors. You can easily get started with TensorFlow Lite for MCUs with the SparkFun Edge development board powered by the Ambiq Micro Apollo3 Blue Bluetooth MCU, whose ultra-efficient Arm Cortex-M4F core can run TensorFlow Lite using only 6uA/MHz.

SparkFun Edge specifications:

- MCU – Ambiq Micro Apollo3 Blue 32-bit Arm Cortex-M4F processor at 48MHz / 96MHz (TurboSPOT) with DMA, 1MB flash, 384 KB SRAM, 6uA/MHz power usage, Bluetooth support
- Connectivity – Bluetooth LE 5 (on-chip) + Bluetooth antenna
- Camera – OV7670 camera connector
- Audio – 2x MEMS microphones with operational amplifier
- Sensor – STMicro LIS2DH12 3-axis accelerometer
- Expansion – Qwiic connector, […]
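To put the 6uA/MHz figure in perspective, here is a rough back-of-the-envelope sketch. It assumes the core's active current scales linearly with clock frequency at both 48MHz and 96MHz, which the text does not confirm (TurboSPOT mode may well behave differently), and it ignores radio, sensors, and everything else on the board. The function name `active_current_ua` is hypothetical.

```python
def active_current_ua(ua_per_mhz: float, clock_mhz: float) -> float:
    """Estimated active core current in microamps, assuming linear scaling with clock."""
    return ua_per_mhz * clock_mhz


UA_PER_MHZ = 6.0  # from the specifications above

# Assumption: the same uA/MHz figure applies at both clock speeds.
for clock_mhz in (48.0, 96.0):
    current = active_current_ua(UA_PER_MHZ, clock_mhz)
    print(f"{clock_mhz:.0f} MHz: about {current:.0f} uA")
```

Under those assumptions, the core itself would draw a few hundred microamps while running.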

Google to Launch Edge TPU Powered Coral Development Board and USB Accelerator

Several low-power neural network accelerators have been launched in recent years in order to accelerate A.I. workloads such as object recognition and speech processing. Recent announcements include USB devices such as Intel Neural Compute Stick 2 or Orange Pi AI Stick2801. I completely forgot about it, but Google also announced their own Edge TPU ML accelerator, development kit, and USB accelerator last summer. The good news is that the Edge TPU powered Coral USB accelerator and Coral dev board are going to launch in the next few days for $74.99 and $149.99 respectively.

Coral Development Board

The Coral dev board is composed of a base board and SoM with the following specifications:

- Edge TPU Module
  - SoC – NXP i.MX 8M quad core Arm Cortex-A53 processor with Arm Cortex-M4F real-time core, GC7000 Lite 3D GPU
  - ML accelerator – Google Edge TPU coprocessor delivering up to 4 TOPS
  - System Memory – […]