Google Coral SBC was the first development board with Google Edge TPU. The AI accelerator was combined with an NXP i.MX 8M quad-core Arm Cortex-A53 processor and 1GB RAM to provide an all-in-one AI edge computing platform. It launched for $175 and still retails for $160, which may not be affordable for students and hobbyists. Google announced a new model called Coral Dev Board Mini last January, and the good news is that the board is now available for pre-order for just under $100 on Seeed Studio, with shipping scheduled to start by the end of the month. Coral Dev Board Mini specifications haven’t changed much since the original announcement, but we know a few more details:

- SoC – MediaTek MT8167S quad-core Arm Cortex-A35 processor @ 1.3 GHz with Imagination PowerVR GE8300 GPU
- AI/ML accelerator – Google Edge TPU coprocessor with up to 4 TOPS as part of Coral […]

Microchip SAMD21 Machine Learning Evaluation Kits Work with Cartesiam, Edge Impulse and Motion Gestures Solutions

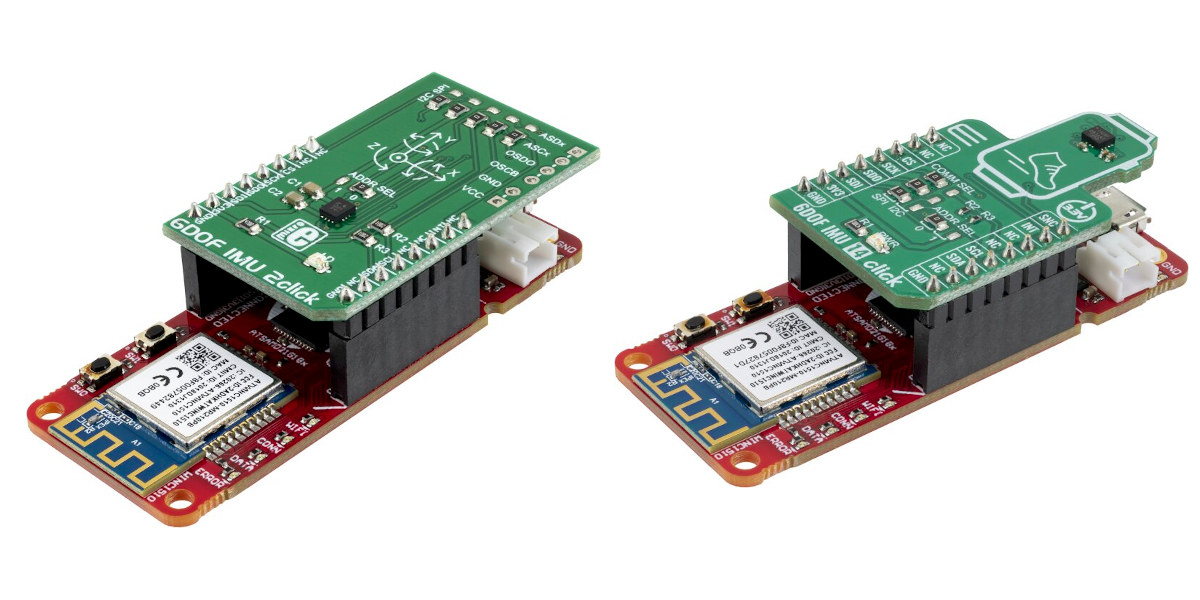

While it all started in the cloud, artificial intelligence is now moving to the very edge in ultra-low-power nodes, and Microchip has launched two SAMD21 Arm Cortex-M0+ machine learning evaluation kits that now work with AI/ML solutions from Cartesiam, Edge Impulse, and Motion Gestures. Both machine learning evaluation kits come with a SAMD21G18 Arm Cortex-M0+ 32-bit MCU, an on-board debugger (nEDBG), an ATECC608A CryptoAuthentication secure element, an ATWINC1510 Wi-Fi network controller, as well as a Microchip MCP9808 high-accuracy temperature sensor and a light sensor. The EV45Y33A development kit is equipped with an add-on board featuring Bosch’s BMI160 low-power Inertial Measurement Unit (IMU), while EV18H79A features an add-on board with a TDK InvenSense ICM-42688-P 6-axis MEMS sensor. The photo above makes it clear that both SAMD21 machine learning evaluation kits rely on the same baseboard with a mikroBUS socket connected to either the 6DOF IMU 2 click or 6DOF IMU 14 click add-on board from MikroElektronika. Both […]

CrowPi2 Raspberry Pi 4 Education Laptop Review

I started my review of CrowPi2 Raspberry Pi 4 Learning Kit a while ago, and at the time I showed the content of the kit and its first boot. I’ve now spent more time with this very special Raspberry Pi 4 laptop and will focus this review on the education part, namely CrowPi2 software, but will also look at cooling under stress with and without a fan, and try to install another Raspberry Pi compatible board inside the laptop shell.

CrowPi2 Education Software

It’s quite important to read the user manual before getting started as there are a few non-intuitive steps you may have to take. First, I assumed the wireless keyboard would just connect after pressing the power button, but it did not. The user manual explains the RF dongle is inside the mouse, and once you connect it you’ll be able to use the keyboard that has some […]

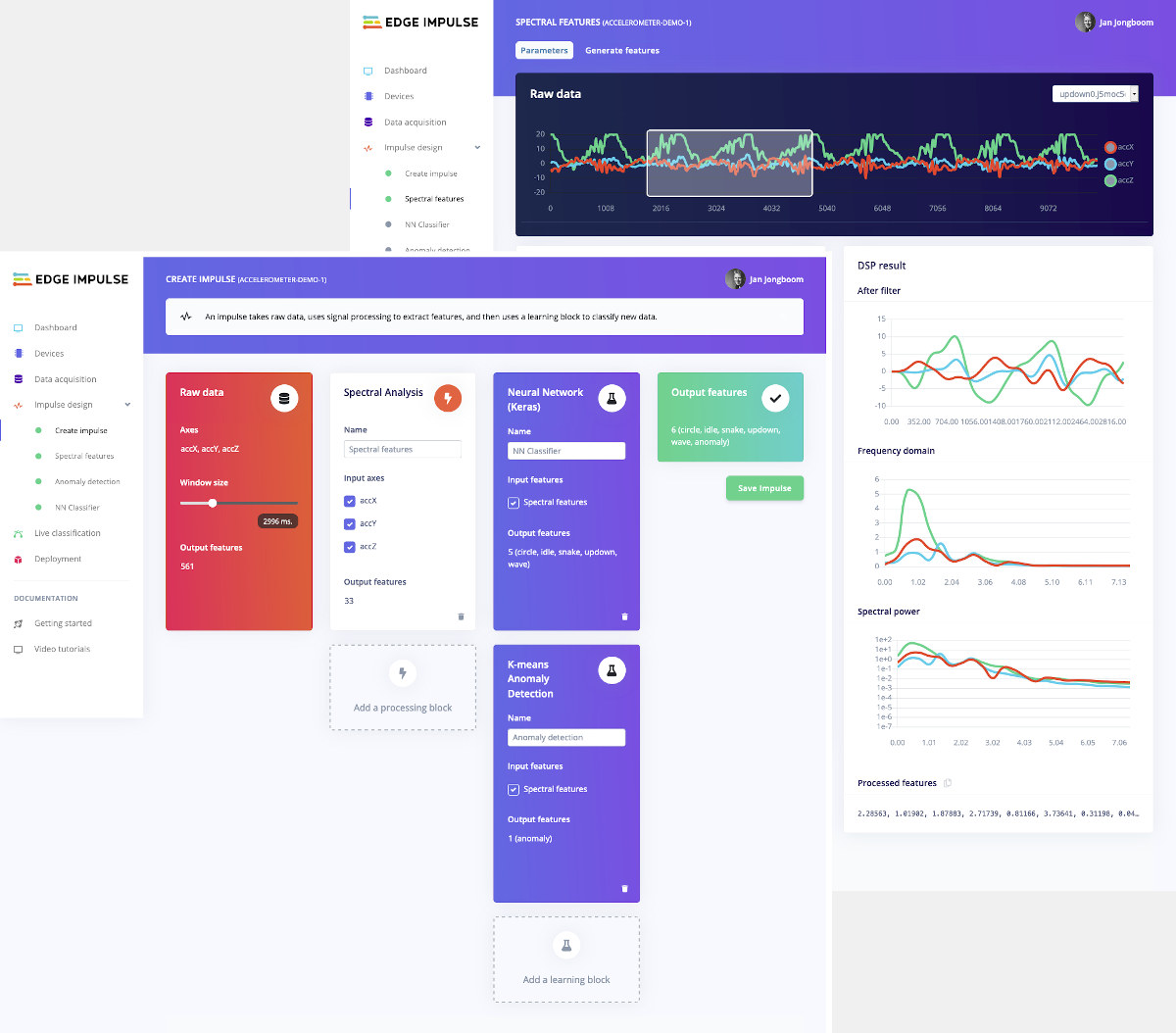

Edge Impulse Enables Machine Learning on Cortex-M Embedded Devices

Artificial intelligence used to happen almost exclusively in the cloud, but this introduces delays (latency) for the users and higher costs for the provider, so it’s now very common to have on-device AI on mobile phones or other systems powered by application processors. But recently there’s been a push to bring machine learning capabilities to even lower-end embedded systems powered by microcontrollers, as we’ve seen with the GAP8 RISC-V IoT processor or the Arm Cortex-M55 core and Ethos-U55 micro NPU for Cortex-M microcontrollers, as well as TensorFlow Lite. Edge Impulse is another solution that aims to ease deployment of machine learning applications on Cortex-M embedded devices (aka Embedded ML or TinyML) by collecting real-world sensor data, training ML models on this data in the cloud, and then deploying the model back to the embedded device. The company collaborated with Arduino and announced support for the Arduino Nano 33 BLE Sense and […]

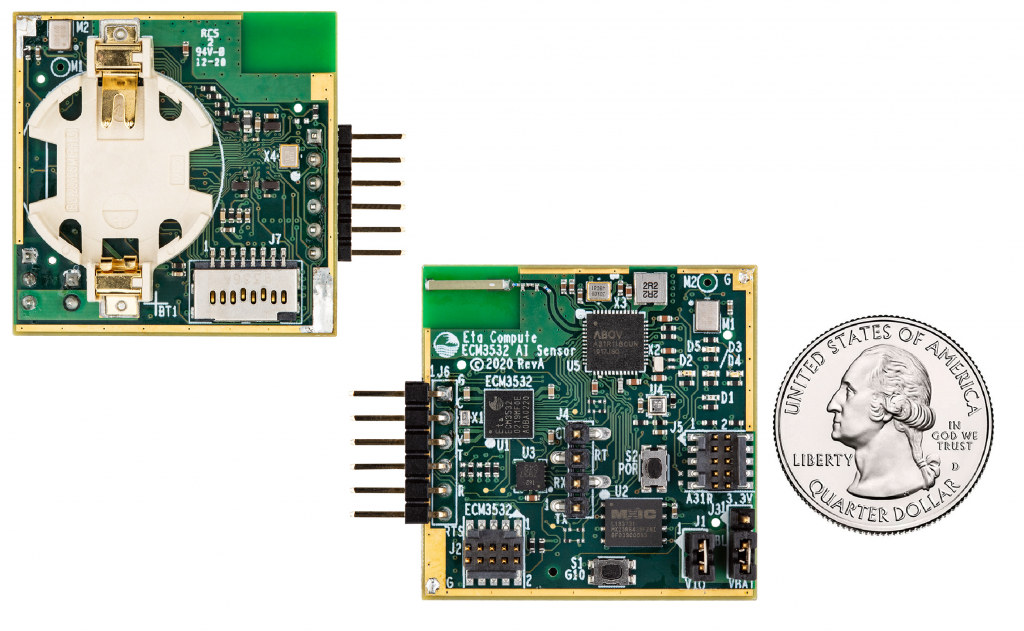

ECM3532 AI Sensor Board Features Cortex-M3 MCU & 16-bit DSP “TENSAI” SoC for TinyML Applications

Eta Compute ECM3532 is a system-on-chip (SoC) with a Cortex-M3 microcontroller clocked at up to 100 MHz and an NXP CoolFlux 16-bit DSP. It is designed for machine learning on embedded devices, aka TinyML, and is part of the company’s TENSAI platform. The chip is also integrated into the ECM3532 AI sensor board featuring two MEMS microphones, a pressure & temperature sensor, and a 6-axis motion sensor (accel/gyro), all powered by a CR2032 coin-cell battery. ECM3532 AI sensor board specifications:

- SoC – ECM3532 neural sensor processor with Arm Cortex-M3 core @ up to 100 MHz (< 5μA/MHz run mode) combined with 512KB embedded flash, 256KB SRAM, and 8KB BootROM + secure bootloader, and NXP CoolFlux 16-bit DSP @ up to 100 MHz with 32KB program memory, 64KB data memory. See the product brief for details.
- Storage – 64Mbit serial flash for datalogging
- Connectivity – Bluetooth 4.2 LE via ABOV Semiconductor A31R118 and PCB antenna […]

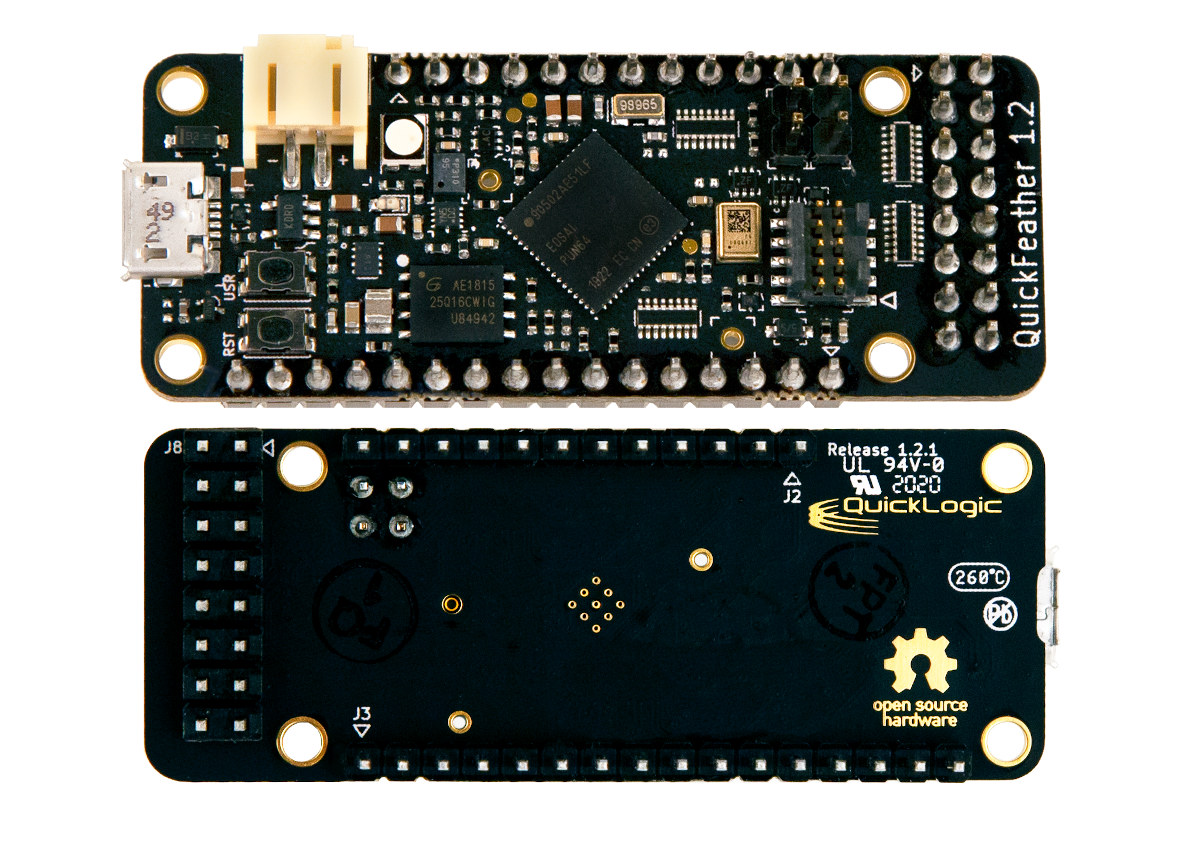

QuickFeather Board is Powered by QuickLogic EOS S3 Cortex-M4F MCU with embedded FPGA (Crowdfunding)

Yesterday, I wrote about what I felt was a pretty unique board: the Evo M51 board following the Adafruit Feather form factor, and equipped with an Atmel SAMD51 Cortex-M4F MCU and an Intel MAX 10 FPGA. But less than 24 hours later, I’ve come across another Adafruit Feather-sized Cortex-M4F board with FPGA fabric. Instead of using a two-chip solution, the QuickLogic QuickFeather board leverages the company’s EOS S3 SoC with a low-power Cortex-M4F core and embedded FPGA fabric. QuickFeather specifications:

- SoC – QuickLogic EOS S3 with Arm Cortex-M4F Microcontroller @ up to 80 MHz and 512 Kb SRAM, plus an embedded FPGA (eFPGA) with 2400 effective logic cells and 64Kb RAM
- Storage – 16Mbit SPI NOR flash
- USB – Micro USB port with data signals tied to eFPGA programmable logic
- Sensors – Accelerometer, pressure sensor, built-in PDM microphone
- Expansion I/Os – Breadboard-compatible 0.1″ (2.54 mm) pitch headers including 20 Feather-defined […]

Bamboo Systems B1000N 1U Server Features up to 128 64-bit Arm Cores, 512GB RAM

SolidRun CEx7-LX2160A COM Express module with NXP LX2160A 16-core Arm Cortex-A72 processor has been found in the company’s Janux GS31 Edge AI server in combination with several Gyrfalcon AI accelerators. Another company, Bamboo Systems, has now launched its own servers based on up to eight CEx7-LX2160A modules providing 128 Arm Cortex-A72 cores, support for up to 512GB DDR4 ECC, up to 64TB NVMe SSD storage, and delivering a maximum of 160Gb/s network bandwidth in a single rack unit. Bamboo Systems B1000N Server specifications:

- B1004N – 1 Blade System
- B1008N – 2 Blade System
- N series Blade with 4x compute nodes each (i.e. 4x CEx7 LX2160A COM Express modules)
- Compute Node – NXP LX2160A 16-core Cortex-A72 processor for a total of 64 cores per blade.
- Memory – Up to 64GB ECC DDR4 per compute node or 256GB per blade.
- Storage – 1x 2.5” NVMe SSD PCIe […]
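
The per-blade and per-system totals quoted above follow directly from the per-node figures. As a rough sanity check, here is a minimal Python sketch that derives them. The constant and function names are made up for illustration, and only the numbers given in the text (16 cores and up to 64GB per node, 4 nodes per blade, 1 or 2 blades) are used:

```python
# Figures taken from the specifications above; all names are illustrative.
CORES_PER_NODE = 16        # NXP LX2160A: 16x Cortex-A72 cores
NODES_PER_BLADE = 4        # 4x CEx7 LX2160A modules per N series blade
MAX_RAM_PER_NODE_GB = 64   # up to 64GB ECC DDR4 per compute node

# Number of blades in each model
BLADES = {"B1004N": 1, "B1008N": 2}


def totals(blades: int) -> dict:
    """Return node count, core count and maximum RAM for a given blade count."""
    nodes = blades * NODES_PER_BLADE
    return {
        "nodes": nodes,
        "cores": nodes * CORES_PER_NODE,
        "max_ram_gb": nodes * MAX_RAM_PER_NODE_GB,
    }


if __name__ == "__main__":
    for model, blades in BLADES.items():
        print(model, totals(blades))
```

With two blades, this gives eight compute nodes, 128 cores, and up to 512GB RAM, which matches the headline figures for the 1U server.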

Register to the Embedded Online Conference for Free Before February 29th

Events such as the Embedded Linux Conference and Embedded Systems Conference take place in the US and Europe every year. There are plenty of talks and it’s certainly good for networking, but you need to travel to the event, and the entrance fee for access to all sessions costs several hundred dollars if you book early, and over one thousand dollars if you register close to the date of the event. Most ELC/ELCE videos usually end up on The Linux Foundation YouTube channel, but the Beningo Embedded Group and the Embedded Related website decided to organize a similar conference happening online and simply called the “Embedded Online Conference”. The conference offers topics about embedded systems, DSP, machine learning, and FPGA, and will take place on May 20. There are currently 17 talks, but they are still calling for talks, so more sessions may be added before the actual event. You’ll […]