The DeepSeek R1 model was released a few weeks ago, and Brian Roemmele claimed to run it locally on a Raspberry Pi at 200 tokens per second, promising to release a Raspberry Pi image “as soon as all tests are complete”. He further explained that the Raspberry Pi 5 had a few HATs, including a Hailo AI accelerator, but that’s about all the information we have so far, and I assume he used the distilled model with 1.5 billion parameters. Jeff Geerling did his own tests with DeepSeek-R1 (Qwen 14B), but that was only on the CPU at 1.4 tokens/s, and he later installed an AMD W7700 graphics card on it for better performance. Other people made TinyZero models based on DeepSeek R1 optimized for the Raspberry Pi, but those are specific to countdown and multiplication tasks and still run on the CPU only. So I was happy to finally see Radxa release instructions to […]

Roboreactor – A Web-based platform to design Raspberry Pi or Jetson-based robots from electronics to code and 3D files

Roboreactor is a web-based platform that lets engineers build robotic and automation systems based on Raspberry Pi, NVIDIA Jetson, or other SBCs from a web browser, including parts selection, code generation through visual programming, and generation of URDF models from Onshape software. You can also create your robot with an LLM if you wish. The first step is to create a project with your robot specifications, then download and install the Genflow Mini image on your Raspberry Pi or NVIDIA Jetson SBC. Alternatively, you can install the Genflow Mini middleware with a script on other SBCs, but we’re told the process takes up to 10 hours… At this point, you should be able to access data from sensors and other peripherals connected to your board, and you can also start working on the Python code using visual programming through the Roboreactor node generator without having to write code or understand low-level algorithms. Another […]

Phison’s aiDAPTIV+ AI solution leverages SSDs to expand GPU memory for LLM training

While looking for new and interesting products, I found ADLINK’s DLAP Supreme series, a series of Edge AI devices built around the NVIDIA Jetson AGX Orin platform. But that was not the interesting part: what got my attention was its support for something called aiDAPTIV+ technology, which made me curious. Looking into it, I found that the aiDAPTIV+ AI solution is a hybrid (software and hardware) solution that uses readily available, low-cost NAND flash storage to enhance the capabilities of GPUs in order to streamline and scale large language model (LLM) training for small and medium-sized businesses. This design allows organizations to train their data models on standard, off-the-shelf hardware, overcoming limitations with more complex models like Llama-2 7B. The solution supports up to 70B model parameters with low latency and high-endurance storage (100 DWPD) using SLC NAND. It is designed to easily integrate with existing AI applications without requiring hardware changes, […]

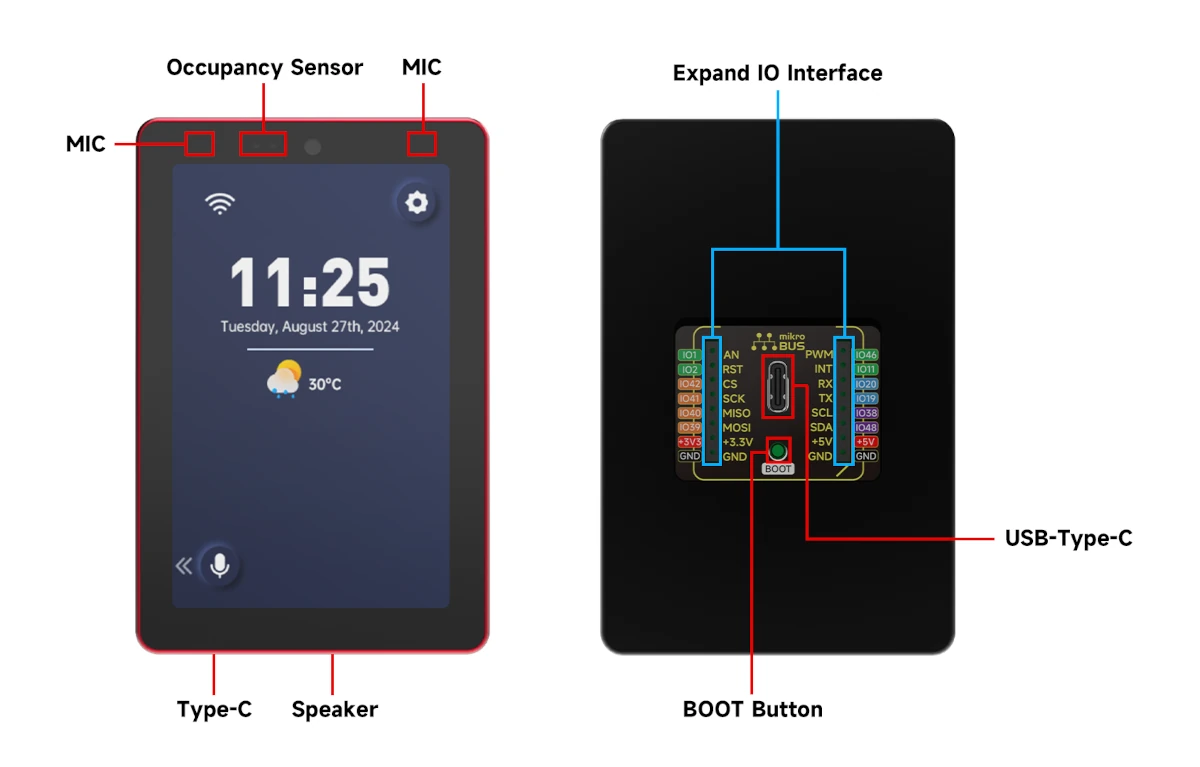

ESP32 Agent Dev Kit is an LLM-powered voice assistant built on the ESP32-S3 platform (Crowdfunding)

The ESP32 Agent Dev Kit is an ESP32-S3-powered voice assistant that offers integrations with popular LLMs such as ChatGPT, Gemini, and Claude. Wireless-Tag says the Dev Kit is suitable for “95% of AIoT applications, from smart home devices to desktop toys, robotics, and instruments”. In some ways, it is similar to the SenseCAP Watcher, but it has a larger, non-touch display and dual mic input. However, it does not support local language models. It also features a standard MikroBUS interface for expansion. For voice capabilities, the ESP32 Agent Dev Kit integrates two onboard, noise-reducing microphones and a high-fidelity speaker. The built-in infrared laser proximity sensor detects human proximity and movement for “smart interactive experiences”. ESP32 Agent Dev Kit specifications: MCU – ESP32-S3 dual-core Tensilica LX7 microcontroller @ 240MHz, 8MB PSRAM Storage – 16MB flash Display – 3.5-inch display, 480×360 resolution Camera – 5MP OmniVision OV5647 camera module, 120° field of […]

M5Stack LLM630 Compute Kit features Axera AX630C Edge AI SoC for on-device LLM and computer vision processing

M5Stack LLM630 Compute Kit is an Edge AI development platform powered by the Axera Tech AX630C AI SoC with a 3.2 TOPS NPU designed to run computer vision (CV) and large language model (LLM) tasks at the edge, that is, on the device itself without access to the cloud. The LLM630 Compute Kit is also equipped with 4GB LPDDR4 and 32GB eMMC flash and supports both wired and wireless connectivity thanks to a JL2101-N040C Gigabit Ethernet chip and an ESP32-C6 module for 2.4GHz WiFi 6 connectivity. You can also connect a display and a camera through MIPI DSI and CSI connectors. M5Stack LLM630 Compute Kit specifications: SoC – Axera Tech (Aixin in China) AX630C CPU – Dual-core Arm Cortex-A53 @ 1.2 GHz; 32KB I-Cache, 32KB D-Cache, 256KB L2 Cache NPU – 12.8 TOPS @ INT4 (max), 3.2 TOPS @ INT8 ISP – 4K @ 30fps Video – Encoding: 4K; Decoding: 1080p […]

$249 NVIDIA Jetson Orin Nano Super Developer Kit targets generative AI applications at the edge

NVIDIA Jetson Orin Nano Super Developer Kit is an upgrade to the Jetson Orin Nano Developer Kit with 1.7 times more generative AI performance, a 70% increase in performance to 67 INT8 TOPS, and about half the price, making it a great development platform for generative AI at the edge, mostly robotics. We’ve seen several AI boxes and boards in the last year capable of offline generative AI applications, like the Firefly AIO-1684XQ motherboard or the Radxa Fogwise Airbox, which I reviewed with Llama3, Stable Diffusion, Imgsearch, etc… A product like the Fogwise Airbox delivers up to 32 TOPS (INT8) and sells for around $330, which was very competitive then (June 2024). However, the Jetson Orin Nano Super Developer Kit will certainly disrupt the market with over twice the performance, a lower price, and a larger developer community. NVIDIA Jetson Orin Nano Super specifications: NVIDIA Jetson Orin Nano 8GB Module CPU […]

The SenseCAP Watcher is a voice-controlled, physical AI agent for LLM-based space monitoring (Crowdfunding)

Seeed Studio has launched a Kickstarter campaign for the SenseCAP Watcher, a physical AI agent capable of monitoring a space and taking actions based on events within that area. Described as the “world’s first Physical LLM Agent for Smarter Spaces,” the SenseCAP Watcher leverages onboard and cloud-based technologies to “bridge the gap between digital intelligence and physical applications.” The SenseCAP Watcher is powered by an ESP32-S3 microcontroller coupled with a Himax WiseEye2 HX6538 chip (Cortex-M55 and Ethos-U55 microNPU) for image and vector data processing. It builds on the Grove Vision AI V2 module and comes in a form factor about one-third the size of an iPhone. Onboard features include a camera, touchscreen, microphone, and speaker, supporting voice command recognition and multimodal sensor expansion. It runs the SenseCraft software suite which integrates on-device tinyML models with powerful large language models, either running on a remote cloud server or a local computer […]

Firefly EC-R3576PC FD is an Embedded Large-Model Computer based on Rockchip RK3576 processor

Firefly EC-R3576PC FD is described as an “Embedded Large-Model Computer” powered by a Rockchip RK3576 octa-core Cortex-A72/A53 processor with a 6 TOPS NPU and supporting large language models (LLMs) such as Gemma-2B, LlaMa2-7B, ChatGLM3-6B, or Qwen1.5-1.8B. It looks to be based on the ROC-RK3576-PC SBC we covered a few weeks ago, which was also designed for LLMs. But the EC-R3576PC FD is a turnkey solution that will work out of the box and should deliver decent performance now that the RKLLM toolkit has been released with NPU acceleration. However, note there are some caveats to doing that on the RK3576 instead of the RK3588 that we’ll discuss below. Firefly EC-R3576PC FD specifications: SoC – Rockchip RK3576 CPU – 4x Cortex-A72 cores at 2.2GHz, 4x Cortex-A53 cores at 1.8GHz, Arm Cortex-M0 MCU at 400MHz GPU – ARM Mali-G52 MC3 GPU clocked at 1GHz with support for OpenGL ES 1.1, 2.0, and 3.2, OpenCL up to 2.0, and […]