Radxa Fogwise Airbox, also known as Fogwise BM168M, is an edge AI box powered by a SOPHON BM1684X Arm SoC with a built-in 32 TOPS TPU and a VPU capable of handling the decoding of up to 32 HD video streams. The device is equipped with 16GB LPDDR4x RAM and 64GB eMMC flash and features two gigabit Ethernet RJ45 jacks, a few USB ports, a speaker, and more. Radxa sent us a sample for evaluation. We’ll start the Radxa Fogwise Airbox review by checking out the specifications and the hardware with an unboxing and a teardown, before testing various AI workloads with TensorFlow and/or other frameworks in the second part of the review. Radxa Fogwise Airbox specifications The specifications below come from the product page as of May 30, 2024: SoC – SOPHON SG2300x CPU – Octa-core Arm Cortex-A53 processor up to 2.3 GHz VPU Decoding of up to […]

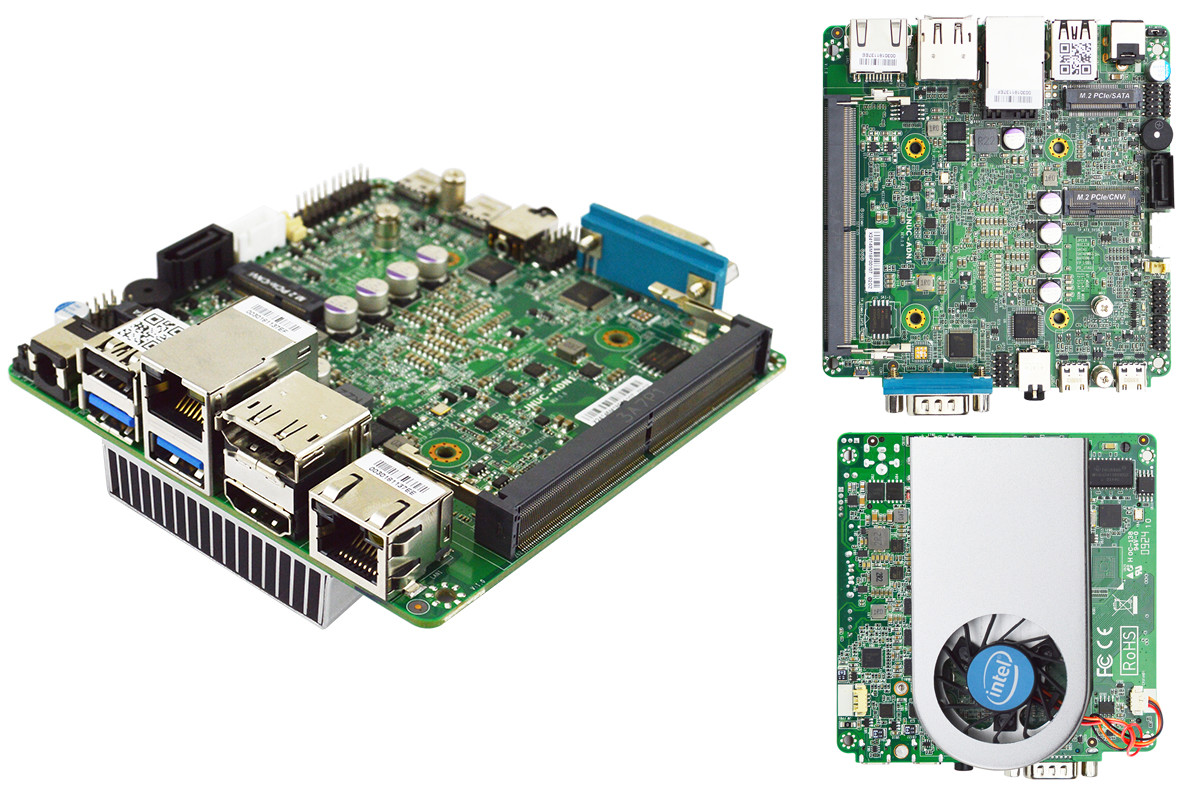

Jetway JNUC-ADN1 4″x4″ Intel N97 “NUC” SBC features two 2.5GbE ports for industrial automation and edge computing

Jetway JNUC-ADN1 is an Intel N97-powered SBC in the Next Unit of Computing (NUC) form factor. The SBC can be equipped with up to 16GB DDR5 RAM via a single SO-DIMM socket, 64GB of eMMC storage, and dual M.2 sockets for additional storage and networking. The SBC comes with two 2.5GbE Ethernet ports and has an operating temperature range of -20°C ~ 60°C, making it suitable for Edge Computing, IoT, and industrial applications. Jetway offers four variants of the JNUC-ADN1 SBC: the N97000 version does not include eMMC or TPM 2.0 security, the N97002 version features TPM 2.0 but doesn’t have eMMC, the N97004 version omits TPM 2.0 but offers 64GB of eMMC storage, and finally the N97008 version has both TPM 2.0 and 64GB of storage. Previously, we have written about similar SBCs from Jetway like the JF35-ADN1 and the Jetway MI05-0XK, and we also covered several N97-based industrial […]

de next-TGU8-EZBOX tiny Tiger Lake mini PC is offered with fanless or active-cooling options

AAEON claims the de next-TGU8-EZBOX is the world’s smallest Edge PC with an embedded Intel Core CPU. It is based on the de next-TGU8 SBC introduced in 2022 with up to an Intel Core i7-1185G7E Tiger Lake SoC, 16GB LPDDR4, and M.2 NVMe storage. The de next-TGU8-EZBOX is offered either as a fanless mini PC measuring 95.5 x 69.5 x 42.5mm, or a slightly taller system (45.4mm) with an active cooler. The mini PC features an HDMI port, Gigabit Ethernet and 2.5GbE ports, and USB 3.0 ports, making it suitable for edge computing, digital signage, and industrial use. de next-TGU8-EZBOX specifications: SoC Intel Core i7-1185G7E quad-core/octa-thread processor @ 1.80GHz / 4.40GHz with Intel UHD graphics; TDP: 15W (TDP up: 28W); cooler version only Intel Core i5-1145G7E quad-core/octa-thread processor @ 1.50GHz / 4.10GHz with Intel UHD graphics; TDP: 15W (TDP up: 28W); cooler version only Intel Core i3-1115G4E dual-core/quad-thread processor […]

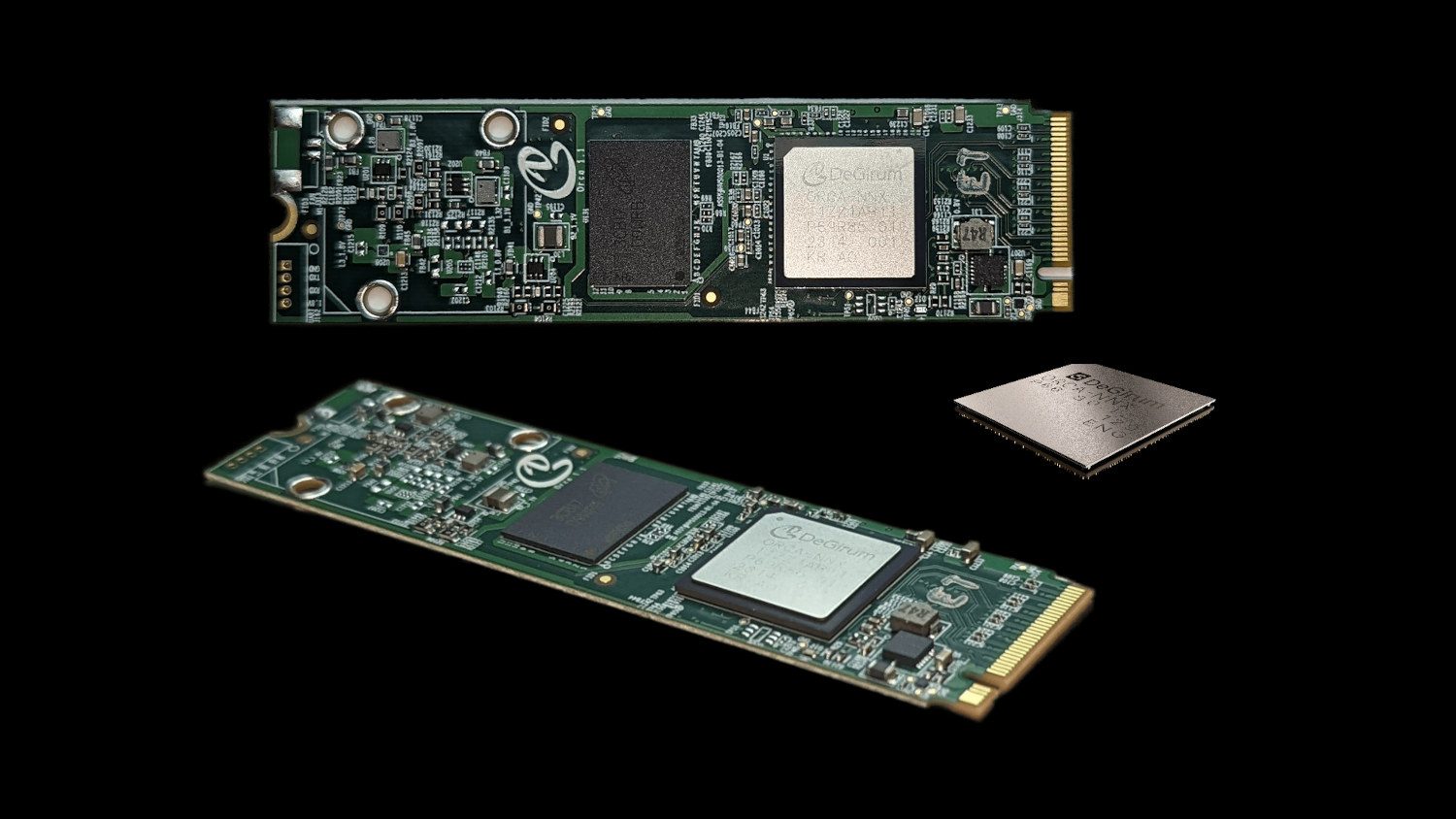

DeGirum ORCA M.2 and USB Edge AI accelerators support Tensorflow Lite and ONNX model formats

I’ve just come across an Atom-based Edge AI server offered with a range of AI accelerator modules, namely the Hailo-8, Blaize P1600, DeGirum ORCA, and MemryX MX3. I had never heard about the last two, and we may cover the MemryX module a little later, but today, I’ll have a closer look at the DeGirum ORCA chip and M.2 PCIe module. The DeGirum ORCA is offered as an ASIC, an M.2 2242 or 2280 PCIe module, or (soon) a USB module and supports TensorFlow Lite and ONNX model formats and INT8 and Float32 ML precision. They were announced in September 2023, and have already been tested in a range of mini PCs and embedded box PCs from Intel (NUC), AAEON, GIGABYTE, BESSTAR, and Seeed Studio (reComputer). DeGirum ORCA specifications: Supported ML Model Formats – ONNX, TFLite Supported ML Model Precision – Float32, Int8 DRAM Interface – Optional 1GB, 2GB, or 4GB […]

BrainChip’s Neuromorphic Akida Edge AI Box is now available for pre-orders at $799

BrainChip has recently opened preorders for their Akida Edge AI Box, built in partnership with VVDN Technologies. This box features an NXP i.MX 8M Plus SoC and two Akida AKD1000 neuromorphic processors for low-latency, high-throughput AI processing at the edge. The system features USB 3.0 and micro-USB ports, HDMI, 4GB LPDDR4 memory, 32GB eMMC with up to 1TB micro-SDXC expansion, dual-band Wi-Fi, and two gigabit Ethernet ports for external camera connections, all within a compact, passively-cooled chassis, powered by 12V DC. BrainChip Akida Edge AI Box Specifications: Host CPU – NXP i.MX 8M Plus Quad SoC with 64-bit Arm Cortex-A53 processor running at up to 1.8GHz AI/ML Accelerator – Dual BrainChip AKD1000 (Akida Chip) over PCIe for efficient AI processing Memory – 4GB LPDDR4 Storage 32GB eMMC flash MicroSD card slot for additional storage options Display Output – HDMI output supporting up to 3840 x 2160p30 resolutions with a pixel clock […]

Digi IX40 5G edge computing industrial IoT cellular gateway is designed for Industry 4.0 use cases

Digi IX40 is a 5G edge computing industrial IoT cellular router solution designed for Industry 4.0 use cases such as advanced robotics, predictive maintenance, asset monitoring, industrial automation, and smart manufacturing. The IIoT gateway is based on an NXP i.MX 8M Plus Arm processor running a custom Linux distribution, and besides 5G and 4G LTE cellular connectivity, offers gigabit Ethernet networking with 6 RJ45 and SFP ports, GNSS for geolocation and time, as well as digital and analog I/Os and an RS232/RS422/RS485 serial interface supporting Modbus. Digi IX40 specifications: SoC – NXP i.MX 8M Plus Arm Cortex-A53 processor @ 1.6 GHz with 2.3 TOPS NPU System Memory – 1GB RAM Storage – 8GB eMMC flash Wireless Cellular IX40-05 5G NSA, 5G SA, 4G LTE-ADVANCED PRO CAT 19 5G NR bands – n1, n2, n3, n5, n7, n8, n12, n13, n14, n18, n20, n25, n26, n28, n29, n30, n38, n40, n41, […]

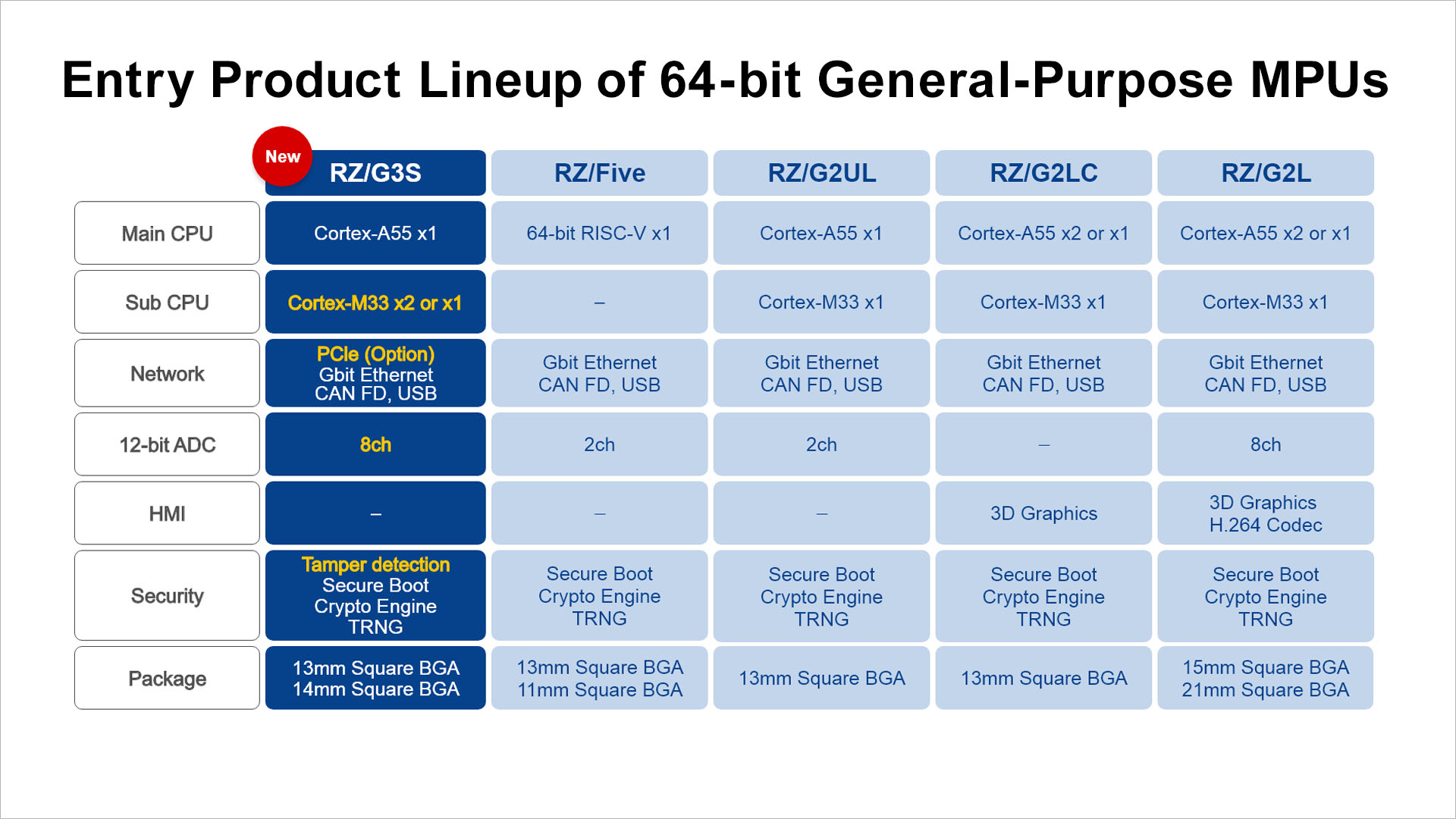

Renesas RZ/G3S is a 64-bit Arm Cortex-A55/M33 microprocessor with low power consumption and enhanced peripherals

Renesas RZ/G3S is a 64-bit Arm Cortex-A55 microprocessor (MPU) designed for IoT edge and gateway devices that consumes as little as 10µW in standby mode thanks to its Cortex-M33 core(s) and features a PCIe interface for high-speed data transfers with 5G wireless modules. A single-core Arm Cortex-A55 CPU powers the RZ/G3S and can distribute workloads to two sub-CPUs, boosting efficiency in task handling and resulting in fewer components and a smaller system size. The Cortex-A55 core operates at 1.1GHz and is designed to improve power efficiency and performance over its predecessor, the Cortex-A53. The RZ/G3S isn’t Renesas’ first Cortex-A55 product as they have previously released the RZ/A3UL and the RZ/G2L, both powered by the same Cortex-A55 CPU. Additionally, Renesas launched the 64-bit RISC-V RZ/Five processor late last year. The RZ/G3S boasts high-speed interfaces such as PCIe and GbE, a better standby mode, and improved security features which make it suitable for IoT applications […]

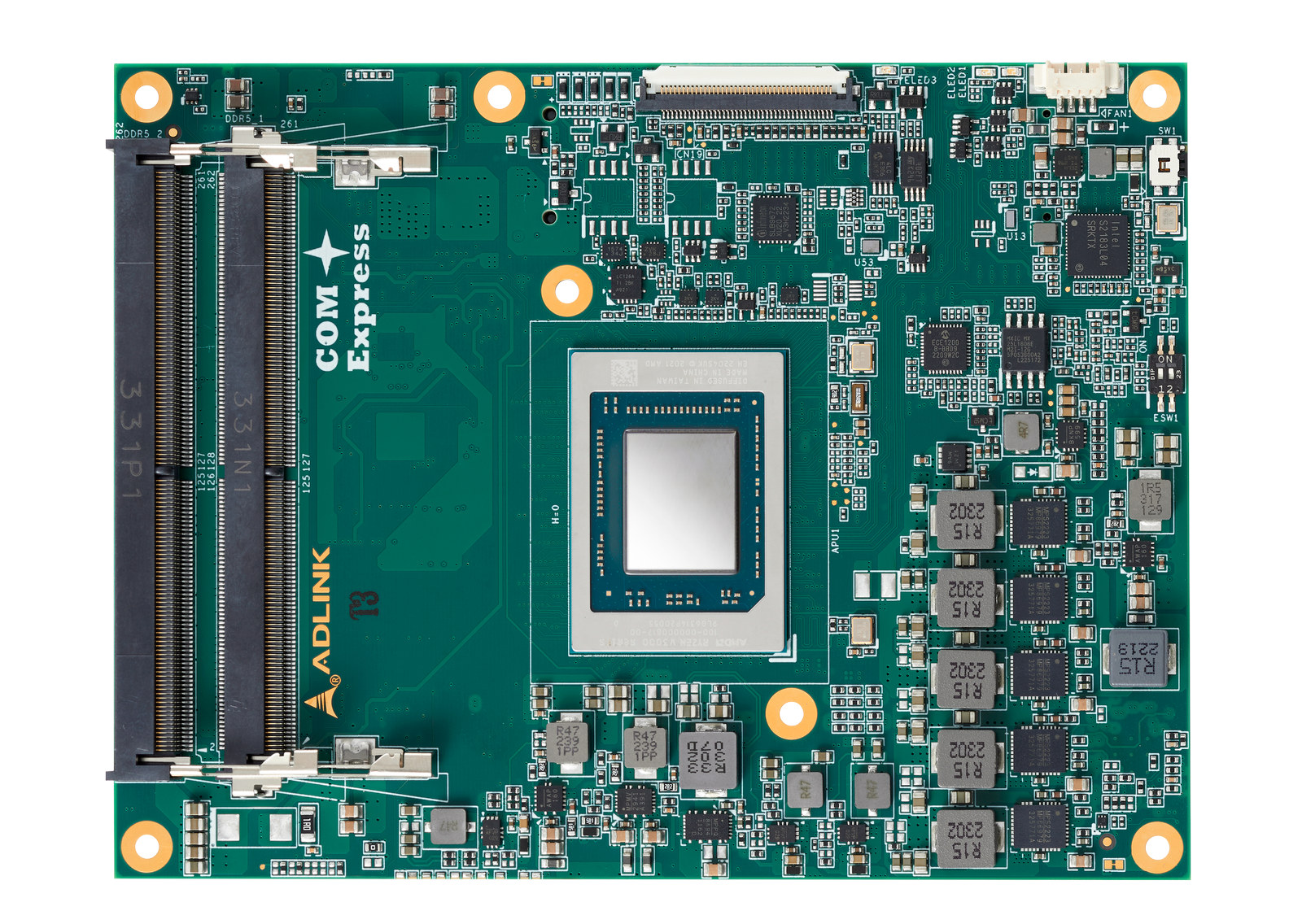

AMD Ryzen Embedded V3000 COM Express Type 7 module supports up to 64GB DDR5 memory

ADLINK Express-VR7 is a COM Express Basic size Type 7 computer-on-module powered by the eight-core AMD Ryzen Embedded V3000 processor with two 10GbE interfaces, fourteen PCIe Gen 4 lanes, and optional support for the “extreme temperature range” between -40°C and 85°C. The COM Express module supports up to 64GB dual-channel DDR5 SO-DIMM (ECC/non-ECC) memory and targets headless embedded applications such as edge networking equipment, 5G infrastructure at the edge, video storage analytics, intelligent surveillance, industrial automation and control, and rugged edge servers. Express-VR7 specifications: SoC – AMD Embedded Ryzen V3000 (one or the other) Ryzen V3C48 8-core/16-thread processor @ 3.3/3.8GHz; TDP: 45W Ryzen V3C44 4-core/8-thread processor @ 3.5/3.8GHz; TDP: 45W Ryzen V3C18I 8-core/16-thread processor @ 1.9/3.8GHz; TDP: 15W (useful in the extreme temperature range) Ryzen V3C16 6-core/12-thread processor @ 2.0/3.8GHz; TDP: 15W Ryzen V3C14 4-core/8-thread processor @ 2.3/3.8GHz; TDP: 15W System Memory – Up to 64GB (2x 32GB) dual-channel ECC/non-ECC DDR5 memory […]