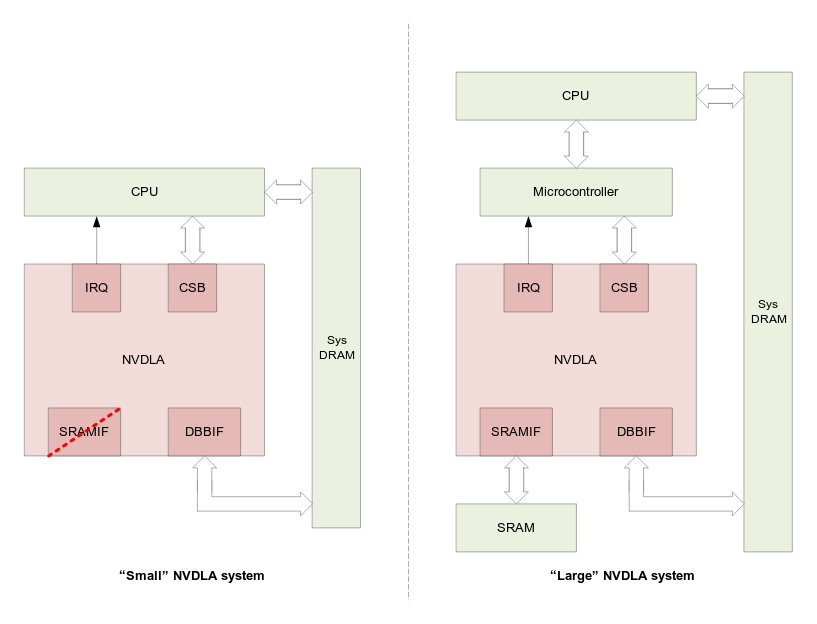

NVIDIA is not exactly known for its commitment to open source projects, but to be fair, things have improved since Linus Torvalds gave them the finger a few years ago. Although they don’t seem to help much with the Nouveau drivers, I’ve usually read positive feedback about Linux support on their Nvidia Jetson boards. So this morning I was quite surprised to read that the company had launched NVDLA (NVIDIA Deep Learning Accelerator), a “free and open architecture that promotes a standard way to design deep learning inference accelerators”. The project is based on the Xavier hardware architecture designed for automotive products, is scalable from small to large systems, and is said to be a complete solution, with Verilog and a C-model for the chip, Linux drivers, test suites, kernel- and user-mode software, and software development tools, all available on GitHub’s NVDLA account. The project is not released under a standard open source license like MIT, […]

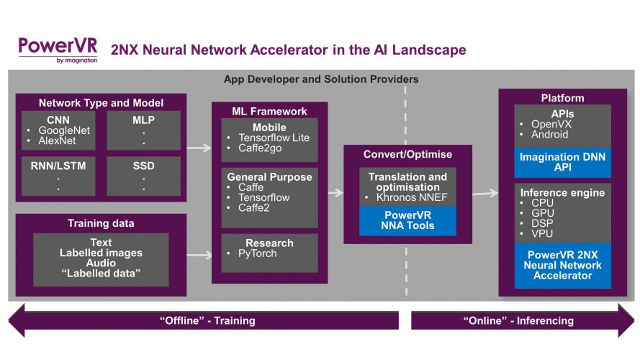

Imagination Announces PowerVR Series2NX Neural Network Accelerator (NNA), and PowerVR Series9XE and 9XM GPUs

Imagination Technologies has just made two announcements: one for their PowerVR Series2NX neural network accelerator, and the other for the new high-end GPU families: PowerVR Series9XE and 9XM.

PowerVR Series2NX neural network accelerator

The company claims 2NX can deliver twice the performance and half the bandwidth of its nearest competitor, and that it’s the first dedicated hardware solution with flexible bit-depth support from 16-bit down to 4-bit. Key benefits of their solution (based on market data available in August 2017 from a variety of sources) include:

- Highest inference/mW IP cores to deliver the lowest power consumption
- Highest inference/mm² IP cores to enable the most cost-effective solutions
- Lowest bandwidth solution with support for fully flexible bit depth for weights and data, including low bandwidth modes down to 4-bit
- 2048 MACs/cycle in a single core, with the ability to go to higher levels with multi-core

The PowerVR 2NX NNA is expected to be […]
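To make the bandwidth argument concrete, here is a small Python sketch of why lower bit depth matters: storing or streaming the same number of weights at 8-bit or 4-bit takes half or a quarter of the bytes needed at 16-bit. The 7-million-weight figure is a hypothetical size, and `quantize_symmetric` is a generic per-tensor symmetric quantization scheme picked for illustration; Imagination has not said this is how 2NX handles weights.

```python
import math


def weight_bytes(num_weights: int, bits: int) -> int:
    """Bytes needed to store num_weights values packed at the given bit depth."""
    return math.ceil(num_weights * bits / 8)


def quantize_symmetric(values, bits):
    """Generic symmetric quantization (an illustrative assumption, not 2NX's scheme):
    map floats to signed integers in [-qmax, qmax] using one scale for the tensor."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


if __name__ == "__main__":
    n = 7_000_000  # hypothetical model size, not a figure from the announcement
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_bytes(n, bits) / 1e6:.1f} MB")
    q, scale = quantize_symmetric([0.5, -1.0, 0.25], 4)
    print(q, scale)
```

Each halving of the bit depth halves the memory traffic for weights, at the cost of coarser values, which is the trade-off a flexible bit-depth accelerator lets developers tune.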

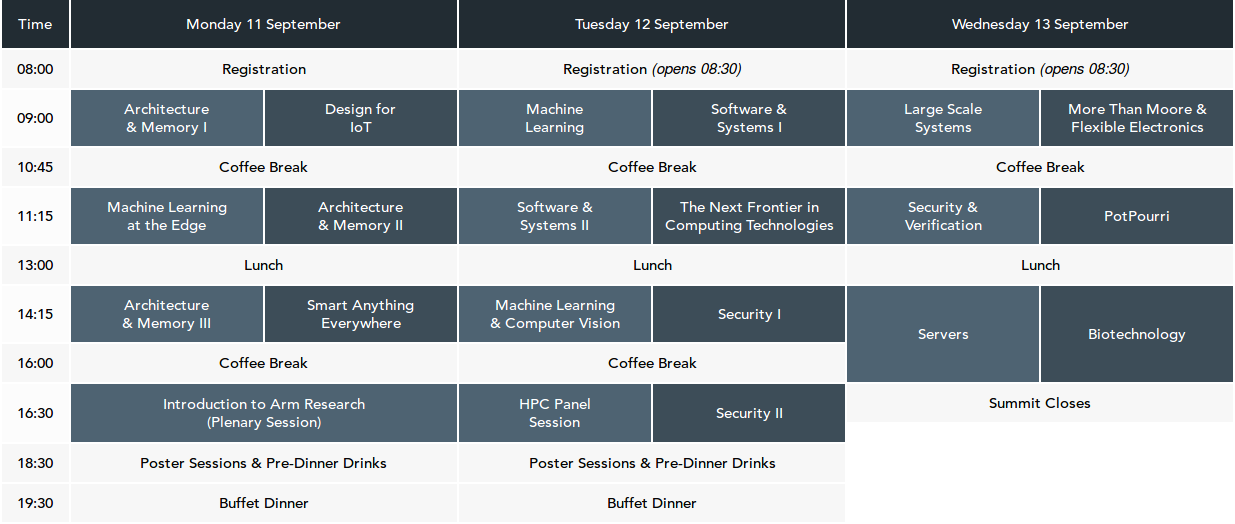

Arm Research Summit 2017 Streamed Live on September 11-13

The Arm Research Summit is “an academic summit to discuss future trends and disruptive technologies across all sectors of computing”, with the second edition of the event taking place now in Cambridge, UK until September 13, 2017. The agenda includes various subjects such as architecture and memory, IoT, HPC, computer vision, machine learning, security, servers, biotechnology and others. You can find the full detailed schedule for each day on Arm’s website, and the good news is that the talks are streamed live on YouTube, so you can follow the talks that interest you from the comfort of your home/office. Note that you can switch between rooms in the stream by clicking on the <-> icon. Audio volume is a little low… Thanks to Nobe for the tip.

Jean-Luc Aufranc (CNXSoft): Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting […]

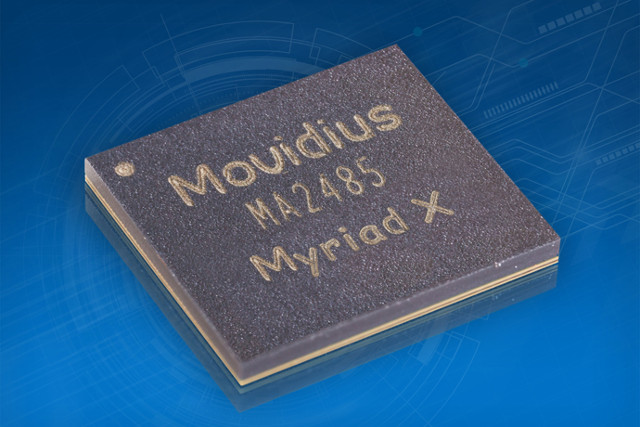

Intel Introduces Movidius Myriad X Vision Processing Unit with Dedicated Neural Compute Engine

Intel has just announced the third generation of Movidius Vision Processing Units (VPUs) with the Myriad X VPU, which the company claims is the world’s first SoC shipping with a dedicated Neural Compute Engine for accelerating deep learning inference at the edge, giving devices the ability to see, understand and react to their environments in real time. Movidius Myriad X VPU key features:

- Neural Compute Engine – Dedicated on-chip accelerator for deep neural networks delivering over 1 trillion operations per second of DNN inferencing performance (based on peak floating-point computational throughput)
- 16x programmable 128-bit VLIW vector processors (SHAVE cores) optimized for computer vision workloads
- 16x configurable MIPI lanes – Connect up to 8 HD resolution RGB cameras for up to 700 million pixels per second of image signal processing throughput
- 20x vision hardware accelerators to perform tasks such as optical flow and stereo depth
- On-chip memory – 2.5 MB homogeneous […]
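As a rough sanity check on the camera figures, the sketch below splits the quoted 700 million pixels per second evenly across 8 cameras and derives the frame rate each camera could sustain. The even split and the two “HD” resolutions tried (720p and 1080p) are assumptions; Intel’s announcement doesn’t specify either.

```python
def max_fps(total_pixels_per_s: float, cameras: int, width: int, height: int) -> float:
    """Frame rate per camera if ISP throughput were shared evenly (an assumption)."""
    return total_pixels_per_s / cameras / (width * height)


ISP_THROUGHPUT = 700e6  # pixels per second, as quoted by Intel
CAMERAS = 8             # "up to 8 HD resolution RGB cameras"

if __name__ == "__main__":
    # "HD" is ambiguous, so both common interpretations are computed.
    for name, (w, h) in {"720p": (1280, 720), "1080p": (1920, 1080)}.items():
        print(f"{name}: {max_fps(ISP_THROUGHPUT, CAMERAS, w, h):.1f} fps per camera")
```

Under these assumptions, eight 1080p cameras would get a little over 42 fps each, so the headline figure is consistent with real-time multi-camera use.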

Movidius Neural Compute Stick Shown to Boost Deep Learning Performance by about 3 Times on Raspberry Pi 3 Board

Intel recently launched the Movidius Neural Compute Stick (MvNCS) for low power USB-based deep learning applications such as object recognition, and after some initial confusion, we could confirm the Neural Compute Stick could also be used on ARM-based platforms such as the Raspberry Pi 3. Kochi Nakamura, who wrote the code for GPU accelerated object recognition on the Raspberry Pi 3 board, got hold of a sample in order to compare the performance between GPU and MvNCS acceleration. His first results were quite confusing: with GoogLeNet, the Raspberry Pi 3 + MvNCS achieved an average inference time of about 560 ms, against 320 ms when using the VideoCore IV GPU in the RPi3 board. But then it was discovered that the “stream_infer.py” demo would only use one out of the 12 VLIW 128-bit vector SHAVE processors in Intel’s Movidius Myriad 2 VPU, and after enabling all 12 cores instead of just one, […]
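For readers who want to run similar comparisons, here is a minimal, generic timing harness in Python. `average_inference_ms` and `speedup` are hypothetical helpers, not code from the `stream_infer.py` demo; you would pass in whatever function actually runs inference on the GPU, the MvNCS, or the CPU. Warm-up runs are excluded so that one-off setup costs don’t inflate the average.

```python
import time
from statistics import mean
from typing import Callable, Sequence


def average_inference_ms(infer: Callable[[object], object],
                         inputs: Sequence[object],
                         warmup: int = 2) -> float:
    """Average wall-clock time per infer() call, in milliseconds.

    The first `warmup` inputs are run but not timed, so one-off costs
    (loading a graph, allocating buffers) don't skew the result.
    """
    if len(inputs) <= warmup:
        raise ValueError("need more inputs than warm-up runs")
    for x in inputs[:warmup]:
        infer(x)
    timings = []
    for x in inputs[warmup:]:
        start = time.perf_counter()
        infer(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    return mean(timings)


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """How many times faster the candidate is than the baseline (>1 means faster)."""
    return baseline_ms / candidate_ms


if __name__ == "__main__":
    # First GoogLeNet results quoted above: single-core MvNCS vs VideoCore IV GPU.
    print(f"GPU vs single-core MvNCS: {speedup(560, 320):.2f}x")  # 1.75x
    # Hypothetical dummy workload standing in for a real inference call.
    dummy = lambda x: sum(range(10_000))
    print(f"dummy workload: {average_inference_ms(dummy, list(range(12))):.3f} ms")
```

With the first figures, the GPU is 1.75 times faster than the stick running on a single SHAVE core, which is why that initial result looked so odd.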

Intel’s Movidius Neural Compute Stick Brings Low Power Deep Learning & Artificial Intelligence Offline

Intel has released several Compute Sticks over the years, which can be used as tiny Windows or Linux computers connected to the HDMI port of your TV or monitor, but the Movidius Neural Compute Stick is a completely different beast, as it’s a deep learning inference kit and self-contained artificial intelligence (A.I.) accelerator that connects to the USB port of computers or laptops. Intel did not provide the full hardware specifications for the kit, but we do know the following:

- Vision Processing Unit – Intel Movidius Myriad 2 VPU with 12 VLIW 128-bit vector SHAVE processors @ 600 MHz optimized for machine vision; configurable hardware accelerators for image and vision processing; 28nm HPC process node; up to 100 gigaflops
- USB 3.0 type A port
- Power Consumption – Low power, the SoC has a 1W power profile
- Dimensions – 72.5mm x 27mm x 14mm

You can enter a trained Caffe, feed-forward […]

Intel DLIA is a PCIe Card Powered by Arria 10 FPGA for Deep Learning Applications

Intel has just launched their DLIA (Deep Learning Inference Accelerator) PCIe card powered by an Intel Arria 10 FPGA, aimed at accelerating CNN (convolutional neural network) workloads such as image recognition while lowering power consumption. Some of Intel DLIA hardware specifications:

- FPGA – Intel (previously Altera) Arria 10 FPGA @ 275 MHz delivering up to 1.5 TFLOPS
- System Memory – 2 banks 4G 64-bit DDR4
- PCIe – Gen3 x16 host interface; x8 electrical; x16 power & mechanical
- Form Factor – Full-length, full-height, single wide PCIe card
- Operating Temperature – 0 to 85 °C
- TDP – 50-75 W, hence the two cooling fans

The card is supported in CentOS 7.2, relies on the Intel Caffe framework and the Math Kernel Library for Deep Neural Networks (MKL-DNN), and works with various network topologies (AlexNet, GoogLeNet, CaffeNet, LeNet, VGG-16, SqueezeNet…). The FPGA is pre-programmed with the Intel Deep Learning Accelerator IP (DLA IP). Intel DLIA can […]
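The two headline numbers for the card can be related with simple arithmetic: dividing peak throughput by clock frequency gives the work done per clock cycle. Counting one multiply-accumulate (MAC) as two floating-point operations is a common convention that we assume here; Intel doesn’t say how it counts operations.

```python
PEAK_FLOPS = 1.5e12  # "up to 1.5 TFLOPS" quoted for the DLIA card
CLOCK_HZ = 275e6     # Arria 10 FPGA clock quoted for the card

flops_per_cycle = PEAK_FLOPS / CLOCK_HZ
# Assumption: 1 MAC = 2 FLOPs (one multiply plus one add).
macs_per_cycle = flops_per_cycle / 2

print(f"{flops_per_cycle:.0f} FLOPs/cycle, about {macs_per_cycle:.0f} MACs/cycle at peak")
```

That works out to roughly 5,455 FLOPs, or about 2,727 MACs, per cycle at peak, which gives a sense of how much parallel arithmetic the DLA IP must spread across the FPGA fabric.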

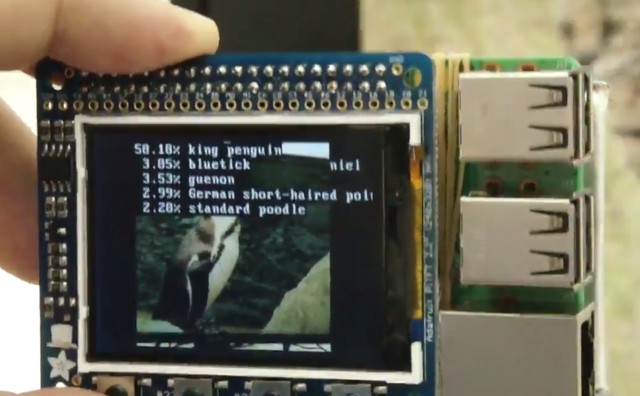

GPU Accelerated Object Recognition on Raspberry Pi 3 & Raspberry Pi Zero

You’ve probably already seen one or more object recognition demos, where a system equipped with a camera detects the type of object using deep learning algorithms either locally or in the cloud. It’s used, for example, in autonomous cars to detect pedestrians, pets, other cars and so on. Kochi Nakamura and his team have developed software based on the GoogLeNet deep neural network with a 1000-class image classification model running on Raspberry Pi Zero and Raspberry Pi 3, leveraging the VideoCore IV GPU found in Broadcom BCM283x processors to detect objects faster than with the CPU: about 3 times faster than using the four Cortex-A53 cores in the RPi 3. They just connected a battery, a display, and the official Raspberry Pi camera to the Raspberry Pi boards to recognize various objects and animals. The first demo is with Raspberry Pi Zero. […]
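An image classifier like the one in this demo outputs one score per class (1000 here), and the application then picks the most likely labels. The Python sketch below shows that final step with a numerically stable softmax followed by a top-k selection. The labels and logits are hypothetical placeholders, and the demo’s actual post-processing code may differ.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: Sequence[float], labels: Sequence[str],
          k: int = 5) -> List[Tuple[str, float]]:
    """Return the k most likely (label, probability) pairs, highest first."""
    probs = softmax(logits)
    best = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [(labels[i], probs[i]) for i in best]


if __name__ == "__main__":
    # Hypothetical 1000-class output; a real network would produce these logits.
    labels = [f"class_{i}" for i in range(1000)]
    logits = [0.0] * 1000
    logits[281], logits[285] = 8.0, 6.5  # two strongly activated classes
    for label, p in top_k(logits, labels, k=3):
        print(f"{label}: {p:.3f}")
```

Subtracting the maximum logit before calling `math.exp` leaves the probabilities unchanged but avoids overflow when scores are large.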