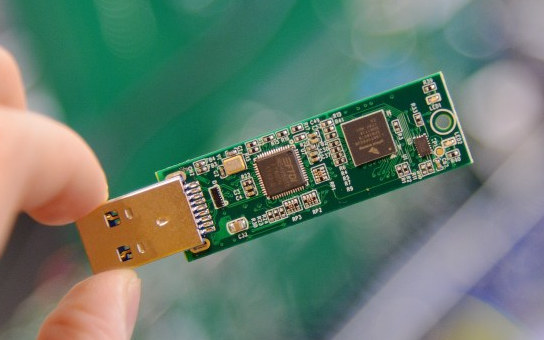

Intel’s Movidius Neural Compute Stick is a low-power deep learning inference kit and “self-contained” artificial intelligence (A.I.) accelerator that connects to the USB port of computers or development boards like Raspberry Pi 3, delivering three times more performance than a solution accelerated with VideoCore IV GPU. So far it was the only A.I. USB stick solution that I had heard of, but Gyrfalcon Technology, a US startup funded at the beginning of last year, has developed its own “artificial intelligence processor” with Lightspeeur 2801S, as well as a neural USB compute stick featuring the solution: Laceli AI Compute Stick. The company claims Laceli AI Compute Stick delivers 2.8 TOPS (trillion operations per second) within 0.3 Watt of power, which is about 90 times more efficient than the Movidius USB Stick that can deliver 100 GFLOPS (0.1 TOPS) within 1 Watt of power. Information about the processor and stick […]
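The "90 times more efficient" figure can be checked with quick arithmetic. The snippet below is only a sketch based on the vendor numbers quoted above; the function and variable names are made up for illustration, and it assumes, as the excerpt does, that 100 GFLOPS can be treated as 0.1 TOPS for comparison purposes.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return performance per watt, in TOPS/W."""
    return tops / watts


# Figures as quoted in the excerpt (vendor claims, not measurements).
laceli = tops_per_watt(2.8, 0.3)    # Laceli AI Compute Stick
movidius = tops_per_watt(0.1, 1.0)  # Movidius stick, 100 GFLOPS taken as 0.1 TOPS

ratio = laceli / movidius

print(f"Laceli:   {laceli:.2f} TOPS/W")
print(f"Movidius: {movidius:.2f} TOPS/W")
print(f"Ratio:    {ratio:.1f}x")
```

With these inputs the ratio lands slightly above 90, which is consistent with the rounded claim.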

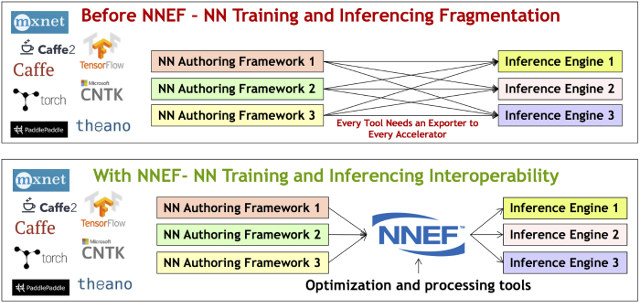

Khronos Group Releases Neural Network Exchange Format (NNEF) 1.0 Specification

The Khronos Group, the organization behind widely used standards for graphics, parallel computing, or vision processing such as OpenGL, Vulkan, or OpenCL, has recently published the NNEF 1.0 (Neural Network Exchange Format) provisional specification for universal exchange of trained neural networks between training frameworks and inference engines. NNEF aims to reduce machine learning deployment fragmentation by enabling data scientists and engineers to easily transfer trained networks from their chosen training framework into various inference engines via a single standardized exchange format. NNEF encapsulates a complete description of the structure, operations and parameters of a trained neural network, independent of the training tools used to produce it and the inference engine used to execute it. The new format has already been tested with tools such as TensorFlow, Caffe2, Theano, Chainer, MXNet, and PyTorch. Khronos has also released open source tools to manipulate NNEF files, including an NNEF syntax parser/validator, and example exporters, which can […]

Qualcomm Developer’s Guide to Artificial Intelligence (AI)

Artificial intelligence comes with many terms like ML (Machine Learning), DL (Deep Learning), CNN (Convolutional Neural Network), ANN (Artificial Neural Networks), etc., and is currently made possible via frameworks such as TensorFlow, Caffe2 or ONNX (Open Neural Network Exchange). If you have not looked into the details, all those terms may be confusing, so Qualcomm Developer Network has released a 9-page e-Book entitled “A Developer’s Guide to Artificial Intelligence (AI)” that gives an overview of all the terms, what they mean, and how they differ. For example, they explain that a key difference between Machine Learning and Deep Learning is that with ML, the input features of the CNN are determined by humans, while DL requires less human intervention. The book also explains that AI is moving to the edge / on-device for low latency and better reliability, instead of relying on the cloud. It also quickly goes through the workflow using Snapdragon […]

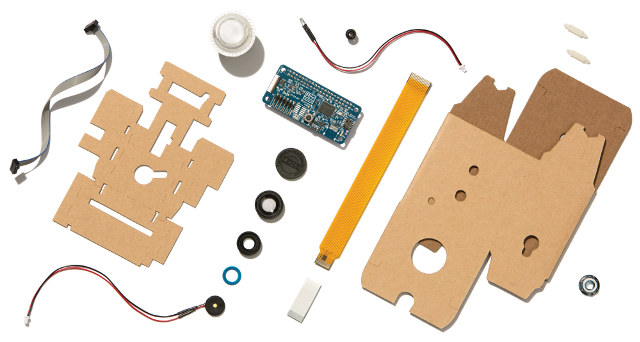

$45 AIY Vision Kit Adds Accelerated Computer Vision to Raspberry Pi Zero W Board

AIY Projects is an initiative launched by Google that aims to bring do-it-yourself artificial intelligence to the maker community by providing affordable development kits to get started with the technology. The first project was AIY Projects Voice Kit, which basically transformed a Raspberry Pi 3 board into a Google Home device by adding the necessary hardware to support Google Assistant SDK, and an enclosure. The company has now launched another maker kit with AIY Projects Vision Kit, which adds a HAT board powered by Intel/Movidius Myriad 2 VPU to Raspberry Pi Zero W in order to accelerate image & object recognition using TensorFlow’s machine learning models. The kit includes the following items:

- Vision Bonnet accessory board powered by Myriad 2 VPU (MA2450)
- 2x 11mm plastic standoffs
- 24mm RGB arcade button and nut
- 1x Privacy LED
- 1x LED bezel
- 1x 1/4/20 flanged nut
- Lens, lens washer, and lens magnet
- 50 mil […]

AWS DeepLens is a $249 Deep Learning Video Camera for Developers

Amazon Web Services (AWS) has launched DeepLens, the “world’s first deep learning enabled video camera for developers”. Powered by an Intel Atom X5 processor with 8GB RAM, and featuring a 4MP (1080p) camera, the fully programmable system runs Ubuntu 16.04, and is designed to expand the deep learning skills of developers, with Amazon providing tutorials, code, and pre-trained models.

AWS DeepLens specifications:

- SoC – Intel Atom X5 Processor with Intel Gen9 HD graphics (106 GFLOPS of compute power)
- System Memory – 8GB RAM
- Storage – 16GB eMMC flash, micro SD slot
- Camera – 4MP (1080p) camera using MJPEG, H.264 encoding
- Video Output – micro HDMI port
- Audio – 3.5mm audio jack, and HDMI audio
- Connectivity – Dual band WiFi
- USB – 2x USB 2.0 ports
- Misc – Power button; camera, WiFi and power status LEDs; reset pinhole
- Power Supply – TBD
- Dimensions – 168 x 94 x 47 mm
- Weight – 296.5 grams

The […]

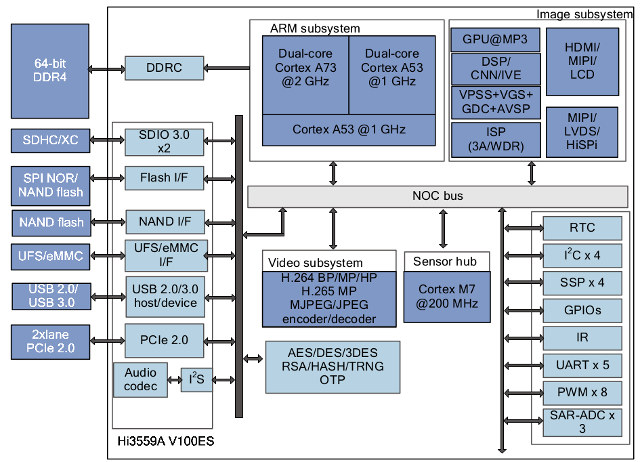

Hisilicon Hi3559A V100ES is an 8K Camera SoC with a Neural Network Accelerator

Earlier today, I published a review of JeVois-A33 machine vision camera, noting that processing is handled by the four Cortex A7 cores of Allwinner A33 processor, but in the future we can expect this type of camera to support acceleration with OpenCL/Vulkan capable GPUs, or better, neural network accelerators (NNA) such as Imagination Tech PowerVR Series 2NX. HiSilicon already launched Kirin 970 SoC with similar IP, except they call it an NPU (Neural-network Processing Unit). However, while looking for a camera SoC with NNA, I found a list of deep learning processors, including the ones that go into powerful servers and autonomous vehicles, that also included an 8K camera SoC with a dual core CNN (Convolutional Neural Network) acceleration engine made by Hisilicon: Hi3559A V100ES. Hisilicon Hi3559A V100ES specifications: Processor Cores 2x ARM Cortex A73 @ 2 GHz, 32 KB I cache, 64KB D cache or 512 KB L2 cache 2x ARM […]

JeVois Smart Machine Vision Camera Review – Part 1: Developer / Robotics Kit Unboxing

JeVois-A33 computer vision camera was unveiled at the end of last year through a Kickstarter campaign. Powered by an Allwinner A33 quad core Cortex A7 processor and a 1.3MP camera sensor, the system could detect motion, track faces and eyes, detect & decode ArUco markers & QR codes, follow lines for autonomous cars, etc., thanks to the JeVois framework. Most rewards from Kickstarter shipped in April of this year, so it’s quite possible some of the regular readers of this blog are already familiar with the camera. But the developer (Laurent Itti) re-contacted me recently, explaining they had improved the software with Python support, and new features such as the capability of running deep neural networks directly on the processor inside the smart camera. He also wanted to send a review sample, which I received today, but I got a bit more than I expected, so I’ll start the review with an […]

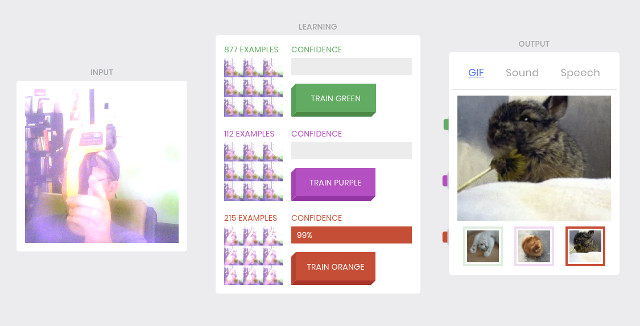

Google’s Teachable Machine is a Simple and Fun Way to Understand How Machine Learning Works

Artificial intelligence, machine learning, deep learning, neural networks… are all words we hear more and more today, as machines get the ability to recognize objects, answer voice requests / commands, and so on. But many people may not know the basics of how machine learning works, and with that in mind, Google launched the Teachable Machine website to let people experiment and understand the basics behind machine learning without having to install an SDK or even code. So I quickly tried it with Google Chrome, as it did not seem to work with Mozilla Firefox. It’s best to have audio on, as a voice explains how to use it. Basically you connect your webcam, authorize Chrome to use it, and you should see the image in the input section on the left. After that, you can begin to train the machine in the learning section in the middle with three different […]