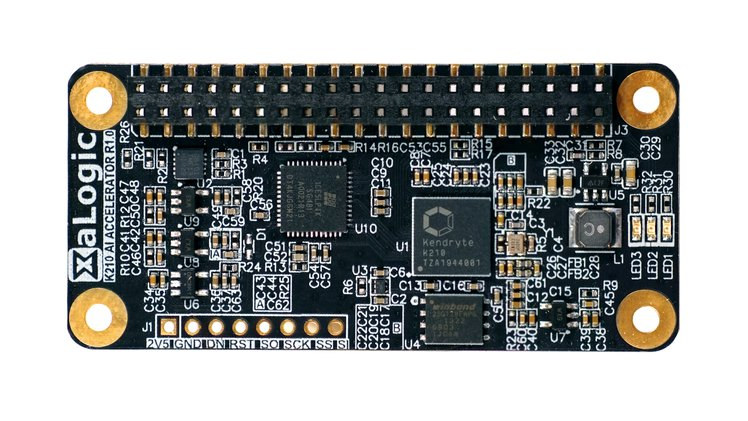

Kendryte K210 is a dual-core RISC-V AI processor that was launched in 2018 and is found in several smart audio and computer vision solutions. We previously wrote a Getting Started Guide for Grove AI HAT for Raspberry Pi using Arduino and MicroPython, and XaLogic XAPIZ3500 offered an even more compact K210 solution as a Raspberry Pi pHAT with Raspberry Pi Zero form factor. The company is now back with another revision of the board called “XaLogic K210 AI accelerator” designed to work with Raspberry Pi Zero and larger boards with the 40-pin connector. K210 AI Accelerator board specifications: SoC – Kendryte K210 dual-core 64-bit RISC-V processor @ 400 MHz with 8MB on-chip RAM, various low-power AI accelerators delivering up to 0.5 TOPS; Host Interface – 40-pin Raspberry Pi header using: SPI @ 40 MHz via Lattice iCE40 FPGA; I2C, UART, JTAG, and GPIO signals; Security – Infineon Trust-M cloud security chip 128-bit AES […]

Arduino Portenta H7 Gets Embedded Vision Shield with Ethernet or LoRa Connectivity

[Update January 28, 2021: The LoRa version of Portenta Vision Shield is now available] Announced last January at CES 2020, Arduino Portenta H7 is the first board of the industrial-grade “Arduino Pro” Portenta family. The Arduino MKR-sized MCU board has plenty of processing power thanks to the STMicro STM32H7 dual-core Arm Cortex-M7/M4 microcontroller. It was launched with a baseboard providing access to all I/Os and ports like Ethernet, USB, CAN bus, mPCIe socket (USB), etc. But as AI moves to the very edge, it makes perfect sense for Arduino to launch Portenta Vision Shield with a low-power camera, two microphones, and a choice of wired (Ethernet) or wireless (LoRa) connectivity for machine learning applications. Portenta Vision Shield key features and specifications: Storage – MicroSD card socket; Camera – Himax HM-01B0 camera module with 324 x 324 active pixel resolution with support for QVGA; Image sensor – High sensitivity 3.6μ BrightSense pixel technology […]

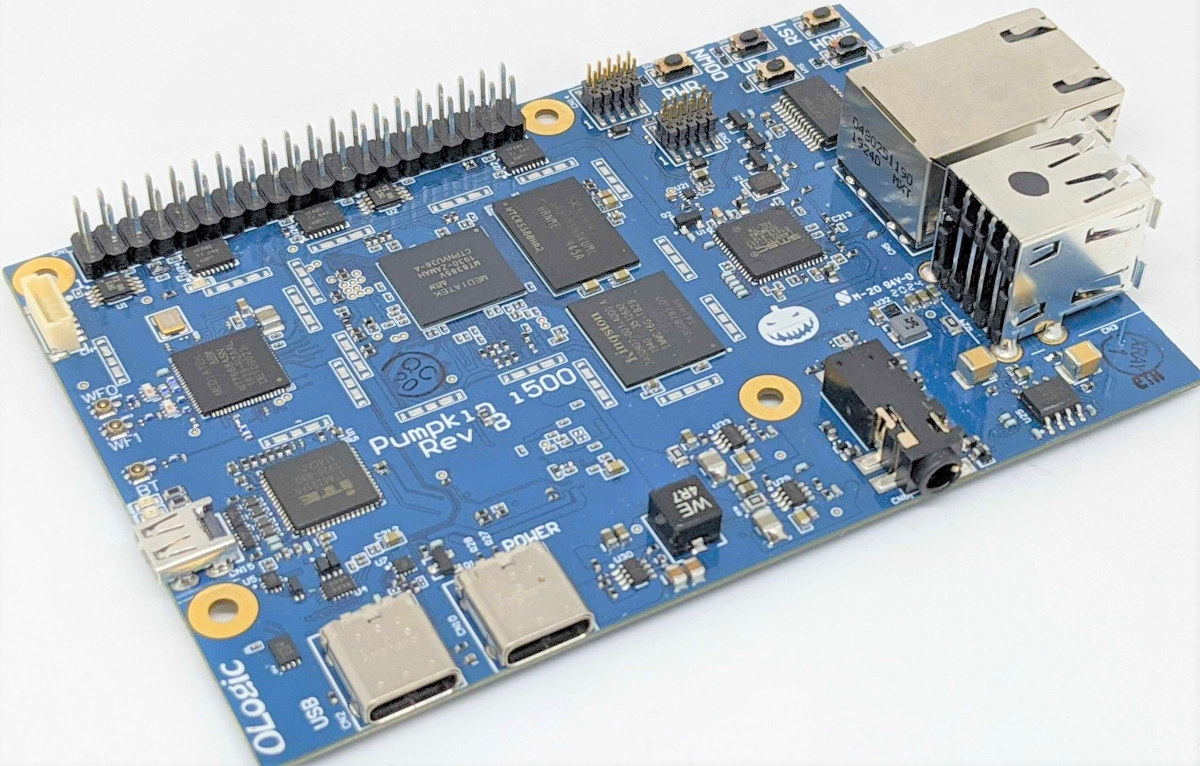

Pumpkin i500 SBC uses MediaTek i500 AIoT SoC for computer vision and AI Edge computing

MediaTek Rich IoT SDK v20.0 was released at the beginning of the year together with the announcement of Pumpkin i500 SBC, with very few details except that it would be powered by the MediaTek i500 octa-core Cortex-A73/A53 processor and designed to support computer vision and AI Edge Computing. Pumpkin i500 hardware evaluation kit was initially scheduled to launch in February 2020, but it took much longer, and Seeed Studio has only just listed the board for $299.00. We also now know the full specifications for Pumpkin i500 SBC: SoC – MediaTek i500 octa-core processor with four Arm Cortex-A73 cores at up to 2.0 GHz and four Cortex-A53 cores, an Arm Mali-G72 MP3 GPU, and dual-core Tensilica Vision P6 DSP/AI accelerator @ 525 MHz; System Memory – 2GB LPDDR4; Storage – 16GB eMMC flash; Display – 4-lane MIPI DSI connector; Camera – Up to 25MP via MIPI CSI connector; Video Decoding – 1080p60 […]

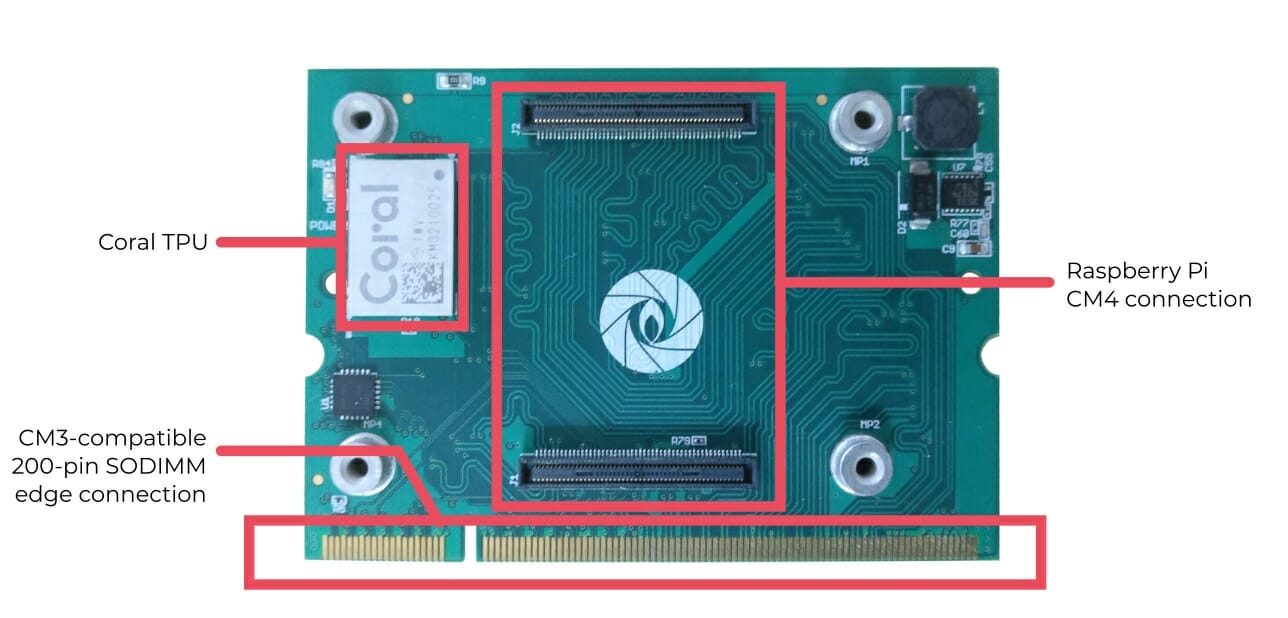

Gumstix Introduces CM4 to CM3 Adapter, Carrier Boards for Raspberry Pi Compute Module 4

Raspberry Pi Trading has just launched 32 different models of Raspberry Pi CM4 and CM4Lite systems-on-module, as well as the “IO board” carrier board. But the company has also worked with third parties, and Gumstix, an Altium company, has unveiled four different carrier boards for the Raspberry Pi Compute Module 4, as well as a convenient CM4 to CM3 adapter board that enables the use of Raspberry Pi CM4 on all/most carrier boards for the Compute Module 3/3+. Raspberry Pi CM4 Uprev & UprevAI CM3 adapter boards: Gumstix Raspberry Pi CM4 Uprev follows the Raspberry Pi Compute Module 3 form factor but includes two Hirose connectors for Compute Module 4. The signals are simply routed from the Hirose connectors to the 200-pin SODIMM edge connector used with CM3. Gumstix Raspberry Pi CM4 UprevAI is the same except it adds a Google Coral accelerator module. Gumstix Raspberry Pi CM4 Development Board Specifications: […]

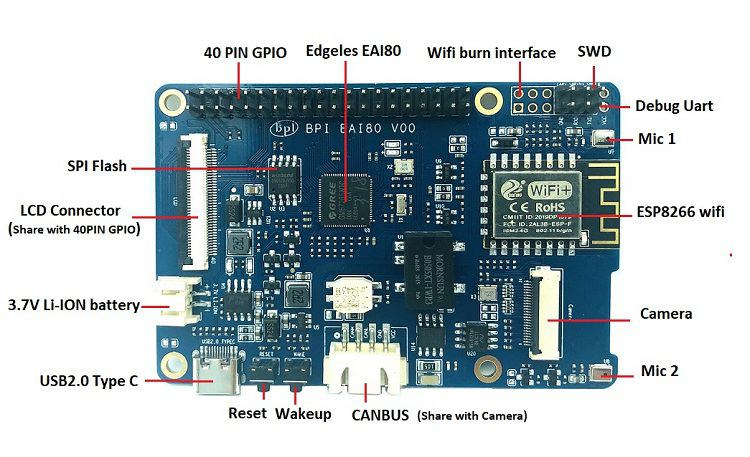

$16 Banana Pi BPI-EAI80 Cortex-M4F Board Embeds AI Accelerator, WiFi Module

Last April, we wrote about the Edgeless EAI-Series dual Arm Cortex-M4 MCU equipped with a 300 GOPS CNN-NPU for AI at the very edge, as we had discovered the chip in an upcoming Banana Pi board. It turns out the Banana Pi BPI-EAI80 development board powered by the Edgeless EAI80 AI microcontroller has just launched for $16 on Aliexpress, or you could get a complete kit with a touchscreen display, a camera, and a USB power supply for $80. Banana Pi BPI-EAI80 development board specifications: System-in-Package – Edgeless EAI80 dual-core Cortex-M4F microcontroller @ 200MHz with 300GOPS AI accelerator (CNN-NPU), 384KB of SRAM including 256KB for CNN-NPU, and 8MB SDRAM; Storage – SPI flash; Display I/F – LCD connector up to 1024×768; Camera I/F – 1x DVP camera interface; Audio – 2x onboard microphones; Connectivity – 2.4GHz 802.11b/g/n WiFi 4 using ESP8266 module; USB – 1x USB 2.0 Type-C port; Expansion – 40-pin GPIO header […]

Lantronix Open-Q 865XR SoM Brings Snapdragon XR2 Processor Beyond Virtual Reality

Qualcomm Snapdragon XR2 (SXR2130P) is the latest and most powerful virtual & extended reality processor from the company, and Facebook recently announced it would be found in their Oculus Quest 2 standalone VR headset. But it now looks like the processor will be used well beyond virtual reality applications, as Lantronix has unveiled the Snapdragon XR2 based Open-Q 865XR system-on-module designed for AI boxes, video conference systems, multi-camera systems, machine vision platforms, advanced high-resolution multi-display systems, medical imaging, and handheld data collectors. Open-Q 865XR SoM specifications: SoC – Qualcomm SXR2130P (Snapdragon XR2): Octa-core processor with 1x Kryo Gold prime @ 2.84 GHz + 3x Kryo Gold @ 2.42 GHz + 4x Kryo Silver @ 1.81 GHz; Adreno 650 GPU @ up to 587 MHz; Hexagon 698 DSP with quad Hexagon Vector eXtensions; Spectra 480 Image Signal Processor; Adreno 665 Video Processing unit for decode up to […]

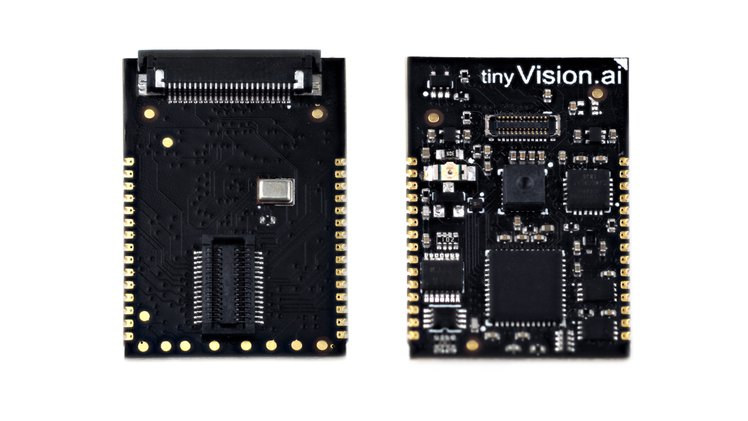

Vision FPGA SoM Integrates Audio, Vision and Motion-Sensing with Lattice iCE40 FPGA (Crowdfunding)

tinyVision.ai’s Vision FPGA SoM is a tiny Lattice iCE40 powered FPGA module with integrated vision, audio, and motion-sensing capability with a CMOS image sensor, an I2S MEMS microphone, and a 6-axis accelerometer & gyroscope. The module enables low power vision (10-20 mW) for battery-powered applications, can interface via SPI to a host processor as a storage device, comes with open-source toolchain and sample code, and is optimized for volume production. Vision FPGA SoM specifications: FPGA – Lattice iCE40UP5k FPGA with 5K LUTs, 1 Mb RAM, 8 MAC units; Memory – 64 Mbit QSPI SRAM for temporary data; Storage – 4 Mbit QSPI Flash for FPGA bitstream/code storage; Sensors – Himax HM01B0 CMOS image sensor; Knowles MEMS I2S microphone, expandable to a stereo configuration with an off-board I2S microphone; InvenSense IMU 60289 6-axis Gyro/accelerometer; I/Os – 4x GPIOs with programmable IO voltage; SPI host interface with programmable IO voltage; Misc – Tri-color LED, […]

Raspberry Pi SBC Now Supports OpenVX 1.3 Computer Vision API

OpenVX is an open, royalty-free API standard for cross-platform acceleration of computer vision applications developed by The Khronos Group, which also manages the popular OpenGL ES, Vulkan, and OpenCL standards. After OpenGL ES 3.1 conformance for Raspberry Pi 4, and good progress on the Vulkan implementation, the Raspberry Pi Foundation has now announced that both Raspberry Pi 3 and 4 Model B SBCs have achieved OpenVX 1.3 conformance (somehow dated 2020-07-23). The Raspberry Pi OpenVX open-source sample implementation passes the Vision, Enhanced Vision, & Neural Net conformance profiles specified in the OpenVX 1.3 standard. However, it is NOT intended to be a reference implementation, as it is not optimized, production-ready, or actively maintained by Khronos publicly. The sample can be built on multiple operating systems (Windows, Linux, Android) using either CMake or Concerto. Detailed instructions are provided for Ubuntu 18.04 64-bit x86 and Raspberry Pi SBC. Here’s the list of commands to […]